
It's a perfectly reasonable concern to have - if you believe that the AI god will both be powerful and intelligent enough to turn the solar system into paperclips atom by atom and, at the same time, incapable of having even a basic sense of ethics or any kind of reflection about whether what it wants to do is a good (or even just minimally useful) idea or not.


| # ? Jun 1, 2024 19:56 |


quote:The PUA Community began decades ago with men that wanted to learn how to get better at seducing women. As I understand it, they simply began posting their (initially) awkward attempts at love online. Over the years, they appear to have amassed a fairly impressive set of practical knowledge and skills in this domain.

quote:I find it alarming that such a valuable resource would be monopolized in pursuit of orgasm; it's rather as if a planet were to burn up its hydrocarbons instead of using them to make useful polymers.

quote:...pretty much what I was thinking was expressed well by Scott Adams:

quote:I've met people who were shockingly, seemingly preternaturally adept in social settings. Of course this is not magic. Like anything else, it can be reduced to a set of constituent steps and learned. We just need to figure out how.


That's been a modern science fiction staple when talking about self-perpetuating corporations that own themselves.


Nothing helps me win friends and influence people like standing there with a desperate look on my face, internally screaming "What was step 51?!".


Djeser posted:

Ugh, I forgot how horrible Scott Adams is; not surprising these guys love his crap.


The quote in question:quote:... I know we joke a lot about


Political Whores posted:The quote in question:

That, and the oh-so-persecuted "society would never allow this!" Like, has Dilbert never heard of an MBA?


Ironically enough, because connecting with people just so happens to be the best way to manipulate them, as anybody with a background in marketing or rhetoric (you know, those fields they study at universities about how to manipulate people) could tell you.


All these works of science fiction and these guys don't think researchers would put some insane contingencies in place like "don't connect the self-improving AI to the Internet", "make sure it has an easily accessible off switch", or "make sure you can unplug it"? I mean I get why the Paperclip thing is terrifying and could potentially become world ending if it became self-sufficient, but if there's one thing we're good at it's creating in-built dependencies. Who the gently caress do they send millions of dollars to to prevent this from happening?


Triskelli posted:All these works of science fiction and these guys don't think researchers would put some insane contingencies in place like "don't connect the self-improving AI to the Internet", "make sure it has an easily accessible off switch", or "make sure you can unplug it"?

To answer this question, Yud roleplayed as an AI-in-a-box, betting $200 to all comers that he could convince them to let him out with only text chat. 'Course, the chat logs are strictly confidential, and he gave up after his success rate plummeted to 50-50.


Not that that meant anything considering that the other person knows he isn't an AI anyways.


Well, it's still worth the exercise. You just have to make sure you can provide the AI anything it would want without letting it out of its "box". Most of these people seem to approach the whole thing as a software problem rather than a hardware one. Like, if you want the AI to be able to access the Internet as an information building resource, let it ping another computer electronically to print out a random Wikipedia page, scoot it over to the AI terminal and then run it through a scanner.

E: or y'know, make it run on C batteries. Those things are impossible to find.

Triskelli fucked around with this message at 09:11 on Oct 31, 2014


Moddington posted:To answer this question, Yud roleplayed as an AI-in-a-box, betting $200 to all comers that he could convince them to let him out with only text chat.

Specifically, he played two games against his cultists and won them, then bragged about his amazing victory. This made people outside his cult notice his challenge; three accepted, and Yudkowsky lost against all three of them. Giving up immediately in the face of adversity, Yudkowsky then wrote a triumphant blog post about how awesome the experiment was and how he's great because he never gives up in the face of adversity (a uniquely Japanese virtue).

What this means is that the only thing Yudkowsky has ever experimentally proven is that his own followers are more likely to choose to unleash an evil AI upon the world than an outsider is. So even if you agree with every single one of Yudkowsky's beliefs and arguments and accept everything he says at face value, you should still conclude that Yudkowsky's writings are harmful and MIRI should be destroyed.


Lottery of Babylon posted:Specifically, he played two games against his cultists and won them, then bragged about his amazing victory. This made people outside his cult notice his challenge; three accepted, and Yudkowsky lost against all three of them. Giving up immediately in the face of adversity, Yudkowsky then wrote a triumphant blog post about how awesome the experiment was and how he's great because he never gives up in the face of adversity (a uniquely Japanese virtue).

I'm reminded of this guy, who used some bullshit martial arts skills to knock over his students without touching them, and bet $5000 he could beat a real martial artist, with hilariously predictable results.


That stuff about PUA highlights the main problem with their perspective - there's an old article from Yud where he asks, "if nerds are so smart, why can't they work out how to be popular?" His answer to that is a lack of rationality - they're not using the right techniques, if only they can get smart in logic and statistics they can boil down all human behaviour to a predictable flowchart to follow all the way to popularity and success. Except that humans are fundamentally irrational, and applying rational rules to model their behaviour will give you an imperfect model at best. I don't think they've realised their premise is flawed in years.


RubberJohnny posted:Except that humans are fundamentally irrational, and applying rational rules to model their behaviour will give you an imperfect model at best. I don't think they've realised their premise is flawed in years.


Cardiovorax posted:Humans are perfectly rational, most of the time. They're just not logical, is all. Unless they're insane they'll usually do whatever they think is in their best interest - but learning what they consider that to be takes getting to know them and empathizing with their emotional states, which the LessWrong crowd not only don't care to bother with but actively consider beneath them.


Triskelli posted:All these works of science fiction and these guys don't think researchers would put some insane contingencies in place like "don't connect the self-improving AI to the Internet", "make sure it has an easily accessible off switch", or "make sure you can unplug it"?

I'm pretty sure Arthur C. Clarke saw this one coming. In 2010, the astronauts re-activating HAL fitted him with completely mechanical kill-switches designed to cut all power to the AI at a millisecond's notice.


Bargearse posted:I'm pretty sure Arthur C. Clarke saw this one coming. In 2010, the astronauts re-activating HAL fitted him with completely mechanical kill-switches designed to cut all power to the AI at a millisecond's notice.


...Can we help you?


Triskelli posted:...Can we help you?


Triskelli posted:...Can we help you?

Actually, I'm kind of okay with this shtick.


Ratoslov posted:Actually, I'm kind of okay with this shtick.


Lottery of Babylon posted:Specifically, he played two games against his cultists and won them, then bragged about his amazing victory. This made people outside his cult notice his challenge; three accepted, and Yudkowsky lost against all three of them. Giving up immediately in the face of adversity, Yudkowsky then wrote a triumphant blog post about how awesome the experiment was and how he's great because he never gives up in the face of adversity (a uniquely Japanese virtue).

"It is difficult to get a man to understand something, when his salary depends on his not understanding it."


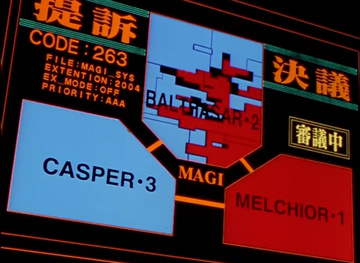

Either it's a raid by FYAD, or a posting bot has gained sentience and is trying to communicate by posting the content of Effectronica's anime wank folder.


Political Whores posted:Either it's a raid by FYAD, or a posting bot has gained sentience and is trying to communicate by posting the content of Effectronica's anime wank folder.


Hot


Political Whores posted:Either it's a raid by FYAD, or a posting bot has gained sentience and is trying to communicate by posting the content of Effectronica's anime wank folder.


Political Whores posted:Either it's a raid by FYAD, or a posting bot has gained sentience and is trying to communicate by posting the content of Effectronica's anime wank folder.

Yeah, considering they've hit the PYF cat thread it's probably just a raid. They'll get bored soon enough.


is this the mock thread? I just want to say, long time reader, first time contributor. I was playing my videogames the other day, and noticed that mother 1's (earthbound zero if you're a dumbshit rear end in a top hat who probably gets his clothes from one of those gatherings of bolivians that put their stands next to each other under a gigantic circus tent) overworld map is enormous, but has pretty much nothing in it. What kind of idiot designer was put in charge? I mean just look at this

ArfJason fucked around with this message at 16:59 on Oct 31, 2014


Triskelli posted:Yeah, considering they've hit the PYF cat thread it's probably just a raid. They'll get bored soon enough.

Sounds like someone doesn't keep up to date on the rules. Shameful as heck.


Goddamn that's too big for PYF, but I know another thread that may appreciate it


Triskelli posted:Yeah, considering they've hit the PYF cat thread it's probably just a raid. They'll get bored soon enough.


Srice posted:Sounds like someone doesn't keep up to date on the rules. Shameful as heck.

Well poo poo, I didn't know we were passing out Anime like loving halloween candy. Egg on my face for thinking it was just one rear end in a top hat.


Triskelli posted:Well poo poo, I didn't know we were passing out Anime like loving halloween candy. Egg on my face for thinking it was just one rear end in a top hat.


on a scale of yudkowsky to nite crew, how ironic is this anime we're talking about

i know gentlegoons always temper their anime with irony


Djeser posted:on a scale of yudkowsky to nite crew, how ironic is this anime we're talking about


Djeser posted:on a scale of yudkowsky to nite crew, how ironic is this anime we're talking about


Djeser posted:on a scale of yudkowsky to nite crew, how ironic is this anime we're talking about

nite crew is not ironic about enjoying anime, friend
