
in a well actually posted:
also seen in products; whole bunch of iPod alternatives where intentionally difficult

the radio hobby too is really bad for it. i got really excited and started getting into it but then in discussions it was old white dudes just beating themselves off talking about their incredibly expensive equipment and making GBS threads on anyone who didn't have thousands of monies to dump on an arcane communications hobby who when asked simple questions get unbelievably angry if you don't automatically know everything they do for no reason whatsoever.

"haven't you sat your exam?" bro i have to study before i can sit the exam what do you think i'm trying to do, i am literally asking you to clarify a point

makes me wonder how many hobbies have died this way


| # ? May 3, 2024 09:04 |


Expo70 posted:
the radio hobby too is really bad for it. i got really excited and started getting into it but then in discussions it was old white dudes just beating themselves off talking about their incredibly expensive equipment and making GBS threads on anyone who didn't have thousands of monies to dump on an arcane communications hobby who when asked simple questions get unbelievably angry if you don't automatically know everything they do for no reason whatsoever.

if you're really interested, i bet there have been studies on grognard capture


also I bet Jonny 290 would be fine with answering your radio questions without being a dick about it


there is a ham radio thread here


Captain Foo posted:
there is a ham radio thread here

I realized it's basically too rich for my blood (good gear is absurdly expensive), and that I'm semi-happy goofing around on a Baofeng UV5R and local Software Defined Radio. I like listening to the men on the boats. They cuss in Russian!

I do wish I didn't have to go upstairs and press my head against the window to get reception.


there was a good tweet or tumblr post like "people in small hobbyist communities that take being acerbic to outsiders as a badge of pride are the weird ones"

i joined a community for a video game where i have been playing it for like 20 years and it was unexpectedly welcoming but there were some odd balls doing the above for no good reason


Anyone have good recommendations for documentaries about ergonomics, HCI, human factors or UX? Or just documentaries in general that happen to feature interesting topics or reoccurring domains (i.e. docs on NASA mission control or nuclear power plant stuff etc.).

For example, would love a deep dive on NASA mission control and planning. Just watched the Apollo 11 doc and it has some scenes with these big conference meetings having engineering discussions. Just made me wonder what kind of research went into how to design planning and coordination structure for different system mission teams working together.

(Long form articles would be cool too)

Oysters Autobio fucked around with this message at 02:47 on Mar 2, 2024


Oysters Autobio posted:
Anyone have good recommendations for documentaries about ergonomics, HCI, human factors or UX? Or just documentaries in general that happen to feature interesting topics or reoccurring domains (i.e. docs on NASA mission control or nuclear power plant stuff etc.).

I've posted in this thread before about their use of voice loops for coordination in mission control (this is a link to my blog where I transferred posts, because I can't remember where in this thread this is). I don't necessarily have tons about it elsewhere yet, partly because I think I've seen most of my stuff from the incident lens (see the Challenger and Columbia disaster reports; Columbia investigations themselves gave rise to Resilience Engineering).

Diane Vaughan did some amazing work on air traffic controllers, so I have to imagine her report on Challenger is equally good (https://press.uchicago.edu/ucp/books/book/chicago/C/bo22781921.html)


A few days ago, Hazel Weakly wrote about her redefinition of observability on her blog:

quote:
The control theory definition of observability, from Rudolf E. Kálmán, goes as follows:

I decided to add some extra color commentary to it on mine, in an attempt to provide extra context and framing around her ideas, but also around classical definitions of observability. The post covers differences between insights and questions, distinctions between observability and data availability, socio-technical implications, mapping complex systems, and the use of models.

Here's a few extra pull quotes for the thread, since it touches cognitive poo poo and whatnot:

On insights and questions:

quote:
Let’s take a washing machine, for example. You can know whether it is being filled or spinning by sound. The lack of sound itself can be a signal about whether it is running or not. If it is overloaded, it might shake a lot and sound out of balance during the spin cycle. You don’t necessarily have to be in the same room as the washing machine nor paying attention to it to know things about its state, passively create an understanding of normalcy, and learn about some anomalies in there.

On socio-technical implications:

quote:
The continued success of the overall system does not purely depend on the robustness of technical components and their ability to withstand challenges for which they were designed. When challenges beyond what was planned for do happen, and when the context in which the system operates changes (whether it is due to competition, legal frameworks, pandemics, or evolving user needs and tastes), adjustments need to be made to adapt the system and keep it working.

On mapping complex systems:

quote:
Experimental practices like chaos engineering or fault injection aren’t just about testing behaviour for success and failure, they are also about deliberately exploring the connections and parts of the web we don’t venture into as often as we’d need to in order to maintain a solid understanding of it.


MononcQc posted:
A few days ago, Hazel Weakly wrote about her redefinition of observability on her blog:

i think all i ever post in here is “thanks! great post” but i do read and internalize them and meld them with what i know and understand

i very much appreciate it and owe you a keg of beer or whatever indulgence you appreciate


Share Bear posted:
i think all i ever post in here is “thanks! great post” but i do read and internalize them and meld them with what i know and understand

yeah it feels like a very long delay before the new information sets in and clicks and starts making sense because you can start to intuit examples that remind you of the contents of the post

what i notice is by the time it has sunk in, the urge to say "thank-you" has long since gone, as if it's in some other channel or some other stream of motive

the only thing we can really do with that in mind is either ask very simple questions (which again, even those take a long time to form), or thank MononcQc immediately in the present before we forget.


Thanks, that's appreciated. I haven't read as many papers recently, only because I've been doing other poo poo, like prepping the garden and also hanging out with people and trying different hobbies, but we'll see whenever I go back into a reading rabbithole. I've got the book How Infrastructure Works on the backburner, I assume there'll be cool poo poo in there to quote and whatnot.


It's been a hot minute since I last wrote up notes, as I've been doing other things, but recently a coworker shared this paper from Carol S. Lee and Catherine M. Hicks titled Understanding and Effectively Mitigating Code Review Anxiety.

To make a long story short, they developed a framework and intervention around code review anxiety based on research about social anxiety, ran a study validating it, and created a model of the key metrics that influence code review avoidance, which a single workshop intervention was also shown to improve. That sounds like a lot of stuff, so I'll have to break it down a bunch.

First of all, the authors cover why code reviews are important: defect finding, learning and knowledge transfers, creative problem solving, and community building. However these can happen only if participation is active, timely, and accurate.

Enter Code Review Anxiety (CRA), a concept referring to the fear of judgment, criticism and negative reviews when giving or receiving code reviews. Code Review Anxiety is not an empirical concept, but it's been acknowledged by the industry. The authors draw from social anxiety to better define it:

quote:
social anxiety is maintained and exacerbated by negative feedback loops that reinforce biased thinking and avoidance in social or performance situations. In particular, individuals experiencing social anxiety are less likely to believe that they have the skills to manage their anxiety (low anxiety self-efficacy) and are more likely to overestimate the cost and probability of situations ending poorly (high cost and probability bias)-all of which contribute to greater avoidance behaviors such as procrastinating, mentally “checking out,” or prematurely leaving social and evaluative situations.

This bit introduces many of the key concepts in the paper, which participants in the study would rate themselves on:

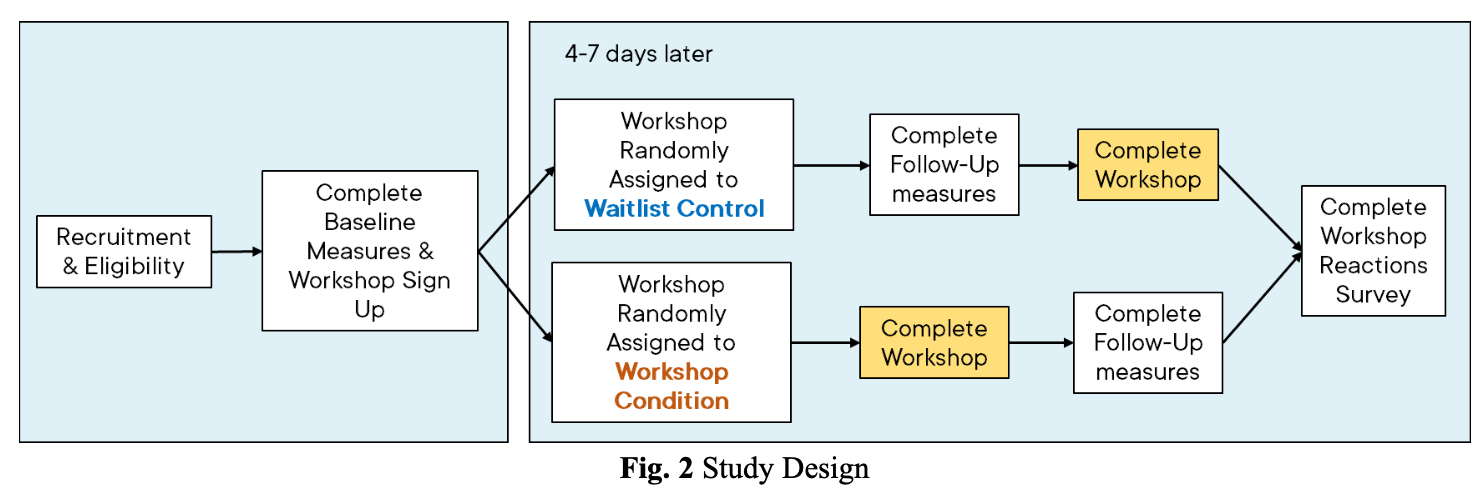

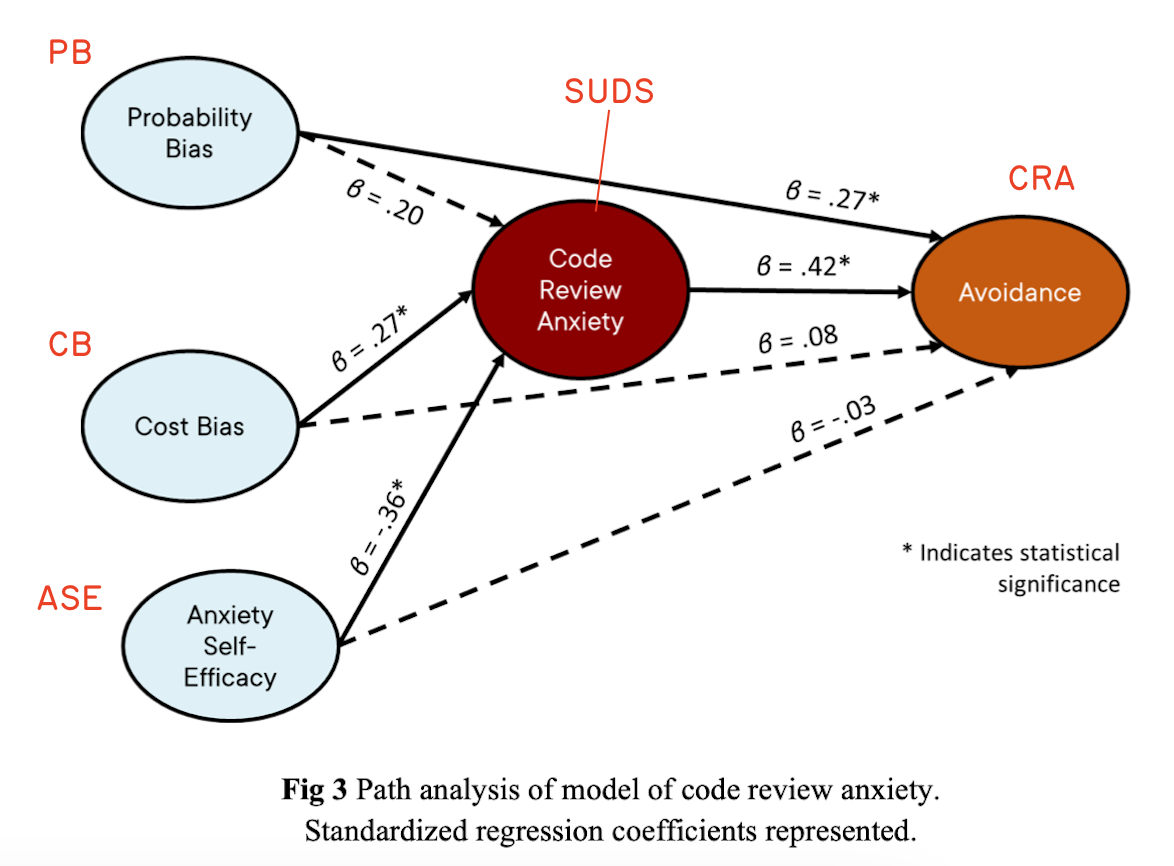

These concepts will turn out to be the main measures for outcomes and the components in their model of the code review anxiety phenomenon, which I'll come back to soon.

Their study was run by contacting people for it, filtering for those who rated high enough on the Subjective Units of Distress Scale (SUDS), and then splitting them into two groups. One group would complete a self-assessment, wait 4 to 7 days, complete a second self-assessment, receive the workshop for code review anxiety, and then give their reactions. The other group would complete the self-assessment, wait 4 to 7 days, receive the workshop, complete a second self-assessment, and then give their reactions:

I'm not quite sure what the delays were between the workshops and follow-ups, and whether that matters for the results. If their point is to measure workshop efficacy against a baseline rather than how much of a long-term effect it has, then it's all fine. As far as I can tell, the point is to establish the anxiety model and show that there is potential in borrowing methods from social anxiety (rather than "curing" it permanently) so this all seems coherent to my untrained eye.

So what was the workshop? Carol S. Lee (the paper's first author) ran a 2h session with methods based on Cognitive-Behavioral Group Therapy and Dialectical Behavior Therapy. She's a clinical psychologist with experience intervening with clinical populations:

quote:
We deliberately chose content to directly target all three key processes of cognitive-behavioral approaches. For example, to increase awareness of internal experiences, the workshop began with psychoeducation on the prevalence, symptoms, and function of code review anxiety, followed by a self-monitoring and functional analysis exercise of anxiety symptoms. Participants also learned and practiced the “TIPP (changing body temperature, intense exercise, paced breathing, and progressive muscle relaxation)” relaxation techniques.

For reference, DEAR MAN and GIVE FAST are acronyms around objective, relationship, and self-respect effectiveness.

They also provide a really interesting rationale for their approach, where they chose to:

quote:
[...] prioritize self-compassion and anxiety self-efficacy over the probability and cost biases [...] to create an adaptive intervention applicable to a wide range of software team contexts and to increase the cultural sensitivity of our intervention. For example, unlike panic or specific phobias, where feared negative outcomes are highly unlikely to occur (e.g. dying or having a heart attack), feared social or performance anxiety-related outcomes can occur and are often frequently experienced (e.g. making a mistake). Because of this, targeting a negative automatic thought like “I will make mistake” by decreasing probability bias (“I probably won’t make a mistake”) can be contextually unhelpful, whereas targeting the thought by increasing self-compassion (“it’s okay if I make a mistake because mistakes are a part of learning and everyone makes them”) can be more broadly functional.

They then did a bunch of statistical analysis I'm going to skip over, and instead jump to what their experiment revealed in terms of a model:

I've added the red labels with each acronym they used for metrics. But long story short, Cost Bias and Anxiety Self-Efficacy impacted Code Review Anxiety the most. The amount of anxiety felt, along with the perceived Probability Bias, were the defining factors of how much participants would avoid code reviews. Put together more clearly in a later figure, this is what they define:

They mention that code review anxiety was a bigger contributor to avoidance than probability bias. The more severe participants thought the consequences would be, and the less able they felt to manage their anxiety (the latter being the most impactful), the stronger the avoidance was.

By looking at the second assessments, they were also able to show that their workshop had the most impact specifically on the self-efficacy scores, with some effect on self-compassion. The workshop had no significant effect on the probability or cost scores. Overall, the SUDS score (how anxious people rated themselves to be) was significantly improved, with a moderate effect.

To make this simple, that means that workshop participants were more forgiving of themselves and more trusting in their ability to manage their own anxiety (this was the strongest effect), and this in turn reduced their anxiety as well, all after a single session.

Another interesting thing here is that they report demographic and firmographic data, which shows that code review anxiety is not associated with either experience (like being a junior dev) or gender; any developer may feel anxiety here. They point out this counters common myths currently circulating in the industry.

They also point out a few limitations: the participants self-selected, which means they were interested in taking action about their anxiety (this isn't a random sample); there was no double-blind experiment (the facilitator could have inadvertently influenced results); and the main author being a clinical psychologist means it's not clear how well this could work with workshops administered by non-experts.

The authors conclude:

quote:
Finally, our research provides evidence that a single-session cognitive-behavioral workshop intervention can effectively reduce code review anxiety by significantly increasing anxiety self-efficacy and self-compassion. As this is a notably cost-effective protocol relative to the value and impact of code review activities throughout a developer’s career, this finding is an optimistic and important signal for the compounding benefit of empirically-justified interventions to create a more human-centered and healthier developer experience within technology companies.

As is usual for such papers, more research is required to show how generalizable this could be, but I still found the model to be really interesting.
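
For anyone who wants to poke at the shape of that model, here is a rough sketch of it as a signed graph. It encodes only the four relationships named in the notes above; the node names, the `EDGES` table, and the helper functions are made up for illustration, and the +1/-1 signs are directions only, not the paper's fitted coefficients.

```python
# Signed edges as described in the notes above (NOT the paper's fitted
# coefficients): +1 means "more of X goes with more of Y", -1 the opposite.
# All names here are hypothetical labels chosen for this sketch.
EDGES = {
    ("cost_bias", "code_review_anxiety"): +1,
    ("anxiety_self_efficacy", "code_review_anxiety"): -1,
    ("code_review_anxiety", "avoidance"): +1,
    ("probability_bias", "avoidance"): +1,
}


def path_signs(source, target, edges=EDGES):
    """Return the sign of every directed path from source to target.

    The sign of a path is the product of the signs of its edges.
    The graph is acyclic, so the recursion always terminates.
    """
    if source == target:
        return [1]
    signs = []
    for (start, end), sign in edges.items():
        if start == source:
            signs.extend(sign * rest for rest in path_signs(end, target, edges))
    return signs


def direction(source, target="avoidance"):
    """Say whether raising `source` should raise or lower `target`."""
    signs = set(path_signs(source, target))
    if signs == {1}:
        return "increases"
    if signs == {-1}:
        return "decreases"
    return "unclear"  # no path at all, or paths that disagree


if __name__ == "__main__":
    for factor in ("cost_bias", "anxiety_self_efficacy", "probability_bias"):
        print(f"more {factor} -> {direction(factor)} avoidance")
```

Under those assumptions, `direction("anxiety_self_efficacy")` comes out as `"decreases"`, which lines up with the workshop result: it mostly moved self-efficacy, and avoidance is downstream of that through anxiety.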


Titus Winters has talked a bit about this in the context of engineers fresh from school. IIRC his point was that in college, there's a massive emphasis on never sharing your code because doing so could lead to cheating / academic dishonesty. So many engineers are coming out of 4+ years of hiding their code, it's a miracle that any of them are comfortable starting code review. A bad experience in someone's first few reviews has the potential to really sour people on the process and contribute to avoidance behaviors like in that study.
