|

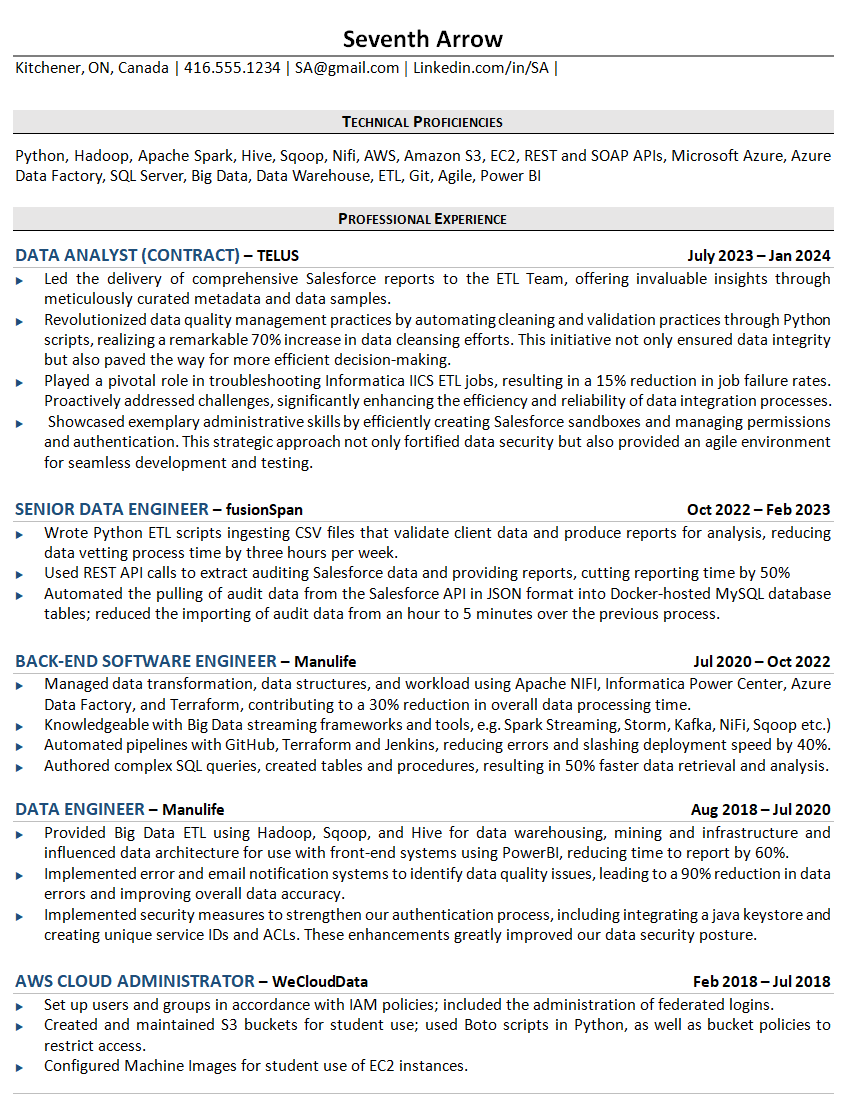

Ok so my contract with TELUS ended abruptly (because of budget lockdowns, not because I deleted production tables or something) so I'm on the hunt for a new job. Here is my resume, feel free to laugh and make fun of it but also offer critiques. Mind you, I will usually tailor it to the job I'm applying for, but it's fairly feature-complete as-is (I think). My lack of experience with Airflow, DBT, and Snowflake is a bit of a weakness but I'm hoping to make up for it with project work. I'm also hoping to get a more recent cloud certification than my expired AWS thang (maybe Azure).

|

|

|

|

|

| # ? Apr 30, 2024 06:56 |

|

Seventh Arrow posted: resume stuff

Looks fine to me but I'm no guru on the subject.

Has anyone here stood up a Spark cluster? I've only used Databricks, which provisions and handles everything behind the scenes, but I'm curious how difficult it would be to stand up a 3-node cluster on EC2s? Just as a learning exercise.

|

|

|

|

Cross-posting since this is more data than Python (thanks WHERE MY HAT IS AT)

Is there a standard tool to look at parquet files? I'm trying to go through a slog of parquet files in polars and keep getting an exception:

Python code:

I understand I'm trying to have the efficiency of polars in lazy mode, but I'd love to know where it specifically blows up to help figure out the problem upstream.

Is there a better place to ask polars / data science questions?

|

|

|

|

Hed posted: Cross-posting since this is more data than Python (thanks WHERE MY HAT IS AT)

Try DuckDB. Its very first designed use case was to be a quick in-process way of looking at the contents of parquet files. Here's their docs on using it with parquet: https://duckdb.org/docs/data/parquet/overview.html
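For the "where does it specifically blow up" part of the question, one option is to give up lazy-mode efficiency temporarily and read each file on its own. The helper below is a minimal sketch of that idea, not anything from the thread: `find_failing_files` and `read_one` are hypothetical names, and the reader is passed in so the same loop works with polars, DuckDB, or anything else.

```python
from pathlib import Path
from typing import Callable, Dict, Iterable


def find_failing_files(
    paths: Iterable[Path],
    read_one: Callable[[Path], object],
) -> Dict[str, str]:
    """Try each file on its own and record which ones raise."""
    failures: Dict[str, str] = {}
    for path in paths:
        try:
            read_one(path)  # force a full read of this single file
        except Exception as exc:  # deliberately broad: we want every failure
            failures[str(path)] = f"{type(exc).__name__}: {exc}"
    return failures
```

With polars, `read_one` could be something like `lambda p: pl.scan_parquet(p).collect()`, so each file is materialized separately and the failing path ends up in the returned dict. This is slower than one lazy scan over everything, so it is probably best treated as a debugging pass only.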

|

|

|

|

How do most organizations deal with ad-hoc data imports? i.e. CSV imports / spreadsheets

Somehow as a DA team, we've gotten stuck with 80% of our jobs being cleaning and importing ad-hoc spreadsheets and ad-hoc datasets into our BI tools so they're searchable. Now I'm spending 80% of my day hand-cleaning spreadsheets in pandas or pyspark.

What's the "best practice" way of dealing with this disorganized ad-hoc mess that our team somehow drew the short straw on? Are there common open source spreadsheet import tools out there we could deploy that let business users map out columns to destination schemas?

I feel like I'm missing some really obvious answer here.

|

|

|

|

Oysters Autobio posted: How do most organizations deal with ad-hoc data imports? i.e. CSV imports / spreadsheets

You're doing all of the work associated with organizing and thinking about the data. Do you think your business users are going to willingly start doing the work that you are currently doing for them?

|

|

|

|

Oysters Autobio posted: How do most organizations deal with ad-hoc data imports? i.e. CSV imports / spreadsheets

What type of cleaning do you have to do? You mentioned columns to destination schemas, is everyone using their own format for these things and it's up to you to reconcile them?

You could try to get fancy with inferring column contents in code or you could build out a web app to collect some of this metadata for mappings as users submit their spreadsheets. But the obvious answer is to create a standardized template and refuse to accept any files that don't follow the format. Assuming, of course, that your team has the political capital to say "no" to these users.
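The "collect metadata for mappings" idea can start as nothing fancier than an alias table that grows every time someone reconciles a new spreadsheet by hand. A minimal sketch follows; the destination columns and aliases are made up for illustration and are not from the thread.

```python
from typing import Dict, List, Tuple

# Hypothetical aliases mapping user-supplied headers to destination columns.
COLUMN_ALIASES: Dict[str, str] = {
    "customer id": "customer_id",
    "cust_id": "customer_id",
    "customer_id": "customer_id",
    "order date": "order_date",
    "date": "order_date",
    "order_date": "order_date",
    "amount": "amount",
    "total": "amount",
}


def normalize(name: str) -> str:
    """Lowercase, trim, and collapse internal whitespace."""
    return " ".join(name.strip().lower().split())


def map_columns(headers: List[str]) -> Tuple[Dict[str, str], List[str]]:
    """Split headers into those with a known destination and those without."""
    mapped: Dict[str, str] = {}
    unmapped: List[str] = []
    for header in headers:
        target = COLUMN_ALIASES.get(normalize(header))
        if target is None:
            unmapped.append(header)  # needs a human decision
        else:
            mapped[header] = target
    return mapped, unmapped
```

Anything that lands in `unmapped` is where a person still has to decide, and each decision can be added back to the alias table so the same header is handled automatically next time.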

|

|

|

|

BAD AT STUFF posted: But the obvious answer is to create a standardized template and refuse to accept any files that don't follow the format.

This is the way

|

|

|

|

monochromagic posted: Some thoughts -

I'm curious what is the dividing line between data engineering, and devops/platform engineering/sre

For example, we had an "analytics" department that was maintaining their own spaghetti code of bash and SQL, database creation/modification, as well as a home-built kitchen/pentaho/spoon ETL. I rewrote most everything but the SQL and ported them to a new platform (with an eye towards moving them to Airflow as the next step), parallelized a lot of the tasks, and got the run time way down.

On the other hand, I've got a coworker that's building out some data pipelines for Google Forms and Excel data and piping it into Tableau for upper management to do internal business forecasting. Arguably you could farm that out to a devops guy.

|

|

|

|

Hadlock posted: I'm curious what is the dividing line between data engineering, and devops/platform engineering/sre

It depends on the company. If you have a devops/platform team, then generally they do the job of maintaining the tools, and DEs just use them to accomplish data tasks. If you're small enough that you don't have a dedicated team, then DEs generally have to set up, maintain, and use the tools. If you're really small, they might expect an underpaid analyst to do all that and create charts too.

My experience has been that companies only allow specialists when they're forced to. So, if they've been forced to set up devops teams already, then the generalist DE doesn't need to do that bit of work, otherwise it's all on them. And so on.

|

|

|

|

BAD AT STUFF posted: What type of cleaning do you have to do? You mentioned columns to destination schemas, is everyone using their own format for these things and it's up to you to reconcile them?

Yup, everyone is using their own formats and we're responsible to map to destination schemas

quote: You could try to get fancy with inferring column contents in code or you could build out a web app to collect some of this metadata for mappings as users submit their spreadsheets. But the obvious answer is to create a standardized template and refuse to accept any files that don't follow the format. Assuming, of course, that your team has the political capital to say "no" to these users.

Unfortunately I've been very vocal about this issue but have been met with shrugs as if there's nothing better we could be doing with our time. Our team lead doesn't even have data analysis experience so they barely understand the issues and are terrible at going to bat for us. The template is probably the right idea, but my concern is people simply won't do it, and then continue not even bothering to get that data into an actual warehouse we can use.

What ends up happening is that these spreadsheets get dumped as an attachment into our internal content management system (ie where people save corporate word docs, emails etc), and when we are actually asked to do real data analysis that's the data they point to.

I'm just surprised that there aren't any existing open source stacks or apps for this kind of thing but maybe this stupidity has been so normalized for me that I'm assuming others deal with this.

have you seen my baby posted: You're doing all of the work associated with organizing and thinking about the data. Do you think your business users are going to willingly start doing the work that you are currently doing for them?

No, I don't think they'd be willing. Despite there being a big appetite for BI and data analysis, there's zero appetite for making actual systems to enable it and this work is seen as something for us as a "support" team to deal with. We have zero ownership of any of the systems, and our dataops and data engineer teams have other priorities (like deploying internal LLMs and other VP-bait projects).

The business side thinks being "data centric" is "look at all this data we're getting!". There's literally another BI team I'm aware of that run their Tableau dashboards from manually maintained spreadsheets. They've hired someone to literally go and do data entry into spreadsheets from correspondence and other unstructured corporate records that our bureaucracies continue to generate.

Oysters Autobio fucked around with this message at 02:24 on Feb 27, 2024

|

|

|

Oysters Autobio posted:

If you can't hook stuff up to ingest this stuff in an automated way, and it's non-negotiable that it requires users manually sending stuff, you need to set boundaries, either by having an automated way to reject stuff not conforming to the template or by rejecting it yourself. Setting boundaries usually requires some buy-in by management. It's more of a social and political problem than anything else. Or, if you can't set boundaries you need to set aside budget for extra resource to do that work, like the other team has. Maybe it's best framed as that kind of dichotomy when you approach management - either users conform to this simple template or they spend god knows how much on your or another employee's time to make up for the lack of standardisation.
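An "automated way to reject stuff not conforming to the template" can be very small. The sketch below assumes a CSV template with three made-up required columns (`customer_id`, `order_date`, `amount`); the column names and the `validate_template` function are hypothetical, and a real version would likely also check types and date formats.

```python
import csv
import io
from typing import List

# Hypothetical template: the exact columns a submitted file must have.
REQUIRED_COLUMNS = ["customer_id", "order_date", "amount"]


def validate_template(csv_text: str) -> List[str]:
    """Return a list of problems; an empty list means the file is accepted."""
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader, None)
    if header is None:
        return ["file is empty"]

    problems: List[str] = []
    missing = [c for c in REQUIRED_COLUMNS if c not in header]
    extra = [c for c in header if c not in REQUIRED_COLUMNS]
    if missing:
        problems.append(f"missing columns: {missing}")
    if extra:
        problems.append(f"unexpected columns: {extra}")

    # Flag rows whose field count does not match the header.
    for row in reader:
        if len(row) != len(header):
            problems.append(
                f"line {reader.line_num}: expected {len(header)} fields, got {len(row)}"
            )
    return problems
```

Returning the full list of problems, rather than failing on the first one, means the rejection message sent back to the user can tell them everything to fix in one round trip.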

|

|

|

|

CompeAnansi posted: It depends on the company. If you have a devops/platform team, then generally they do the job of maintaining the tools, and DEs just use them to accomplish data tasks. If you're small enough that you don't have a dedicated team, then DEs generally have to set up, maintain, and use the tools. If you're really small, they might expect an underpaid analyst to do all that and create charts too.

I mean, is data engineering a sub-discipline of devops, like devsec ops or platform engineering, or is it somehow independent? Arguably it's a sub discipline of platform engineering

|

|

|

|

Hadlock posted: I mean, is data engineering a sub-discipline of devops, like devsec ops or platform engineering, or is it somehow independent? Arguably it's a sub discipline of platform engineering

My view is that Data Engineering is most fundamentally about moving data around (and maybe also transforming it). Whether you have to build your own tools, host open-source tools, or use cloud tools is a decision for the company to make and the amount of work the DEs have to do to maintain those tools will change depending on what decisions are made there. There are software engineers that specialize in building data tools (but not using them) and those are the individuals I'd see as falling into the discipline of platform engineering. But I wouldn't call those software engineers "Data Engineers" unless they were also using those tools they built to move data around.

|

|

|

|

Data analyst / BI analyst here who's interested in upskilling into a more direct data engineering role, particularly with a focus on analytics (ie data warehousing, modelling, ETL etc). Currently most of my work is spent running typical adhoc analysis and spreadsheet wrangling.

Aside from taking on work at the office that's more focused on modelling (ie kimball / data warehousing), what are folks' recommendations for other tech professionals looking to upskill into more DE roles? Online MOOCs? Bootcamps? Part-time polytechnic / university?

Particularly on the last two, I have heard that bootcamps tend to have a bad rep outside of web dev / front end circles, is this true? Previously I have read the kind of "you couldn't possibly learn from a bootcamp what I learned in the CS or SWE program I did in undergrad/graduate programs" take. If people do feel this is the case, I'm very open to the insight and to any suggestions (it's not like I can go back in time and change my polytechnic program and all). Part-time undergraduate programs? Post-grad or post-bac polytechnic programs? Save money and go back to school full time in the most data engineering focused program I can find?

Alternatively, I've heard doing your own side projects for building a portfolio is valued but I'm struggling to find a side project in my mind that's doable as a portfolio example while being related to data engineering.

Oysters Autobio fucked around with this message at 03:47 on Mar 2, 2024

|

|

|

There was a huge upswell in demand for bootcamps back when every tech company was thirsty for more data scientists, but nowadays it seems like it's largely a wasteland of hucksters and profiteers. If there's a bootcamp that you think will teach you something that you need to know, then sure, proceed with caution. But under no circumstances should you go to a bootcamp under the impression that it'll give you resume clout. It won't. A degree in computer science will probably help you understand data structures and algorithms, but there's other ways of learning those things. The resume clout thing is similar, I think - every job opening says they want some sort of CompSci diploma but I've had loads of interviews with companies that have said that and I don't have a degree. Really, I think that having lots of personal projects really makes your application pop. I would say that many (but not all) of them should focus on ETL stuff - make a data pipeline with some combination of code, cloud, and some of the toys that every employer seems to want. If you're stuck for ideas, check with Darshil Parmar and Seattle Data Guy for starters.

|

|

|

|

Hadlock posted: I'm curious what is the dividing line between data engineering, and devops/platform engineering/sre

So I think it's hard to draw dividing lines between these disciplines, as they will often overlap. The last few companies I've been in have for example moved away from talking about DevOps as a specific discipline with specific roles tied to it, and moved on to talk about e.g. platform engineering and developer experience.

Hadlock posted: I mean, is data engineering a sub-discipline of devops, like devsec ops or platform engineering, or is it somehow independent? Arguably it's a sub discipline of platform engineering

Personally I see data engineering as a subset of software engineering, we are essentially data backenders. Depending on the job and size/maturity of the project/company you work for, you might also do platform engineering and SRE stuff. At my current place of work we have a mature data platform and a dedicated platform engineering team that helps out with our Kubernetes clusters etc. They don't know the specifics of the data infrastructure but help us easily spin up new clusters, make CI/CD pipelines and so on. However, in smaller companies this could all be on the data engineer or even analysts.

|

|

|

|

Oysters Autobio posted: Data analyst / BI analyst here who's interested in upskilling into a more direct data engineering role, particularly with a focus on analytics (ie data warehousing, modelling, ETL etc). Currently most of my work is spent running typical adhoc analysis and spreadsheet wrangling.

Maybe you'd be interested in pursuing something akin to analytics engineering. With respect to skills I'd value personal projects highly in a hiring situation, comp sci degrees are nice but outranked by actual work experience when I look at CVs. I have no experience with bootcamps unfortunately.

|

|

|

|

monochromagic posted: Maybe you'd be interested in pursuing something akin to analytics engineering

So conceptually, absolutely yeah I would like to do DE work as close to the analytics side as possible. The only thing is that I haven't been able to find material on the domain that isn't just dbt promotional blogs and such.

Are employers actually hiring for positions like this? And if so, outside of just maintaining and creating dbt models, what else does the role entail? Not slagging dbt here, but I haven't seen anything like "analytics engineering with python" or basically anything that constitutes the stack that isn't dbt, so I'm skeptical.

|

|

|

|

Oysters Autobio posted: So conceptually, absolutely yeah I would like to do DE work as close to the analytics side as possible.

It might not be called an analytics engineer, but focusing on analytics engineering as described by dbt (they coined the term) will definitely land you an edge in both data analyst and data engineering jobs. For example, I'd love a more analytics focused engineer in our team - whether the official job title is data analyst or engineer is of less importance.

This blog post describes analytics engineering very well in my opinion. Basically, bringing software engineering practices into the analytics space, for example by designing test suites for analyses, CI/CD for the analytics pipelines, etc. This also means that you will definitely need Python in your arsenal among others.

dbt is also just data as code - something that can be achieved with tools like SQLMesh which looks super interesting. My point here is that dbt is just a tool, but the principles behind analytics engineering (and data engineering, and any kind of engineering) can be applied across tool choices.
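To make "test suites for analyses" a little more concrete without tying it to any one tool: the two most basic data tests are "this column is never null" and "this column is unique" (dbt ships generic tests with those names). The sketch below expresses the same checks in plain Python over a list of dicts; the function names and sample rows are made up for illustration.

```python
from collections import Counter
from typing import Any, Dict, List

Row = Dict[str, Any]


def not_null_failures(rows: List[Row], column: str) -> List[int]:
    """Indexes of rows where `column` is missing or None."""
    return [i for i, row in enumerate(rows) if row.get(column) is None]


def unique_failures(rows: List[Row], column: str) -> List[Any]:
    """Values of `column` that appear more than once (None is ignored)."""
    counts = Counter(
        row.get(column) for row in rows if row.get(column) is not None
    )
    return [value for value, n in counts.items() if n > 1]


# Hypothetical sample data with one null email and one duplicated id.
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 1, "email": "b@example.com"},
]
```

Checks like these can run in CI against a small fixture dataset, or against the real table after each load, which is roughly what the "CI/CD for analytics pipelines" part amounts to in practice.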

|

|

|

|

Hughmoris posted: For those of you currently working as Data Engineers, what does your day-to-day look like? What technologies are you working with?

For context, I'm currently a junior data engineer working in finance - graduated two years ago. My day-to-day is usually something along the lines of

- Company wide standup (Mondays only)
- Go over any code reviews I have and make notes ahead of my morning standup
- Morning standup with my team where we go over what we accomplished, what we are doing for the day, and any blockers we may have
- I'll join the debug session immediately following the standup if I have anything to go over.
- Work on my current tasks.

We also have an on-call rotation for seven day periods where your days are usually restarting Airflow DAGs, dealing with data issues, and answering questions from the business.

We use Airflow for orchestration, dbt, Postgres, K8s, and a ton of in-house tooling using Python and Java.

|

|

|

|

BitAstronaut posted: For context, I'm currently a junior data engineer working in finance - graduated two years ago. My day-to-day is usually something along the lines of

Thanks for the insight!

|

|

|

|

Hughmoris posted: Thanks for the insight!

Sorry, I forgot to post my answer to your original post. I'm a senior level data engineer, angling for a role as a tech lead. My team has a lot of legacy processes that still run on our on-prem Hadoop cluster, but we're migrating to Databricks in Azure. My days are generally split between use case work and capability work.

Our company has a pretty sharp distinction between data science and data engineering. The data scientists are developing new statistical and ML approaches to creating data assets. It's my job to make sure that their code is scalable, that it works with our automation (Airflow, Databricks jobs, ADF), and that they're following good engineering standards with their code. "Productionizing" is the term we came up with to encompass all of that. It's PySpark development, but very collaborative.

On the capability side, that's where I have more flexibility about what I'm doing. Developing new patterns, data pipelines to link together our different systems, that kind of thing. With the migration work that we're currently doing, there is more of that than we'd typically have.

Still, that's a skill that I think is important for data engineers above junior level. You have a number of different tools to accomplish your goals of moving data between different systems, applying transformations, and producing data products or serving models. A lot of data engineering isn't "how do I do this?", but "what's the best way to do this?".

|

|

|

|

Edit; Nevermind

Oysters Autobio fucked around with this message at 16:45 on Apr 19, 2024 |

|

|

|

Just started a new job. Does anyone here use Azure Data Factory? Is there a way to set up dependencies between pipelines that have multiple dependencies? I.e. if we have raw ingest pipelines A and B, and a transformation pipeline C that depends on both A and B succeeding, can it be configured such that C only runs when A and B complete successfully?

The only trigger that seems to do something like this is dependent tumbling window triggers, but I'm wary of this as it seems to be designed for workloads much more frequent than the 'once a day in the morning' runs we are currently doing. Also setting up all those triggers is going to be kind of clunky. https://learn.microsoft.com/en-us/azure/data-factory/tumbling-window-trigger-dependency

I'm really surprised that this functionality doesn't seem to be fleshed out. I've been handed a bit of work started a year ago wherein they've tried to work around it by sending events to Azure Event Grid, which then kicks off a function that runs continually to check if the dependencies have been satisfied and then calls the dependent pipelines with the Azure API (I think). This seems like a kind of overengineered solution that's punching out of ADF, which is nominally supposed to be the orchestration platform, and isn't really what Event Grid is designed for anyway.

While writing this it just occurred to me that you could create a new DAG/pipeline that calls other pipelines from within it and has dependencies between them, but then that whole process would need to be triggered at a certain time (I think), so you'd lose flexibility if you wanted to kick off pipelines A and B at very specific times. IDK. Anyone else dealt with this? Googled reddit posts say 'just use airflow', which isn't really an option.

|

|

|

|

Feels like I've been bamboozled a bit since I'm new to proper data engineering work and orchestration, but it seems like the logical solution to 'We have some tasks that extract and load data and then another task that transforms the data' is just to create another DAG that includes the transform tasks as dependencies of the extract and load tasks. This seems like the most logical and standard way to do it honestly, my only concern is that it seems like you'd lose granular control of when the extract and load tasks kick off, and that as the complexity of the thing grows it could get unwieldy. But probably not that unwieldy as most of the transformation is sequestered into databricks notebooks and the ingests are pretty modularised anyway
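The "one DAG where the transform depends on both extract/load tasks" idea boils down to a topological ordering, which is what any orchestrator computes under the hood. A minimal sketch using Python's standard-library `graphlib` (3.9+) is below; the task names are hypothetical and this is an illustration of the dependency structure, not ADF or Airflow code.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Map each task to the set of tasks it depends on. Names are hypothetical.
dependencies = {
    "extract_load_a": set(),
    "extract_load_b": set(),
    "transform_c": {"extract_load_a", "extract_load_b"},
}


def run_order(deps):
    """Return one valid execution order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())


order = run_order(dependencies)
```

In `order`, `transform_c` always comes after both extract/load tasks, while the two extract/load tasks can appear in either order since they do not depend on each other. That independence is also what lets an orchestrator run them in parallel.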

|

|

|

|

If pipelines A and B are specific to what you're doing in C, the easiest solution is to run them all in a larger pipeline with Execute Pipeline activities like you were saying.

If A and B are separate or are used by other processes, then I'd look at it from a dependency management perspective rather than as directly preceding tasks. If you can programmatically check the status of A and B (look for output files? or use the ADF API to check pipeline runs?), you can begin C with an Until activity that does those checks with a Wait thrown in so you only check every 15 minutes or something. That's pretty much an Airflow Sensor, which I assume is what people were telling you to use.

Good luck with ADF. Our team barely got into it, hated it, put our cloud migration on hold for like 6 months, and then switched to Databricks Workflows.
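The Until-plus-Wait pattern (or an Airflow Sensor) is a poll loop with a timeout. Here is a minimal sketch of that logic in plain Python, assuming each dependency can be expressed as a function returning True once it is done. `wait_for_dependencies` and its parameters are hypothetical names; the actual check (output files present, pipeline run status from an API) is deliberately left to the caller.

```python
import time
from typing import Callable, Iterable


def wait_for_dependencies(
    checks: Iterable[Callable[[], bool]],
    poll_seconds: float = 900.0,          # 15 minutes between checks
    timeout_seconds: float = 6 * 3600.0,  # assumed cutoff; give up after this
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll until every check passes; return False if the timeout is hit."""
    checks = list(checks)
    deadline = clock() + timeout_seconds
    while True:
        if all(check() for check in checks):
            return True   # all upstream dependencies are done
        if clock() >= deadline:
            return False  # caller should alert rather than run C
        sleep(poll_seconds)
```

The timeout matters as much as the polling: without it, a missed upstream run leaves the downstream job waiting silently. Returning False gives the caller a clear place to raise an alert.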

|

|

|

|

BAD AT STUFF posted: If pipelines A and B are specific to what you're doing in C, the easiest solution is to run them all in a larger pipeline with Execute Pipeline activities like you were saying.

Yeah, the datasets are going to be combined a fair bit eventually I think so the latter solution is probably better. At the end of the day everything ends up in the data lake in a relatively consistently named format so it would probably be possible to check for it. I guess you'd still need to trigger the transform pipeline to a schedule that roughly conforms with when the extract/load pipelines run though right? But these are daily loads so I don't think it really matters.

I'm right in saying that their proposed solution of event grid/azure functions seems like an antipattern right? I've not seen any resource recommending this solution. Event grid seems to be designed for routing large amounts of streaming data which this isn't, and azure functions seems to be for little atomic operations which this isn't, if the function has to be constantly running to retain state.

Yeah ADF seems nice and friendly but you rapidly run up against the limits of it. They're all in on Azure though so I don't think that's changing. At least we have the escape hatch of just calling Databricks notebooks when we run into a wall though.

|

|

|

|

Why are they using ADF Pipelines instead of Workflow Orchestration Manager given that they're hiring and paying data engineers? Feels like running up against the limits of Pipelines means moving to a proper system like Workflow Orchestration Manager (aka Airflow).

|

|

|

|

To any Spark experts whose eyeballs may be glancing at this thread: I'm applying for a job and Spark is one of the required skills, but I'm fairly rusty. They sprang an assignment on me where they wanted me to take the MovieLens dataset and calculate:

- The most common tag for a movie title and
- The most common genre rated by a user

After lots of time on Stack Overflow and Youtube, this is the script that I came up with. At first, I had something much simpler that just did the assigned task, but I figured that I would also add commenting, error checking, and unit testing because rumor has it that this is what professionals actually do. I've tested it and know it works but I'm wondering if it's a bit overboard? Feel free to roast.

code:

|

|

|

|

CompeAnansi posted: Why are they using ADF Pipelines instead of Workflow Orchestration Manager given that they're hiring and paying data engineers? Feels like running up against the limits of Pipelines means moving to a proper system like Workflow Orchestration Manager (aka Airflow).

No idea, I assume they wanted to keep everything in the Microsoft stack or something. This project is still pretty nascent so maybe it just hasn't been considered. And I didn't know Azure had a managed Airflow service, that's great! Exactly the problem Airflow is meant to solve. I'll bring it up.

|

|

|

|

Generic Monk posted: Yeah, the datasets are going to be combined a fair bit eventually I think so the latter solution is probably better. At the end of the day everything ends up in the data lake in a relatively consistently named format so it would probably be possible to check for it. I guess you'd still need to trigger the transform pipeline to a schedule that roughly conforms with when the extract/load pipelines run though right? But these are daily loads so I don't think it really matters.

Yes. If you're checking to see if your upstream dependencies have been completed, you'd need to schedule the pipeline run so those checks can start. If they're daily runs, that's pretty easy.

Generic Monk posted: I'm right in saying that their proposed solution of event grid/azure functions seems like an antipattern right? I've not seen any resource recommending this solution. Event grid seems to be designed for routing large amounts of streaming data which this isn't, and azure functions seems to be for little atomic operations which this isn't, if the function has to be constantly running to retain state.

An event driven approach can absolutely be a good thing, but I'd be wary about going down that route unless there's a well established best practice to follow. My company has an internal team that created a dependency service. You can register applications and set what dependencies they have. The service will initiate your jobs after the dependencies are met, and then once your job completes you send a message back to the service so that processes that depend on you can do the same thing.

There are a lot of little things that can bite you with event driven jobs, though, which is why they created that service. If your upstream jobs don't run, what kind of alerting do you have to know that you're missing SLOs/SLAs? How do you implement the logic to trigger the job only once both dependencies are done?

For a single dependency it's easy: when the message arrives in the queue, kick off your job. Handling multiple jobs would be harder, and that's the thing I wouldn't want to do without docs to work from (which I didn't see for event grid in a couple minutes of searching).

I suppose you could write an Azure function that is triggered on message arrival in queue A and checks to see if there's a corresponding message in queue B. If so, trigger pipeline C. If not, requeue the message from queue A with a delay (you can do this with Service Bus, not sure about Event Grid) so that you're not constantly burning resources with the Azure function. I think that would work, but I wouldn't be comfortable with it unless I had a lot of testing and monitoring in place.

That's where I'd push back on the people making the suggestion. If there are docs or usage examples that show other people using the tools this way, then great. If not, that's a big red flag that we're misusing these managed services.
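The "trigger only once both dependencies are done" logic can be sketched independently of any queueing service. The class below is a hypothetical in-memory illustration, not Event Grid or Service Bus code: it records completion events per run key (for example a run date) and reports exactly once when every required upstream job has checked in.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, Set


class DependencyTracker:
    """Fire a downstream job once per run key, after all upstream jobs report in."""

    def __init__(self, required: FrozenSet[str]) -> None:
        self.required = required
        self._seen: Dict[str, Set[str]] = defaultdict(set)
        self._fired: Set[str] = set()

    def on_completed(self, job: str, run_key: str) -> bool:
        """Record a completion event; return True if the downstream job should start now."""
        if job not in self.required:
            return False  # ignore events from unrelated jobs
        self._seen[run_key].add(job)
        ready = self._seen[run_key] >= self.required
        if ready and run_key not in self._fired:
            self._fired.add(run_key)  # guard against duplicate deliveries
            return True
        return False
```

The state here lives in memory, which is exactly the part that gets hard in a real deployment: a stateless function would need that state in a durable store, plus handling for duplicate and out-of-order messages and alerting when one upstream never arrives. That is the testing and monitoring burden described above.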

|

|

|

|

BAD AT STUFF posted: Yes. If you're checking to see if your upstream dependencies have been completed, you'd need to schedule the pipeline run so those checks can start. If they're daily runs, that's pretty easy.

Yeah, the added complexity of this is what skeeves me out. I think they got the idea from the SWE side of the organisation, which is heavily into kubernetes/microservices from what I can see.

We're a small team, so productionising this does not seem like the best use of resources when something like managed Airflow would work just as well for our use case, with there already being documentation and a huge community of practice behind it.

|

|

|

|

|


|

Anyone have good resources or guides on functional programming styles in pyspark?

I've seen a few style guides for pyspark, but would really like to see some more in-depth walkthroughs with concrete step by steps and/or practice guides for common data engineering patterns with pyspark. Sort of "idiomatic pyspark" or whatever you want to call it.

Bonus points for:

- some kind of useful data testing guides for data pipelines or even just adhoc / batch ETL.
- ETL practices with Jupyter notebooks (we don't have any sort of orchestration or workflow system like airflow. It's all just notebooks).

|

|

|