|

LCD Deathpanel posted: Just wait until you strap a custom cooler on the videocard and start getting

I've thought about getting one actually, a 7950 at 1100 can get sorta warm.

|

|

|

|

|

| # ? May 9, 2024 15:03 |

|

Endymion FRS MK1 posted: I've thought about getting one actually, a 7950 at 1100 can get sorta warm.

I just went from a custom-cooled 6950 (still working, but the digital outputs died, so it's being used by the wife) to a 7950, not custom-cooled. It's so loud. I'm getting really close to picking up a cooler for it. But I'm waiting for one more generation of card, then I'll do it.

|

|

|

|

Siochain posted: I just went from a custom-cooled 6950 (still working, but the digital outputs died, so it's being used by the wife) to a 7950, not custom-cooled. It's so loud

Luckily Gigabyte's Windforce cooler is pretty good and relatively quiet. Much better than the Sapphire 6950 and its cooler, which it replaced.

|

|

|

|

Here's a fun one, since I've been on an electronics kick recently: Overclocking the Game Boy Color: https://www.youtube.com/watch?v=nwJQxD8LLNY

|

|

|

|

Endymion FRS MK1 posted: Luckily Gigabyte's Windforce cooler is pretty good and relatively quiet. Much better than the Sapphire 6950 and its cooler, which it replaced.

I've got the Gigabyte. It's so loud compared to the Arctic Cooling Accelero Xtreme III I was running on the 6950 hahah.

|

|

|

|

Factory Factory posted: Here's a fun one, since I've been on an electronics kick recently: Overclocking the Game Boy Color:

I'm surprised how high it goes without locking up! That's pretty awesome.

|

|

|

|

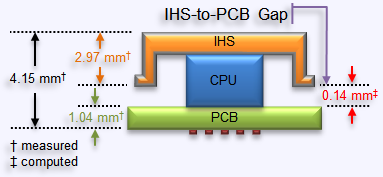

This is probably old reading for people but whatever. Whether you advocate delidding/direct die cooling or not, I found this post on the AnandTech forums really entertaining in a positive way. The poster is quite meticulous in a very scientific/engineery way. http://forums.anandtech.com/showthread.php?t=2261855

Shaocaholica fucked around with this message at 02:09 on May 20, 2013 |

|

|

|

Does anyone know how to run a Radeon 4870 at more than 1.276 volts? In ATI Tray Tools whenever I try to set the voltage higher it sets the clock speed to default and doesn't save the new voltage.

|

|

|

|

You could try MSI Afterburner if tray tools is giving you problems, or you could reflash the BIOS with one configured for a higher voltage. Are you sure the extra voltage is worth it? Unless you're doing a suicide run on the card, 1.276V is pretty close to the card's limits as it is, IIRC.

|

|

|

|

Finally got an NH-D14 to replace my Hyper 212+. Doesn't come with PWM fans though. I'm pretty impressed. Ran my i5-3570K (that is a horrible OC-er) through 10 runs of IBT at 4.4 GHz and 1.31 V, only cracked 100C a couple times. Even a 12 hour Prime95 run ran high 80s, this time with the ULN adapter. Hopefully they release a mounting system for whatever socket Skylake uses, would love to keep this thing when I upgrade.

|

|

|

|

Those voltages and temperatures are both really high. You probably want to drop down a multiplier or two unless you're planning on buying a new CPU soon.

|

|

|

|

craig588 posted: Those voltages and temperatures are both really high. You probably want to drop down a multiplier or two unless you're planning on buying a new CPU soon.

It's not running those voltages 24/7, just when under load. So that's fine, right?

|

|

|

|

Ehhh. That does help, don't get me wrong, and most people don't actually do the "100% load 24 hours a day" type loading that a 24/7 overclock is geared to, but you are around the range where if you need to keep using the chip 4-5 years down the line, you suddenly might not be able to. Three years if you're unlucky, and with a chip that doesn't clock well to begin with, you may well be unlucky.

|

|

|

|

SanitysEdge posted: Does anyone know how to run a Radeon 4870 at more than 1.276 volts? In ATI Tray Tools whenever I try to set the voltage higher it sets the clock speed to default and doesn't save the new voltage.

That's probably the max you can get through the voltage control in the BIOS. If you want higher you will need to do a pencil mod or solder on a variable resistor to increase it.

Factory Factory posted: Unless you're doing a suicide run on the card, 1.276V is pretty close to the card's limits as it is, IIRC.

The 48xx series could eat plenty of volts. I still have my volt-modded 4850 running on 1.4v after all these years. But I wouldn't advise everyone to go do that.

|

|

|

|

ATI Tray Tools is ancient, and doesn't even work on anything newer than XP as far as I know. Use Afterburner and select extend official overclocking limits (if needed). If it still won't let you go any higher, then you're at the limits of voltage without volt-modding the card like lovely Treat said. Performance-wise, a newer card might be a better choice over spending a lot of effort on the 4870 though.

edit: Also the Afterburner betas changed again. I updated my 6970's drivers since I was having issues in Metro:LL, and it turned out that some old Afterburner driver hooks were still in place. Completely removing & reinstalling Afterburner's newest beta made things work properly again, and you don't even have to use the /XCL switch to bypass overclocking limits now. Afterburner's finally approaching the ease of use that ATT and ATItool had years ago (although with vastly more complicated drivers).

future ghost fucked around with this message at 22:23 on May 24, 2013 |

|

|

|

Endymion FRS MK1 posted: Finally got an NH-D14 to replace my Hyper 212+. Doesn't come with PWM fans though

They will. Noctua are pretty good about supporting their gear.

|

|

|

|

HalloKitty posted: They will. Noctua are pretty good about supporting their gear.

Awesome. I was thinking about replacing the fans eventually. Any good PWM fans cheaper (and preferably quieter) than Noctua's F12 and whatever the 140mm equivalent is?

Factory Factory posted: Ehhh. That does help, don't get me wrong, and most people don't actually do the "100% load 24 hours a day" type loading that a 24/7 overclock is geared to, but you are around the range where if you need to keep using the chip 4-5 years down the line, you suddenly might not be able to. Three years if you're unlucky, and with a chip that doesn't clock well to begin with, you may well be unlucky.

Hmm. Might back down to the old 4.3 @ 1.24 I had going. I keep hitting that voltage wall once I try to go above it.

|

|

|

|

Anyone remember how I messed with my 670's BIOS and got it to run at 1300 MHz no matter the load? I also said no one should do it because of how scary it was to actually create a functional BIOS (you had to half corrupt a BIOS and splice two edited BIOS halves together, it was a mess). I just noticed there's now a tool to mess with your power target. I haven't personally used it, but I did load up my manually modded BIOS with it, and it confirmed the changes I made and validated the checksum, so it's probably pretty safe.

http://rog.asus.com/forum/showthread.php?30356-Nvidia-Kepler-BIOS-Tweaker-v1.25-f%FCr-GTX-6xx-Reihe-680-670-660-650-UPDATE

With a modded power target you get to keep the power management features, unlike that K Boost overclocking feature that makes the card ignore all of the sensors. As long as you keep track of your temperatures and have a big enough power supply, it should be pretty safe to set the power target to whatever you want. I've been running the equivalent of 200% for something like 8 months now without any issues.

Edit: Found the screenshot of the log from back then. I guess it's not always sticking to 1300 MHz, but it's far tighter than it was before that. This card used to have greater than 200 MHz swings during gameplay.

craig588 fucked around with this message at 04:26 on May 25, 2013 |

|

|

|

I'm looking to get some performance numbers for an image resizing tool that can use either CPU or GPU+CPU. It's called Photozoom Pro. The trial should work as it has everything turned on except that it watermarks the output image. It even comes with a sample image, which could serve for the test. It's such a niche tool that there isn't any real performance scaling data out there, and I'm trying to figure out a sweet spot to build a few machines to run it for work.

http://www.benvista.com/downloads

Here's what I'm getting with my old home machine:

CPU: Q6600 @ 2.83 GHz - 1m:17s (77 seconds)
GPU: GTX460 - 0m:17s (17 seconds)

Here's how I'm running it:

1) Start the app
2) Choose 'Later' for registration/serial entry
3) The sample image should be loaded automatically
4) On the left panel, set 'Width' to 400%. Height should automatically update to match
5) On the left panel, set 'Resize Method' to 'S-Spline Max'
6) On the left panel, set 'Preset' to 'Photo - Extra Detailed'
7) Hit the 'Save' button in the upper left
8) Choose 'No' on registration again
9) Choose TIFF as the format and use whatever filename
10) TIFF options I'm using are 'LZW' for compression and 'IBM PC' for byte order, not that these should affect the performance
11) Hit 'OK' and the elapsed time shown should be the GPU score, since the app uses GPU by default
12) Go to Options > Preferences > Processing, uncheck 'Use GPU acceleration', and hit OK
13) Hit the 'Save' button in the upper left again; the previous settings will be the same
14) Choose 'No' on registration again
15) Choose TIFF as the format and use whatever filename, overwrite is fine
16) TIFF options I'm using are 'LZW' for compression and 'IBM PC' for byte order
17) Hit 'OK' and the elapsed time shown will now be the CPU score

I would be really grateful if anyone here can test with a stock and OC 3770 as well as GTX680 or faster GPUs.

Right now it's really painful on my old desktop, as my current work project requires hundreds of thousands of images to be processed (video frames) and it's going to take days.

Shaocaholica fucked around with this message at 00:18 on May 26, 2013 |

|

|

|

CPU: i5-2500K @ 3.4 GHz - 45 seconds
CPU: i5-2500K @ 4.6 GHz - 33 seconds
GPU: Radeon 6850 @ 850 MHz (OpenCL) - 26 seconds

|

|

|

|

Factory Factory posted: CPU: i5-2500K @ 3.4 GHz - 45 seconds

Thanks! The 6850 numbers are pretty poor compared to my old GTX 460.

Tested on some machines at work:

HP xw8600
Dual Xeon X5482 3.2 GHz (2x4 cores) - 33 seconds
Quadro FX5600 (8800 Ultra) - 18 seconds

HP z800
Dual Xeon X5570 (2x4 cores, HT off) - 28 seconds
Quadro FX4800 (GTX 260? Haha, why are you inside a z800???) - 17 seconds

The X5570 is barely better than the X5482.

edit: Oh the X5570 isn't all that new. I gotta find someone's z820!

Shaocaholica fucked around with this message at 00:00 on May 26, 2013 |

|

|

|

The 6000 series isn't particularly known for GPGPU. If someone had a GCN-based card and ran the test, I'm sure it would come out better. Or it could be a CUDA vs. OpenCL thing.

|

|

|

|

Factory Factory posted: The 6000 series isn't particularly known for GPGPU. If someone had a GCN-based card and ran the test, I'm sure it would come out better. Or it could be a CUDA vs. OpenCL thing.

I think the app is only OpenCL. At least the graphic says so, but that could be wrong.

edit: Correction on how the app works. It looks like with GPU acceleration on it still pegs the CPU pretty hard (I've seen 90% over 8 cores), so 2 machines with the same GPU but different CPUs should give different results.

Shaocaholica fucked around with this message at 00:20 on May 26, 2013 |

|

|

|

Not exactly what you were looking for, but what I have, so what the hell.

AMD Radeon 6950 unlocked to 6970 @ 850/1300 (effectively a slightly underclocked 6970): 14 seconds
Intel Core i5-2500K @ 4.3GHz: 37 seconds

HalloKitty fucked around with this message at 00:49 on May 26, 2013 |

|

|

|

Just for fun:

CPU: i5-2500K @ 4.6 GHz (3 cores) - 43 seconds
CPU: i5-2500K @ 4.6 GHz (2 cores) - 1:05 (65 seconds)
CPU: i5-2500K @ 4.6 GHz (1 core) - 2:07 (127 seconds)
CPU: i5-2500K @ 4.0 GHz - 38 seconds

If I'm calculating the speedup stuff right, your algorithm of choice basically scales perfectly with clocks and all-but-perfectly with cores. Probably why it's a GPGPU app.

Factory Factory fucked around with this message at 00:44 on May 26, 2013 |
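For anyone who wants to check the speedup arithmetic, here is a rough Python sketch. It assumes the 4-core figures come from the earlier i5-2500K results (45 seconds at 3.4 GHz, 33 seconds at 4.6 GHz); the function names are made up for illustration and are not from any tool mentioned in the thread.

```python
# Timings reported in this thread for the i5-2500K, CPU-only runs.
core_times_s = {1: 127, 2: 65, 3: 43, 4: 33}   # cores -> seconds at 4.6 GHz
clock_times_s = {3.4: 45, 4.0: 38, 4.6: 33}    # GHz -> seconds on 4 cores


def core_scaling(times):
    """Return {cores: (speedup, efficiency)} relative to the 1-core run."""
    base = times[1]
    return {n: (base / t, base / t / n) for n, t in sorted(times.items())}


def clock_work(times):
    """Return {GHz: seconds * GHz}; a constant product means time scales as 1/clock."""
    return {ghz: t * ghz for ghz, t in sorted(times.items())}


if __name__ == "__main__":
    for n, (speedup, eff) in core_scaling(core_times_s).items():
        print(f"{n} core(s): speedup {speedup:.2f}x, efficiency {eff:.0%}")
    for ghz, work in clock_work(clock_times_s).items():
        print(f"{ghz} GHz: {work:.0f} GHz-seconds")
```

With these numbers the parallel efficiency stays above 95% at every core count, and seconds times GHz stays within about 1% across the three clock speeds, which is what "scales perfectly with clocks and all-but-perfectly with cores" looks like in practice.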

|

|

|

Factory Factory posted: Just for fun

Thanks! That was with GPU acceleration off, right?

Shaocaholica fucked around with this message at 01:27 on May 26, 2013 |

|

|

|

Yep.

|

|

|

|

Got some great data from the AnandTech forums. Best yet is this:

3930K @ 4 GHz with 2x GeForce 680s
CPU time: 17 seconds, 100% CPU usage
1 GPU time: 8 seconds, 100% CPU usage
2 GPU time: 8 seconds, 100% CPU usage

Clearly it can't use SLI, but the faster 3930K beat out a 990X + Titan. I'll post a full chart tomorrow of all the sample points I get. And on Tuesday when I get back into work I should be able to test on a LGA2011 2x12 core and 2x16 core workstation.

Shaocaholica fucked around with this message at 02:00 on May 26, 2013 |

|

|

|

The CPU time on that 3930K suggests that L3 cache/memory speed is important, too, since those are the same cores as my 2500K with lower clock speeds, but with twice the L3 cache (and more per core) and probably ~double+ the RAM bandwidth. And if the 3930K beats out the i7-990X, that means architecture IPC makes a big difference, too (which we already got from my SNB i5 knocking around the Core 2 Quad at close clocks). So basically, the more and newer the computer, the merrier.

Factory Factory fucked around with this message at 02:21 on May 26, 2013 |

|

|

|

Factory Factory posted: The CPU time on that 3930K suggests that L3 cache/memory speed is important, too, since those are the same cores as my 2500K with lower clock speeds. And if the 3930K beats out the i7-990X, that means architecture IPC makes a big difference, too. So basically, the more and newer the computer, the merrier.

But it's also got 50% more cores. Anyway, the Xeons I'll be using at work should have plenty of cache. The latest and greatest LGA2011 Xeons are still Sandy Bridge, right?

edit: 990X was stock. Anything without a note is stock.

Shaocaholica fucked around with this message at 02:23 on May 26, 2013 |

|

|

|

What was the 990X clocked at?

Edit: and how much did it lose by?

Double edit: found the thread on AnandTech, it's OK

HalloKitty fucked around with this message at 02:24 on May 26, 2013 |

|

|

|

From the trend line I got for time vs. core, if I gave my i5-2500K @ 4.6 GHz two more cores and sized the L3 cache proportionately, I'd be finishing in 22 seconds, not 17. And that 3930K is at a 600 MHz clock disadvantage.
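The trend line itself wasn't posted, so the following is only a guess at one way to get a similar number: a least-squares power-law fit, t = a * cores^(-b), over the four CPU-only 4.6 GHz timings from this thread. The function names are hypothetical, and the fit says nothing about L3 cache or memory bandwidth.

```python
import math

# CPU-only timings reported in this thread for the i5-2500K @ 4.6 GHz: cores -> seconds.
core_times_s = {1: 127, 2: 65, 3: 43, 4: 33}


def fit_power_law(times):
    """Least-squares fit of log(t) = log(a) - b*log(n); returns (a, b)."""
    xs = [math.log(n) for n in times]
    ys = [math.log(t) for t in times.values()]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return math.exp(my - slope * mx), -slope


def predict_seconds(a, b, cores):
    """Predicted run time for a given core count under t = a * cores**(-b)."""
    return a * cores ** (-b)


if __name__ == "__main__":
    a, b = fit_power_law(core_times_s)
    print(f"exponent b = {b:.3f} (1.0 would be perfect core scaling)")
    print(f"predicted 6-core time: {predict_seconds(a, b, 6):.1f} s")
```

Under that assumption the extrapolated 6-core time comes out at roughly 22 seconds, in line with the estimate above, so the 3930K's 17 seconds is faster than core count alone would explain.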

|

|

|

|

HalloKitty posted: What was the 990X clocked at?

990X was stock. Here's the data:

CPU: i7 990X (stock) - 22 secs
GPU: Geforce GTX Titan (stock) - 10 secs

|

|

|

|

Why would a man leave his 990X at stock and buy a Titan? The mind absolutely boggles. I bet he could beat the 8s score if he overclocked his 990X, or at least match it.

|

|

|

|

Found the AnandTech thread and reading through it... Looks like Hyperthreading makes a really big difference. The i7-3770 time solidly thwomps my i5-2500K@4.6. Makes me curious how an FX-8 chip would do.

|

|

|

|

Factory Factory posted: Found the AnandTech thread and reading through it...

AMD stuff would be interesting academically but totally not feasible for actual deployment on my project. Whatever hardware I come up with has to fit in a Z800 or Z820.

|

|

|

|

Ran the test on my laptop, which has an unsupported graphics card (Nvidia GT650m), so I have the CPU results only. I doubt you really need the data anyway.

Samsung 550P5C, i7-3610QM - 1:18 (78 seconds)

|

|

|

|

Dodoman posted: Ran the test on my laptop which has an unsupported graphics card (Nvidia GT650m), so I have the CPU results only. I doubt you really need the data anyway

I won't turn it down! I also got i7-3630QM results from the other thread.

|

|

|

|

i3-3220: 57s
Radeon 7870: 18s

A bit surprised by the mediocre result from the Radeon.

e: possibly hampered by the i3.

|

|

|

|

|

|

Ran it on my AMD rig just for fun:

1090T @ 3.8GHz - 43 seconds
HD 7950 - 11 seconds

|

|

|