I liked my Phenom II 965BE. But that was when it really was a better value for the dollar. I was an AMD fanboy for a long, long time, for no other reason than cost for performance at around the $100 range. Also the sockets lasted a long time. Once the FX series hit I looked at the numbers and thought "well, mine is literally just as good as those, if not better in some ways," and then the second FX series came around and, I don't know, is it the third now? ... now I have Intel.

Anyways, I think you should leave turbo on and figure out what's up with your cooling, if anything. I never got close to those temps until I hit 4.0 GHz (and that was with auto tuning; the vcore was nuts). I wish AMD would be competitive again just for the sake of healthy competition.

Anyways, I had a PSU question. If a PSU is 90% efficient but is rated for 750 watts, does that mean it will pull 833 watts from the wall to produce 750 watts in the case, or will it only ever pull 750 watts from the wall and deliver less wattage in the case after efficiency loss? I ask because I used the "eXtreme Power Supply Calculator" and at my overclock and vcore I'm brushing close to 700 watts, plus it does not take into account increased wattage from the two overclocked video cards. I understand it's an unrealistic 100%-everything-running extreme scenario, but the overclocked video cards might make that closer to accurate than not.

Ignoarints fucked around with this message at 19:44 on Mar 13, 2014
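For anyone who wants to check the arithmetic in the PSU question: a PSU's wattage rating is the DC power it can deliver to the components, so the wall draw is the delivered load divided by the efficiency. The sketch below is only an illustration. The function name is made up, and a flat 90% efficiency is an assumption taken from the question (real efficiency varies with load).

```python
def wall_draw_watts(dc_output_watts: float, efficiency: float) -> float:
    """AC power pulled from the wall to deliver a given DC load.

    Assumes a single flat efficiency figure (e.g. 0.90); real units
    vary in efficiency depending on how heavily they are loaded.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be a fraction in (0, 1]")
    return dc_output_watts / efficiency


if __name__ == "__main__":
    # Full rated load from the question: 750 W delivered at 90% efficiency.
    print(f"{wall_draw_watts(750, 0.90):.0f} W at the wall for 750 W delivered")
    # The ~700 W estimate from the calculator, under the same assumption.
    print(f"{wall_draw_watts(700, 0.90):.0f} W at the wall for 700 W delivered")
```

So under that assumption the first reading in the question is the right one: the 750 W is what the case gets, and the loss shows up as extra draw (and heat) on the wall side.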


cisco privilege posted:
AMD CPUs are garbage, and you'd be laughed out of the parts-picking thread for suggesting them.

According to comparisons, they stack up pretty well with similarly priced Intel chips. (Prices according to Newegg)

AMD FX-8320 - $179
Intel Core i5 3350P - $179
http://cpuboss.com/cpus/Intel-Core-i5-3350P-vs-AMD-FX-8320

AMD FX-8350 - $199
Intel Core i5 3470 - $189
http://cpuboss.com/cpus/Intel-Core-i5-3470-vs-AMD-FX-8350

Again guys, I'm not saying AMD is better in any way, I'm simply postulating that it isn't as bad as people seem to think it is.

Ignoarints posted:
I liked my phenom II 965BE

I believe this thread will have everything you need. Complete with graph.


AbsolutZeroGI posted:
According to comparisons, they stack up pretty well with similarly priced Intel chips. (Prices according to Newegg)

We're not harping on this because we hate AMD; I frequently recommend APUs where it makes sense. It's just that the matchup between AMD FX-series CPUs and Intel CPUs is one of the only cases where one product is simply worse without any redeeming characteristics. At the end of the day you can say that you don't think you'll notice or care about the performance difference, but if that's the case you could have just gotten a much cheaper Intel processor.

Edit: Also, I'm not trying to dogpile you and my goal isn't to make you feel bad about your purchase, I just think it's important that readers of this thread get an accurate perception of these products so they can make informed buying decisions.

Alereon fucked around with this message at 20:29 on Mar 13, 2014


AbsolutZeroGI posted:
According to comparisons, they stack up pretty well with similarly priced Intel chips. (Prices according to Newegg)

FX-8320 uses 125 watts versus the Core i5 3350P's 69 watts, and the Core i5 3350P is itself just a binning title for various processors in that series with defects, particularly in the GPU component (which is why the GPU is present but disabled on them). It's also a chip from 2012.

AbsolutZeroGI posted:
AMD FX-8350 - $199

That AMD processor was nearly 6 months newer than the i5 you're comparing it to, both of them released in 2012, and the current $199 is a price cut from the original $219, so if you had bought them when new you'd have paid a full $30 more for the AMD with marginally better performance and vastly worse power usage. Additionally the FX-8350 is 160 watts versus the i5 3470's 77 watts.

To no one's surprise, you couldn't find recent AMD chips competitive with recent Intel chips. The ones you did find were both old and after price cuts on both sides, and the Intel chips still have radical power consumption savings, which mean not only less power spent running the system but also a lot less cooling and thus less noise.


AbsolutZeroGI posted:

Nice, answered completely in one sentence too. Makes me feel better about pushing the GPUs more, then.


Install Windows posted:
FX-8320 uses 125 watts versus the Core i5 3350P's 69 watts, and the Core i5 3350P is itself just a binning title for various processors in that series with defects particularly in the GPU component (which is why the gpu is present but disabled on them). It's also a chip from 2012.

Talking about what processors used to cost is worthless information. What they cost now is the only important metric. Also, I provided links to my findings (sourcing your information is important, especially when you're a stranger on the internet). You have not. I was unable to find comparably priced desktop CPUs that outperform AMD processors in the areas I need them to. If you go back a page and look at my original problem, you'd see that I use this rig predominately for video rendering and not for gaming, so gaming benchmarks take a backseat to heavily threaded benchmarks in my case. Not everyone needs Crysis 3 to run at 63fps instead of 60fps and is comfortable paying nearly double the money for it.

Alereon posted:
You're comparing last-gen Intel CPUs to current-gen AMD CPUs and using pretty awful benchmarks to boot. Use Intel's current price-competitive models and it is an embarrassment. There are cases, specifically heavily multithreaded integer-only workloads, where the AMD FX processors can pull slightly ahead. However, there are many other workloads where the Intel CPUs are up to twice as fast, or it's simply a case where AMD CPUs stutter in games where Intel CPUs won't. I'm just talking about raw performance here, not the fact that the Intel CPUs use half the power, have much better and more capable chipsets, and offer integrated video with hardware acceleration for video decoding and encoding.

You could've fooled me. Usually people who don't hate things are capable of saying something not negative about them, and so far in this conversation I'm the only one who has said "AMD is okay but Intel is better," whereas you guys are putting it in terms of black and white, good and bad, and leaving out the giant gray area in between. Like I said above, I use my rig predominately for video rendering and not for gaming, which is why I bought a processor known for doing well with heavily threaded tasks for a long period of time. And I've seen all the benchmarks and they paint a different picture.

http://www.cpubenchmark.net/cpu.php?cpu=AMD+FX-8320+Eight-Core
8000 is lower than 13000, but if you look at the price/performance ratio, AMD is much higher than all Intel chips.

http://www.cpu-world.com/benchmarks/AMD/FX-8320.html
Same thing: ~35% faster, but those chips are also 200% more expensive.

http://www.futuremark.com/hardware/cpu/AMD+FX-8320/review
Again, the cheapest chip on that chart. (For the sake of being fair, there is a comparably priced Intel chip that does a bit better than the 8320, but it's still nearly $30 more expensive.)

http://www.anandtech.com/bench/product/698?vs=836
At no point does the 4770k outperform the 8320 by more than 200%, yet the 4770k costs more than 200% of the 8320's price. Price/performance ratio intact even on Anandtech.

I've done the homework, and if you only look at gaming benchmarks, the AMD chip does look bad. But when you bench it in any other area, the price/performance ratio meets or exceeds Intel's. If I were trying to build a gaming rig I'd feel really stupid, but I didn't build a gaming rig, nor was I trying to. I would never recommend AMD for a gamer. That's just silly. But for someone on a $800-$900 budget who needed a workstation for compiling code, rendering video, and light game development (which is predominantly 3D modeling and rendering), yeah, I'd say get an AMD.

AbsolutZeroGI fucked around with this message at 22:52 on Mar 13, 2014
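The price/performance argument in the post above boils down to benchmark score divided by price. A minimal sketch of that calculation follows. The 8000 and 13000 scores are the ones mentioned in the post and $179 is the Newegg price quoted earlier in the thread. The $358 price for the faster chip is purely a placeholder (twice $179) for illustration, not a real listing, and the function name is made up.

```python
def score_per_dollar(benchmark_score: float, price_usd: float) -> float:
    """Benchmark points bought per dollar; higher means better value."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    return benchmark_score / price_usd


if __name__ == "__main__":
    # Score and price for the FX-8320 as quoted in the thread.
    fx_8320 = score_per_dollar(8000, 179)
    # 13000 is the higher score from the post; 358 is a hypothetical price.
    faster_chip = score_per_dollar(13000, 358)
    print(f"FX-8320:     {fx_8320:.1f} points per dollar")
    print(f"Faster chip: {faster_chip:.1f} points per dollar")
```

This only captures the sticker-price side of the argument. It says nothing about running costs or platform costs, which is what the replies take issue with.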


For the moment, let's assume you're right on all points. You're still wrong. An FX CPU uses a ton more power than an Intel chip. Even at weekend-warrior use patterns, it's only about two years until you've used so much extra electricity that your initial savings have been eaten up and your FX CPU is now more expensive than the i7.
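The break-even claim above can be sanity-checked with a rough sketch like the one below. Every input here is a placeholder: the up-front saving, the extra wattage, the hours per day, and the electricity price are all assumptions to swap for your own numbers, and the function name is made up.

```python
def break_even_years(upfront_saving_usd: float,
                     extra_watts: float,
                     hours_per_day: float,
                     usd_per_kwh: float) -> float:
    """Years until extra electricity cost cancels an up-front saving.

    extra_watts is the additional average draw of the cheaper chip
    while the machine is in use (an assumed, constant figure).
    """
    extra_kwh_per_year = extra_watts / 1000 * hours_per_day * 365
    extra_cost_per_year = extra_kwh_per_year * usd_per_kwh
    if extra_cost_per_year <= 0:
        raise ValueError("extra running cost must be positive")
    return upfront_saving_usd / extra_cost_per_year


if __name__ == "__main__":
    # Hypothetical inputs: $100 saved up front, 100 W extra draw,
    # 10 hours of use per day, $0.10 per kWh.
    years = break_even_years(100, 100, 10, 0.10)
    print(f"Break-even after about {years:.1f} years")
```

The result swings a lot with usage: a machine that idles most of the day will not see the full extra draw, while one rendering around the clock will hit break-even much sooner.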


AbsolutZeroGI posted:
Talking about what processors used to cost is worthless information. What they cost now is the only important metric. Also, I provided links to my findings (sourcing your information is important, especially when you're a stranger on the internet). You have not. I was unable to find comparably priced desktop CPUs that out perform AMD processors in the areas I need them to. If you go back a page and look at my original problem, you'd see that I use this rig predominately for video rendering and not for gaming so gaming benchmarks take a backseat to heavily threaded benchmarks in my case. Not everyone needs Crysis 3 to run at 63fps instead of 60fps and be comfortable with paying nearly double the money to do so.

If you only care about what they cost now, then you also have to care about the cost, now, to run them, due to their massively greater power draw, which directly relates to the power supply, cooling systems and energy you need to purchase to run them. Quite simply, the full-system price works out cheaper for Intel, especially once you start including power costs at all.

AMD processors are pieces of poo poo, and you've deliberately needed to dig back to Intel chips that were at the end of their generation when the "current" AMD processors came out to find a favorable comparison.


Install Windows posted:
If you only care about what they cost now, then you also have to care about the cost, now, to run them due to their massively greater power draw, which directly relates to the power supply, cooling systems and energy you need to purchase to run them. Quite simply the full-system price works out cheaper for Intel, especially once you start including power costs at all.

I dug up chips that, on Newegg, fell into the Venn diagram of being in the "$100-$200" range and having decent ratings. It's not my fault those are the highest rated Intel chips under $200 and the only two non-i3 chips I could find in the first 3 pages. I don't really have the time to surf through 30 pages of Newegg products just to prove your point for you. If you know a chip that costs under $200 that performs that much better (and is from a reputable retailer; posting a $150 Ivy Bridge from imagiantdouchescammer.com is not an appropriate link), then by all means please post it.

Factory Factory posted:
For the moment, let's assume you're right on all points. You're still wrong.

As a person who frequently straddles the poverty line, I can attest that it's quite easy to justify coming up with a couple of extra bucks per month for the extra electricity cost (I believe 24/7 use is rated at like $30-$40/year). I take exactly one fewer midnight snack trip to Taco Bell every month. It's probably better for me anyway. At the end of two years (assuming I don't scrounge up enough to upgrade my system before then, which I probably will... PC building is a marathon, not a sprint), I will have ingested approx 12,000 fewer calories (6,000 from fat) and my CPU gets to remain running. That actually doesn't sound like all that much of a sacrifice to me.

AbsolutZeroGI fucked around with this message at 23:54 on Mar 13, 2014


Great, you like AMD in the face of all reason and logic. Enjoy.


AbsolutZeroGI posted:
I dug up chips that, on newegg, fell into the Venn diagram of being in the "$100-$200" range and had decent ratings. It's not my fault those are the highest rated Intel chips under $200 and the only two non i3 chips I could find in the first 3 pages. I don't really have the time to surf through 30 pages of Newegg products just to prove your point for you. If you know a chip that costs under $200 that performs that much better (and is from a reputable retailer, posting a $150 ivy bridge from imagiantdouchescammer.com is not an appropriate link) then by all means please post it.

The CPU cost isn't the only portion of the computer, so again you're just spinning bullshit. Spending $200 for the CPU doesn't help when you spend (on average) $100-$300 more on an AMD system than an Intel system after all other parts are accounted for, and before the electricity costs set in.


Even if it wasn't a dumb idea to get an AMD CPU, I had forgotten how loving terrible their chipsets were. They're better than they used to be when your options were AMD, Intel**, SiS, Nvidia (lol), and VIA, but they're still nowhere near as reliable as modern Intel chipsets. It's not worth theoretically saving money to have to deal with lower general performance and bad single-threaded performance, or bizarre chipset-related SATA quirks. Anyone have that graph of average time below X fps or whatever showcasing Bulldozer chips?

AMD boards tend to be cheaper as a result, but with fun caveats like 'overclocking' models with zero PWM heatsinks.

**edit: Intel CPUs were pretty awful then but their chipsets have nearly always been top-notch.

future ghost fucked around with this message at 00:48 on Mar 14, 2014


Dude, I had this exact argument with this forum 3 years ago, and I was only less wrong then, than you are now. http://forums.somethingawful.com/showthread.php?threadid=3380752&pagenumber=16&perpage=40#post396064984


He's literally arguing that AMD processors costing significantly more to operate is a good thing.


Install Windows posted:
The CPU cost isn't the only portion of the computer so again you're just spinning bullshit. Spending $200 for the CPU doesn't help when you spend (on average) $100-$300 more on an AMD system than a Intel system after all other parts are accounted for, and before the electricity costs set in.

I've demonstrated conclusively that you can build an AMD system for less than it costs to build an Intel system. An 8320 + an ASUS M5A97 costs less than $250. Even if you did ever post a link to something (which I'm starting to doubt at this point), a similarly priced Intel + mobo would cost about the same. The rest of the computer is not dependent at all on what type of processor you have, so the rest of the computer would be literally identically priced. I would not continue down that line of thinking because that is absolutely not true.

Yip Yips posted:
He's literally arguing that AMD processors costing significantly more to operate is a good thing.

Jago posted:
Dude, I had this exact argument with this forum 3 years ago, and I was only less wrong then, than you are now.

We're making exactly the same points and being argued with using exactly the same data. "No, AMD sucks because look how much better Intel does on this one video game that's developed on a proprietary gaming engine that like 2-3 other games use and the rest don't." Far Cry 2 was built on a special engine that Ubisoft developed in house, just like Crysis 3 is developed on a heavily modded game engine specifically designed for Crysis games. They are not indicative of how the chips perform on a game developed on your standard Unity/UDK engines. Do you wanna tell me the 4770k does better on the Winzip benchmarks too? It would probably do way better, but it doesn't matter because no one uses Winzip anymore.

Yip Yips posted:
Great, you like AMD in the face of all reason and logic. Enjoy.

I like how you glossed over the part where I abjectly admitted that Intel was better.

Intel > AMD

What I'm arguing here is that AMD doesn't "suck terrible donkey balls in the darkest depths of hell" as you all so eloquently put it. I acknowledged repeatedly that Intel was the superior chip maker, so please stop trying to have this conversation with me on the basis that I think AMD is better. I do not think AMD is better. Intel is clearly better, but AMD is not nearly as terrible as you all seem to think it is. That's my argument. It's really not that difficult.

Do AMD processors perform to a standard that can be considered "not lovely"? The answer is yes. There being chips orders of magnitude better than AMD's does not raise the bar for what constitutes "not lovely"; it simply makes the chips that are orders of magnitude better just that: orders of magnitude better.

AbsolutZeroGI fucked around with this message at 01:39 on Mar 14, 2014


Okay, we've all had our fun arguing about AMD CPUs but it's time to get this thread back on track. We now return to your regularly scheduled short questions thread.


I've got one, even if it isn't currently an issue or even going on anymore. Over the course of the years I've had three (wildly) different computers have their built-in ethernet port's MAC address change to 00:00:00:00:00:10. One of those happened after brownouts, but the other two just happened randomly. Anyone have any idea what's up with that? My biggest regret is that I never got to see what happened when two of them were on the network at the same time, because they just happened to be spaced out over various upgrades or replacements.


Geemer posted:I've got one, even if it isn't currently an issue or even going on anymore. Over the course of the years I've had three (wildly) different computers have their built-in ethernet port's MAC address change to 00:00:00:00:00:10.


Alereon posted:
That's the first valid MAC address, indicating that something wiped it or prevented the MAC address from being read. Are you sure it wasn't being overridden via some software you had installed?

Pretty sure I never had any MAC address changing software on those computers. It was two XP boxes and one Windows Home Server v1, which is the one that got changed during brownouts. I think I didn't even know you could change your MAC address back then. The only times I noticed were in the router's DHCP tables, so I really can't say for sure what was going on in the software department back then.

E: One of the XPs was mine, the other was my dad's. So he probably had different software running than me.
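If you ever catch an odd address like that in a DHCP table again, a few bits of it can be inspected directly. The sketch below is just an illustration: the function name is made up, and treating an all-zero vendor prefix as a "this looks like a placeholder" hint is my own heuristic, not something established in the posts above. The two flag bits (multicast and locally administered) are the standard low-order bits of the first octet.

```python
def describe_mac(mac: str) -> dict:
    """Break a MAC address string into a few basic properties."""
    octets = [int(part, 16) for part in mac.replace("-", ":").split(":")]
    if len(octets) != 6 or any(not 0 <= o <= 255 for o in octets):
        raise ValueError(f"not a 6-octet MAC address: {mac!r}")
    first = octets[0]
    return {
        # Lowest bit of the first octet: group (multicast) address.
        "multicast": bool(first & 0x01),
        # Second-lowest bit: locally administered (software-set) address.
        "locally_administered": bool(first & 0x02),
        # Heuristic only: an all-zero vendor prefix hints at a placeholder.
        "zero_oui": octets[:3] == [0, 0, 0],
    }


if __name__ == "__main__":
    print(describe_mac("00:00:00:00:00:10"))
```

Note that the address from the question does not have the locally administered bit set, so this check alone cannot tell a software override apart from a wiped or unreadable hardware address.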


Did a search and found something only slightly relevant, but the solution wasn't what I was looking for. I have a Creative X-Fi Titanium PCI-e sound card that has SPDIF I/O. I have a game console running Optical OUT to the Creative card SPDIF IN. There's no sound, at all. I know it's not going to be digital 5.1, but it should mix to stereo and I'd be just fine with that. Instead I get nothing. I've tried every bitrate I can and still get nothing. Downloaded the most current DTS/DDL poo poo from Creative, nothing. The forums search led me to someone recommending a desktop USB module to connect to, but I already have the drat card installed. So how do I get SPDIF IN using my sound card?


What game console? If it's a PS3, try disabling Dolby Digital in the audio settings. Apparently some people have trouble getting the X-Fi to decode that. Also stop buying Creative products ;-)

Pivo fucked around with this message at 18:33 on Mar 15, 2014


It's a 360, I should have mentioned. This is the first and only Creative thing I've ever bought – I doubt I'll get another one after spending an afternoon finding no answers to this.


Can you record from it with something like Audacity? Could help determine whether you're really not getting anything through it or if it's just a "monitor input" setting somewhere.


I plugged headphones into the 3.5 jack on the card, and got nothing at all. Moved a PS3 from the other room and connected that, and can get audio out through the LR jack. I guess it's just not going to play nice with my USB preamp. Oh well, screw it.


I got this power supply as a replacement for my living room server machine, because after a recent power failure, the old power supply would switch the fans and some of the voltage lines on full power when the machine was supposed to be soft-off.

Seasonic SS-460FL2 Active PFC F3, 460W Fanless ATX12V Fanless 80Plus PLATINUM Certified, Modular Power Supply New 4th Gen CPU Certified Haswell Ready - http://www.newegg.com/Product/Product.aspx?Item=N82E16817151099

And now, whenever the machine is turned on, one of my cats acts all crazy whenever he's in the living room. The crazy subsides the moment the machine is either powered off or put into sleep mode. Could this be a case of high-pitched coil whine? Should I ask the YOSPOS catte topic instead?


Just picked up a new computer, along with dual monitors...

Video card = Gigabyte Radeon 7870 HD
Monitors = Asus VS238

For some reason my monitors are slightly different colors -- one is using HDMI and one DVI. I assumed they'd match perfectly since it's digital input. The only thing I can think of is that the Catalyst Control Center is setting the HDMI one up as HDMI and the other as standard 1920x1080 format. There are totally different options for both monitors. Sound normal?

The card has a DVI, HDMI and 2 Mini DisplayPort connections.


My lame guess response is that it may be using some stock HDTV profile for the HDMI connected monitor because it's being detected as a TV. I don't know how right that sounds, or how to deal with it, shows how useless my response is.


emotive posted:
Just picked up a new computer, along with dual monitors...

Catalyst CC -> Desktop Management -> Desktop Color -> Reactivate AMD color controls ?

You can also set colour controls for individual displays from CCC. Set those to defaults... should override any profile you have set, hopefully.


kode54 posted:
And now, whenever the machine is turned on, one of my cats acts all crazy whenever he's in the living room. The crazy subsides the moment the machine is either powered off or put into sleep mode.

Probably when you power off or sleep your machine, you also go into sleep mode, and the cat doesn't beg for your attention anymore. This is really more of a "soft"ware question... ;-)


kode54 posted:
My lame guess response is that it may be using some stock HDTV profile for the HDMI connected monitor because it's being detected as a TV. I don't know how right that sounds, or how to deal with it, shows how useless my response is.

Not really a lame guess. It's either profiling these screens differently, or the monitors' settings are out of whack, or they are just different through manufacturing, in which case you'll need to calibrate each one.


3-pin fans plugged into the "Chassis Fan" slots on the motherboard can still be throttled up and down by the mobo automatically, just like the CPU fan, right? I can't remember if modern mobos can do that or if I have to plug my case fans into my fan controller and do it manually (which I hate).


Stinky Pit posted:3-Pin fans plugged into the "Chassis Fan" slots on the motherboard can still be throttled up and down by the MOBO automatically just like the CPU fan right?


I'm too dumb to know. http://www.newegg.com/Product/Product.aspx?Item=N82E16813130700 Looks like all the headers on the board are 4 pin headers which is promising.


Stinky Pit posted:I'm too dumb to know.


Thanks! I'll just have to deal with my case's fan controller.


Pivo posted:
Catalyst CC -> Desktop Management -> Desktop Color -> Reactivate AMD color controls ?

The "reactivate AMD color controls" button is greyed out. The left monitor just seems a little warmer and not as contrasty/bright as the right monitor even though the monitor settings are identical.

Maybe I'll just buy a calibrator -- I should, anyways, since I've been doing a lot of photography.


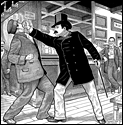

I really, really didn't know where to post this but I guess this is the most accurate thread. In old school installations of software, I'm not sure they were even called install wizards then, it was typically a big blue windowed screen and when the install was actually occurring there were (I think) three sets of bars. One was the hard drive (purple?), one was... I believe the progress of each file being copied, and another one. Anyways this apparently is so old or hard to find now I can't actually find a picture of this anymore. Does anybody know where I can find this again?


Ignoarints posted:
I really, really didn't know where to post this but I guess this is the most accurate thread.

Edit: oh gently caress me this CSS

Alereon fucked around with this message at 21:56 on Mar 16, 2014


Oh that brings back memories


Just looking at that picture hurts... I never knew what all those bars did, and I still don't.
