Don Lapre posted: "Maybe AMD will get lucky and someone will find a cryptocurrency the 390 works great for"
In what way would that alleviate a supply shortage?

Really makes you wonder what AMD was doing in those years though. Radeons were the best thing to mine bitcoins on for at least 2 years. Some altcoins are still mined best with GPUs, so I'm sure some cretins still buy them for that reason. But AMD couldn't manage to get more cards out there? Sounds odd. e: I bought my 6950s to game on, spent 400 euros on them, which seemed like a lot at the time. Then they mined 100 bitcoins.

repiv posted: "There's some logic there: the 750ti is first generation Maxwell, which to the end-user is closer to the 700 series in features since it lacks MFAA, HEVC and HDMI 2.0."
And HEVC decoding is only on GTX 960 and not on 970 or 980.

Truga posted: "Really makes you wonder what AMD was doing in those years though. Radeons were the best thing to mine bitcoins on for at least 2 years. Some altcoins are still mined best with GPUs, so I'm sure some cretins still buy them for that reason."
I guess it's a byproduct of sharing foundry space at TSMC with other companies, and of yields on the relatively large (in mm^2) chips never really getting too fantastic? I dunno, it's an interesting question!

Truga posted: "Really makes you wonder what AMD was doing in those years though. Radeons were the best thing to mine bitcoins on for at least 2 years. Some altcoins are still mined best with GPUs, so I'm sure some cretins still buy them for that reason."
And how many of those buttcoins were you actually able to spend/sell?

I have 8 coins left just in case. Cashed in the rest, when they first hit 500 or so.

MikusR posted: "And HEVC decoding is only on GTX 960 and not on 970 or 980."
Is that something that could be fixed in a driver or is it baked into the silicon?

When I last cared about gpu decoders, it was an entirely separate chip on the card. Probably still is.

Panty Saluter posted: "Is that something that could be fixed in a driver or is it baked into the silicon?"
I think this is how it breaks down:
Decode: Only the 960 has dedicated HEVC decoding silicon, but the driver has a hybrid decoder for any other 1st/2nd gen Maxwell card, which uses a mix of the GPU core and the CPU.
Encode: All 2nd gen Maxwell cards have dedicated HEVC encoding silicon, but there's no fallback for first generation hardware.

Has anyone else been hit by the last .5GB of the 970? I thought nvidia made drivers to avoid it. I have 350.12 and geforce experience told me it had some optimized settings for GTAV. I figured "Screw it, I've been messing with the settings for so long, let's see what they have." I enabled it and started up GTAV. It worked great until I started driving out of town. Once I drove into Blaine County my FPS dropped to 11. I opened up the settings menu and it said my VRAM was at 3.2 something. I lowered a setting to get the VRAM usage down and all of a sudden I was getting 80 FPS again.

Cojawfee posted: "Has anyone else been hit by the last .5GB of the 970? I thought nvidia made drivers to avoid it. I have 350.12 and geforce experience told me it had some optimized settings for GTAV. I figured "Screw it, I've been messing with the settings for so long, let's see what they have." I enabled it and started up GTAV. It worked great until I started driving out of town. Once I drove into Blaine County my FPS dropped to 11. I opened up the settings menu and it said my VRAM was at 3.2 something. I lowered a setting to get the VRAM usage down and all of a sudden I was getting 80 FPS again."
The VRAM bar is pretty inaccurate and the performance problems only started with the patches that came out.

Is the setting you changed Grass Quality by any chance? The higher settings are brutal on performance but they only kick in outside the city.

My card is a 3GB card but I was able to get up to 3.8 used on their slider without crashes, etc. It's just a helpful guide.

Cojawfee posted: "Has anyone else been hit by the last .5GB of the 970? I thought nvidia made drivers to avoid it. I have 350.12 and geforce experience told me it had some optimized settings for GTAV. I figured "Screw it, I've been messing with the settings for so long, let's see what they have." I enabled it and started up GTAV. It worked great until I started driving out of town. Once I drove into Blaine County my FPS dropped to 11. I opened up the settings menu and it said my VRAM was at 3.2 something. I lowered a setting to get the VRAM usage down and all of a sudden I was getting 80 FPS again."
repiv posted: "Is the setting you changed Grass Quality by any chance? The higher settings are brutal on performance but they only kick in outside the city."
^^^ That's a big one, especially since you noted it only happened when you drove outside the city.
Incredulous Dylan posted: "My card is a 3GB card but I was able to get up to 3.8 used on their slider without crashes, etc. It's just a helpful guide."
It shouldn't ever crash, you'd just see stuttering as the card paged stuff to system RAM. You're totally right that the in-game guide is just in general terms though. Best to use something like Afterburner to see what your GPU's memory usage actually looks like.
Speaking of GTA V, I was getting really damned frustrated with a ton of crashes (driver crashes where you'd get the red X message about the driver crashing and recovering). Thought it was the OC on my card at first, dialed everything down to stock or lower, but it still happened. I noticed that Firefox was crashing at the same time as GTA/the driver, started closing FF while gaming, all crashes are gone now, GPU is back to running at 1.6 GHz boost. Mozillaaaaa!
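
For anyone who would rather script the "check real VRAM usage" advice than keep Afterburner open, here is a minimal Python sketch. It assumes an NVIDIA card with the `nvidia-smi` command-line tool on the PATH (it will not work on AMD cards), and the 3584 MiB threshold is only an assumption standing in for the 970's full-speed 3.5 GB partition. The helper names are made up for illustration.

```python
import subprocess

# Assumption: the GTX 970's full-speed partition is 3.5 GB (3584 MiB).
FAST_SEGMENT_MIB = 3584


def parse_memory_csv(text):
    """Parse 'used, total' lines (in MiB) as printed by nvidia-smi in CSV mode."""
    readings = []
    for line in text.strip().splitlines():
        used, total = (int(field) for field in line.split(","))
        readings.append((used, total))
    return readings


def query_gpu_memory():
    """Ask nvidia-smi for used/total VRAM of every GPU (NVIDIA cards only)."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_memory_csv(out)


def past_fast_segment(used_mib, fast_mib=FAST_SEGMENT_MIB):
    """True when usage has spilled beyond the assumed full-speed partition."""
    return used_mib > fast_mib
```

Calling `query_gpu_memory()` while the game is running and feeding the first value of each pair to `past_fast_segment()` gives a rough answer to "am I in the slow half gig or is it something else", which is probably more trustworthy than the in-game slider.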

Gwaihir posted: "Mozillaaaaa!"
Nah, that's 100% NVIDIA being poo poo at drivers.

is there any way to see what programs are using your GPU? in the past few days my GPU usage in windows keeps going to 100% for no discernible reason, which since this is a 290x means that my PC's effectively functioning as a space heater. could it be the GTAV beta drivers I've got installed?

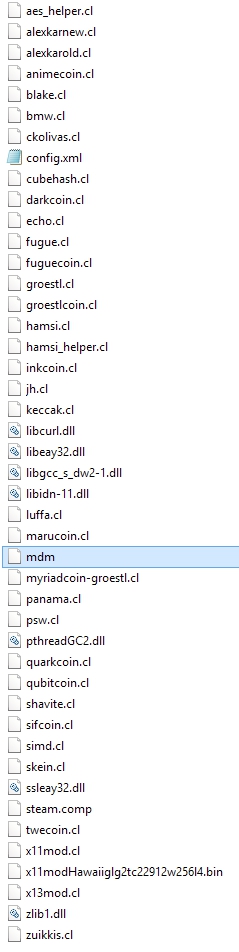

Generic Monk posted: "is there any way to see what programs are using your GPU? in the past few days my GPU usage in windows keeps going to 100% for no discernible reason, which since this is a 290x means that my PC's effectively functioning as a space heater. could it be the GTAV beta drivers I've got installed?"
Check for any unusual folders or programs running. Could be coin mining malware.

Broke down and bought GTA5 since I had a 20 dollar reward. Been installing it over the last 5 hours. Are there any benchmarks built in that I can run?

veedubfreak posted: "Broke down and bought GTA5 since I had a 20 dollar reward. Been installing it over the last 5 hours. Are there any benchmarks built in that I can run?"
yeah

veedubfreak posted: "Broke down and bought GTA5 since I had a 20 dollar reward. Been installing it over the last 5 hours. Are there any benchmarks built in that I can run?"
Settings, advanced graphics, press.. uh, don't know on the keyboard from memory, Y on the 360 pad.

Grim Up North posted: "Nah, that's 100% NVIDIA being poo poo at drivers."
Seconding this, Firefox uses GPU acceleration for everything and it's unable to recover from driver crashes. I can still see disabling FF alleviating problems in games though.

Desuwa posted: "Seconding this, Firefox uses GPU acceleration for everything and it's unable to recover from driver crashes."
....huh. Crap. Am I going to have to move back to Chrome on my CAD lappy?

Desuwa posted: "Seconding this, Firefox uses GPU acceleration for everything and it's unable to recover from driver crashes."
that would explain why firefox runs like garbage on my crappy laptop but chrome is silky smooth

Settings > Advanced. Turn off hardware acceleration. No more problems. NVidia has known driver issues with Fx, to the point where Mozilla keeps a blacklist of cards where hardware accel is automatically disabled. NV isn't invincible to long standing driver issues. Fx uses plain old D2D calls that no other drivers/cards have issues with.

does nvidia 3d vision only work with certified monitors or will it work with any 120hz screen provided i buy their glasses? I just found out my huge korean monitor is 120hz and i have a gtx 970

At what point is it throwing good money after bad? I'm looking to upgrade to one of three videocards - the MSI 960 2GB (~$240), 960 4GB (~$320), or 970 (~$440) to go along with a Phenom II 840 (I've only 4 GB of RAM at the moment but that's easily upgradeable - I'd like to get a bit more life out of my processor). I haven't had a problem playing most games with my aging 5750 since I don't care about graphics as long as my framerate is stable, but I vastly underestimated how poorly GTAV runs - it runs okay up until a car crash, at which point the particle system drops the framerate to nearly nothing for a few seconds. I'm looking forward to playing Witcher 3 and Cyberpunk 2077 soon so I'm hoping to upgrade where I think my system is hurting right now, and that's the GPU. I've looked at GPU comparison sites but I don't know what they mean in terms of real world use. I only have one monitor, and it does 1080p. My question is basically what kind of performance difference can I expect between the cards? Spending the money on the 970 is a bit exorbitant considering how rarely I actually play a videogame that's demanding, but the price difference isn't that much to pay if it means I avoid being frustrated by lack of performance. And I think I just answered my own question.

WorldWarWonderful posted: "At what point is it throwing good money after bad?"
Well if Witcher 3 is on your list of games you want to play, I think anything but a full upgrade is going to leave you unhappy. Witcher 3 minimum requirements are a Phenom II X4 940, 6GB of system RAM and a GTX 660. You could probably overclock your CPU, if you aren't already, to match the performance of a 940 but you would still be at bare minimum for CPU there. As for GPU, I wouldn't spend more than the money to get a GTX 960 if you decide not to upgrade your CPU since you're likely to be CPU limited on modern games as is. Waiting for Witcher 3 to come out and seeing what the benchmarks say would be your best bet. Perhaps they are being overly cautious with the CPU requirements, or perhaps the game is gonna run like crap if you aren't throwing a Sandy Bridge+ CPU at it. One bright side is that nVidia is currently running a promotion where you get The Witcher 3 for free with the purchase of a qualifying GPU, and the GTX 960 and 970 both qualify.

veedubfreak posted: "Broke down and bought GTA5 since I had a 20 dollar reward. Been installing it over the last 5 hours. Are there any benchmarks built in that I can run?"
Make sure you start the game and run through the prologue before launching the benchmark. Otherwise it tries to do both and breaks all sorts of things.

Don Lapre posted: "Check for any unusual folders or programs running. Could be coin mining malware"
I'd done a virus scan so I was a little skeptical of this, but I looked through task manager and found a process called 'mdm' constantly eating about 5% of my CPU. Killed it and the GPU usage went away. Searched the filesystem for it and these turned up nestled in a subfolder of appdata:
oops
guess that's one upside of the 290X being deafeningly loud; if it wasn't it'd probably have taken me a few weeks to notice
Generic Monk fucked around with this message at 16:47 on Apr 25, 2015
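
The manual hunt described above (look through running processes, notice one living in an AppData subfolder) can be roughly sketched in Python. This assumes the third-party `psutil` package is installed, and the list of "watched" folders is purely an assumption for illustration. Plenty of legitimate programs also run from AppData, so treat any hit as a prompt to look closer, not as proof of malware.

```python
from pathlib import PureWindowsPath

# Assumption: folders worth a second look; adjust to taste.
WATCHED_DIRS = {"appdata", "temp"}


def looks_suspicious(exe_path):
    """True if the executable path passes through one of the watched folders."""
    if not exe_path:
        return False
    parts = {part.lower() for part in PureWindowsPath(exe_path).parts}
    return bool(parts & WATCHED_DIRS)


def list_suspicious_processes():
    """Return (pid, name, exe) for running processes located in watched folders."""
    import psutil  # third-party: pip install psutil

    hits = []
    for proc in psutil.process_iter(["pid", "name", "exe"]):
        info = proc.info  # fields are None when access is denied
        if looks_suspicious(info["exe"]):
            hits.append((info["pid"], info["name"], info["exe"]))
    return hits
```

Running `list_suspicious_processes()` from an elevated prompt and eyeballing the result is about as far as this goes; it does not measure GPU load, it only narrows down where to look.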

Generic Monk posted: "I'd done a virus scan so I was a little skeptical of this, but I looked through task manager and found a process called 'mdm' constantly eating about 5% of my CPU. Killed it and the GPU usage went away. Searched the filesystem for it and these turned up nestled in a subfolder of appdata:"
Just pay attention to what linux isos you download and use, I've seen this bundled with some.

Beautiful Ninja posted: "Waiting for Witcher 3 to come out and see what the benchmarks say would be your best bet, perhaps they are being overly cautious with the CPU requirements, or perhaps the game is gonna run like crap if you aren't throwing a Sandy Bridge+ CPU at it"
e: goddamnit of course there's an animecoin

WorldWarWonderful posted: "At what point is it throwing good money after bad?"
As others have said, you really need both a CPU and GPU upgrade. For a CPU I'd get a K-series i5 plus a decent mobo. For a GPU the 960 is not worth it: it's just a much slower card than the 970 due to its very narrow memory bus, and it will lag behind badly with all these new console ports coming out. Unfortunately I'd say that you have to save up and get a CPU, GPU and motherboard; anything else will leave you with bad performance.
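
To put a number on the "narrow memory bus" point, peak memory bandwidth is roughly bus width in bytes times the effective per-pin data rate. The sketch below assumes the commonly published reference specs (128-bit for the 960, 256-bit for the 970, both with roughly 7 Gbps GDDR5); those figures are not from this thread, so check the spec sheet for a specific card.

```python
def peak_bandwidth_gb_s(bus_width_bits, data_rate_gbps):
    """Theoretical peak bandwidth: bytes moved per transfer times transfers per second."""
    return bus_width_bits / 8 * data_rate_gbps


# Assumed reference specs: (bus width in bits, effective data rate in Gbps per pin).
cards = {"GTX 960": (128, 7.0), "GTX 970": (256, 7.0)}

for name, (bus, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gb_s(bus, rate):.0f} GB/s")
# prints:
# GTX 960: 112 GB/s
# GTX 970: 224 GB/s
```

These are theoretical peaks only. On the 970 the last 0.5 GB sits in a slower segment, so the full figure does not apply evenly across all 4 GB.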

I don't know why anyone would consider a 2GB 960 when a 290's around that price after rebates, or you can find a used 280X for like $140-$160 for the extra VRAM. Alternatively just step up to a 970.

You all have sold me on the 970; current rebates and "The Witcher 3" bundle seal the deal. I'll pick one up before going 1440p. I wish it was a little more of a jump from my venerable 7970: it's too bad the planar 20nm node didn't work out.

Judging from my AMD experience you will get a lot of celsius improvement at least. Is it generally a thing that AMD cards run hot or did I just have bad luck with my 7970m? Mobile card sure, but a lot hotter than Nvidia's counterparts at the same level.

Sistergodiva posted: "Judging from my AMD experience you will get a lot of celsius improvement at least."
The desktop 7970/280X has a TDP of 250 W, which, I take it, when compared to the new Nvidia cards makes it a gas guzzler. However, it is a 28nm part so it is nowhere near as dense as Haswell, Broadwell, et al. that get hot very fast. My 7970 comes clocked at 1 GHz and has two big fans mounted on it; it runs between 60-70C when under full load, which seems fine to me. It is a bit loud, which would be my only complaint. Otherwise, great card. I doubt the design would scale down without having serious heat problems.

AMD cards are only "gas guzzlers" in comparison to the 2nd-generation Maxwell parts. A 290X pulls the same amount of power as a 780ti does, or even less in some cases. Nobody derided the first-gen Maxwells as being particularly loud and hot, actually they were considered to be very efficient at the time. It's more that the 900s are a very efficient design, plus the fact that it's been 4 years since AMD's major architecture upgrade and 2 years since a minor refresh. AMD is way, way overdue for an upgrade. Circa 2013 cards don't cut it anymore, and the wait for R300 is just getting stupid. At this point it'll be a toss-up on which comes first, the 390X or the new 980ti cards (a cut-down Titan X from what I remember). If you're letting your competitors put out 2 new flagship/halo-class chips before you can get one out you're not doing real well. I can understand the business sense of trying to burn down your oversupply of R200 products but at some point you have to get your product on the market.
Paul MaudDib fucked around with this message at 02:31 on Apr 26, 2015

I was hoping to avoid a full upgrade but I guess it's due after six years. I suppose my best bet is to nab the 970 now to see me through GTAV and take my time looking and saving up for the rest until next month where I can splurge on the rest. Thanks thread! I can put a machine together but when it comes to picking parts I'm lost.

Paul MaudDib posted: "AMD cards are only "gas guzzlers" in comparison to the 2nd-generation Maxwell parts. A 290X pulls the same amount of power as a 780ti does, or even less in some cases. Nobody derided the first-gen Maxwells as being particularly loud and hot, actually they were considered to be very efficient at the time."
780ti is not Maxwell, it's Kepler which is a generation before. The only Maxwell parts in the 700 series are 745, 750, 750ti and they came way after the Kepler 700 series parts. They are really low TDP just like the 900 series Maxwells. It's confusing. What you're saying is basically right though in that Kepler (760, 770, 780 etc) consumed way more power than Maxwell and was not considered hot or inefficient in its time. Maxwell really upped the ante for power efficiency.

Col.Kiwi posted: "780ti is not Maxwell, it's Kepler which is a generation before. The only Maxwell parts in the 700 series are 745, 750, 750ti and they came way after the Kepler 700 series parts. They are really low TDP just like the 900 series Maxwells. It's confusing."
Whoops, yeah, looks like you're right. That is confusing. AMD's numbering doesn't break down real nicely either. R9 280 is GCN 1.0 but R9 290 is GCN 1.1, R9 285 is GCN 1.2 (and slower than a 280X).
Don Lapre posted: "Maybe AMD will get lucky and someone will find a cryptocurrency the 390 works great for"
AMD has traditionally held the cryptocurrency crown for some architectural reason I don't know. I think it might be that AMD focuses on integer performance while NVIDIA focuses on floating-point performance? Or instructions that AMD implements that NVIDIA doesn't? It's not about memory performance, though, which is the big selling point of the 390X's HBM. The processor will be faster to take advantage of that extra bandwidth and that's where any performance increase would come from.
It would be interesting to see if it did improve performance on some of the "GPU-resistant" coin types like Tenebrix. These work by doing an operation that is simple if you can store the results of the computations as you go but expensive if you have to recompute the whole thing - basically making the cache/memory requirements too large to fit into a GPU's on-chip memory. HBM might help there.
Paul MaudDib fucked around with this message at 02:48 on Apr 26, 2015
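
The "cheap if you can store it, expensive if you have to recompute it" idea behind those GPU-resistant coins can be illustrated with a toy Python sketch. This is not scrypt or any real coin's algorithm, just a simplified stand-in built on SHA-256; the function names and the table size are made up for illustration. One version keeps a lookup table in memory, the other throws it away and rebuilds each entry on demand, counting how many hash calls that costs.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def mix_with_table(seed: bytes, n: int) -> bytes:
    """Memory-heavy version: store n chained hashes, then do n data-dependent lookups."""
    table = []
    x = h(seed)
    for _ in range(n):          # phase 1: fill the table
        table.append(x)
        x = h(x)
    for _ in range(n):          # phase 2: which entry is read depends on x itself
        j = int.from_bytes(x[:8], "little") % n
        x = h(xor(x, table[j]))
    return x


def mix_recompute(seed: bytes, n: int):
    """Memory-light version: same result, but every table entry is rebuilt from the seed."""
    calls = 0

    def entry(j):
        nonlocal calls
        x = h(seed)
        calls += 1
        for _ in range(j):
            x = h(x)
            calls += 1
        return x

    x = entry(n)                # equals x after phase 1 in the table version
    for _ in range(n):
        j = int.from_bytes(x[:8], "little") % n
        x = h(xor(x, entry(j)))
        calls += 1
    return x, calls
```

The table version does about 2n hash calls and needs n stored entries, while the recompute version needs almost no memory but its hash count grows roughly with n squared. That trade-off is why fitting the table into fast memory matters so much, and why more memory bandwidth might plausibly help.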
