|

Rabid Snake posted: That's what I was thinking, I can always use it as a normal 75hz ultrawide if I switch to an Nvidia card. I love my 290 (except for the power draw) so I might stick to AMD if they can release some good competitive cards.

Right now, NV is the only game in town for top performance. AMD's holding out for HBM2 to release their top two chips, but that means they should be getting their 1080Ti equivalent out about the same time as NV starts selling that to consumers rather than data centers. If you don't mind flipping cards, an NV card in the interim may be a good call. I'm personally committed to riding my 290 out unless Polaris is better than expected and it's viable for me on an XR341CK, but I don't mind turning down a few settings and running sub-60 with freesync on for demanding games.

|

|

|

|

|


|

This belongs in the GPU Megathread but it's worth crossposting: https://www.youtube.com/watch?v=WpUX8ZNkn2U Frakkin amazing setting, makes me wish I had a high refresh rate monitor (w/o gsync!)

|

|

|

|

froody guy posted: This belongs in the GPU Megathread but it's worth crossposting

But fast sync matters when your render rate is higher than your refresh rate, so having a high refresh rate display makes fast sync *less* valuable, and makes vsync less of a latency issue.

|

|

|

|

Yeah, I've seen a few people jumping to the conclusion that fast sync obsoletes gsync, but they don't seem to be solving the same problem. From reading pcper, it sounds like there would be judder at lower framerates, and the feature would really be great when you are rendering many more frames than your monitor's refresh (like CS:GO or a map game or whatever).

I'm curious if fast sync works with ULMB; I assume it does, and that might be a (subtle) enhancement for less demanding games where you are pegged at 120hz.

|

|

|

fozzy fosbourne posted: Yeah, I've seen a few people jumping to the conclusion that fast sync obsoletes gsync but they don't seem to be solving the same problem. From reading pcper, it sounds like there would be judder at lower framerates and the feature would really be great when you are rendering many more frames than your monitor's refresh (like CS:GO or a map game or whatever)

The Nvidia dude in the video actually goes into the Gsync/Fast sync relationship. Basically he says that ideally you want both, because Gsync helps at low frame rates and Fast sync does the opposite, helping remove the tearing seen at very high frame rates beyond your monitor's refresh rate. Also, Fast sync should be coming to most Nvidia cards including past ones; it's not a 1080/70 thing, it's just a driver thing.

|

|

|

|

|

Rabid Snake posted: Everyone's been talking about the Asus X34 but how is the freesync version, the XR341CK? I know the biggest knock is 100hz -> 75hz.

I mean, if price is no object, and you have an NVidia card, then by all means go with the X34. But if $400+ is something that matters to you, or you have or think you will have an AMD card, the XR34 looks mighty attractive.

|

|

|

|

AVeryLargeRadish posted: The Nvidia dude in the video actually goes into the Gsync/Fast sync relationship, basically he says that ideally you want both because Gsync helps at low frame rates and Fast sync is the opposite helping remove tearing seen at very high frame rates beyond your monitor's refresh rate.

Interesting. I've read about people capping their fps a few frames below their refresh rate to keep gsync enabled; I wonder if it's still worth doing that in some contexts, since it sounds like fast sync still adds a frame of latency.

|

|

|

|

DrDork posted: I mean, if price is no object, and you have an NVidia card, then by all means go with the X34. But if $400+ is something that matters to you, or you have or think you will have an AMD card, the XR34 looks mighty attractive.

Freesync monitors are usually limited to the low end, but the XR341 is one of the happy exceptions that is really compelling even without freesync turned on. A lot of the reason I got one is that it made it really easy to not worry about vendor lock-in.

|

|

|

|

Even in cases when your GPU rendering is below the monitor's refresh rate, fast sync will show the "last rendered frame" in its entirety, so no tearing, no stuttering. It's not as dynamic as gsync but it sounds like gold to me.

|

|

|

|

froody guy posted: Even in cases when your gpu rendering is below the monitor's refresh rate fast sync will show the "last rendered frame" entirely so no tearing, no stuttering. It's not dynamic as gsync but sounds like gold to me.

In a context where the rendering framerate is lower than the display refresh rate, isn't that pretty much standard (double buffered) vsync, though? If it can't render frames faster than your monitor wants to display them, the system will only be able to buffer one complete frame, and it will have the same limitations with regard to stuttering and latency as vsync. It seems like you would need to sometimes hit 2x your refresh rate in order to be able to do better than vsync (and even then, it seems like it would affect latency but not judder).

e: clarified the context in first sentence

fozzy fosbourne fucked around with this message at 23:59 on May 17, 2016 |

|

|

|

The principle is the same as triple buffering, but by detaching the rendering from the display you don't create latency and don't risk filling up the buffer and creating drops or a queue on the render/GPU side. Basically, with triple buffering, once the buffer is full the rendering stops, which creates latency and lots of overhead. With fast sync the rendering keeps going as fast as it can (as with vsync off), so you'll always have the best and newest "last rendered frame" available to display, and zero talking between the display and the GPU along the lines of "hey, you can start filling up the buffer again, I'm done with the previous frame". Hope that makes sense; the guy in the vid actually answers the same question at a certain point.

froody guy fucked around with this message at 23:26 on May 17, 2016 |

|

|

|

Triple buffering should work like fast sync, but instead usually works like double buffering with an extra linear queue entry. Fast sync actually works, works with DX and not just GL, and doesn't require application awareness of buffer state for doing things that need access to the previous frame. (I'm not sure how TXAA works if previous frames can be dropped, actually, but I can't be bothered to draw a diagram and try to figure it out right now!)

|

|

|

|

Subjunctive posted: Triple buffering should work like fast sync, but instead usually works like double buffering with an extra linear queue entry. Fast sync actually works, works with DX and not just GL, and doesn't require application awareness of buffer state for doing things that need access to the previous frame. (I'm not sure how TXAA works if previous frames can be dropped, actually, but I can't be bothered to draw a diagram and try to figure it out right now!)

Yeah, that makes sense to me. But what I'm not clear on is how you take advantage of that when your rendering can't keep up with the display rate (in comparison to the usual double buffered vsync and not gross DirectX triple buffering :P). It seems that if your display rate is faster than you can render frames, you'll still need to re-display the last displayed frame frequently, and you'd have the same latency and judder from showing a stale frame as double buffered vsync. You can't display a partial frame (tearing) and you aren't able to fill the buffer with more than one complete frame since you can't render them at a rate of 2x the display, so you'd pretty much always have a buffer of 0-1.9 completely drawn frames, right? Maybe it provides advantages at lower frame rates if your rendering pipeline is occasionally able to catch up and fill the buffer, which would never happen with double buffered vsync?

Around this timestamp, the presenter mentions that this is a technology for games that are rendering well in excess of the refresh rate: https://www.youtube.com/watch?v=WpUX8ZNkn2U&t=847s. Also, at the end of the presentation, when comparing this to gsync, he mentions that fast sync is useful when your render rate is a multiple of your display rate, which seems to jibe with my understanding of the technique not being so useful unless you can typically render more than one frame per refresh.

Not trying to be FUDdy here, but I want to make sure I understand this right.

|

|

|

|

fozzy fosbourne posted: Maybe it provides advantages at lower frame rates if your rendering pipeline is occasionally able to catch up and fill the buffer, which would never happen with double buffered vsync?

Fast sync only helps when render >> refresh, by reducing latency for vsync-on (by keeping the renderer from backing up on the back buffer). Gsync only helps when render < refresh (by varying the actual refresh so that it happens when the frame is ready rather than waiting until the next "tick"). Both avoid tearing that you get with vsync-off, in their respective refresh ranges.
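
For anyone who wants to poke at the render >> refresh case with numbers, here is a toy model in Python. It is a rough sketch under stated assumptions, not how the driver works: render time is constant, "latency" means time from the start of rendering a frame to the vblank where it is shown, vsync is plain double buffering where the renderer blocks until the flip, and fast sync is modelled as an unthrottled renderer whose newest finished frame is picked at each vblank. The function names and the 4 ms / 60 Hz figures are made up for illustration.

```python
# Toy comparison of double-buffered vsync and an idealized fast sync.
# All times are integer microseconds to avoid floating point rounding.

def vsync_latencies_us(render_us, refresh_us, frames):
    """Start-of-render to scanout latency for each frame under blocking vsync."""
    latencies = []
    start = 0
    for _ in range(frames):
        finish = start + render_us
        # The finished frame waits for the next vblank at or after `finish`.
        flip = -(-finish // refresh_us) * refresh_us
        latencies.append(flip - start)
        # Assumption: the renderer is blocked until the flip happens.
        start = flip
    return latencies


def fast_sync_latencies_us(render_us, refresh_us, vblanks):
    """Latency of the newest completed frame at each vblank, renderer unthrottled."""
    latencies = []
    for k in range(1, vblanks + 1):
        now = k * refresh_us
        completed = now // render_us          # frames finished by this vblank
        if completed == 0:
            continue                          # nothing to show yet
        newest_start = (completed - 1) * render_us
        latencies.append(now - newest_start)
    return latencies


if __name__ == "__main__":
    REFRESH_US = 16_667   # roughly 60 Hz
    RENDER_US = 4_000     # roughly 250 fps, i.e. render >> refresh
    print("vsync    :", vsync_latencies_us(RENDER_US, REFRESH_US, 5))
    print("fast sync:", fast_sync_latencies_us(RENDER_US, REFRESH_US, 5))
```

In this model the vsync latency is pinned to one full refresh interval, while the fast sync latency stays between one and two render times, which is why the benefit grows as the render rate climbs past the refresh rate. The model says nothing about judder or about what a real driver queue does.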

|

|

|

|

Subjunctive posted: Fast sync only helps when render >> refresh, by reducing latency for vsync-on (by keeping the renderer from backing up on the back buffer). Gsync only helps when render < refresh (by varying the actual refresh so that it happens when the frame is ready rather than waiting until the next "tick").

Ok, yeah, that's my understanding. Still think it will be pretty sweet if you can somehow peg a game at 120+fps and enable ULMB.

e: Also, it seems like you are still better off capping your framerate just under your refresh and using gsync, if it's available, so you don't have the (however small) sampling misses and latency that they referred to in the video presentation. The crazies on blurbusters seem to think the same.

fozzy fosbourne fucked around with this message at 01:20 on May 18, 2016 |

|

|

|

How is the frame rate limit actually implemented in code? Does the driver simply block a present call until the "right" amount of time has passed? That seems like it would still introduce some latency even if the monitor supports *sync.

|

|

|

|

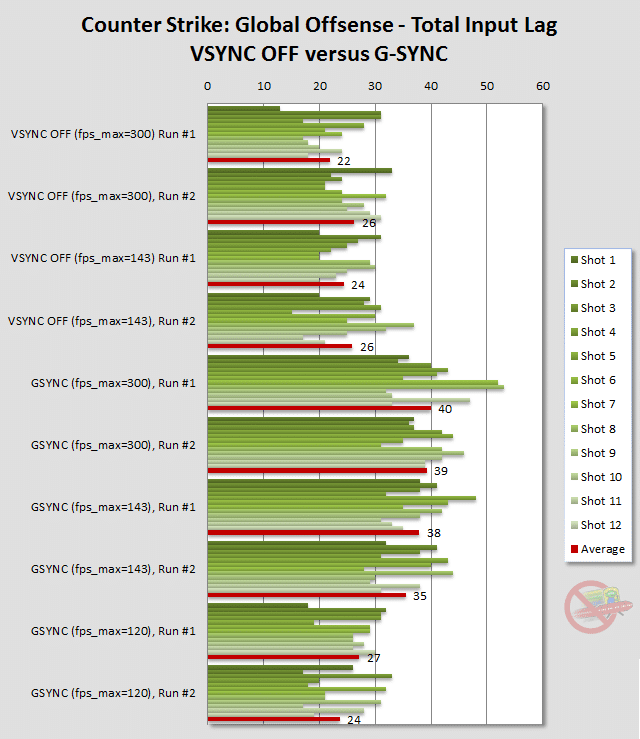

VostokProgram posted: How is the frame rate limit actually implemented in code? Does the driver simply block a present call until the "right" amount of time has passed? That seems like it would still introduce some latency even if the monitor supports *sync.

Not sure, good question. This old Blur Busters article measured display lag with uncapped gsync and capped gsync in CS:GO and found some interesting results, but didn't really have an explanation: http://www.blurbusters.com/gsync/preview2/

|

|

|

|

Found this where Durante describes the GeDoSaTo implementation of frame capping: http://blog.metaclassofnil.com/?p=715

|

|

|

|

fozzy fosbourne posted: Found this where durante describes the gedosato implementation of frame capping: http://blog.metaclassofnil.com/?p=715

That's interesting, and confirms what I was thinking. Frame rate capping seems to have the same input lag problem as vsync when you're rendering too fast, except the interval you wait for is configured by the user. Definitely seems like uncapped frame rate with fast sync is the way to go.

That predictive waiting system is pretty cool. Also makes me wonder if phones and laptops can save some power by putting the GPU in a lower-power state during the waiting period.

e: Good god it's impossible to read the slides on the fast sync video. People should be banned from making slides with anything other than black-white contrast for text.

Yaoi Gagarin fucked around with this message at 05:39 on May 18, 2016 |
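
To make the "block until the right amount of time has passed" idea concrete, here is a minimal frame limiter sketch in Python. It is a generic illustration only: it does not claim to match what any driver or the linked GeDoSaTo article actually does, and the `FrameLimiter` class, its `wait` method, and the injectable `clock`/`sleep` parameters are all hypothetical names chosen for this example.

```python
import time


class FrameLimiter:
    """Naive frame cap: after each frame, sleep until the next deadline."""

    def __init__(self, target_fps, clock=time.perf_counter, sleep=time.sleep):
        self.interval = 1.0 / target_fps
        self.clock = clock      # injectable so the limiter can be tested
        self.sleep = sleep
        self.next_deadline = clock() + self.interval

    def wait(self):
        """Block until the next frame deadline and return the seconds slept."""
        now = self.clock()
        remaining = self.next_deadline - now
        if remaining > 0:
            # The frame finished early: this sleep is the added waiting time.
            self.sleep(remaining)
            self.next_deadline += self.interval
            return remaining
        # The frame ran long: do not try to catch up, just restart the schedule.
        self.next_deadline = now + self.interval
        return 0.0


if __name__ == "__main__":
    limiter = FrameLimiter(target_fps=100)
    for _ in range(3):
        time.sleep(0.002)                 # stand-in for rendering a frame
        print(f"slept {limiter.wait() * 1000:.1f} ms")
```

If the wait happens after the frame is rendered, as here, the finished frame (and the input it was based on) simply ages during the sleep, which is the input lag concern raised above. Moving the wait to before input is sampled is one way to shrink that, at the cost of having to guess how long the next frame will take.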

|

|

|

You might be able to get more consistent frame times with a cap at the cost of that input lag, but I bet that's highly implementation dependent and usually broken in a way that obviates the potential gains.

|

|

|

|

Hi guys, I just started building a new PC and am waiting to buy a 1080GTX for some high-end gaming. While waiting, I'm looking for the best monitor I can get in the 700-900 range. For now I'm torn between the Asus PG27AQ and the Acer Predator XB271HU. IPS and G-sync are a must, and it basically comes down to:

4K (163PPI) with 60hz vs 1440p (108PPI) with 165hz

One of my hobbies is photography and that 163PPI is really, really tempting for some insane-looking pictures. On the other hand, I'm afraid the 1080GTX is going to be just barely not good enough for gaming at 4K according to the latest benchmarks. Or does G-sync really make gaming at <60FPS more acceptable? (I've never used G-sync.)

I'm not even sure 165hz is worth it for non-competitive gaming (read: at or below 60FPS). Is the 4K one more 'future proof', considering I could just game at lower resolutions until appropriate GPUs are out?

Mikojan fucked around with this message at 11:23 on May 18, 2016 |

|

|

Mikojan posted: Hi guys, I just started building a new PC and awaiting to buy a 1080GTX for some high end gaming.

According to everyone I have heard from who has used Gsync, it really helps out a ton at lower frame rates. However, I would probably go with the higher refresh monitor, because from what I have heard the difference in smoothness going from 60Hz to 100Hz+ is huge, and you will see much better frame rates in general. So basically: High refresh + higher frame rates + Gsync > Higher DPI + lower frame rates compensated for by Gsync.

|

|

|

|

|

AVeryLargeRadish posted: According to everyone I have heard who has used Gsync it really helps out a ton at lower frame rates, however I would probably go with the higher refresh monitor because from what I have heard the difference in smoothness going from 60Hz to 100Hz+ is huge and you will see much better frame rates in general so basically: High refresh + higher frame rates + Gsync > Higher DPI + lower frame rates compensated for by Gsync.

After delving a bit further into the DPI topic, I found out that the 1440p monitor viewed from 32 inches has the same apparent sharpness as the 4K screen viewed from 21 inches. I don't see myself sitting anywhere close to 21 inches from a 27 inch screen, so that is off the table.

XB271HU it is then.

Mikojan fucked around with this message at 13:28 on May 18, 2016 |
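
The 32 inch vs 21 inch figure can be sanity-checked with a few lines of Python. This is a back-of-the-envelope sketch: it assumes both panels are 27 inch 16:9, and that "same sharpness" means the same number of pixels per degree of vision, which for small angles scales viewing distance in proportion to PPI. The helper names are made up for the example.

```python
import math


def ppi(width_px, height_px, diagonal_in):
    """Pixels per inch along the panel diagonal."""
    return math.hypot(width_px, height_px) / diagonal_in


def equivalent_distance_in(distance_in, ppi_at_distance, ppi_other):
    """Distance at which the other panel shows the same pixels per degree.

    Small-angle assumption: pixels per degree is proportional to PPI * distance.
    """
    return distance_in * ppi_at_distance / ppi_other


if __name__ == "__main__":
    ppi_1440p = ppi(2560, 1440, 27)
    ppi_4k = ppi(3840, 2160, 27)
    print(f"1440p: {ppi_1440p:.1f} PPI, 4K: {ppi_4k:.1f} PPI")
    match = equivalent_distance_in(21, ppi_4k, ppi_1440p)
    print(f"4K at 21 in is matched by 1440p at {match:.1f} in")
```

The PPI ratio between the two panels is exactly 1.5, so 21 inches on the 4K screen corresponds to 31.5 inches on the 1440p one, in line with the roughly 32 inches quoted above.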

|

|

|

How is the Dell 2412M? I'm looking at work monitors but have to order from CDW.

|

|

|

PRADA SLUT posted: How is the Dell 2412M? I'm looking at work monitors but have to order from CDW.

Get the Dell U2415 instead. It is the newer and better version of the U2412M and gets rid of some problems that monitor had, like replacing the nasty screen-door anti-glare coating with a much better one that is almost unnoticeable. I have the U2415 myself and it is a really nice monitor.

|

|

|

|

|

What's a 23-24" 16x9 or 16x10 IPS display that has component input and isn't terribly old? Something newer than a Dell 2408WFP or HP LP2475w?

Shaocaholica fucked around with this message at 00:16 on May 19, 2016 |

|

|

|

If you need component for some ungodly reason, buy a separate converter and buy whatever monitor you want. Component is a dead standard and it isn't going to be found on anything approaching a modern monitor.

|

|

|

|

bull3964 posted: If you need component for some ungodly reason, buy a separate converter and buy whatever monitor you want.

Yeah I know it's dead. Just trying to find out what was the last run with them.

|

|

|

|

So I did some cable testing last night and I confirmed it. Over the 2m DP cable that came with my X34 I can overclock to 100hz without a problem. I just set it in the monitor OSD and it switches; I left it sitting like that for half an hour and there wasn't a flicker or anything. Great. So the monitor can do it, and my X34 enjoying 100Hz is a grand thing.

However, with the 5m or even the 10m DP cable it doesn't work. When I make the change in the OSD the monitor will turn itself off, or perhaps display the new mode for a second and then flicker off. The 10m cable cost me $100! I just like having the PC across the room next to the TV, cabled back to a desk where I do my desk-related stuff.

Either it's the length, and pushing the bandwidth of 3440x1440@100Hz over anything longer than 2m isn't possible, or it's the cables, but they are brand-name cables and I paid lots for them. Anyone got some experience with this?

|

|

|

|

Tony Montana posted: So I did some cable testing last night and I confirmed it.

Yeah, I had a similar experience trying to daisy chain, because it seemed like I was only getting two lanes over the 10 foot cable. It works fine normally, but you'd need all four lanes for >60Hz adventures. AMD's drivers show the lane count; no idea if you can see it in NV's drivers. I've heard secondhand about cables that can do all four lanes at a 10 foot length, but I don't remember which ones off the top of my head, and they may not actually work or may be the exception.
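
A quick bit of arithmetic shows why dropping to two lanes would line up with "60Hz works, 100Hz doesn't". This Python sketch is an estimate under assumptions that are not stated in the thread: the link runs at DisplayPort 1.2 HBR2 speed (5.4 Gbit/s per lane raw, 4.32 Gbit/s per lane after 8b/10b coding), colour is 24 bits per pixel, and blanking overhead is ignored, so real requirements are somewhat higher. The function names are made up.

```python
# Assumption: DisplayPort 1.2 HBR2, 5.4 Gbit/s raw per lane, 8b/10b coded.
HBR2_EFFECTIVE_GBPS_PER_LANE = 5.4 * 8 / 10   # 4.32 Gbit/s of payload per lane


def pixel_data_rate_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Payload rate for the visible pixels only (blanking is ignored)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def lanes_needed(rate_gbps, per_lane_gbps=HBR2_EFFECTIVE_GBPS_PER_LANE):
    """Smallest DisplayPort lane count (1, 2 or 4) that carries the rate."""
    for lanes in (1, 2, 4):
        if rate_gbps <= lanes * per_lane_gbps:
            return lanes
    return None   # does not fit even on four lanes at this link rate


if __name__ == "__main__":
    for hz in (60, 100):
        rate = pixel_data_rate_gbps(3440, 1440, hz)
        print(f"3440x1440@{hz}Hz: {rate:.2f} Gbit/s, lanes: {lanes_needed(rate)}")
```

Under these assumptions 3440x1440 at 60Hz needs about 7.1 Gbit/s and fits in two lanes (8.64 Gbit/s), while 100Hz needs about 11.9 Gbit/s and only fits with all four lanes. A long cable that can only hold two lanes, or only a lower link rate, would therefore show exactly the symptom described.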

|

|

|

|

Right. So 10 feet is about 3 meters. That's a short cable! My 5 meter cable is about 16 feet and my 10m cable is over 30 feet! The point being, my cables are much longer than the 10 foot cable you've noticed this behavior with. Sounds like cable length is the cause: you're talking about perhaps getting the data over a 3m cable, but it's certainly not going to work over a 5 or 10m cable.

Time to move the whole bloody lounge room around so my PC can be 2 meters from the monitor. That's ok, it's worth it for that glorious monitor.

|

|

|

|

What's the major difference between the XB270HU and XB271HU at this point, that the latter is newer and overclocks to 165hz? I've been looking around more lately in my impatience for the 1070/1080 to go on sale and have seen more refurbished XB270HU monitors for around $450.

|

|

|

|

Evil Fluffy posted: What's the major difference between the XB270HU and XB271HU at this point, that the latter is newer and overclocks to 165hz? I've been looking around more lately in my impatience for the 1070/1080 to go on sale and have seen more refurbished XB270HU monitors for around $450.

Just get the refurb!

|

|

|

|

Everyone seems to be waiting for the 1080. It's going to be really expensive, as the top tier always is. Isn't it going to be another incremental upgrade? Why not buy a 980GTX? I have had one for something like 18 months now and it's great.

|

|

|

|

Tony Montana posted: Everyone seems to be waiting for the 1080. It's going to be really expensive, as the top tier always is. Isn't it going to be another incremental upgrade? Why not buy a 980GTX? I have had one for something like 18 months now and it's great.

In a thread filled with people buying/thinking of buying/lusting over $1300+ monitors, it seems odd to turn around and question spending an extra $200-$300 on a GPU.

|

|

|

|

Tony Montana posted: Why not buy a 980GTX? I have had one for something like 18 months now and it's great.

Because the 1070 is faster and cheaper.

|

|

|

|

DrDork posted: In a thread filled with people buying/thinking of buying/lusting over $1300+ monitors, it seems odd to turn around and question spending an extra $200-$300 on a GPU.

To be fair, monitors have a longer life cycle compared to GPUs.

|

|

|

|

Etrips posted: To be fair, monitors have a longer life cycle compared to GPUs.

People buy this sort of stuff because they have the money to do so and want to. Much like the X34 offers things that no other monitor does (hence its price), so too does the 1080.

|

|

|

|

|


|

Evil Fluffy posted: What's the major difference between the XB270HU and XB271HU at this point, that the latter is newer and overclocks to 165hz? I've been looking around more lately in my impatience for the 1070/1080 to go on sale and have seen more refurbished XB270HU monitors for around $450.

A lot of XB270HUs will OC to 165; it all depends on the manufacturing date.

|

|

|