
The ACLU has such a thankless job. If those idiots looked in a mirror they might have noticed that stopping surveillance on twitter does protect the civil rights of white assholes too.


| # ? May 9, 2024 19:49 |


Qwertycoatl posted: The ACLU has such a thankless job. If those idiots looked in a mirror they might have noticed that stopping surveillance on twitter does protect the civil rights of white assholes too.

I've said it before and I'll say it again: Rev is really lucky the internet left has never taken a really strong stance on the evils of pissing on electric fences, because his dick would be on fire within minutes.


The Vosgian Beast posted: https://twitter.com/St_Rev/status/809594205383364608

I had an argument with some rando right-wing Twitter guy about the ACLU a few weeks ago, and he was doing the same sort of "WELL WHERE WERE YOU FOR THE LAST 8 YEARS" thing, so I asked if he had any particular thing he thought the ACLU was ignoring or wasn't doing. The particular tweet that triggered the argument was about police shootings, so he said that the ACLU wasn't going after police unions because "they're on the same team and have the same agenda." I pointed out that... actually the ACLU was going after police unions, for the exact reasons this guy wanted them to, with the most recent lawsuit filed a few months ago. He said that was nice but it doesn't change the fact that they "have an agenda," whatever that means. So basically the ACLU gets shat on because nobody cares about (or reports on) 99% of the stuff they do, and then when people actually pay attention to them they get mad that they're not doing anything.


Not sure how it's the ACLU's fault, but he's definitely right to blame Twitter for not acting sooner. Also not sure what would lead anyone to believe the ACLU doesn't go after cops. That's just weird.


DeusExMachinima posted: Also not sure what would lead anyone to believe the ACLU doesn't go after cops. That's just weird.

Not paying attention and drawing their conclusions of what the ACLU does entirely based on which things Fox News reports them doing, I'd assume. I also kinda think that guy I was arguing with might have thought "oh, they're both 'unions', they must be in cahoots", since he made several mentions of them being "on the same team" and "having the same agenda".


The Vosgian Beast posted: https://twitter.com/St_Rev/status/809594205383364608


SJW was rendered meaningless long before gamergate


Pomp posted: SJW was rendered meaningless long before gamergate

Yeah, I remember getting uncomfortable about using it due to its creeping scope back in like mid-2012, I think; gamergate just sort of sealed the deal.


There certainly was a time when "social justice warrior" was lefty-speak for "you're not wrong, you're just an rear end in a top hat," but that was quite a few years ago at this point.


http://slavojzyzzek.tumblr.com/post/155534767503/academicianzex-pochowek-isnt-4chan I think he seriously believes this


Fututor Magnus posted: http://slavojzyzzek.tumblr.com/post/155534767503/academicianzex-pochowek-isnt-4chan

quote: You'll need to be on Tumblr to see this particular blog


GlassElephant posted: Want to paste it here?

"I don't use 4chan but reliable sources have informed me that it's p much a LGBT support group"


Anil Dasharez0ne posted: "I don't use 4chan but reliable sources have informed me that it's p much a LGBT support group"

This is kinda sorta true actually. 4chan has a weirdly big LGBT component; most of my friends at least used to post there, and a bunch of my SO's friends still post there despite it being unapologetically terrible. If I had to throw out a guess, I'd say it has something to do with 4chan's love of trap porn and in-denial gay or trans kids gravitating towards that as a safe way of expressing that side of themselves. My SO says they call it "egg mode".


Though now that I'm thinking about it, most of the people I know who still post there are the kind of white male privilege-havin' gays that would pretty much be upper-middle-class libertarian Republicans if the Republicans weren't so terrified of them. Can't really speak for the trans population, but the few I've talked to had some very bad opinions too, so


Bad opinions and the prioritization of self-interest above all else are cancers that afflict all people equally.


neongrey posted: Bad opinions and the prioritization of self-interest above all else are cancers that afflict all people equally.

#allcancersmatter


4chan and kin are the most LGBT-friendly of the right-wing rear end in a top hat parts of the web... which is not really a high bar to clear.


Anecdotal evidence: I have known one transgender woman who used 4chan a lot, and she internalized a whole lotta horseshit she constantly used to beat herself and others up with.


The Vosgian Beast posted: Anecdotal evidence: I have known one transgender woman who used 4chan a lot, and she internalized a whole lotta horseshit she constantly used to beat herself and others up with.

Only one?


I was on 4chan a lot during high school and now I'm gay as hell


Glancing at 4chan's LGBT board, I spotted a politics thread.

quote: post your age, gender, sexuality, political view, and something about yourself

quote: >19

quote: >19

quote: >21

quote: >18

quote: >18

quote: >19

quote: >28

quote: >19


GlassElephant posted: Glancing at 4chan's LGBT board I spotted a politics thread.

This just confirms to me that libertarians are the worst people on the planet, regardless of age, gender and sexuality


Michael Anissimov posted: Will we be terrified by Moral Progress, once we think about it clearheadedly, as well? I think Azathoth isn't the only sanity shattering Outer God waiting for us. With this series of essays I will endeavor to do precisely that. I would urge you to consider doing lighter drugs than the strange geometries we shall explore, it may, after all, permanently damage your current morality. Safety is not assured. I will begin in shallow waters, examining why you might want to hinder primordial terrors even when they seem to be doing something good.

Just contemplate all we've lost with MoreRight falling off the net.


divabot posted: Just contemplate all we've lost with MoreRight falling off the net.

Tell me more about this MoreRight business.


quote: >I am physically Tumblr but internally 4chan, life is confusing.

The first worldest of first world problems


Cavelcade posted: Tell me more about this MoreRight business.

The blog of Michael Anissimov, who went from Yudkowsky to Moldbug and murdered acres of electronic trees in his quest to reconcile the transhumanist singularitarian future with sending humanity back to feudalism. He started MoreRight as a neoreactionary group blog spun off from LessWrong; even other neoreactionaries couldn't stand him and all left. The site disappeared some time in the last coupla months.


My favorite More Righter was Samo "The first Qin Emperor found a great solution to the problem of destabilizing intellectuals" Burja


https://twitter.com/ClarkHat/status/818134192554905604 https://twitter.com/ClarkHat/status/818155367075082240


So, what's Eliezer Yudkowsky been up to!  um Well, there's Arbital! His exciting new With the end of all things approaching fast per the above, he's doing his bit to SAVE HUMANITY HOW HE CAN: writing incomprehensible deep LW theology on Arbital, as listed at the bottom of https://arbital.com/p/EliezerYudkowsky/


divabot posted: So, what's Eliezer Yudkowsky been up to!

This is insane. The AI gold rush (which is a pretty good term) is not doing anything that feeds into his preferred disaster scenario.

He believes in what he calls the AI FOOM, where an intelligent system is given the task of updating itself to be more intelligent, recursively.

He believes, for whatever reason, that intelligence can be quantified and optimized for, and that the g factor is a real quantity rather than a statistical artifact. These aren't majority views, but they're not implausible. Or, at least, I'm the wrong kind of expert to say that they are implausible. I'm an AI guy, not a human intelligence guy. https://en.wikipedia.org/wiki/G_factor_(psychometrics)

So, let's generously take those as true. He believes that optimizing g is possible. He's never addressed it directly, but a necessary piece of his belief in FOOM is that the upper bound on g is high enough to count as superintelligence (or else that there is no upper bound). As far as I know, there's no research on these points, because the field of AI doesn't really include these sorts of questions, but let's pretend that these are true as well, and that we live in Ray Kurzweil's future. Keeping score, we're at two minority beliefs and two completely unresearched propositions accepted on faith.

He believes that, while optimizing its own g factor, the intelligent system in question will have a high rate of return on improvements: that one unit of increased g factor will unlock cascading insights that contribute to the development of more than one additional unit of increased g. This is argued here: http://lesswrong.com/lw/we/recursive_selfimprovement/, and you'll find there's not a shred of argument there. It's bald assertion.
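To put a number on what "more than one additional unit" would have to mean, here's a toy model, made up purely for illustration and not taken from the linked post: pretend each improvement of size g unlocks a follow-up improvement of size return_rate × g. Below a return rate of 1 the gains form a geometric series that levels off at first_gain / (1 - return_rate); only above 1 do they run away. The function and parameter names are hypothetical.

```python
def total_gain(steps: int, return_rate: float, first_gain: float = 1.0) -> float:
    """Toy model (an assumption, purely illustrative): every improvement of
    size g unlocks a follow-up improvement of size return_rate * g."""
    total = 0.0
    gain = first_gain
    for _ in range(steps):
        total += gain          # bank the current improvement
        gain *= return_rate    # size of the improvement it unlocks next
    return total


if __name__ == "__main__":
    for rate in (0.5, 0.9, 1.0, 1.5):
        print(f"return_rate={rate}: total gain after 50 steps = {total_gain(50, rate):.4g}")
```

The whole FOOM scenario lives in the return_rate > 1 branch; nothing in the toy tells you which branch reality is in, which is exactly the part that gets asserted.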
He also baldly asserts that the "search space" (which is not the right term, but remember, he's a high school dropout autodidact with no formal AI education) for intelligence is smooth, with no "resource overhangs". I'm pretty sure he means to make the point that he expects to find relatively few local minima*. That's just unknowable, but while working on much, much simpler optimizations, I have found absolutely no end of local minima. There are almost no complex problems without them. I would not be inclined to believe this even if he had already done it; that's how unlikely it is. So now we're at two minority views, two unresearched but cogently phrased propositions, one bald assertion, and one deeply, deeply unlikely belief.

This doesn't carry us all the way to his nightmare scenario; there's a lot more stuff in the house of cards that makes up his FOOM beliefs, but that's enough, really, to get the point of my next paragraph.

We are not studying any of those things. The AI gold rush is almost entirely about deep neural networks in new and weird variations, or, if he's really up on the AI field, GANs, which are deep neural networks in new and weird variations plus a cute new training mechanism. (As I write this I realize he's almost certainly terrified of GANs for a misguided reason. I'll come back to that if someone wants to hear it.) None of this validates any of his beliefs.

Deep neural networks are entirely inscrutable. No one anywhere can tell you why a deep neural network does what it does, so there's no reason to suspect that they will spontaneously evolve, out of nowhere, capabilities that have proven to be beyond the very best AI experts in the world. Deep neural nets also have severely limited inputs and outputs. They are not capable of learning anything about new types of data or giving themselves new capabilities; the architecture doesn't support it at all.
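How easy it is to get stuck shows up even in one dimension. This is a minimal sketch with a made-up loss, f(x) = x^4 - 3x^2 + x, chosen only because it has two minima: a deeper one near x ≈ -1.30 and a shallower one near x ≈ 1.13. Plain gradient descent just rolls downhill from wherever it starts, so the starting point alone decides which minimum you end up in. The function, learning rate, and step count are illustrative assumptions.

```python
def loss(x: float) -> float:
    # Made-up loss with two minima, used only for illustration.
    return x**4 - 3 * x**2 + x


def grad(x: float) -> float:
    # Derivative of loss(): 4x^3 - 6x + 1.
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x0: float, lr: float = 0.01, steps: int = 2000) -> float:
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)  # step downhill along the slope
    return x


if __name__ == "__main__":
    for start in (-2.0, 2.0):
        end = gradient_descent(start)
        print(f"start={start:+.1f} -> x={end:+.3f}, loss={loss(end):.3f}")
```

Starting at +2.0 settles in the shallow minimum and never finds the deeper one a couple of units away.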
The sort of incremental increase in capabilities that Yudkowsky needs for his FOOM belief to come true does not exist, and really just can't be brought about.

I'm not even halfway done with the reasons that neural nets can't be the Yudkowsky bogeyman. They train too slowly for a FOOM. They don't use any of the Bayes stuff that's so essential to his other beliefs. They don't have any mechanism for incremental learning outside of a complete retraining**. They can't yet represent sufficiently complex structures to write code, let alone complex code, let alone code beyond the best AI programmers in the world. I'll stop here. It makes no goddamn sense.

Maybe his beliefs have evolved since the AI FOOM days. I don't know. I've been impressed with how some of his other beliefs have changed to reflect reality. I'll go read what he has on Arbital and let y'all know if I'm way off base here.

*minima on a loss function, maxima on "intelligence"; by habit I use the former, but it might be more intuitive to think in terms of the quantity being optimized. If so, read this as "maxima".

**someone might call me on this one; I should say instead that everyone I've ever worked with has done complete retraining, and if a better mechanism existed they'd probably be using it, but I can't say that there's definitely *not* such a mechanism. The nature of the architecture doesn't make it impossible.

SolTerrasa has a new favorite as of 10:15 on Jan 9, 2017


He does realise that Person of Interest was not a documentary, right?


Quote not edit, whoops


Doctor Spaceman posted: He does realise that Person of Interest was not a documentary, right?

Science fiction is more about how we feel about the present than what will happen in the future, just like everything Yudkowsky says.


SolTerrasa posted: We are not studying any of those things. The AI gold rush is almost entirely about deep neural networks in new and weird variations, or, if he's really up on the AI field, GANs, which are deep neural networks in new and weird variations plus a cute new training mechanism. (As I write this I realize he's almost certainly terrified of GANs for a misguided reason. I'll come back to that if someone wants to hear it.) None of this validates any of his beliefs.

I'd enjoy an effortpost about GANs, and perhaps also what distinguishes a "deep" neural network from the stuff that has been around since (I think?) the 1990s. If you're up for it. (I have a selfish motive for wanting that effortpost: I might have to implement neural networks in an FPGA at some undefined time in the future, so I know I'm going to have to get more familiar with the field.)


Fututor Magnus posted: https://twitter.com/ClarkHat/status/818134192554905604

I dream of being able to magically read people's scores on an IQ test the way these gentlemen can


gently caress, I don't know why I even try to read this poo poo anymore. https://arbital.com/p/real_world/ This is Yudkowsky's attempt to address what I'd regard as the core problem of his beliefs.

quote: Acting in the real world is an advanced agent property and would likely follow from the advanced agent property of Artificial General Intelligence: the ability to learn new domains would lead to the ability to act through modalities covering many different kinds of real-world options.

"By definition God is omnipotent, so it has all capabilities, including the capability of acting in the real world. Checkmate."

And of course "advanced agent property" links to ten thousand words about what AI God will be able to do. Don't read them; I did and it was a huge waste of time. They're not even funny-wrong; it's like reading a cyberpunk worldbuilding forum, or the character options chapter in Eclipse Phase.


BobHoward posted: I'd enjoy an effortpost about GANs, and perhaps also what distinguishes a "deep" neural network from the stuff that has been around since (I think?) the 1990s. If you're up for it.

Are you sure you're not actually Bob Howard?


SolTerrasa posted: This is insane. The AI gold rush (which is a pretty good term) is not doing anything that feeds into his preferred disaster scenario.

I think Yudkowsky thinks all AI research is on things like Cyc. He doesn't realize that things like Cyc are an extreme minority in AI research these days, and have been since the last AI winter.

eschaton has a new favorite as of 08:23 on Jan 9, 2017


Pope Guilty posted: Are you sure you're not actually Bob Howard?

It's for something a hell of a lot less scary than a basilisk gun. Promise!


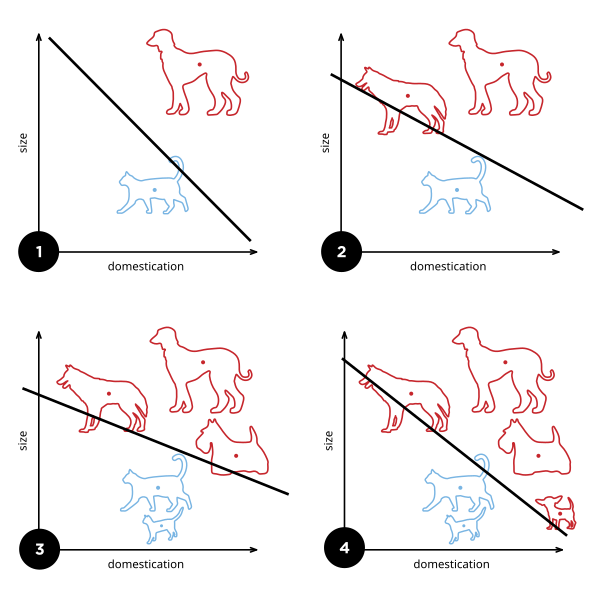

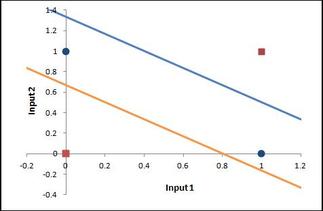

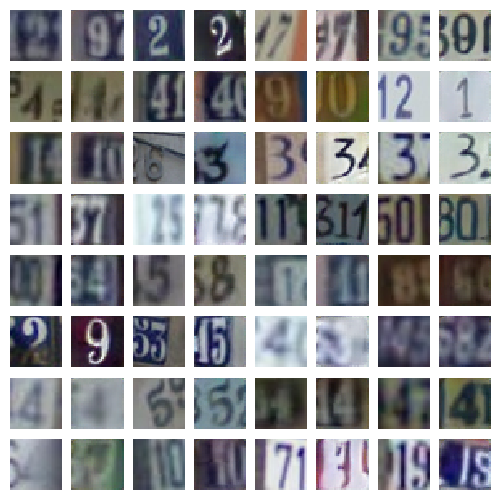

BobHoward posted: I'd enjoy an effortpost about GANs, and perhaps also what distinguishes a "deep" neural network from the stuff that has been around since (I think?) the 1990s. If you're up for it.

Sure! It's not too hard, really.

What makes a deep neural network deep?

So, the very first neural "network" was back in the 1950s, this thing called the perceptron. It has some number of inputs, and one output. It's wired up internally so that it can draw a single line (a flat hyperplane, if it has more than two inputs) through its input space, outputting 1 for points on one side and 0 for points on the other. Wikipedia has a good graphic for explaining what that means.

Funny story, AI hype is about as old as the term AI itself; the perceptron was heralded by the NYT as "the embryo of an electronic computer that [the Navy] expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence." No poo poo, they thought a linear combination of inputs with a threshold was going to be conscious of its own existence.

Anyway, in the 1960s they discovered that a single perceptron was pretty limited in what it could represent; the canonical example of unrepresentable data is anything that requires an XOR-like function.

See, no way to draw a single line separating the red dots from the blue ones. All of this is not very exciting anymore, but it was really interesting in 1960, and there was a big fight over who proved it first, standard academic stuff.

So, what you do is, you hook up three perceptrons. Two of them draw the lines in this picture (from a totally different website; the XOR thing is super common).

The third one combines the outputs of the other two. That's now a neural network, in that there's a network of these neurons. It happens that two-layer networks can solve the XOR problem. Actually, it's since been proven that two-layer networks can represent almost anything; they are "universal approximators". Any continuous function you care to state (over a bounded range of inputs), a two-layer network with enough neurons can approximate to arbitrary fidelity.
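For the FPGA-curious, here's a minimal plain-Python sketch of the XOR story. The first half uses the classic perceptron learning rule: it converges on AND, which one line can separate, and never converges on XOR. The second half hand-wires the three-perceptron network described above; the particular weights (an OR unit and a NAND unit feeding an AND unit) are just one choice that works, picked by hand rather than learned, and all the names are hypothetical.

```python
from itertools import product


def step(z: float) -> int:
    # Threshold unit: fire (1) only if the weighted sum is positive.
    return 1 if z > 0 else 0


def train_perceptron(samples, epochs: int = 100, lr: float = 1.0):
    """Classic perceptron learning rule on 2-input data.
    Returns (weights, bias, converged)."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), target in samples:
            err = target - step(w[0] * x1 + w[1] * x2 + b)
            if err:
                mistakes += 1
                # Nudge the line toward classifying this point correctly.
                w[0] += lr * err * x1
                w[1] += lr * err * x2
                b += lr * err
        if mistakes == 0:
            return w, b, True  # a full pass with no mistakes: a separating line exists
    return w, b, False


INPUTS = list(product((0, 1), repeat=2))
AND = [((a, c), a & c) for a, c in INPUTS]
XOR = [((a, c), a ^ c) for a, c in INPUTS]


def xor_net(x1: int, x2: int) -> int:
    # Two perceptrons each draw one line...
    h_or = step(x1 + x2 - 0.5)      # on unless both inputs are 0
    h_nand = step(-x1 - x2 + 1.5)   # on unless both inputs are 1
    # ...and a third combines their outputs (logical AND).
    return step(h_or + h_nand - 1.5)


if __name__ == "__main__":
    print("AND converged:", train_perceptron(AND)[2])
    print("XOR converged:", train_perceptron(XOR)[2])
    print("xor_net:", [xor_net(a, c) for a, c in INPUTS])
```

No number of epochs rescues the XOR case: a mistake-free pass would mean a single line separates the points, and none does.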
A deep neural network is a neural network that has a lot more than 2 layers. 15- to 30-layer networks are pretty common, and those are definitely "deep". There's no hard line, but 3 layers is probably not "deep", and 10 probably is. The reason deep neural networks weren't popular earlier is that it is a lot harder, computationally, to train a 15-layer network than a 2-layer one.

And you might reasonably ask "why would anyone build a deep neural network if 2-layer networks can approximate any function?" The answer is, deep neural networks are usually better at it. For some reason, and literally no one in the world can tell you why, deep neural networks are way more effective at complex tasks, especially image recognition. By "more effective" I mean that they need way fewer neurons to perform at the same level. Again, no one knows why for sure. There are some guesses, but nothing solid or definitive.

What are GANs?

Three parts: "Generative" "Adversarial" "Networks". Networks we've covered; GANs are deep neural networks.

"Generative": There are two different types of neural networks. There are discriminative models, which do things like decide whether a particular picture is a picture of a fish. More formally, they directly learn the function P(x|y), where x is the class ("fish") and y is the input data (the picture). P(x|y), then, means the probability that the image, y, belongs to class x ("fish"). The way those are set up, they have input neurons for the image, and a list of outputs for the different classes they know about, each of which gets P(x|y) output to it. So you might have P(fish|image) = 0.8, P(pig|image) = 0.01, P(bird|image) = 0.02, etc. etc.

Second, there are generative models, which learn P(x,y). That's the joint probability of the image and the label. So they have inputs for the image, but they also have an input for the class, and a single output, for P(x,y).
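A minimal sketch of what the discriminative setup looks like in code, with "deep" being nothing more than the length of the layer list. Everything here is an assumption for illustration: the layer sizes, the ReLU hidden units, the random untrained weights, the three class names, and all the helper names. Since nothing is trained, the probabilities it prints are meaningless; the point is the shape: pixels in, one P(class|image) per known class out, summing to 1 via a softmax.

```python
import math
import random


def make_network(layer_sizes, seed=0):
    """Random, untrained weights; one (weights, biases) pair per layer."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def affine(inputs, weights, biases):
    # Weighted sum of the inputs for each neuron in the layer.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(network, pixels, class_names):
    h = pixels
    for weights, biases in network[:-1]:
        h = [max(0.0, v) for v in affine(h, weights, biases)]  # ReLU hidden layers
    weights, biases = network[-1]
    return dict(zip(class_names, softmax(affine(h, weights, biases))))


if __name__ == "__main__":
    rng = random.Random(1)
    image = [rng.random() for _ in range(64)]  # stand-in for an 8x8 grayscale picture
    classes = ["fish", "pig", "bird"]
    shallow = make_network([64, 32, 3])            # 2 layers
    deep = make_network([64] + [32] * 14 + [3])    # 15 layers
    for name, net in (("shallow", shallow), ("deep", deep)):
        print(name, len(net), "layers:", classify(net, image, classes))
```

Going from "shallow" to "deep" is one line; the hard part, as described above, is training all those layers.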
Since P(x|y) = P(x,y) / P(y), and P(y) is just P(x,y) summed over every class x, you can convert this to P(x|y) using Bayes' rule, so generative models can almost always do everything that discriminative models can. Sometimes they're harder to work with, and they usually train slower and require more data, in my experience, but YMMV; I don't know of any formal findings on that subject. They can also do some other things, though: you can use them for creating new (x,y) pairs, that is, new images and labels. If you provide "x", then you can use them to make new y, so you can use a generative model to make a new image of a fish. Usually you don't care about this capability, so you won't bother with the extra overhead of building a generative model.

So, the third part is the fun one: "Adversarial". What you do is, you train two neural networks. One of them is the discriminative one, which is usually your actual goal. You usually want to determine if a particular picture belongs to some class; I'll stick with the fish example. So you train one network which is supposed to be able to tell if an image in a training set is an image of a fish. Then you train a generative model, which is supposed to learn to produce images of fish. At some number of iterations of training (some people start at 0, but I personally wouldn't), you start adding in the generative model's "fish" as negative examples in the training set for the discriminative model. So the discriminative model gets punished for thinking that the generative model's fish is a real fish. And then you teach the generative model how to read the "wiring" of the discriminative model (in practice, it's trained on the gradients of the discriminative model's verdict), so it can see how to make a more effective forgery. You let these things run against each other for a while and bang, you have a really good discriminative model and a really, really good generative model.

Here are some pictures generated by the generative model in a GAN.
Fake "pictures of house numbers"

Fake "celebrity faces"

Honestly those are pretty persuasive; I looked at a few of them and thought "huh wait isn't that... nope, never mind".

Let me know if that made sense.

I guess it's pretty obvious why that might terrify Yudkowsky, right? I mean, this is all conjecture on my part since he didn't come out and say what he thought was so scary, but it seems to hold together, and it's the best I can do absent actually talking to him.

I realized while I was thinking about it earlier today that his whole ideology folds back in on itself. That's why he's got 5,000 links in every post he writes: they give the appearance of well-supported opinions. He probably thinks he has well-supported opinions, but if you try to hold the whole thing in your head at once, you see it's circular. The essential circular argument at the distilled core of the whole thing is "AI research is dangerous because the impending superintelligence will allow 3^^^3 units of pain to be distributed to every living human", and "Superintelligence is impending because of the dangerous irresponsibility of AI research". Usually you have to step through 3 or 4 intervening articles to find the loop (or bare assertion), but my sense is that there's always one. If I could ask Yudkowsky for anything at all, it would be a single, self-contained argument, in less than 3,000 words, for why superintelligence is imminent.

So, starting from those two premises (which of course I believe to be false), that superintelligence is imminent and that AI research is dangerous, you can see why he'd be scared of GANs; it's exactly the sort of introspective AI that he is normally terrified will run away and become God.
It looks like self-improvement, if you squint a little bit, and it is recursive, and if you add those two things together along with a baseline belief that something is going to become superintelligent and end the world, and that some AI technique is going to be responsible, you could pretty reasonably come to the conclusion that it's going to be this thing, this time. And Yudkowsky already believes that GOFAI ("Good Old Fashioned AI"; expert systems and decision theory and so on) techniques are not going to create God (source: http://lesswrong.com/lw/vv/logical_or_connectionist_ai/), so GANs are my best guess about his best guess for the end of the world. They were invented in 2014, which is when he said that this whole situation got started ("The actual disaster started in 2014-2015"), so that fits too.

I think I already covered why it's not going to be a deep neural net (or any neural net) which ends the world, unless you count the brain of the guy with the nuclear codes.

I've been reading too much Yudkowsky; I'm going to go drink. If you have any questions or want any followups, I'll get back to you tomorrow.
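Two pieces of the GAN explanation, sketched in plain Python under stated assumptions. First, the joint-to-conditional conversion is just normalizing over the classes: P(x|y) = P(x,y) / Σ_x' P(x',y), with x the class and y the image. Second, the adversarial part boils down to two opposing losses. The versions below are the usual binary cross-entropy ones from the standard GAN setup, where the discriminator's output D(image) is "probability this is real", plus the common non-saturating variant of the generator loss; that formulation, the example probabilities, and the function names are my assumptions, not anything spelled out in the post. There are no actual networks or gradient steps here.

```python
import math


def conditional_from_joint(joint):
    """joint maps each class x to P(x, y) for ONE fixed image y.
    Returns P(x | y) = P(x, y) / P(y), where P(y) = sum over classes of P(x, y)."""
    p_y = sum(joint.values())
    return {x: p / p_y for x, p in joint.items()}


EPS = 1e-12  # keeps log() away from log(0)


def discriminator_loss(d_real, d_fake):
    """Low when D says 'real' (near 1) for real images and 'fake' (near 0) for forgeries."""
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when D is fooled into calling forgeries real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # Hypothetical joint probabilities for one picture.
    print(conditional_from_joint({"fish": 0.008, "pig": 0.0001, "bird": 0.0002}))
    # A discriminator that is winning vs. one that is being fooled.
    print(discriminator_loss([0.9, 0.8], [0.1, 0.2]), generator_loss([0.1, 0.2]))
    print(discriminator_loss([0.6, 0.5], [0.7, 0.6]), generator_loss([0.7, 0.6]))
```

During training the two losses pull in opposite directions: discriminator updates push the first one down, generator updates push the second one down, and the generator's update is computed by backpropagating through the discriminator, which is the "reading the wiring" part.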


This poster loves police brutality, but only when its against minorities!
