|

klafbang posted: 90% of a computer science education is learning the good patterns for building software.

Yeah I kinda realize that every time I learn something, and it makes me really feel like I should have studied software engineering instead of attending art school. Not that I didn't enjoy it but man it'd have been so much more useful.

TooMuchAbstraction posted: One of my past jobs was cleaning up a program written primarily by biology graduate students. One pattern I saw periodically was:

These stories are great.

Elentor fucked around with this message at 23:13 on Jul 20, 2018

|

|

|

|


|

So uh, I just read about this and how a bunch of YouTube videos are basically stagings of surreal titles procedurally generated by bots trying to maximize exposure. https://www.theguardian.com/technology/2018/jun/17/peppa-pig-youtube-weird-algorithms-automated-content We live in a procgen dystopia.

|

|

|

|

TooMuchAbstraction posted: One of my past jobs was cleaning up a program written primarily by biology graduate students. One pattern I saw periodically was:

In a similar vein: in one of my first programming projects a couple years ago I had to create long arrays of points. I wanted to start with an undimensioned array and have a single function I could call to add points to it because it would look neater in the main function. My solution to this? code:

|

|

|

|

Since my last post mentioned word parsing, I figure this might be amusing. Hi REPLACE_THIS. https://twitter.com/SmashRiot/status/1022518849848180736

|

|

|

|

Guys, I'll be going on a hiatus for health reasons. Without delving into boring details, if you've followed my last LP you should know that this, being chronic, happens from time to time and was one of the main reasons the FFVII LP took 4 years. I work on these things on and off, partially because I need to do other jobs to make money for my treatment. I can go for a while without posting updates but I thought this time I'd be straightforward about it.

Now that TSID's code is pretty much 100% done, I need to do art assets for TSID and I can't produce them at an adequate pace. I've been posting a few chapters about general stuff because I like writing, but it's very hard for me right now to proceed on the main project because of the art assets needed. I feel like Yoshihiro Togashi and his back problems at this point. I apologize for that.

In the meantime, until I get better, I'll be working on less graphic-intensive stuff in my spare time. If there's any interest I can post some of the assorted stuff I do, but otherwise I'll be on a bit of a hiatus to take some care of myself.

|

|

|

|

we just want you to be happy  whenever that includes LPing i will read

|

|

|

|

oystertoadfish posted:we just want you to be happy

|

|

|

|

Thanks guys, that's really heartwarming.  I did a quick write-up of the Spaceship Generator. You guys got the unabridged version: https://imgur.com/a/Hv1VwTY

|

|

|

|

It's been a fantastic ride seeing the development as it happened. You've been fantastic at spinning this into something as entertaining as it is informative, and we all hope for the best in your future. Get better soon.

|

|

|

|

Go take care of yourself first and post LP updates if it helps you with motivation or you have something really cool you want to share or something.

|

|

|

|

In a plot twist that I could only classify as a really weird case of inception, this happened: I posted the ship generator post mortem on Reddit. It got very nice feedback. https://www.reddit.com/r/Unity3D/comments/936f8q/procedural_spaceship_generation_in_unity/

And when someone asked for more details, I linked to this thread, and they actually gilded the post with the link to this thread. So yeah, my first gold on Reddit was for linking to Something Awful. If you're by any chance reading this, thanks! We live in a most incredible world.

|

|

|

|

I just discovered this a few weeks ago and it's been awesome reading through it. I'll be keeping an eye on this bookmark.

oystertoadfish posted: we just want you to be happy

|

|

|

|

hey girl you up posted: Go take care of yourself first and post LP updates if it helps you with motivation or you have something really cool you want to share or something.

Seconded. I love reading this thread. You're lucky that you can do the music and gfx yourself, otherwise you'd get a whole bunch of "idea guys" following you around and not delivering assets in time.

|

|

|

|

There is good stuff here. It is well worth waiting for.

|

|

|

|

Dear Diary,

Today I made a general, modular save & load system for game state that works on two completely different projects. That was one of the things still missing in my code. Following that I made a nice save file structure. Both TSID and the RPG worked very nicely. I hadn't made a file structure in a while.

I'm thinking of releasing a graphicless/minimalistic RPG as a techdemo until I can get back to doing proper artwork for TSID, which is pretty much what I need right now. Either way, after these past weeks of studying coding, I'd like to thank my S/O for how much she's been teaching me lately about modular programming.

I'll be posting a few more chapters in the upcoming days. I'd like to say it'll be this weekend, but it always ends up being Monday instead, so "someday in the near future".

|

|

|

|

I finally caught up! I'd initially only been reading + occasionally commenting on your project.log thread, but now I have slowly made my way through this Let's Dev too. And I wanted to say this has been a fantastic journey thus far! Thanks for the interesting and entertaining essays / war stories you've already written out, and I hope you're doing well, Elentor

|

|

|

|

Just caught up. What a great thread! Elentor, keep up the amazing work and I hope you're feeling well.

|

|

|

|

I'm cooking up the next chapters. The next two chapters will be the conclusion of my posts about rendering. I'm pausing a bit to play WoW with my friends because I haven't played in a while. I'm curious about something - Would you guys be mad if I turned this thread into a more general gamedev thread? I'm uh, almost done with something ready to be released that is unrelated to the Shmup, using the Shmup technology.

|

|

|

|

|

|

|

|

I would be happy to read more gamedev!

|

|

|

|

biosterous posted: I would be happy to read more gamedev!

Who among us wouldn't? I know I'd love to read more!

|

|

|

|

Elentor posted:I'm curious about something - Would you guys be mad if I turned this thread into a more general gamedev thread? I'm uh, almost done with something ready to be released that is unrelated to the Shmup, using the Shmup technology.

|

|

|

|

I mean, if you keep writing things I'll keep reading them.

|

|

|

|

Funktor posted: I mean, if you keep writing things I'll keep reading them.

Basically this

|

|

|

|

There's a shitload of stuff to write because new stuff keeps happening. The next chapter is almost ready and I already know what I'll do about the next ones. Basically: NVIDIA is about to release new video cards. It has a strong emphasis on Ray-Tracing. Path of Exile's about to implement a bunch of cool stuff. We have a lot of work to do and talk about.

|

|

|

|

Ohhhh nice Ray Tracing is really cool!

|

|

|

|

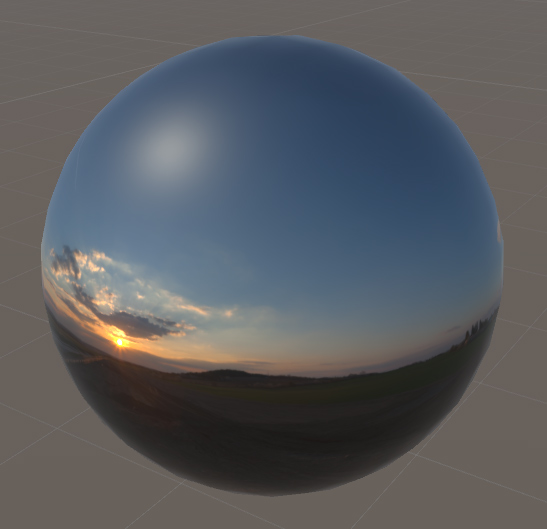

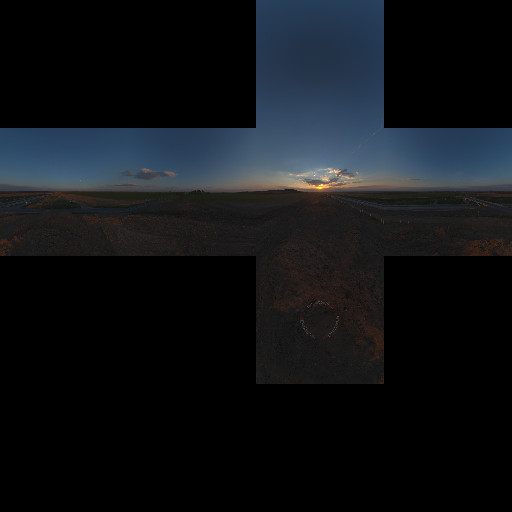

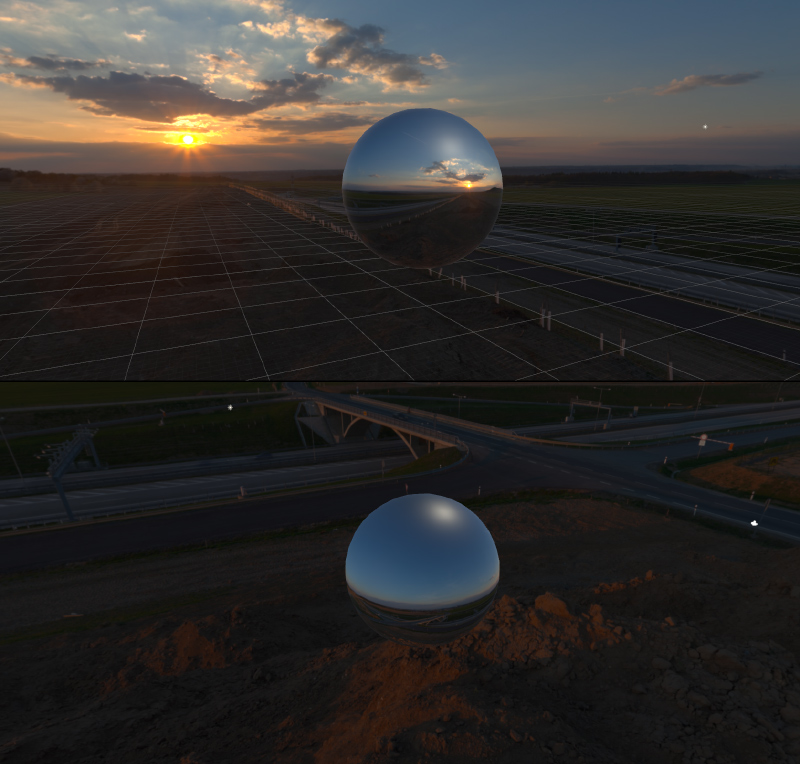

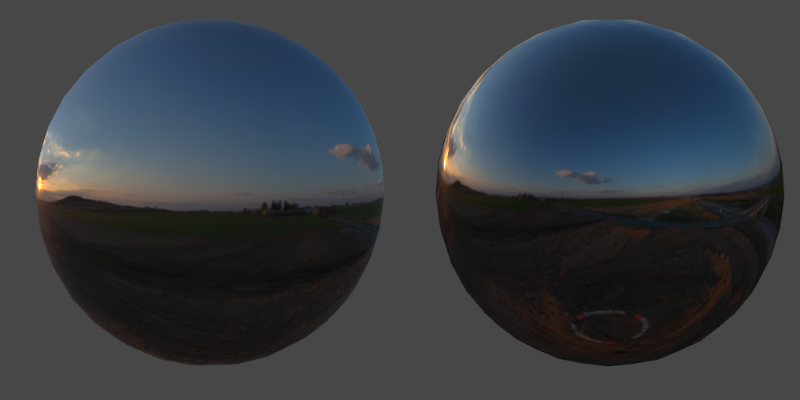

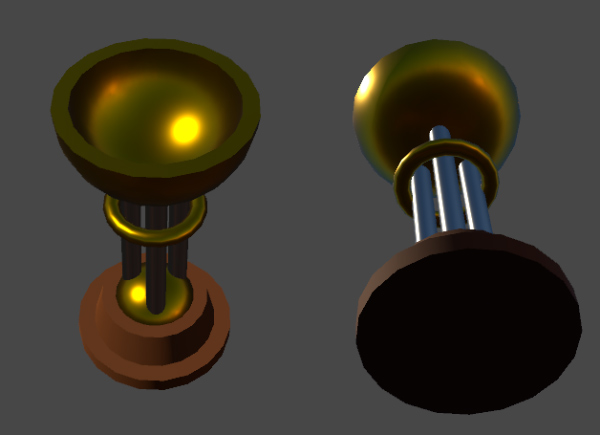

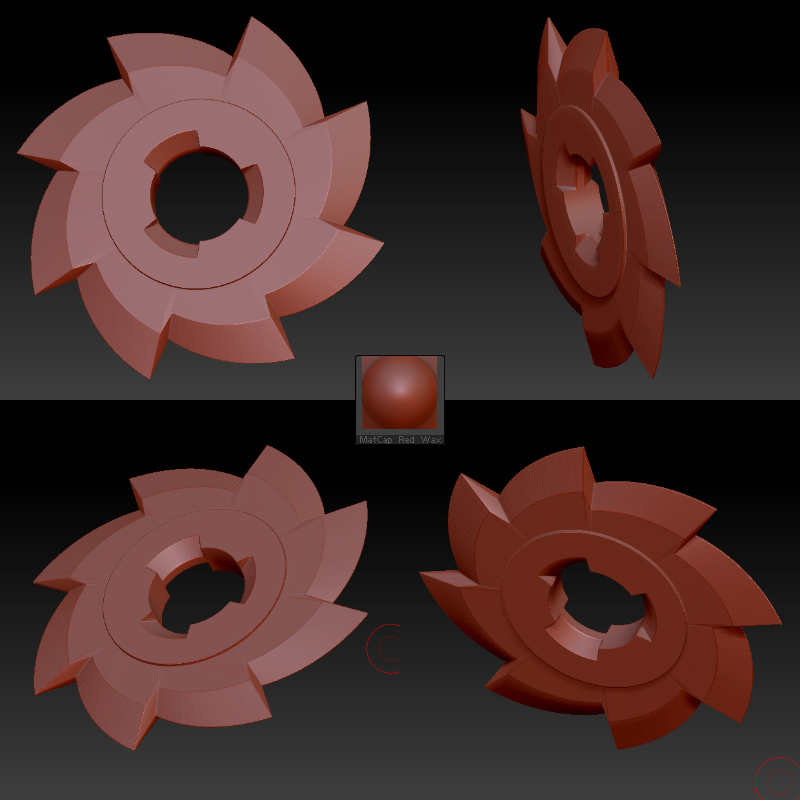

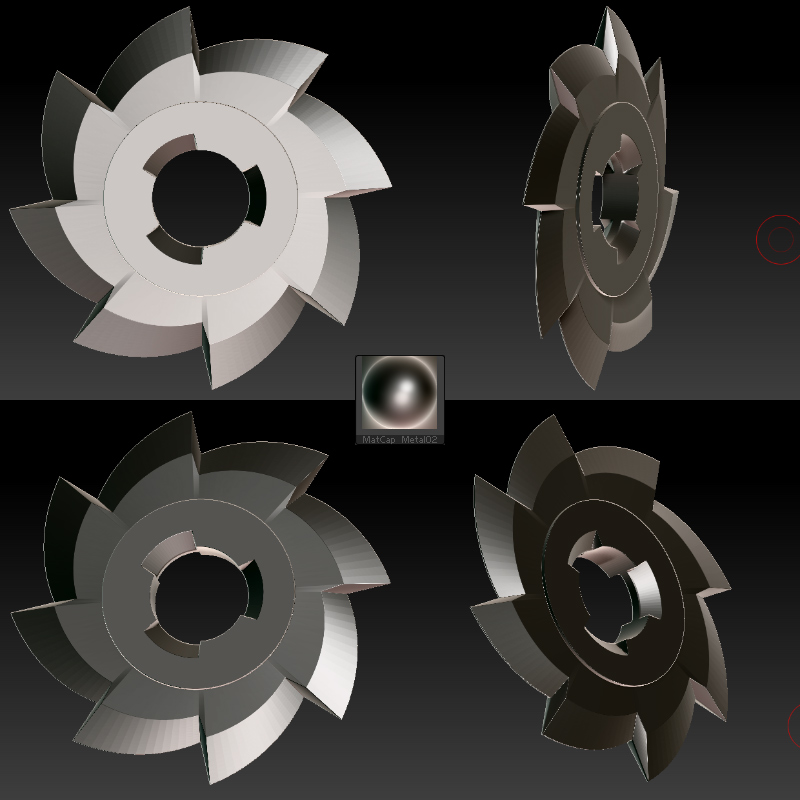

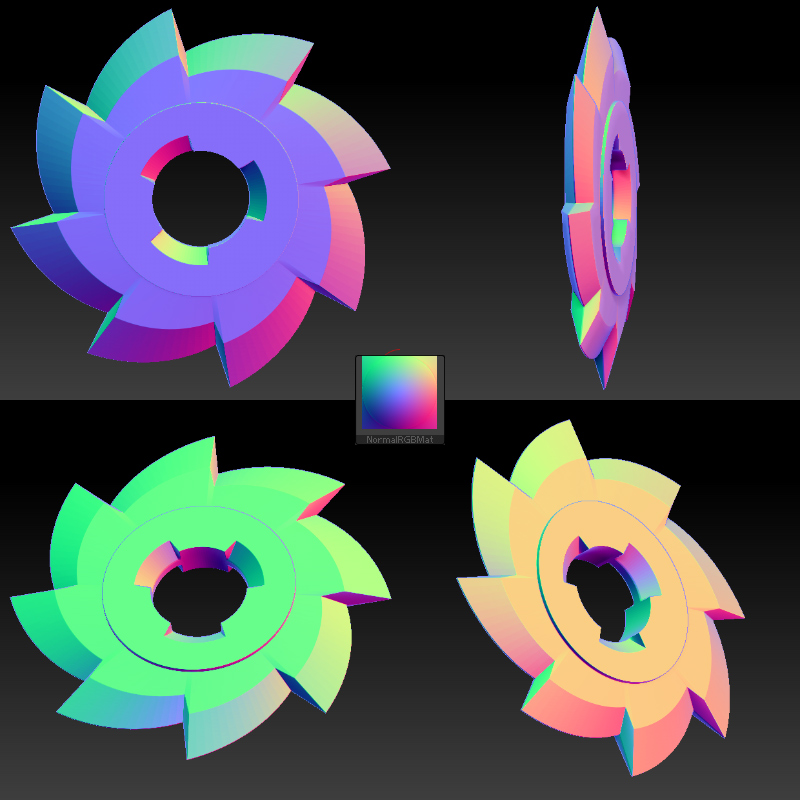

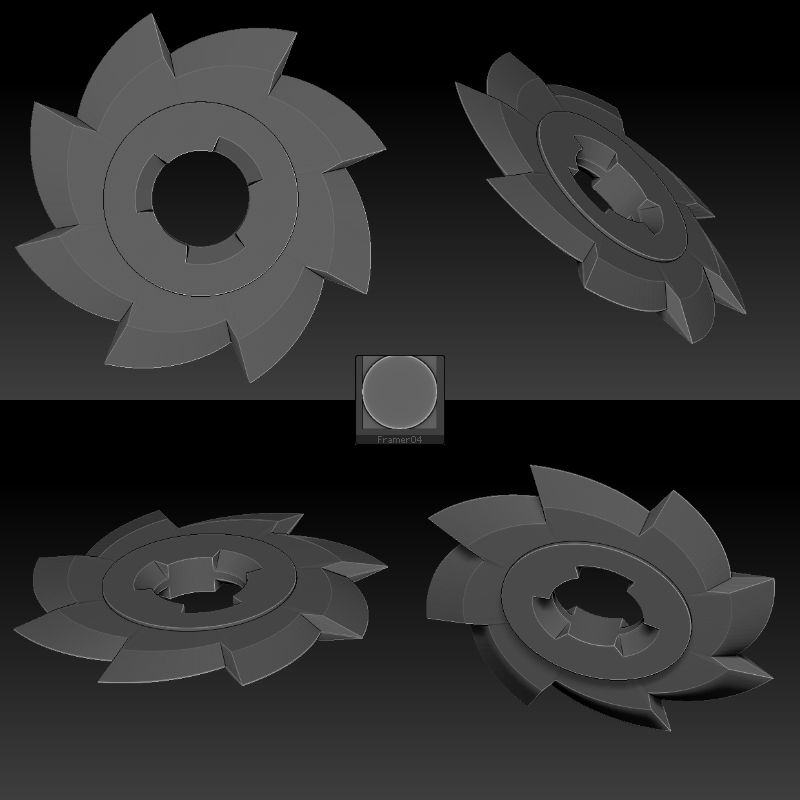

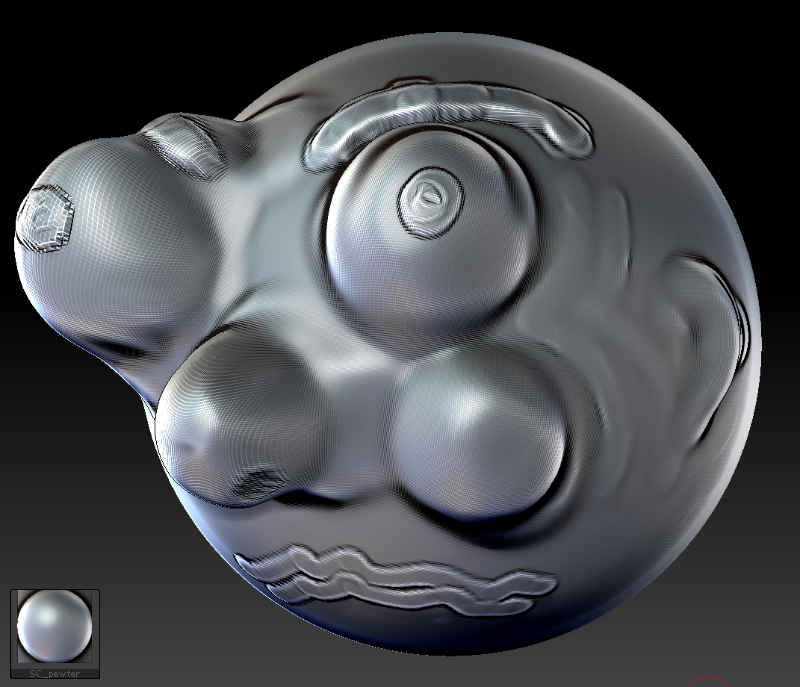

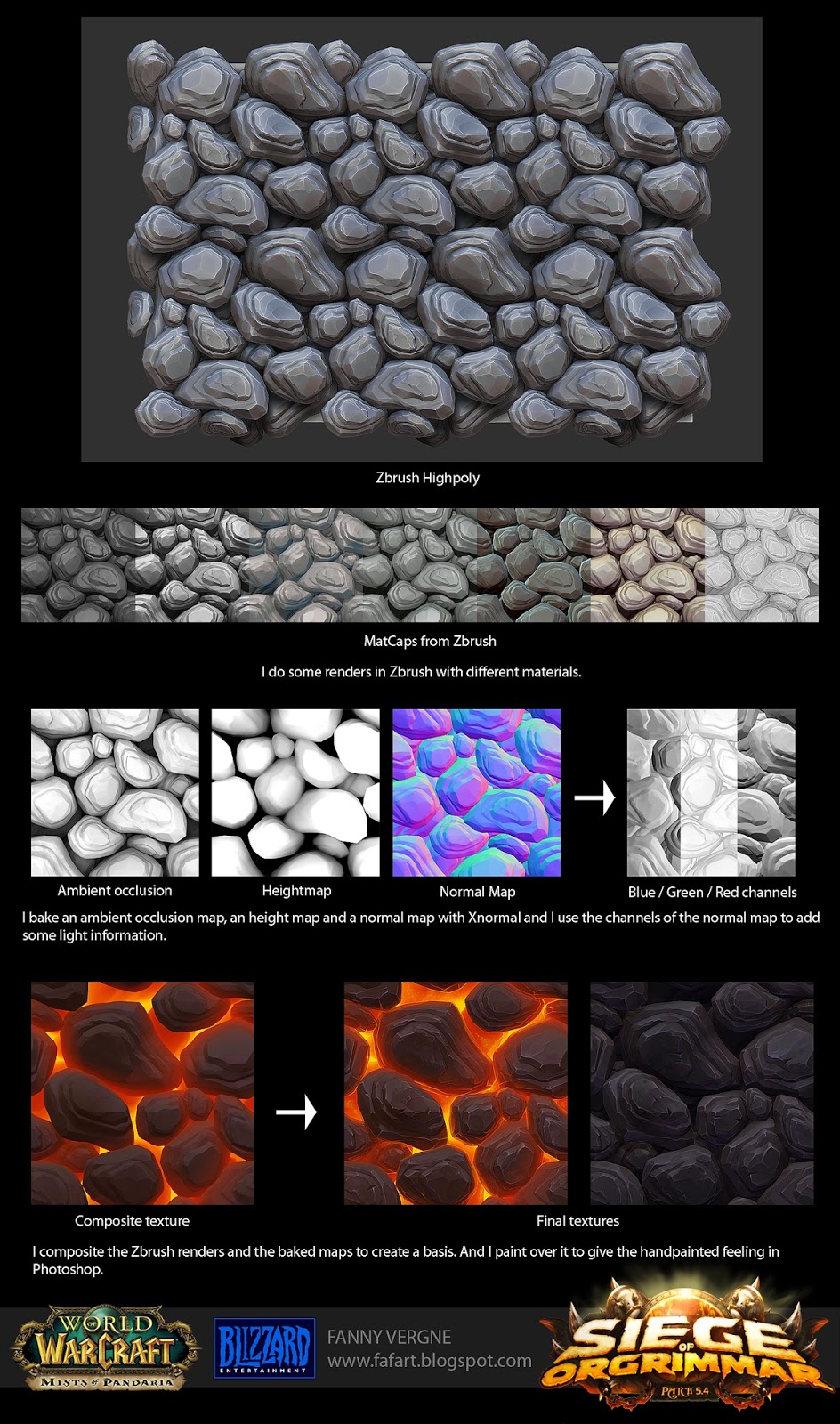

Chapter 35 - Real-Time Rendering, Part IV

For the next step toward understanding modern rendering, we need to get to Reflection Maps.

Reflection Maps

Not all lighting models come from simulating a light ray coming from a direction and bouncing off your object. One of the most important variations comes in the form of Reflection Maps. These are static images representing the surroundings. Instead of reflecting a mathematical representation of a light source, the object looks up that map and reflects the corresponding pixel.

Here's a classic example: Mario's cup (Mario Kart 64): https://www.youtube.com/watch?v=9yowTB-lGcQ

You can see that the cup does not have a static texture. Instead, it seems to be reflecting something.

There are actually two ways to emulate reflection. Let's talk about the more important one first, wherein you emulate an entire 3D environment, in which case you'll use cubemaps or skyboxes.

Let me give you an example. There's this fantastic site called noemotion which provides panoramas, such as this:

This Panorama is in a cylindrical projection. It's distorted so it can be mapped to a sphere, and the sphere would look like this:

However, the point is not to have a sphere with that texture. The point is to have a sphere reflecting that texture. Modern engines support panoramas, but in the old days we'd have them mapped in a different way: as cubes. You might have heard the term cubemap or skybox. So, let's visualize it. Luckily, there's a filter that converts from panorama to skyboxes, made by yours truly.

Either way, we need to view that cube from the inside out. So most engines will allow you to use a cubemap/skybox/panorama projected onto an infinitely-distant cube/sphere/cylinder.

And yeah, the sphere is off on purpose. The sphere is not reflecting anything right now, it just has the same texture applied to it.

This is how most old 3D games deal with backgrounds. And a lot of modern games as well. Remember Super Mario 64? Skyboxes. I mean, look at this video, at the timestamp. That city is infinitely distant. In this same video, you can pay attention at the end - the clouds in the far background are also infinitely distant.

Either way, since they're being reflected by a simple mathematical formula, we can use them to create a reflection. So here's how a sphere would look reflecting the background:

You can see the difference between a flat texture and the reflection here. The reflection (with its corresponding distortion) is the image on the right:

Or a cup!

There's also a far more simplistic form of reflection. These are called matcaps, for material capture. Matcaps are reflections, but the reflection is always moving with the camera, so the source of reflection is always the same. What this means is that the matcap comes in the form of a 2D image shaped like a sphere. Because our vision and our monitor are 2D, and everything we see needs to be collapsed into a plane, barring a mirror behind an object we can never see more than 180 degrees of it. So a matcap is how we'd project, say, a photograph of a sphere onto any other object, mapping the half sphere onto that object based on the angle relative to you.

Example of Matcaps from ZBrush. ZBrush is famous for having great default Matcaps to use:

Matcaps are a fun thing to mess around with. Let me show you a few more from ZBrush. First, the default wax material:

A simple, basic metal:

This is a funny one. It's basically a spherical normal map. Like the one we created in a previous chapter:

And here's a very special one:

This one is really interesting. It's called Framer and it accentuates the edges in such a way that it makes it easy to see the details of what you're modeling. Keep this one in mind for later.

And now onto today's chapter's eldritch abomination.

The spherical structure and round features should make it easy for you to see how the reflection always matches the one in the matcap:

Because Matcaps look so good, since they're not trying too hard to mimic any physical feature, they can, funnily enough, be used in the production of textures even if the matcap itself is not used in the game. Fanny Vergne, who did textures for Blizzard, uses them extensively in the production of textures:

Speaking of WoW, WoW uses this kind of system a lot for the actual in-game reflection of objects. It's a very fast way to cheat having shiny cartoony reflections. Every time you see metal plate in WoW reflecting stuff, odds are that it's just a simple matcap reflection.

Reflections in most games, however, are made with skyboxes/cubemaps/panoramas. Some are extremely important - for example, a key component of tunnels is the tunnel lights reflecting on your car as you pass by, so having a dynamic change of reflection skybox is important in car games.

The process is this: you can't have real-time reflection, so you pre-render and bake a few local reflections, put those images into "probes" that are spread around the scene, and your object renders the closest one to it. Take a look at the lights reflecting on top of the car in these two videos:

https://youtu.be/sSBXBcqwFgo?t=37
https://youtu.be/0XU8Lt3yn5A?t=95

Over time, the importance of having good, dynamic reflection probes evolved.

NEXT TIME: The evolution of reflection maps! How they're everywhere today!

Elentor fucked around with this message at 12:39 on Aug 26, 2018
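For anyone who wants to poke at the lookups described in the chapter, here is a rough Python sketch of the three ideas: reflecting a view ray into a panorama, reading a matcap from a view-space normal, and picking the closest reflection probe. None of this comes from the chapter's actual shaders. The function names, the axis convention (+Y up, camera looking along -Z in view space) and the assumption that the panorama is a latitude/longitude (equirectangular) image are all illustrative choices.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def reflect(incident, normal):
    """Mirror the incident (camera-to-surface) direction about the surface normal.

    Standard mirror formula: r = i - 2 * (n . i) * n, with n normalized.
    """
    n = normalize(normal)
    d = sum(i * c for i, c in zip(incident, n))
    return tuple(i - 2.0 * d * c for i, c in zip(incident, n))


def panorama_uv(direction):
    """Map a world-space direction to (u, v) in a latitude/longitude panorama.

    Assumed convention: +Y is up, +Z maps to the image center, v = 1 is straight up.
    """
    x, y, z = normalize(direction)
    u = 0.5 + math.atan2(x, z) / (2.0 * math.pi)
    v = 0.5 + math.asin(max(-1.0, min(1.0, y))) / math.pi
    return (u, v)


def matcap_uv(view_space_normal):
    """Map a view-space normal to (u, v) on the matcap's sphere image.

    Only x and y are used, so the lookup moves with the camera, which is
    why a matcap always shows the same "surroundings".
    """
    x, y, _ = normalize(view_space_normal)
    return (x * 0.5 + 0.5, y * 0.5 + 0.5)


def nearest_probe(position, probes):
    """Return the name of the baked probe closest to the object position.

    `probes` is a hypothetical dict of probe name -> (x, y, z) position.
    """
    def squared_distance(name):
        return sum((p - q) ** 2 for p, q in zip(position, probes[name]))

    return min(probes, key=squared_distance)
```

A typical use would be `panorama_uv(reflect(view_direction, surface_normal))` for the skybox-style reflection, versus `matcap_uv(normal_in_view_space)` for the matcap. A real engine would also blend between probes rather than snapping to the nearest one, which this sketch does not attempt.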

|

|

|

I updated the OP. I also sorted chapters per subject. Figured I'd be doing that sooner rather than later.

I consider the Ship Generator finished (for now) as I have implemented everything I wanted in it - the next natural step would be to commercialize it, which would require a massive overhaul to make it user-friendly or at least have a GUI, and I don't intend to do that anytime soon because it'd be a massive effort at this point. It'd be cool to commercialize it somehow but I'm still thinking of how to do that.

Anyway, I want the freedom to write some chapters about game tech as I've been doing lately, so I rewrote the OP with that in mind, as well as made some sorted lists as I mentioned. Thank you guys for your support.

Last, I am (hoping) to get a few things out by the year's end and maybe get a site up and running with my demos in webgl and playable stuff on your browser. We'll see how it goes.

|

|

|

|

I love these deep dives into graphical techniques. This is a great thread.

|

|

|

|

I saw this tweet/paper and thought of the thread: https://twitter.com/McFunkypants/status/1036298407197925376

|

|

|

|

ProfessorProf posted: I love these deep dives into graphical techniques. This is a great thread.

Thanks!

hey girl you up posted: I saw this tweet/paper and thought of the thread:

Thank you so, so much for sharing that. This is a very extensive paper! It made me realize I could have written (or still write) about my own analysis on the subject because it differs from their approach fundamentally (although both lead to the same place), but that also makes reading their analysis very, very interesting. I wish I could contact the author, but the paper is almost a decade old now.

|

|

|

|

Hey guys, guess who got some early access to The World of Warcraft Diary  Since I've been using WoW a lot as a reference to talk about engine pipelines, a few of the upcoming posts will be about that, with the writer's license. This is pretty exciting! Also, if you're a WoW/MMO player, or not, but you have any questions, this might be a nice time to shoot for them. John's been busy but said he might take some time to answer them, so no promises, but either way I got some very exciting stuff to go on.

|

|

|

|

Elentor posted:I wish I could contact the author, but the paper is almost a decade old now. That doesn't mean you can't contact the author. There's always a decent chance they might just love to talk about that kind of thing, if you can track them down. Some academics might want to put that kind of past behind them or may have written a paper about a topic just for a degree requirement, but a lot of them have enough of a passion to carry it forward. In this case the author's name pulls up an author of a book on Amazon mostly, but specifying the University that published this paper shows this gal who went to that university at the right time, in the degree program that that paper was published under, so she'd be my guess.

|

|

|

|

Nighthand posted: That doesn't mean you can't contact the author. There's always a decent chance they might just love to talk about that kind of thing, if you can track them down. Some academics might want to put that kind of past behind them or may have written a paper about a topic just for a degree requirement, but a lot of them have enough of a passion to carry it forward.

Hey, thanks. I find the way she did greebles particularly interesting.

Right now there are unsolved problems for the ship generator which don't need to be solved for ships to be generated, but which prevent deeper parametric stuff that would allow for simpler solutions. These are almost entirely related to parametric creation of geometry or, at the very least, geometric adaptation. I don't intend to work on it (specifically, the generator) for another year or so, but when I get back I want to add stuff like dynamic deformation matrices (so that a wing can be dynamically bent!), dynamic NURBS connections and tubes to allow for, well, tubes, cables and the like, parametric boolean operations, and some way to use the current knowledge of symmetry to create arcs that can connect separate places like in Star Trek Vulcan Ships. Currently this can be solved in a much easier way but I don't like its limitations. And of course a code cleanup.

I've been receiving requests left and right from companies to buy or license the generator, especially after the Reddit post.

|

|

|

|

Hey that's awesome, congrats!

|

|

|

|

These are not game-related, but these are two relatively new videos that I find very interesting:

This AI Performs Super Resolution in Less Than a Second - https://www.youtube.com/watch?v=HvH0b9K_Iro

With NVIDIA using Machine-Learning-based algorithms for its new Anti-Aliasing method, and MI-specific processors being produced, we can expect Neural Networks to make their way more and more towards gaming over the next decade. I would say that technology-wise, Deep Learning is going to be the next "big thing". Again, this is just my guess. But I'd say the overall major leaps in real-time 3D standards would be:

Standard Forward Rendering -> Universal Languages for Pixel Shaders -> Physically-Based Rendering and Pipelines -> Possibly Deep Learning used in Rendering

Also, going back to Page 1 of the thread, wherein we all complained about Quaternions:

What are quaternions, and how do you visualize them? A story of four dimensions. - https://www.youtube.com/watch?v=d4EgbgTm0Bg

|

|

|

|

Elentor posted: With NVIDIA using Machine-Learning-based algorithms for its new Anti-Aliasing method, and MI-specific processors being produced, we can expect Neural Networks to make their way more and more towards gaming over the next decade. I would say that technology-wise, Deep Learning is going to be the next "big thing". Again, this is just my guess. But I'd say the overall major leaps in real-time 3D standards would be:

This sort of thing is already used in real time applications, if not games specifically. If you run a newer Photoshop then Adobe are using various neural networks to do all sorts of image processing tasks, I believe including at least some of their upscaling filters. They've at least published a lot of research in that field.

That particular upscaler from the Two Minute Papers video seems to have been published at NTIRE 2018: it's one of multiple different neural network upscaler papers presented just for that one conference, and even more are detailed in their 2018 Challenge paper. The "fully progressive" one didn't even have the lowest reconstruction error in its category if I'm reading the paper correctly, although it was a lot faster than the one with less error.

Over in offline land, we use neural network-based filters in production today. Almost all offline renderers work by producing ever better approximate solutions where the error appears as white(ish) noise over the image. One of the major trends of the last couple of years has been applying denoising filters on our crappy intermediate results to get to a usable image faster. The one common major development of the last year is everyone and their dog implementing Nvidia's ML denoiser for their renderer, which like that upscaler is based on a neural network. Nvidia's latest research one uses what's called a recurrent autoencoder (as opposed to something like a generative adversarial network, like that particular upscaler; also, this is getting uselessly technical). Karoly actually did a video on that one too: https://www.youtube.com/watch?v=YjjTPV2pXY0

There are in general a lot of graphics problems that are variations of "I want to produce this known nice looking image that took me ages to get from this known crappy looking image that took a fraction of the time to generate, but I have no idea what a filter function that does that work should look like", which is a type of problem ML is very well-suited for.

It's still very much an active research area because ANNs are fickle beasts: you need to be careful with your training data and make sure it's sufficiently general to avoid fitting the filter to the data, and the more general you want it to be the harder it is. Nvidia's denoiser initially came with all sorts of evil caveats -- for instance we had to run it on 8-bit LDR images, which is just not right. They've refined it since and it's great for something that can clean up horrifyingly noisy inputs in milliseconds, but it still overblurs or otherwise fails for some common cases. It also gets even trickier when you start looking at temporal stability: in a game, video or other sequence you need the images to not flicker from frame to frame, which means the filter needs to be more complex. Because of this all the production offline renderers I know of still use some sort of more predictable non-local means or other more traditional filter to do any final frame denoising. Here's one for deep images Disney published yesterday for instance.

Anyhow. Machine learning is mature enough that you could use ML-based filter techniques in a game today if you really want to. You could train an ANN on the game's own output to produce a moderately effective game-specific upscaler, if you have a small research team and a love of adventure. It might even be a good idea if you want to do some sort of specific upscaling that's otherwise hard, like foveated rendering for VR. It'll have to be a lot simpler than the ones used in image processing, which take seconds rather than milliseconds, but it can probably be made to work pretty well.

The problem is you'd be jumping into the unknown, and most game companies aren't super keen on betting their graphics pipeline on their ability to do weird stuff no one has tried on the fly (rule-proving exception: Media Molecule). I'd expect the real time ray tracing hardware to see quicker adoption even if the hardware is more recent (nonexistent, currently), simply because we've done raytrace queries in a raster pipeline since Pixar started making movies with Reyes 30 years ago. We've accumulated a lot of institutional knowledge on what sort of effects are feasible with 2 rays/sample, and now we finally have the hardware support to do 2 rays/sample in a 60 FPS game at a small but not insignificant cost. There's a lot of nicely visible low hanging fruit just waiting to be plucked, and promptly overused in a flurry of chrome spheres and refractive glass sculptures.

|

|

|

|

Elentor posted: These are not game-related, but these are two relatively new videos that I find very interesting:

I watched this last night and while I kind of grasp what's supposed to be happening, I still don't have any intuition for what that means with the numbers. I think I'm just too dumb for 3b1b's videos.

|

|

|

|

Xerophyte posted: Anyhow. Machine learning is mature enough that you could use ML-based filter techniques in a game today if you really want to. You could train an ANN on the game's own output to produce a moderately effective game-specific upscaler, if you have a small research team and a love of adventure. It might even be a good idea if you want to do some sort of specific upscaling that's otherwise hard, like foveated rendering for VR. It'll have to be a lot simpler than the ones used in image processing, which take seconds rather than milliseconds, but it can probably be made to work pretty well.

Years ago I daydreamed about an iterative ML AI to make smart competitors for a singleplayer game. Back then my idea had been simply that you'd write a basic AI, then record players playing against that basic AI, analyze their actions, and use that to automatically generate a "next-level" AI, which you'd then pit against the players. Keep iterating until either the players solve your game or nobody can beat the AI.

I wonder if anyone's actually tried to do something like that, where the behavior of in-game actors is dictated by neural nets. I know people are making AIs that can play games the same way humans play them, but do you know if anyone's using ML to produce the gameplay that humans experience?

|

|

|

|

|


|

TooMuchAbstraction posted: I wonder if anyone's actually tried to do something like that, where the behavior of in-game actors is dictated by neural nets. I know people are making AIs that can play games the same way humans play them, but do you know if anyone's using ML to produce the gameplay that humans experience?

AI isn't exactly my field, I just go to graphics conferences and listen to the occasional Nvidia engineer gush about machine learning things they'd rather do instead of push pixels. I'm sure there's research going on, but I at least haven't heard of any "real" video game AIs using neural nets. I know there's a couple of open source board game AI opponents built on the types of techniques DeepMind used for AlphaGo, which basically worked by using the ANN as a heuristic to importance sample for a traditional Monte Carlo tree search AI.

Valve's collaboration with OpenAI for Dota bots and Blizzard's with DeepMind to add an AI feature layer to StarCraft 2 are probably mostly done because those companies are very financially secure and full of nerds who think machine learning is cool, but in some part because they want to keep tabs on how close the tech is to viable for real usage. The Dota 2 bots are pretty credible -- if not all that open, far as I know -- and that's a drat complex game for an AI.

I think there are a couple of practical reasons ANNs still haven't been used for real, even where they in principle could.

One is that it's real hard to add any sort of manual control to an ANN. They're black boxes that approximate some optimization function and you generally can't open them to see how they work or tweak their behavior. The goal of most game AI isn't to defeat the player, it's to create something nice and varied that the player will enjoy repeatedly beating the tar out of. ANNs are good at making something very consistent that will either always lose or always win, depending on how well you did in designing and training it. They're not necessarily all that entertaining to play against and it'll be hard to make them varied and appropriately challenging.

You can still make them useful by not using them directly. You could train a helper ANN for your AI that'll rank some action options depending on current game state, and then you do some fuzzy logic weighted random selection on those, with the weighting based on your difficulty, to select a move. That just basically gives you a slightly different heuristic than what you'd have done by hand, which could be nice for complex things but is probably not critical. A manual heuristic combined with appropriate cheating works, is proven and is almost certainly easier to predict, control and tune. If your AI keeps always invading Russia in winter and the game ships next week you probably want to be able to tell it to stop, not train it until it figures out that it's a bad idea.

Another is that we're at the point where it's technically feasible, but not really practical. A couple of researchers willing to push the envelope and given a couple of weeks of extended ANN training before every balance patch could probably make a decent ANN for playing Civilization, or at least for handling some particularly hard and ML-appropriate sub-task like finding a good set of potential moves for a unit given the current game state. That's not useful enough to do for a release, and I think more boardgame-like games such as Civ are probably one of the easier targets as far as using ANNs for real game AI goes.
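To make the "helper that ranks actions, then difficulty-weighted random selection" idea a bit more concrete, here is a minimal Python sketch. It is only one way to read that suggestion: the scores stand in for whatever a hypothetical ranking network would output, and the weighting uses a softmax with a difficulty-controlled sharpness rather than actual fuzzy logic. `MAX_SHARPNESS` and both function names are made up for illustration.

```python
import math
import random

# Hypothetical tuning constant: how strongly the hardest difficulty
# favors the best-ranked action.
MAX_SHARPNESS = 10.0


def selection_weights(scores, difficulty):
    """Turn ranking scores into selection probabilities.

    difficulty is clamped to [0, 1]. At 0 every action is equally likely;
    at 1 the best-scored action is picked almost every time.
    """
    sharpness = MAX_SHARPNESS * max(0.0, min(1.0, difficulty))
    best = max(scores)
    # Subtracting the best score keeps the exponent <= 0, avoiding overflow.
    raw = [math.exp(sharpness * (s - best)) for s in scores]
    total = sum(raw)
    return [w / total for w in raw]


def choose_action(ranked_actions, difficulty, rng=random):
    """Pick one action from a dict of action name -> score.

    The scores would come from the helper network; here they are plain numbers.
    """
    actions = list(ranked_actions)
    probs = selection_weights([ranked_actions[a] for a in actions], difficulty)
    return rng.choices(actions, weights=probs, k=1)[0]
```

For example, `choose_action({"attack": 0.9, "retreat": 0.4, "wait": 0.1}, difficulty=0.3)` will usually attack but still retreats or waits a fair share of the time. A hand-written rule, like a ban on a known-bad move, can simply remove that action from the dict before selection, which is the kind of manual control the post says is hard to get out of the network itself.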

|

|

|