
Don't Ask posted: Are CPUs still a significant bottleneck? I've got a 6700K that's so far handling everything I can throw at it. Paired with a 1080ti at 1440 I don't remember having slowdowns in any recent game.

Depends on the game. You may run into core count issues sooner rather than later, but honestly I think the premonition of 4 cores being unsupportable is mostly overblown.


| # ? May 30, 2024 13:00 |


Lockback posted: Depends on the game. You may run into core count issues sooner rather than later, but honestly I think the premonition of 4 cores being unsupportable is mostly overblown.

I think having only 4 THREADS may be an issue soon-ish, and maybe already is for some newer games I haven't gotten around to yet. I would ~guess~ that 4c/8t CPUs would be OK for a while yet.


Don't Ask posted: Are CPUs still a significant bottleneck? I've got a 6700K that's so far handling everything I can throw at it. Paired with a 1080ti at 1440 I don't remember having slowdowns in any recent game.

I just upgraded from a 3770k to a 3600 and I can't say any games other than AC: Origins were hurting on the 3770k. Jedi Fallen Order too, to some smaller extent. RDR2 and similar had no problem at all on the 3770k. But that's in the games I tend to play, which are RPGs/action games.

I upgraded partly because I wanted to, not because of need. The system was 7 years old, thought it was time to try some newer tech. I'd check cpu/gpu usage to see if the 6700k is indeed a bottleneck in the games you play.

CaptainSarcastic posted: This is an aside, but I find it hilarious that UserBenchmark is such a rabid Intel fanboy that he has to throw anti-AMD propaganda onto every single comparison screen.

I just noticed this myself when looking at the 3770k/6700k comparison. There's a prominent AMD bashing on a page that's comparing two Intel CPUs. I assume eFPS is the stat made just to dump on AMD that was mentioned in one of these threads recently.
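For anyone wanting to act on the "check cpu/gpu usage" advice, here is a rough sketch of the usual rule of thumb. It assumes you have already read per-core CPU and GPU utilization off a monitoring overlay; the function name `likely_bottleneck`, the 90% threshold and the sample numbers are all made up for illustration, not taken from any tool.

```python
def likely_bottleneck(per_core_cpu_pct, gpu_pct, busy=90.0):
    """Rule-of-thumb guess at the limiting component for one sample.

    per_core_cpu_pct: utilization of each CPU core/thread, 0-100.
    gpu_pct: GPU utilization, 0-100.
    busy: hypothetical threshold above which a component counts as saturated.
    """
    # A saturated GPU usually means the GPU is setting the frame rate.
    if gpu_pct >= busy:
        return "gpu"
    # GPU has headroom but at least one core is pegged: the CPU is the
    # likely limiter, even if overall CPU usage looks low.
    if max(per_core_cpu_pct) >= busy:
        return "cpu"
    # Neither is saturated: frame cap, vsync, or something else entirely.
    return "unclear"


if __name__ == "__main__":
    print(likely_bottleneck([95, 40, 35, 30], gpu_pct=60))
    print(likely_bottleneck([50, 45, 40, 42], gpu_pct=98))
```

The point of looking at the busiest core rather than the overall average is that a game can be stuck on one thread while total CPU usage still reads as low.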


Don't Ask posted: Are CPUs still a significant bottleneck? I've got a 6700K that's so far handling everything I can throw at it. Paired with a 1080ti at 1440 I don't remember having slowdowns in any recent game.

I believe the general idea is that if you're on a budget, it's pretty much always a better set-up to skimp on the CPU (say, stay in the less-than-150-USD range) and then get the nicest GPU you can afford, than the other way around.

CPUs are still potentially a bottleneck if you're trying to max out that 144 Hz monitor at 1440p or greater, but since a lot of people still game on 1080p with a 60 Hz monitor, even going with an Athlon or a Pentium could be workable if you're pairing it with a 1660.

EDIT: to be clear, assuming that you couldn't afford anything better. I wouldn't recommend anyone actively do this if they had a choice.

gradenko_2000 fucked around with this message at 17:25 on Jun 3, 2020


4C4T and 4C8T aren't completely dead yet (especially if you mostly play e-sports titles rather than AAA games) but they're not really advisable for a new build at this point in time. They are increasingly starting to show minimum-framerate issues.

The 3600 is really the cheapest CPU that is advisable at this point. When you go below that, you are past trimming the fat and starting to cut bone. The 1600AF is the only advisable chip that's any cheaper imo, and you are still going into it knowing that you're taking a massive loss in gaming performance and AVX performance (streaming/video encoding/productivity tasks). The 2700/2700X make the same compromise in per-thread performance but combine that with an unacceptably high price and performance that barely exceeds the 3600 even in very thread-friendly tasks.

Even the 3600 is a compromise: you are going into it knowing that you no longer have a per-thread performance advantage over consoles, only just matching them, and you will have 1 core less than a console. Consoles basically have a 3700X but with one core reserved for the OS.


The 3600 does have a bit of a speed advantage. The XBSX offers 7 cores/14 threads @ 3.66 GHz or 7 cores/7 threads @ 3.8 GHz, with comments from Microsoft that they expect the latter to be more popular, at least early on. PS5 info is less concrete, but it appears they're aiming for 3.5 GHz, though not with the locked rates the Xbox is promising, while the 3600 can do 4.2 GHz. With everything still untested there's no telling how much this could compensate for any hardware gap, but it's there.

There's also the question of when developers will take full advantage of the 7 cores available, as I don't think anyone expects that right out of the gate, and the question I don't see asked: whether they'll handicap games to keep the market of any PCs less capable than the new consoles.


Don't Ask posted: Are CPUs still a significant bottleneck? I've got a 6700K that's so far handling everything I can throw at it. Paired with a 1080ti at 1440 I don't remember having slowdowns in any recent game.

For certain AAA games, absolutely, the CPU still matters.

I have to turn clutter down in AssCreed Odyssey, with 2x1080ti and an i9 cpu, at 1080p 60fps.

GTAV never ran perfectly either, the benchmark always had trouble near the end in the physics/explosion part.

Those are the ones that I remember.

I'm sure I could run at higher res but I want to max everything and forget about it.

In AC:Odyssey especially, cities drop your frame rate noticeably and it's annoying.


v1ld posted: I just upgraded from a 3770k to a 3600 and I can't say any games other than AC: Origins were hurting on the 3770k. Jedi Fallen Order too, to some smaller extent. RDR2 and similar had no problem at all on the 3770k. But that's in the games I tend to play, which are RPGs/action games.

I made a similar upgrade this winter. Went from a 3770k to a 3800x. While my average FPS probably only increased slightly, I've found my minimum FPS and general FPS stability to be much, much better. I use a 144hz gsync monitor, which made it pretty noticeable.


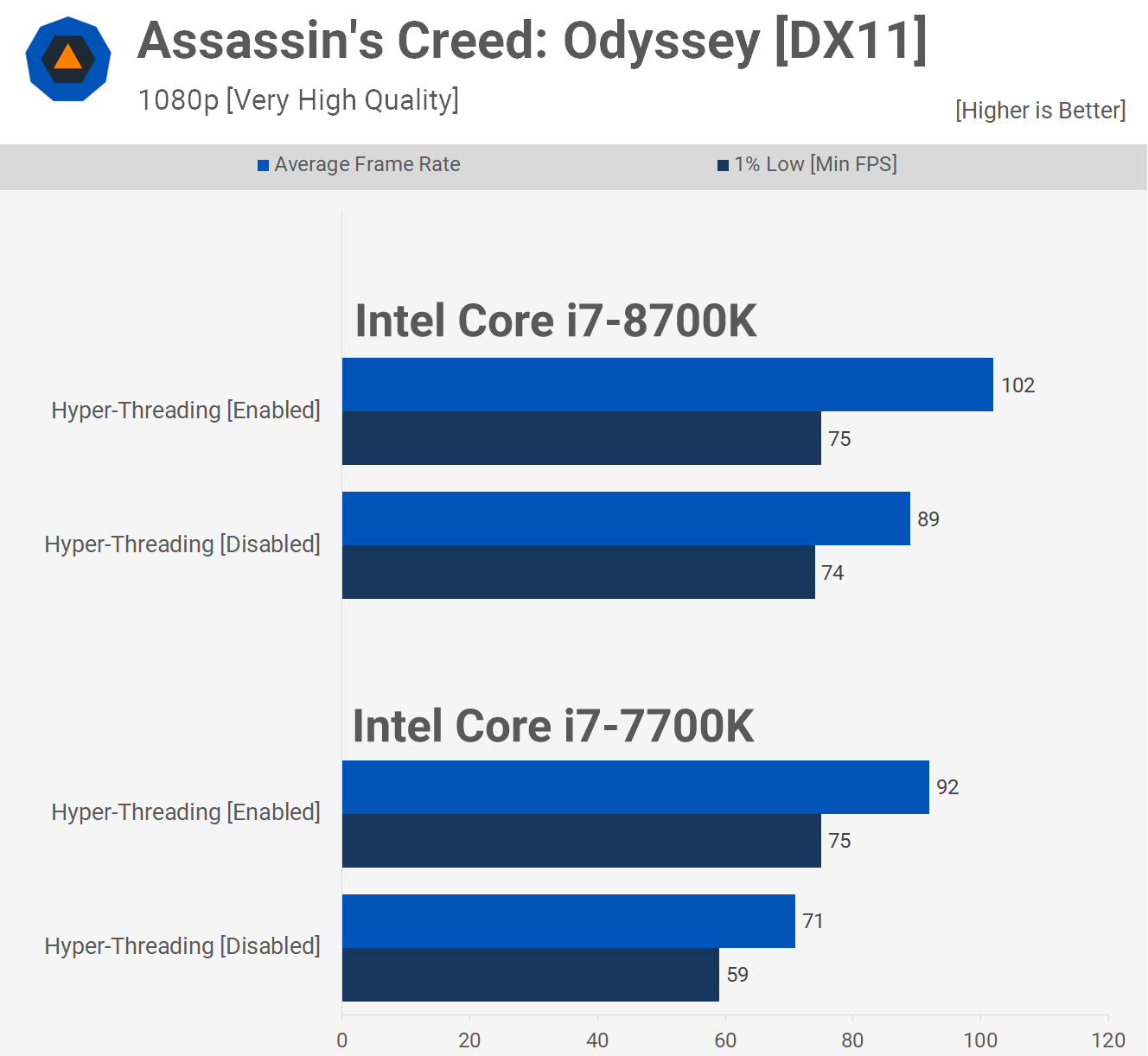

Some Goon posted: There's also the question of when developers will take full advantage of the 7 cores available, as I don't think anyone expects that right out of the gate, and the question I don't see asked: whether they'll handicap games to keep the market of any PCs less capable than the new consoles.

Yeah, this will be a particularly weird question for launch / near-launch titles on the PS5 side, since Sony is mandating that any new PS4 games submitted for certification starting next month will also need to be able to run on the PS5. So I'd expect there will be a solid crop of titles for a while that don't make full use of the hardware. Or, even if they do (via additional options or modes available on the PS5), the devs will already have done most of the work to ensure that it still runs alright on lower-core count CPUs for the inevitable PC port.

But, yeah, 4-core CPUs are certainly getting to be past their prime. I don't really think that actual threads make much of a difference, though, since the vast majority of games tested with hyperthreading on/off have shown very little performance impact (with some exceptions--Civ6 apparently drops turn-times by up to half using HT). Either way, you should be looking for 6+ core CPUs if you want to be sure you'll be able to keep up with games for the next few years.


Don't Ask posted: Are CPUs still a significant bottleneck? I've got a 6700K that's so far handling everything I can throw at it. Paired with a 1080ti at 1440 I don't remember having slowdowns in any recent game.

You'll notice some speed ups for other stuff that's more productivity based (think transcoding, rendering, etc), but like your Civ 6 turn time will go from 36 seconds to 32 seconds if you replace your 6700K with a 10900K maybe?

Ivy Bridge and Haswell era chips choke a bit on more modern games or VR games (see: modern games), but if you're happily plugging along at 60fps at 1080p right now, there's no compelling reason to upgrade.


a bunch of people posted: Stuff about multicore/threading.

Welp thanks, I wasn't aware that games have better support for multicores. I'm still used to the days of having 1 thread at 100% while the others are completely idle.

My current plan is to get a new mobo & modern CPU together with the 3080(ti), they should be able to stay relevant for most of the PS5/XBOXXX lifetime if past gens are any indication.


DrDork posted: Yeah, this will be a particularly weird question for launch / near-launch titles on the PS5 side, since Sony is mandating that any new PS4 games submitted for certification starting next month will also need to be able to run on the PS5. So I'd expect there will be a solid crop of titles for a while that don't make full use of the hardware. Or, even if they do (via additional options or modes available on the PS5), the devs will already have done most of the work to ensure that it still runs alright on lower-core count CPUs for the inevitable PC port.

This is true when you have plenty of cores (like an i9) but not true if you have an i7. Again, depends A LOT on the game. AND this is assuming you aren't GPU bound.

https://www.techspot.com/article/1850-how-screwed-is-intel-no-hyper-threading/

AC Odyssey is a good example (when not GPU bound).


What makes asscreed games so cpu intensive? Ditto with kingdom come deliverance. It made my i5 6500 CPU seem incredibly old.


Honestly mostly bad coding.


Lockback posted: Honestly mostly bad coding.

Don't forget the layers of DRM that have been proven to cause stutter!


Lockback posted: Honestly mostly bad coding.

Yeah. AC games aren't indicative of hardware going bad on its own merits so much as programmers not caring to optimize / DRM issues. It's a moot point if you want to play them, but using them as a barometer of what is to come is perhaps misplaced. Of course, other companies could get lazy too and use the extra cores available to brute force through, but that hasn't been seen yet. Iirc the issue with 4 or 6T CPUs so far is just rare but substantial frame drops not seen on higher thread counts.


buglord posted: What makes asscreed games so cpu intensive? Ditto with kingdom come deliverance. It made my i5 6500 CPU seem incredibly old.

For both, it's just the high number of npcs that the cpu has to run routines for. Same for witcher 3's novigrad area.

Cyberpunk is going to kill off four threads for good, unless they reduce the npc density shown in the gameplay reveal footage. (This is very likely, with the ps4/xbone versions apparently having major performance problems.)


Some Goon posted: Yeah. AC games aren't indicative of hardware going bad on its own merits so much as programmers not caring to optimize / DRM issues.

Indeed. There are, as I mentioned, a few games that do noticeably benefit from HT. AC games are one, Civ6 is another. But the vast majority of games today don't really get a whole lot of benefit. Now how it's gonna be two-three years from now? Who knows. Which is why I think you'd be silly to be buying a 4C CPU for gaming today, regardless of whether it's 4/4 or 4/8. Especially with a Ryzen 3600 being only ~$165, there's really no reason to be buying anything less.


Zedsdeadbaby posted: For both, it's just the high number of npcs that the cpu has to run routines for.

I am going to disagree here and point to AssCreed Unity (the France setting) which had a RIDICULOUS amount of npcs in crowds with no noticeable effect. I don't know if it was using a different engine or what, but the next games after that had way fewer NPCs in crowds, and suffered from hiccups in cities.

I'm going with the DRM/bad optimization theory.

(As an aside, AC Unity is a little reviled in the series because of all the bugs at release. I played it a year after release, all patched up, and it's one of the best in the series: incredible visuals and good story, one of my favorites.)


CaptainSarcastic posted: I was really happy with my 1060 6GB card. At 1080p the thing was awesome, and it did okay at 1440p but I had to set a lot of details down once I upgraded to a better monitor. It's still in my old desktop, chugging away quite happily.

I'm still using my 1060 from 4 years ago as well. What are you doing now that you couldn't before the upgrade, other than rtx?


Comfy Fleece Sweater posted: I am going to disagree here and point to AssCreed Unity (the France setting) which had a RIDICULOUS amount of npcs in crowds with no noticeable effect.

IIRC they started developing Unity before they had a clear picture of the PS4/XB1's performance, and the huge crowds ended up running like poo poo on final hardware (as low as 20fps). They scaled things back in the sequels to get closer to a 30fps lock.


Purgatory Glory posted: I'm still using my 1060 from 4 years ago as well. What are you doing now that you couldn't before the upgrade, other than rtx?

I upgraded to a 27" 144hz 1440p monitor, and the 1060 just couldn't push that many pixels without seriously reducing quality settings and/or having the frame rate plunge well below 60 fps. If I was still at 1080p I might have put off upgrading my GPU even longer.


Can the latest Intel iGPU run CS:GO at 1080p high?


Shaocaholica posted: Can the latest Intel iGPU run CS:GO at 1080p high?

The simple desktop 630 ones can do Low at around 60fps, High would be 20-30 I guess. If you're talking about a fancy IrisPro part or one of them 10nm mobile 11th gen UHD things, no idea.

The 3000 series AMD APUs can do max details/no MSAA at around 70-80 last time I checked.

sauer kraut fucked around with this message at 00:04 on Jun 4, 2020


Shaocaholica posted: Can the latest Intel iGPU run CS:GO at 1080p high?

The latest desktop iGPU is still the UHD Graphics 630 (the most recent mobile chips got a significant iGPU improvement). AFAIK that would struggle to get 60fps on max CS:GO settings @ 1080p, but it should be able to run the game, and obviously turning settings down would get you an acceptable FPS.


Oh the laptop i7-1065G7 with the Iris Plus GPU seems to be able to get 110fps in CS:GO at 1080p/lowest so it might still be playable at highest.


buglord posted: What makes asscreed games so cpu intensive? Ditto with kingdom come deliverance. It made my i5 6500 CPU seem incredibly old.

Stacked layers of DRM. The cracked versions of AssCreed with the DRM stripped out are vastly more performant.


SwissArmyDruid posted: Stacked layers of DRM. The cracked versions of AssCreed with the DRM stripped out are vastly more performant.

Lol wat? Why is DRM running all the time?


Shaocaholica posted: Lol wat? Why is DRM running all the time?

listen, you paid for assassin's creed, and you're going to pay for it to get your full money's worth


Shaocaholica posted: Lol wat? Why is DRM running all the time?

The more robust DRM systems work by scattering heavily obfuscated (and slow) authentication checks all over the exe, rather than just checking once at the start. Developers are supposed to annotate their code with markers that limit the checks to specific regions that aren't performance critical, but if they let them slip into the hot path then FPS tanks.
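To make the hot-path point concrete, here's a toy sketch (not how any real DRM product works). `slow_check` is a made-up stand-in for an obfuscated authentication check, and `run_level` is a made-up frame loop; the only thing it shows is how the number of checks explodes once one leaks into per-draw code.

```python
import hashlib


def slow_check(stats):
    """Hypothetical stand-in for a slow, obfuscated authentication check."""
    stats["checks"] += 1
    digest = b"license-token"
    for _ in range(100):  # deliberately wasteful work
        digest = hashlib.sha256(digest).digest()
    return len(digest) == 32


def run_level(frames, draws_per_frame, check_in_hot_path):
    """Count how often the check runs for a toy level (illustrative only)."""
    stats = {"checks": 0, "draws": 0}
    slow_check(stats)  # one check at level load, outside the hot path
    for _ in range(frames):
        for _ in range(draws_per_frame):
            if check_in_hot_path:
                slow_check(stats)  # the check has slipped into per-draw code
            stats["draws"] += 1
    return stats


if __name__ == "__main__":
    print(run_level(frames=10, draws_per_frame=50, check_in_hot_path=False))
    print(run_level(frames=10, draws_per_frame=50, check_in_hot_path=True))
```

Same number of draws either way, but the second case pays for the slow check on every single one of them instead of once per level load.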


Shaocaholica posted: Can the latest Intel iGPU run CS:GO at 1080p high?

Comet Lake is still using Intel UHD graphics, so no. It's Rocket Lake (and Tiger Lake on mobile) that's supposed to move on to Intel's Xe graphics that are supposed to be much more capable.


repiv posted: The more robust DRM systems work by scattering heavily obfuscated (and slow) authentication checks all over the exe, rather than just checking once at the start. Developers are supposed to annotate their code with markers that limit the checks to specific regions that aren't performance critical, but if they let them slip into the hot path then FPS tanks.

So it's like "haha gently caress your draw calls imma run this other code instead"?


gradenko_2000 posted: Comet Lake is still using Intel UHD graphics, so no. It's Rocket Lake (and Tiger Lake on mobile) that's supposed to move on to Intel's Xe graphics that are supposed to be much more capable.

Iris Plus is on Ice Lake, the other 10th gen Intel processor.


the asscreed drm killing performance turned out to be fake. it's become one of those internet things that people seem to have half-heard and misremembered.

people did benchmarks with and without drm and didn't see a performance difference.

https://old.reddit.com/r/Piracy/comments/e6f0mi/ac_origins_denuvo_vs_no_denuvo_benchmark/


shrike82 posted: the asscreed drm killing performance turned out to be fake. it's become one of those internet things that people seem to have half-heard and misremembered.

And if you took more than two seconds to look at the graphs you linked, you'd see the MASSIVE difference in frametime pacing. See those white spikes on the left side that you don't see on the right? Those are framedrops and microstutters caused by load spikes from the DRM. Killing performance is absolutely real.

It is not enough, in this day and age, to rely on simplistic averages to determine performance. An average obscures the actual moment-to-moment values of what is actually happening. It's the same thing where the average of 4 and 6 is 5, and the average of 0 and 10 is also 5, but one is more even than the other.

We did not get here on the backs of the work of Wasson et al. to just ignore everything on the left side of those screenshots to go, "UNGA, NUMBER SAME, NO PROBLEM".

And even if there weren't any problems on this VERY well-specced machine, there WILL be problems when you scale your hardware down. So on top of being a caveman that can't read graphs, does this mean you're also a hardware elitist?

The ONLY counterargument here that you may have is that the CPU graph is hosed, but the other two graphs still agree with the assertion that the DRM kills performance.

SwissArmyDruid fucked around with this message at 07:23 on Jun 4, 2020
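The 4-and-6 versus 0-and-10 point is easy to put into numbers. A minimal sketch, assuming you have a list of per-frame times in milliseconds; the helper name `frame_time_summary` and both sample runs are invented for illustration and are not taken from the linked benchmark.

```python
import math
import statistics


def frame_time_summary(frame_times_ms):
    """Summarize a run by more than its average (illustrative helper)."""
    ordered = sorted(frame_times_ms)
    # Nearest-rank 99th percentile: the frame time 99% of frames stay under.
    rank = max(1, math.ceil(0.99 * len(ordered)))
    return {
        "mean": statistics.mean(ordered),
        "spread": statistics.pstdev(ordered),
        "p99": ordered[rank - 1],
        "worst": ordered[-1],
    }


if __name__ == "__main__":
    # The example from the post: same average, very different evenness.
    print(frame_time_summary([4, 6]))
    print(frame_time_summary([0, 10]))

    # Made-up runs with the same average frame time of 16.7 ms.
    smooth = [16.7] * 100
    spiky = [15.0] * 95 + [49.0] * 5
    print(frame_time_summary(smooth))
    print(frame_time_summary(spiky))
```

Both made-up runs average out to the same frame time, but the spiky one has a far worse 99th percentile and worst frame, which is exactly the kind of stutter an average FPS number hides.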


Do people still pirate games? That's like something 'my roommate' did in college 20 years ago.


Shaocaholica posted: Do people still pirate games? That's like something 'my roommate' did in college 20 years ago.

Yes.


I have a bit of an odd question: are modern entry-level cards better than much older cards, setting aside API limitations, just because of the... general technological improvements?

If you put something like, say, a GT 730 up against a GTS 450 or an 8800 GT, how would it fare?

I guess this has to do with the question of "retro gaming": assuming that you don't feel the need to use components that were contemporary to something like the early-aughts, would using an HD 6450 be good enough for a Windows XP machine?


Ihmemies posted: Yes.

Seems like a great way to end up with a nice secret bitcoin miner running in your background, or worse.


SwissArmyDruid posted: And if you took more than two seconds to look at the graphs you linked, you'd see the MASSIVE difference in frametime pacing.

look at the pirate that wants to justify pirating.

if you bothered reading the thread I linked to -
a) the pirates realize the y-axis of the two graphs don't match up
b) the white lines don't represent frame-time and aren't even from the same run

one of the pirates who realizes this discusses it - https://www.reddit.com/r/CrackWatch...tent=t1_f9uf6ct

shrike82 fucked around with this message at 07:47 on Jun 4, 2020
