|

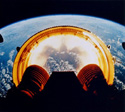

I've got a performance issue with AWS VPC Site-to-Site VPN that my NOC colleagues are too busy to troubleshoot, so I'm posting about it here in the hope that someone else has run into the same problem. The setup is an on-premises VMware environment connected to an AWS VPC via an IPsec tunnel from a Cisco ASA at the edge of the on-premises network. Throughput over the tunnel is complete dogshit despite there being no congestion on the link and no traffic shaping/QoS policies configured.

Testing with iperf3 reveals abysmal throughput as well as a bunch of TCP retransmits (the example below is from on-premises->AWS, but it looks the same in the opposite direction):

pre:
    [root@on-prem-server]# iperf3 -c aws-server.example.com -f K
    Connecting to host aws-server.example.com, port 5201
    [  4] local 172.16.8.152 port 58304 connected to 172.16.11.10 port 5201
    [ ID] Interval           Transfer     Bandwidth         Retr  Cwnd
    [  4]   0.00-1.00   sec  1.38 MBytes  1415 KBytes/sec   161   47.9 KBytes
    [  4]   1.00-2.00   sec   767 KBytes   767 KBytes/sec    13   9.32 KBytes
    [  4]   2.00-3.00   sec   511 KBytes   511 KBytes/sec    52   34.6 KBytes
    [  4]   3.00-4.00   sec   767 KBytes   767 KBytes/sec     2   34.6 KBytes
    [  4]   4.00-5.00   sec   767 KBytes   767 KBytes/sec    10   33.3 KBytes
    [  4]   5.00-6.00   sec   767 KBytes   766 KBytes/sec    17   7.99 KBytes
    [  4]   6.00-7.00   sec   511 KBytes   512 KBytes/sec    25   29.3 KBytes
    [  4]   7.00-8.00   sec   511 KBytes   510 KBytes/sec    15   9.32 KBytes
    [  4]   8.00-9.00   sec   511 KBytes   512 KBytes/sec    14   26.6 KBytes
    [  4]   9.00-10.00  sec   767 KBytes   766 KBytes/sec    11   28.0 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth         Retr
    [  4]   0.00-10.00  sec  7.12 MBytes   729 KBytes/sec   320   sender
    [  4]   0.00-10.00  sec  6.67 MBytes   683 KBytes/sec         receiver

    iperf Done.

pre:
    [root@on-prem-server]# hping3 -S -c 50 -V aws-server.example.com -p 9200
    <SNIP>
    --- aws-server.example.com hping statistic ---
    37 packets transmitted, 50 packets received, -35% packet loss
    round-trip min/avg/max = 46.9/47.6/50.2 ms

pre:
    [root@aws-server]# hping3 -S -c 50 -V on-prem-server.example.com -p 5044
    <SNIP>
    --- on-prem-server.example.com hping statistic ---
    50 packets transmitted, 50 packets received, 0% packet loss
    round-trip min/avg/max = 46.8/47.0/47.4 ms

quote:
When packets are too large to be transmitted, they must be fragmented. We do not reassemble fragmented encrypted packets. Therefore, your VPN device must fragment packets before encapsulating with the VPN headers. The fragments are individually transmitted to the remote host, which reassembles them.

pre:
    !
    ! This option instructs the firewall to fragment the unencrypted packets
    ! (prior to encryption).
    !
    crypto ipsec fragmentation before-encryption <outside_interface>
    !
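A rough way to sanity-check the iperf3 numbers: a single TCP stream can move at most about one congestion window per round trip, so the retransmit-driven cwnd collapse would by itself cap throughput near what was measured. The sketch below is a simplified model (it ignores loss recovery and slow start), and the function names are made up for illustration. The inputs are the Cwnd column from the iperf3 run and the average RTT from the hping3 run.

```python
def window_limited_throughput(cwnd_kbytes, rtt_ms):
    """Upper bound on one TCP stream's throughput, in KBytes/sec.

    Simplified model: at most one congestion window is delivered per RTT.
    """
    return cwnd_kbytes / (rtt_ms / 1000.0)


def window_needed(target_kbytes_per_sec, rtt_ms):
    """Congestion window (KBytes) needed to sustain a target rate at a given RTT."""
    return target_kbytes_per_sec * (rtt_ms / 1000.0)


if __name__ == "__main__":
    rtt_ms = 47.6  # average RTT reported by hping3
    for cwnd in (47.9, 34.6, 9.32):  # sample Cwnd values from the iperf3 output
        rate = window_limited_throughput(cwnd, rtt_ms)
        print(f"cwnd {cwnd:5.2f} KBytes -> at most {rate:7.1f} KBytes/sec")
```

With a 34.6 KByte window and a 47.6 ms RTT the bound works out to roughly 727 KBytes/sec, which is in the same ballpark as the 729 KBytes/sec average iperf3 reported. That is consistent with the window never getting a chance to grow, rather than with the link being out of bandwidth.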

|

|

|

|

|


|

A VPN connection to AWS is two tunnels with asymmetric routing enabled - do you have both tunnels up and a bad route? Try bringing a tunnel down temporarily to see what happens.

|

|

|

|

Thanks Ants posted:
A VPN connection to AWS is two tunnels with asymmetric routing enabled - do you have both tunnels up and a bad route? Try bringing a tunnel down temporarily to see what happens.

We did have some issues a while ago when I shifted the tunnel from a TGW back to a regular VPG (egress traffic from AWS was vanishing), but we sorted that out (we needed to add a static 0.0.0.0 route on the Site-to-Site VPN connection on the AWS side). Routing at the moment is fine.

To me this really seems more like a Layer 4 issue than a Layer 3 one. The packet loss I mentioned only shows up when testing with hping3 sending TCP SYNs. Regular ICMP sees zero loss in either direction.

Pile Of Garbage fucked around with this message at 20:57 on May 7, 2021

|

|

|

I've got a problem to solve and I want to make sure I'm not missing some weird esoteric AWS service or sub-feature that could do it more easily for me.

I have two S3 buckets. I need to copy all the files from one bucket to the other, gzipping them in the process. It doesn't have to be a single-step process (i.e. there can be an intermediary bucket) and money is no object; it just needs to be done as quickly as possible. There won't be any other operations on either bucket during this time. The destination bucket should only contain the gzipped files. The files in the source bucket average 600 KB uncompressed, and there are ~700,000 of them.

Source bucket:
    s3://source-bucket/object1.txt
    s3://source-bucket/object2.txt
    ...
    s3://source-bucket/object699999.txt
    s3://source-bucket/object700999.txt

Destination bucket (in future):
    s3://destination-bucket/object1.gz
    s3://destination-bucket/object2.gz
    ...
    s3://destination-bucket/object699999.gz
    s3://destination-bucket/object700999.gz

Here is my solution:
1. Create an SQS queue and an intermediate bucket
2. Create a Lambda function that:
2.1. takes an S3 event message as input,
2.2. fetches the S3 object contents referenced in the event payload,
2.3. gzips the contents,
2.4. writes the gzip file out to the destination S3 bucket.
3. Add an object-create event on the intermediate bucket that sends a message to the SQS queue
4. Attach the Lambda function to the queue
5. On a largish EC2 instance, run an s3 sync command to copy the contents of s3://source-bucket to s3://intermediate-bucket. The create event will be added to the queue, the Lambda function will be invoked, and the file will be copied to the new bucket

I'm using a queue to make it easier to throttle the events and to keep track of failed events. I'm using an intermediate bucket because I don't know of an elegant way to add every S3 object to a queue.

Thoughts? Is this overwrought, and should I just write a script on the EC2 instance to basically do this in one go?
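For reference, the Lambda in step 2 could look roughly like the sketch below. It is a minimal sketch, not a tested deployment: it assumes the queue delivers standard S3 event notifications in each SQS message body, that whole objects fit in memory (fine at ~600 KB), and that the destination bucket is literally named `destination-bucket`. `gz_key`, `iter_s3_objects` and `process` are made-up helper names; the S3 client is passed in so the logic can be exercised without AWS.

```python
import gzip
import json
import posixpath
from urllib.parse import unquote_plus

DEST_BUCKET = "destination-bucket"  # assumption: name taken from the example URIs


def gz_key(key):
    """Map object1.txt -> object1.gz (replace the last extension, if any)."""
    return posixpath.splitext(key)[0] + ".gz"


def iter_s3_objects(sqs_event):
    """Yield (bucket, key) for every S3 record wrapped in the SQS messages."""
    for message in sqs_event.get("Records", []):
        s3_event = json.loads(message["body"])
        # S3 test events carry no "Records" key, so default to an empty list.
        for record in s3_event.get("Records", []):
            bucket = record["s3"]["bucket"]["name"]
            # Keys arrive URL-encoded in S3 event notifications.
            key = unquote_plus(record["s3"]["object"]["key"])
            yield bucket, key


def process(sqs_event, s3):
    """Fetch each object, gzip it, and write it to the destination bucket."""
    written = []
    for bucket, key in iter_s3_objects(sqs_event):
        body = s3.get_object(Bucket=bucket, Key=key)["Body"].read()
        s3.put_object(Bucket=DEST_BUCKET, Key=gz_key(key), Body=gzip.compress(body))
        written.append(gz_key(key))
    return written


def handler(event, context):
    # boto3 is imported here so the helpers above stay importable without it.
    import boto3
    return process(event, boto3.client("s3"))
```

Letting an exception propagate out of `handler` leaves the message on the queue for a retry, which is what makes the queue useful for tracking failures.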

|

|

|

|

S3 Batch Operations can invoke a Lambda per object iirc. That'd let you do it in one go, as quick as the services let you, and you can feed it a CSV or an S3 inventory manifest. Not sure if the porcelain is as nice as I remember but check it out.
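A sketch of what the Lambda side of that might look like, assuming the version 1.0 invocation schema for S3 Batch Operations (tasks carrying `taskId`, `s3BucketArn` and a URL-encoded `s3Key`); check the current docs before relying on the field names. `handle_batch_event` and `process_object` are hypothetical names: the per-object work (download, gzip, upload) is passed in as a callable so the envelope handling can be shown on its own.

```python
from urllib.parse import unquote_plus


def handle_batch_event(event, process_object):
    """Run process_object(bucket, key) for each task and build the batch response.

    Assumes invocation schema 1.0; process_object is a caller-supplied callable.
    """
    results = []
    for task in event["tasks"]:
        # Bucket ARNs look like arn:aws:s3:::bucket-name
        bucket = task["s3BucketArn"].split(":::")[-1]
        key = unquote_plus(task["s3Key"])
        try:
            detail = process_object(bucket, key)
            code = "Succeeded"
        except Exception as exc:  # report the failure instead of crashing the invoke
            code, detail = "PermanentFailure", str(exc)
        results.append(
            {"taskId": task["taskId"], "resultCode": code, "resultString": str(detail)}
        )
    return {
        "invocationSchemaVersion": event["invocationSchemaVersion"],
        "treatMissingKeysAs": "PermanentFailure",
        "invocationId": event["invocationId"],
        "results": results,
    }
```

Returning `TemporaryFailure` instead of `PermanentFailure` for throttling-type errors would let the job retry those tasks; which exceptions count as temporary is a judgment call left out of this sketch.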

|

|

|

|

Startyde posted:
S3 Batch Operations can have a lambda as an invoke iirc, that'd let you do it in one go as quick as the services let you, you can feed it CSV or s3 manifest. Not sure if the porcelain is as nice as I remember but check it out.

God drat this looks perfect, thank you

|

|

|

|

I would keep this very, very simple:
- Launch a c5.24xlarge instance
- List all objects in the source S3 bucket to a file
- Create a script to split that file into 24 equal sub-lists
- Launch 24 instances of a script that downloads the files in one of the 24 sub-lists, gzips them, and uploads them to the target S3 bucket
- Terminate the EC2 instance

This process scales horizontally pretty much infinitely, using any size and number of EC2 instances.

If you were feeling clever, you could launch a fleet of EC2 instances from an AMI with the scripts preloaded. Each instance would automatically download one list file from a set of list files on a staging S3 bucket, process the files in that list, and then auto-terminate. Set the maximum number of EC2 instances to a thousand and watch them crank through the list in no time flat.
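The "split that file into 24 equal sub-lists" step could be sketched like this. It assumes the object listing is already in memory as a list of keys; `split_evenly` is a made-up helper name, and the key names are placeholders.

```python
def split_evenly(items, parts):
    """Split items into `parts` contiguous chunks whose sizes differ by at most one."""
    size, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks take one additional item each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


if __name__ == "__main__":
    keys = [f"object{i}.txt" for i in range(1, 101)]  # placeholder listing
    for n, chunk in enumerate(split_evenly(keys, 24)):
        # Each worker process would read one of these files.
        with open(f"sublist-{n:02d}.txt", "w") as fh:
            fh.write("\n".join(chunk) + "\n")
```

Contiguous chunks are fine here because the objects are all roughly the same size; with wildly uneven object sizes, a shared work queue would balance better than a static split.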

|

|

|

|

Granite Octopus posted:
God drat this looks perfect, thank you

np, interested to hear how it shakes out for you.

|

|

|

|

Also ensure that you have an S3 gateway endpoint attached to your VPC - this saves on NAT costs and also keeps the traffic within the AWS network for much more throughput.

|

|

|

|

Has anyone taken the AWS Networking Specialty Exam? I've been working with AWS for almost 10 years and I can build VPCs, TGWs, routes in my sleep at this point. I'm sure there are some service level gotchas but for anyone that has passed the exam how did you find it? I aced the Security Specialty and it was mostly rehashed SA Pro questions...

|

|

|

|

Is it possible to use AWS SDK v3 in a Lambda function? I've tried to install v3 of the S3 client but when the Lambda runs it says the module can't be found - this is a serverless application if that matters.

|

|

|

|

a hot gujju bhabhi posted:
Is it possible to use AWS SDK v3 in a Lambda function? I've tried to install v3 of the S3 client but when the Lambda runs it says the module can't be found - this is a serverless application if that matters.

I don't use the S3 package, but all the other AWSSDK packages I use in my lambda functions are v3.x and work fine.

|

|

|

|

Looking for input on part of a proof of concept for a customer account web portal. This part of it seems simple enough, exposing MS SQL data via a public-facing web API authenticated with bearer tokens, but I might be missing something? We're a business in Texas, we already have a VPN connection with AWS, the token nonsense isn't important here, and there's maybe a thousand active connections from CONUS+Alaska at any time.

Incoming JSON request -> WAF -> ALB -> ECS+Fargate (.NET 5) -----> VPN -----> Our new fancy-rear end Palo Alto hardware firewall -> ???? -> MS SQL server

The devil is in the details of course, but from a high-level perspective this seems okay enough?

|

|

|

|

Why are you not just using API Gateway?

|

|

|

|

What if instead this was for the UI/web server?

|

|

|

|

The pattern for a public web service is:

CloudFront - ALB/NLB - autoscaling EC2 target group - managed ElastiCache - DB of choice

Or replace EC2 with k8s or Lambda functions.

|

|

|

|

Agrikk posted:
The pattern for a public web service is:

Oh yeah, def CloudFront for the web site. I'm going with autoscaling ECS+Fargate because I'm 1 of 2 people in the company who know anything about AWS compute options and we're not interested in managing any of it.

I'll take a look at ElastiCache in front of SQL and see how feasible+reasonable it would be.

|

|

|

|

candy for breakfast posted:
Oh yeah, def CloudFront for the web site. I'm going with autoscaling ECS+Fargate because I'm 1 of 2 people in the company who know anything about AWS compute options and we're not interested in managing any of it.

If it's small enough I'd actually lean into EC2 over ECS; it's a lot more straightforward to manage bare Linux hosts and doesn't make you learn a whole new ecosystem.

To be honest I'm not sure there is a _good_ reason to use ECS at all anymore. Maybe more options to scale Fargate tasks?

And if you're looking for just running containers in the cloud, Heroku or something like fly.io are wayyyy simpler to get started on and don't require learning a bunch of useless AWS semantics.

|

|

|

|

ECS has way better cloudformation support, is the main reason to stick with it.

|

|

|

|

freeasinbeer posted:
If it's small enough I'd actually lean into EC2 over ECS; it's a lot more straightforward to manage bare linux hosts and doesn't make you learn a whole new ecosystem.

ECS on Fargate is pretty good if you want container orchestration without a lot of the extra complication that EKS and Kubernetes bring. You use AWS building blocks for everything, you don't need to manage IAM role <-> ServiceAccount mappings and vice versa, and it feels like there are a lot fewer pitfalls (i.e. secret management, ConfigMap management, etc.). But this might be a grass-is-greener thing from managing so many workloads in Kube.

Definitely agree on running things in EC2 though. Auto Scaling Group + ALB/NLB is pretty rock solid. Either pre-package AMIs with something like Packer or do it all via user-data when the instance starts. The only two real things I'd watch out for are that management gets difficult when you start talking about many different applications, and that it's on you to make sure logs and metrics are getting shipped properly.

|

|

|

|

After spending well over a decade farting around with Linux machines terribly managed by companies that have no idea how to manage and patch systems at a professional level of competence, I think that in 2021 people need a really good reason to justify using EC2 instances that do much more than run ECS or K8S. Why bother with managing AMIs, system accounts, hardening your filesystem permissions, and thinking about which Linux distro to run unless it's a material business deliverable? It takes more effort to migrate distros than it does to switch between K8S and even ECS in my experience, especially if you're like us and got caught needing to move off of CentOS. For some places there's a legitimate business need, but most companies I've seen frankly don't have one, and managing instances is more of a bungled chore and a legacy evil than something strategically important. Heck, I'm seeing DoD and old-school enterprises asking me for Docker containers for our products.

Decisions should be made in the context of what most companies have in terms of knowledge and resources. In most projects that start with a small number of people you're unlikely to have an operations person, and even if you do there's a fair chance they'll be shouted down about infrastructure demands anyway. So working with instances directly seems like a lose-lose even if you have graybeards all over your founding team, unless your product is meant to work for people running instances, for example.

|

|

|

|

12 rats tied together posted:
ECS has way better cloudformation support, is the main reason to stick with it.

Yeah, around this time last year I did a whole stack with CDK: ALB, ECR, ECS, etc. Once you get used to the terminology and the idiosyncrasies of the .NET CDK, it's not too terrible. Just a bit weird.

whats for dinner posted:
ECS on Fargate is pretty good if you want container orchestration without a lot of the extra complication that EKS and Kubernetes brings.

Bingo. It's me and someone else who just started learning ECS a few weeks ago. We don't have enough time, people, or necessity to bother with EKS.

freeasinbeer posted:
If it's small enough I'd actually lean into EC2 over ECS; it's a lot more straightforward to manage bare linux hosts and doesn't make you learn a whole new ecosystem.

Well... we're a Windows shop; I'm the only person with enough Linux knowledge to be saddled with being responsible for the EC2 instances. Pass.

|

|

|

|

It's definitely never been simpler to manage EC2, SSM is so good I regularly advocate for its use on-prem BUT gently caress doing it unless you have to. Fight for not doing.

|

|

|

|

I've been using ECS/Fargate for lots of stuff. I have a service spun up with Copilot using it. The new App Runner thing they added lets you skip the ALB, but the minimum size is bigger. I wanted to try it, but that makes it kinda a wash. With Fargate I can just spin up the smallest thing possible, increase it if it needs it, and let it auto scale. No hosts to manage, just push images to ECR and refresh the service. It's not too complicated once it's set up (and Copilot saves a lot of that work).

|

|

|

|

A bit of a broad question here, but can someone with AWS skills transition into a comparable Azure role, and vice versa? Or is it one of those things where you commit to an ecosystem and you're not transitioning to the competitor without a lot of effort?

|

|

|

|

I worked at an org that went from AWS only to AWS + GCP + Azure + Alibaba Cloud and it was fine, easier than Cisco -> Juniper or Windows -> Linux IMO. The main thing I found was that all of the non-AWS cloud providers are awful, except for Alibaba Cloud, which is basically a copy paste and global find replace of AWS.

|

|

|

|

EDITED.

BaseballPCHiker fucked around with this message at 21:27 on Feb 2, 2022 |

|

|

|

I know I'd be fine with minimal logging and tight envs since it makes bad dev and bad actor behaviors simply not possible. Getting that set up is a lot of front-loaded effort and slows dev velocity so if they really grok what you're saying they may be trying to avoid that without saying so. If the concern is repeated, from high up, I'd expect a vendor has been whispering in ears, however, good luck.

|

|

|

|

Yeah if you can structure your AWS account such that you only need to audit the inputs to the account, that ends up being an order of magnitude easier to manage, audit, and scale. It also ends up being an order of magnitude cheaper, but the costs for Config, GuardDuty, CloudWatch, etc., are so minimal compared to the usual suspects in AWS that it's not a big deal.

This also ends up increasing developer productivity long term; you just have to be able to actually do it and continue doing it, which is above most teams. I've only worked at a single place that really managed to pull it off, but it was wildly successful: we went from "0 security except accidental" to "the federal government says it's OK to give us census data" in about 6 months with a single infosec hire, and he was really more of a compliance engineer than he was "secops" or whatever.

|

|

|

|

I'd also encourage practices like reviewing IAM documents and policies, account key rotations (not just for security reasons but also to help ensure resilience for when people leave the org), and having infosec help out with developers' pipelines. While AWS Config is Good Enough for avoiding pretty bad violations of the principle of least privilege, I have found it inadequate for finding some of the edge cases like cross-account permission escalations. Once you get to those kinds of attacks you need to start using policies with permissions boundaries and minimize access to highly privileged roles that aren't supposed to be accessible without a gating system's approval.

On the other hand, I've seen enough attacks targeting the metadata service that some orgs are now mandating blocking access to 169.254.169.254 entirely, which makes a ton of things really painful to work with in AWS, and people end up just treating it as an overgrown and overpriced colo facility.

|

|

|

|

Maybe the people asking for security alerts instead of locked down accounts misunderstand your stance as "I'd simply not get hacked"?

|

|

|

|

I'm trying to learn cloud HPC workflows as we want to replace our failing/expensive in-house cluster. I've been working through some AWS tutorials (https://www.hpcworkshops.com) to learn the lay of the land. Today I ran into a roadblock when trying to build an image for a g3s.xlarge instance using packer:

code:

My question from all this: previous tutorial steps had me executing large parallel cluster jobs that ran on other instance types with no issues. What is so special about the G type instances that they are initially restricted?

|

|

|

|

g3 are GPU instances. I'm assuming they are disabled by default because that's what Bitcoin miners like.

|

|

|

|

I feel like I'm missing something obvious here. Let's say I am looking to see who from my environment assumed a role. I know that an accessKeyId gets made when a principal assumes a role, and I can search for that accessKeyId to see the API calls they made through CloudTrail. But how can I tell who assumed that role in the first place? Shouldn't I be able to tie accessKeyIds made through STS to an AD user who logged in via the console? Or does that have to be done through the CLI?

Or maybe I'm just tired and not thinking straight after having a baby.

|

|

|

|

The original assume role event has a userIdentity field, example from a quick docs search: https://docs.aws.amazon.com/IAM/latest/UserGuide/cloudtrail-integration.html#cloudtrail-integration_examples-sts-api

The contents of the userIdentity field depend on a bunch of things, since basically every IAM principal in AWS can assume a role in some way. If you're looking for AD auth you probably want AssumeRoleWithSAML, which will have a userIdentity that includes a principal id, identity provider, and a user name which should be able to identify your AD user.

e: my apologies, web console logins have their own event: https://docs.aws.amazon.com/awscloudtrail/latest/userguide/cloudtrail-event-reference-aws-console-sign-in-events.html but same info applies re: userIdentity

12 rats tied together fucked around with this message at 17:58 on Aug 31, 2021

|

|

|

double post for formatting

I checked this out at $current_employer and AFAICT the full auth flow for Web Interface via Azure AD goes like -

1. AssumeRoleWithSAML (user agent aws-internal) fires,
1.a. you can parse identityProvider + userName out of the userIdentity field and this points to an Azure AD user
1.b. you probably want "responseElements.assumedRoleUser.assumedRoleId"
2. ConsoleLogin fires (user agent will be your web browser) + SwitchRole fires (when accessing a different aws account)
2.a. responseElements.assumedRoleUser.assumedRoleId (from above) == userIdentity.principalId
3. Events fire when interacting with AWS Services, in my case: "LookupEvents"
3.a. responseElements.assumedRoleUser.assumedRoleId (from above) == userIdentity.principalId

You shouldn't need to do anything with ASIA- access key id unless you're tracing down IAM user -> STS assumed role. Since the original user comes from an SSO dance, you can work purely with assumedRoleId, I believe.

I prefer not doing SSO for this reason, so I only have to worry about one credential-link path, and it's one that I fully understand and have control over every part of.

12 rats tied together fucked around with this message at 18:35 on Aug 31, 2021
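The correlation in steps 1 through 3 can be sketched as a small filter over CloudTrail records that have already been parsed into dicts. This is a minimal sketch, not a SIEM query: `trace_saml_session` is a made-up name, the field paths are the ones named in the post, and it assumes the AD user name appears verbatim in `userIdentity.userName` of the AssumeRoleWithSAML event.

```python
def trace_saml_session(events, user_name):
    """Return events performed under role sessions that `user_name` obtained via SAML.

    events: iterable of CloudTrail records as dicts (assumed already loaded).
    """
    events = list(events)
    # Steps 1.a/1.b: collect assumedRoleId for this user's AssumeRoleWithSAML calls.
    role_ids = set()
    for event in events:
        if event.get("eventName") != "AssumeRoleWithSAML":
            continue
        if event.get("userIdentity", {}).get("userName") != user_name:
            continue
        assumed = (event.get("responseElements") or {}).get("assumedRoleUser", {})
        if "assumedRoleId" in assumed:
            role_ids.add(assumed["assumedRoleId"])
    # Steps 2.a/3.a: later events carry that id as userIdentity.principalId.
    return [
        event
        for event in events
        if event.get("userIdentity", {}).get("principalId") in role_ids
    ]
```

The AssumeRoleWithSAML event itself is not returned, since its own principalId identifies the SAML principal rather than the role session.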

|

|

|

Just wanted to give a hearty thanks for your posts. The links you provided gave me exactly what I needed and helped me make some queries in our SIEM tool to find exactly what we were looking for. I'm supposed to be one of our "AWS security pros" at the company and had a rough day yesterday misunderstanding some GuardDuty documentation. This was a nice win that helped restore some confidence, so thanks once again.

|

|

|

|

Rookie question as I try to wrap my head around AWS (cloud tech in general) and IaC using Terraform:

Amazon has a tutorial for building a basic web app. https://aws.amazon.com/getting-started/hands-on/build-web-app-s3-lambda-api-gateway-dynamodb/module-one/

The steps are basically:
1. Create a static website with Amplify
2. Build a Lambda function with Python
3. Build a new REST API to link the Lambda function to the web app
4. Build a DynamoDB table and have the Lambda write to it

I've successfully built everything out using the console UI. I'm going to take another pass at it using Terraform. I'm assuming it's possible to write Terraform in such a way that I chain all that together with a single 'terraform apply'? I'm going to dig through the documentation, but I'm just trying to wrap my head around the mental model. Can I have each stage output the parameters for the next (i.e. after the Lambda function is built it outputs the information needed to build and link the REST API)?

|

|

|

|

phone posting so forgive the terse formatting: short answer yes. the terraform thing for this is called a "reference to resource attribute" and you can find it in the docs under terraform language -> expressions -> like halfway down

as long as your resource is defined in the same file (not exactly correct but trying to keep it simple), you do not need to have terraform output anything for a "next step"

the value proposition from terraform is that it is smart enough to look at all of your resource references and go "oh, $that needs to happen first so that we can realize the value for $this" and then do everything for you in the right order
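The "$that needs to happen first" idea is a dependency graph walked in topological order. The sketch below illustrates that concept in plain Python with the standard-library `graphlib` (Python 3.9+); it is not Terraform code and says nothing about how Terraform implements it internally. The resource names are made up to mirror the tutorial's four pieces.

```python
from graphlib import TopologicalSorter

# Hypothetical resources: each key maps to the set of things it references.
depends_on = {
    "dynamodb_table": set(),
    "lambda_function": {"dynamodb_table"},  # the Lambda needs the table name
    "rest_api": {"lambda_function"},        # the API needs the Lambda's ARN
    "amplify_app": set(),                   # no references to the others here
}


def apply_order(graph):
    """Return one valid creation order: every resource after what it references."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for step, resource in enumerate(apply_order(depends_on), start=1):
        print(step, resource)
```

Writing a reference from one resource to another's attribute is what adds an edge to the graph, which is why no explicit "output for the next step" is needed within one configuration.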

|

|

|

|

|


|

12 rats tied together posted:
phone posting so forgive the terse formatting: short answer yes. the terraform thing for this is called a "reference to resource attribute" and you can find it in the docs under terraform language -> expressions -> like halfway down

Thanks for this. After many attempts, I was able to stand up a functional Amplify site via Terraform. I had to mix and match all sorts of poo poo I found online but got it working on the 6th version. Now on to the Lambda.

Side note: this seems like a prime opportunity for a good tutorial. Amazon Training walks you through all this via the console UI, but it would be great to then have the same steps via Terraform. If I can complete this then I might try a Terraform write-up.

|

|

|