|

Busy Bee posted: Best webcam for home office and daily Zoom / Google Meet calls? From my research, seems like everyone is recommending the Logitech C920 but I'm open for other suggestions.

I have that one and use it for WFH, seems great, no complaints.

|

|

|

|

|

| # ? May 22, 2024 11:07 |

|

Busy Bee posted: Best webcam for home office and daily Zoom / Google Meet calls? From my research, seems like everyone is recommending the Logitech C920 but I'm open for other suggestions.

I use that one for a science instrument and its autofocus is real loving good.

|

|

|

|

Thirding it. I don't use it much but it's a drat good camera when I do need it.

|

|

|

|

I'll go against the grain and say I prefer my Anker C200 to the Logitech C920 I had been using. It is also possible the Logitech just suffered from age, but the Anker lets me adjust field of view so it's not just stuck on super wide-angle, and doesn't give me the autofocus problems the Logitech was giving me. I see in reviews the mic on the Anker is sometimes a problem, but I always use an earpiece so whatever my webcams are doing when it comes to mics is not an issue for me.

|

|

|

|

Rexxed posted: The first thing I'd do is try another cable. USB 3 requires a lot more conductors than 2 and one might be broken. I broke a pin on the USB 3.0 header on my motherboard and it was a nightmare trying to get anything working on the front panel until I did some sketchy fixes.

Thanks to everyone who thought it might be the cable. Swapped it out with a new one today, no more issues.

EDIT: Dissected the old cable. Apparently it had foil and braid shielding, and on top of that, three cable pairs in the internal bundle were twisted and then each of those pairs shielded as well. Is that normal for USB3? Didn't realize how much more engineering goes into these than older USB standards.

Chuu fucked around with this message at 07:48 on Jun 12, 2022

|

|

|

There is a very perceptible lag when I use my controller with my old TV, much worse than with my high refresh rate monitor. Same PC, same controller. I'm pretty sure it's not just the fps difference (max 60 versus a variable ~100-120, respectively). Playing with the controller was almost making me nauseous. I set the TV to "game" mode, but that didn't help. I used the TV with a PS4 for ages years ago and never had an issue with it. Is it possible that something about the PC HDMI connection is adding latency?

|

|

|

|

Rinkles posted: There is a very perceptible lag when I use my controller with my old TV, much worse than with my high refresh rate monitor. Same PC, same controller. I'm pretty sure it's not just the fps difference (max 60 versus a variable ~100-120, respectively). Playing with the controller was almost making me nauseous.

I suspect you're seeing the addition of latency from a few things. I wonder if turning off vsync might help.

|

|

|

|

Rinkles posted: There is a very perceptible lag when I use my controller with my old TV, much worse than with my high refresh rate monitor. Same PC, same controller. I'm pretty sure it's not just the fps difference (max 60 versus a variable ~100-120, respectively). Playing with the controller was almost making me nauseous.

Yeah, if you want to test it, I'd find some way to check latency with a keyboard or something else wired, since it's probably latency as mentioned above.

|

|

|

|

I don't think turning off vsync helped much, not enough anyway (I had it on to limit the frame rate to 60 on the TV, so as to not unnecessarily tax the GPU). I duplicated the output to a monitor, and side by side I could see the TV was a fraction of a second behind.

|

|

|

|

Multiple things can contribute. For one, the lower frame rate on the TV means that even if you are buffering the same number of frames (usually 1-3 complete frames), the latency is more than 2x as much: 3 frames x 16.6 ms (60 Hz) is almost 50 ms, where 3 frames x 6.94 ms (144 Hz) is about 21 ms. But it can be even worse. The 144+ Hz monitor is likely extremely close to no frames buffered because it is not the limiting factor, whereas the 60 Hz TV is the limiting factor, so it can be buffering more than just 3 complete frames. It could also have multiple further frames set up on the CPU just waiting to be sent to the GPU for render, which can add up to well over 100 ms of additional latency.

How the specific game handles back pressure is another issue. Overwatch, for instance, will buffer a bazillion frames if it is running uncapped and the CPU is faster than the GPU and/or display, so it piles on latency to the point that you will be waiting till next Christmas for the buffers to eventually reach the screen. Your best bet is to cap the frame rate at the game level to slow the CPU down to less than everything else in the chain, which minimizes any potential buffering before the TV, and then hope that game mode on the TV is properly disabling any post processing that would slow down the whole works at that stage (running at the TV's native resolution is also absolutely necessary).
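For anyone who wants to play with the numbers, here is a minimal Python sketch of the buffering arithmetic above. The function name is made up for illustration, and it only models whole frames held in a queue, not TV processing or anything the game does upstream.

```python
def buffered_latency_ms(frames_buffered: int, refresh_hz: float) -> float:
    """Latency added by holding `frames_buffered` complete frames at `refresh_hz`."""
    frame_time_ms = 1000.0 / refresh_hz  # time one frame stays on screen
    return frames_buffered * frame_time_ms


# Same 3-frame queue, two different displays.
for hz in (60, 144):
    print(f"{hz} Hz: 3 buffered frames = {buffered_latency_ms(3, hz):.1f} ms")
```

The queue depth is the same in both cases; only the frame time changes, which is why the 60 Hz display ends up with more than twice the delay.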

|

|

|

|

I compared it against a 1080p 60 Hz monitor (same as the TV), and I limited the fps to 60 with the Nvidia control panel. Side by side, I could see the TV was some frames behind the monitor. Not by much, because the difference wasn't immediately apparent, but it was noticeable when opening the menu or other actions that made sudden big changes to the image.

|

|

|

|

What's the general rule of thumb for maintaining a wattage margin between what your PSU is rated for and what the components draw? For example, if the system draws 484W, is a PSU rated at 750W sufficient?

|

|

|

|

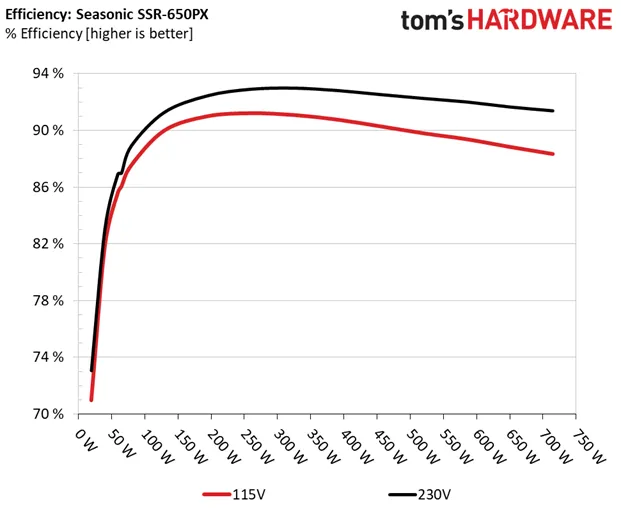

anakha posted: What's the general rule of thumb for maintaining a wattage margin between what your PSU is rated for and what the components draw? For example, if the system draws 484W, is a PSU rated at 750W sufficient?

You'd probably be fine with a 500W in that system. The calculators look at peak wattage, which you almost never hit unless you're running synthetic stuff constantly. I want to say PSUs are most efficient when you're using 80% of their rated wattage, but I could be off.
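To put the two options side by side, here is a quick Python sketch of the load percentage at the calculator's 484W peak. The helper name is hypothetical, and the 484W figure is the worst-case estimate from the question, not a measured draw.

```python
def load_fraction(system_draw_w: float, psu_rated_w: float) -> float:
    """Fraction of the PSU's rated output that the system is asking for."""
    return system_draw_w / psu_rated_w


# 484 W is the calculator's peak estimate; typical draw will be lower.
for rated_w in (500, 750):
    print(f"{rated_w} W PSU: {load_fraction(484, rated_w):.0%} load at a 484 W peak")
```

Even at the estimated peak, the 750W unit sits in the middle of its range, while the 500W unit would be close to its limit only in that worst case.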

|

|

|

|

I've heard a bunch of different figures for that, from 50-80%.

|

|

|

|

VelociBacon posted: The calculators look at peak wattage, which you almost never hit unless you're running synthetic stuff constantly.

I assume PCPartPicker also uses peak wattage in its calculations? If 50-80% load is where peak efficiency sits, what's a safe assumption for how much of the stated sum wattage of a PC's components is actually used during regular use?

|

|

|

|

Here is a graph from Tom's Hardware on a 650W Seasonic PSU which shows a pretty typical efficiency curve:

The difference between the various levels of the 80+ specification is where the peak is, and IIRC the PSU must maintain at least 80% efficiency from 20% to 100% load (lower efficiency is allowed for loads under 20%). This also explains why the highest efficiency 80+ Titanium PSUs are often 1000W or more: since 0%-20% loading is excluded from the measurement, it is a lot easier to maintain high efficiency when you don't have to start actually measuring it till you are already past 200W of output. Making a PSU that breaks 80% efficiency at 200W is a whole lot easier than making a PSU that breaks 80% efficiency at 50W or 100W of output.
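The 200W figure follows directly from the 20% floor as recalled in this post. A small Python sketch, assuming that floor (the post itself flags it as from memory, and the function name is made up):

```python
def measurement_floor_w(rated_w: float, min_load_fraction: float = 0.20) -> float:
    """Output wattage below which efficiency is not counted, per the post's recollection."""
    return rated_w * min_load_fraction


# Bigger PSUs get to start counting at a much higher absolute output.
for rated_w in (250, 650, 1000):
    print(f"{rated_w} W PSU: efficiency counted from {measurement_floor_w(rated_w):.0f} W up")
```

A small PSU has to be efficient at 50W to pass, while a 1000W unit only has to start behaving at 200W.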

|

|

|

|

Indiana_Krom posted:Here is a graph from toms hardware guide on a 650w seasonic PSU which shows a pretty typical efficiency curve:

|

|

|

|

Rinkles posted: I compared it against a 1080p 60 Hz monitor (same as the TV), and I limited the fps to 60 with the Nvidia control panel. Side by side, I could see the TV was some frames behind the monitor. Not by much, because the difference wasn't immediately apparent, but it was noticeable when opening the menu or other actions that made sudden big changes to the image.

TVs, particularly older and cheaper ones, often have significant input lag. Game mode sometimes helps, but sometimes it makes no difference and is just about turning off "picture enhancement" stuff that makes games look worse. There are websites that list models and lag; you could try Googling what you have. But there's probably not much you can do about it, I'm afraid.

|

|

|

|

I am trying to run a surge protector off a 2-prong outlet and a metal sprinkler pipe. How dumb of an idea is this?

Because of the layout of my apartment I want the cable modem in my closet. I want everything surge protected, which means I want the cable modem and coax protected there. The only power in the closet is provided by a light bulb socket.

So without surge protection, no problem. Install a light socket adapter that converts it to a 2-socket outlet like this and just power the cable modem off of it.

With surge protection though? More difficult, because I need a ground. In the closet there is an unpainted pipe connected to sprinklers. I believe by local code this pipe must be grounded (how can I check?). My idea is to use a metal hose clamp like this to get a good connection to the pipe, use a 3-pin to 2-pin adapter with a ground tab with the 2-socket outlet, and run a cable mechanically secured with screws on both ends between the hose clamp and the ground tab on the adapter.

|

|

|

|

Chuu posted: I am trying to run a surge protector off a 2-prong outlet and a metal sprinkler pipe. How dumb of an idea is this?

While it might work, I'd probably just run an extension cord in there so as not to have everything be so sketchy. You're not wrong about the setup, but the grounded sprinkler pipe is an assumption, and hooking a lighting circuit up to it could cause power weirdness if it's not grounded properly or someone else in the building is grounding other stuff to it, intentionally or not. There are some 2-prong surge protectors; if you must do it that way I'd buy one of those for the cable modem. I'd think pretty hard about just using an extension cable, though, there are a lot of nice ones available.

|

|

|

|

Is there a good UPS that mounts under a desk that'll do like 1200+ watts? Not really concerned about runtime, as this is for my gaming PC, PS5, work laptop and monitors. I have a 500W one right now for my work laptop and monitor, but it screams if I run my PC on it at anything more than watching videos, and I'd really like to protect my PC/PS5.

Edit: Tallying everything up, 1000W should be more than enough, but even at that size it's all looking like desktop towers.

Bondematt fucked around with this message at 07:38 on Jun 20, 2022

|

|

|

Lithium ion batteries are more compact, but a kilowatt is a lot of power. Also 1000 watts sounds like more than that stuff would likely run up to. How long do you expect to run this setup off battery power? What do you think you're protecting your hardware from with one of these anyway?

|

|

|

|

My 800W UPS is heavy as hell and I have no idea how you could mount it without some serious brackets.

|

|

|

|

LRADIKAL posted: Lithium ion batteries are more compact, but a kilowatt is a lot of power. Also 1000 watts sounds like more than that stuff would likely run up to. How long do you expect to run this setup off battery power? What do you think you're protecting your hardware from with one of these anyway?

This is to protect from data loss due to power outages, which is pretty much a monthly occurrence in the summer here and always just after noon when I'm knee deep in a personal project or mid game.

That's a good point, tallying stuff up below from what I can find.

PC: ~580W
Max of 400W for 3080 OC'd
Max of 120W for 5600x OC'd
Maybe 40-60W for everything else? 2 SSDs and 1 HDD

Monitor 1: 60W according to Dell
Monitor 2: 25W

PS5 looks like it takes next to nothing in standby mode. Work laptop has a battery so I can just run that off a surge protector.

Thinking about this, I can probably just get around a 600W for my PC and use the 510W I have for everything else.

Edit: This was prompted by our last outage, where I learned my PC at full blast will happily make that 510W UPS scream and shut down.

Bondematt fucked around with this message at 08:11 on Jun 20, 2022
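The tally above can be written out as a short Python sketch. The wattages are the worst-case figures from this post (using the top of the 40-60W range for "everything else"), the dictionary keys are just labels, and it compares against the watt rating of a UPS only, which is a simplification.

```python
# Worst-case figures quoted in the post, in watts.
pc_parts_w = {"gpu_3080_oc": 400, "cpu_5600x_oc": 120, "everything_else": 60}
monitors_w = {"monitor_1": 60, "monitor_2": 25}


def total_w(*groups: dict) -> int:
    """Sum the wattage of every item in the given groups."""
    return sum(sum(group.values()) for group in groups)


def fits(load_w: int, ups_rated_w: int) -> bool:
    """True if the load is within the UPS's watt rating."""
    return load_w <= ups_rated_w


pc_only = total_w(pc_parts_w)
pc_and_monitors = total_w(pc_parts_w, monitors_w)
print(f"PC only: {pc_only} W, PC plus monitors: {pc_and_monitors} W")
print(f"PC on the 510 W UPS: {fits(pc_only, 510)}; on a 600 W UPS: {fits(pc_only, 600)}")
```

That matches the plan in the post: the PC alone overshoots the 510W unit at full load but fits a 600W one, with the monitors left on the existing UPS.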

|

|

|

Chuu posted: I am trying to run a surge protector off a 2-prong outlet and a metal sprinkler pipe. How dumb of an idea is this?

Is the power in your area particularly bad, or your cable modem particularly expensive? Or are you in a lightning-happy area like Arizona? Is surge protection for the modem and coax really that vital?

I just checked and I guess that technically my modem and router are protected, because the triple-tap I'm using to plug them in claims to have surge protection, but that's incidental (it's a cheap GE adapter I got mostly because I don't have enough outlets in some rooms). The coax cable is not protected at all. I figure if anything happens to the point the coax is affected then I'll have bigger problems to worry about, and in the unlikely event I have a power surge capable of killing my modem I can just run to Best Buy and replace it. Hell, I still have my old modem to fall back on if need be, although it might crimp my bandwidth.

If I was in your situation I'd probably just run the modem off the light socket adapter and not worry about surge protection.

|

|

|

|

From what I found, this seems the best (or simplest) place to ask; apologies if I assessed it poorly.

I want to get a mic arm that will work for a Blue Yeti, but also for something else if I ever decide to upgrade in the future. I'm not opposed to spending a small chunk on something if it's warranted, though I imagine something beyond $200 might make me hesitate, at least a little. (I'd also like to go ahead and get a shock mount and pop filter, though future-proofing is less of a concern there.)

I know adapters exist, but I'm always second-guessing myself in terms of whether I might end up with some edge case thing intentionally manufactured to prevent or impede such things. I almost just got the branded arm from Blue, but then my brain did the whole "but what if" dance and so here I am.

Also, my current desktop, while solid and fairly sturdy, isn't necessarily super thick (1/2 in.), in case mounting is going to be easier with one versus another.

Thanks in advance.

|

|

|

|

Bondematt posted: This is to protect from data loss due to power outages, which is pretty much a monthly occurrence in the summer here and always just after noon when I'm knee deep in a personal project or mid game.

You should get a power meter and actually see what wattage everything runs at. Are you running games with your important work documents unsaved? Do you need both monitors working to safely shut down?

|

|

|

|

FakePoet posted:

There's nothing special about the Yeti threaded hole, it's standard and most mounts should fit it. I bet your desk will be fine with a clamp-on, people hang heavy rear end monitors on 1/2" all day

|

|

|

|

The AppData folder is taking up so much space on my SSD C: drive. I did that mklink thing and it worked, I made a Junction on my F drive (which is 1TB). It doesn't seem to have migrated over the stuff from my C: to my F: TreeSize still shows AppData taking up ~50GB of space on my C: drive and nothing on my F: drive. Any help?

|

|

|

|

I'm trying to find a piece of software to control my fan speeds that actually works. I've tried several, and some, like SpeedFan, simply don't work at all. MSI Dragon Center works a bit with my MSI board but it doesn't work well at all. Is there a hardware fan controller I should be plugging the fans into instead of the motherboard?

|

|

|

|

GreenBuckanneer posted: I'm trying to find a piece of software to control my fan speeds that actually works. I've tried several, and some, like SpeedFan, simply don't work at all. MSI Dragon Center works a bit with my MSI board but it doesn't work well at all.

Have you tried Argus? It's paid, but everyone seems to like it. There's a trial version.

|

|

|

|

Rinkles posted: Have you tried Argus? It's paid, but everyone seems to like it. There's a trial version.

I didn't really want to have to pay to control the fans, but I'll look into it. I'm having moderate success with Fan Control, but it's just OK.

|

|

|

|

Fan Control didn't work for me. It detected everything, but iirc it could only control the CPU fan.

|

|

|

|

GreenBuckanneer posted: I'm trying to find a piece of software to control my fan speeds that actually works. I've tried several, and some, like SpeedFan, simply don't work at all. MSI Dragon Center works a bit with my MSI board but it doesn't work well at all.

Is there no fan control section in your motherboard BIOS? Your BIOS will usually allow you to create custom fan curves.

|

|

|

|

Hi thread, Can anybody recommend a good IP webcam? I'm not versed in the subject but I'd like to put some webcams around my house and process the images myself. I know I could try to build something with arduinos or raspberry pis but that's all a bit fiddly. They would ideally connect to wifi and either push to something like sftp or let me go in there and get the data myself, I'm not terribly picky. Does this kind of thing exist? Some cursory googling led me here https://www.programmableweb.com/news/top-10-cameras-apis/brief/2020/04/05 but I have no idea if these are good choices. Apologies if I have the wrong forum - it's ultimately a camera question but has a software focus so I figured this was the right forum. Thanks.

|

|

|

|

I'd just get a few Wyze cameras tbh. They are cheap and just work.

|

|

|

|

mrcleanrules posted: Hi thread,

You'll need to think or post a bit more about your use case. Let me expand a bit on why.

I have a variety of pan/tilt/zoom cameras from different companies that are all almost the same (Foscam, Amcrest, Insteon, etc). I also have a couple of positionable ones for outdoors from Reolink. They all provide an RTSP stream, usually something ffmpeg or another streaming video tool can read. For the most part these are all very similar units which can be commanded to look around and have IR for when it's dark.

The web interfaces for these things are all pretty annoying, but you can have a PC running as a network video recorder using iSpy (free), BlueIris (paid), or motioneye/motioneyeos (for *nix/Raspberry Pi hosts). There are some others but those are the major ones I know of. This will usually mean you only have to deal with the camera's web interface once for initial setup, and then you'll access its feed and recordings through the computer you're using as an NVR.

The main issue with the cameras you pick up on Amazon or wherever is that they call home to China, presumably to do updates or provide some kind of cloud integration so you can see the video remotely. It's an issue because the servers they contact are often not secured, maintained, or updated well, so it is often beneficial to put the cameras on their own subnet with no internet access, or at least block them from getting through your router/gateway. If they can only stream video to the devices on your LAN, you can opt to add cloud functionality to those devices if you want.

I also have a $20 Wyze cam that I put the dafang hacks third party firmware on (which is kind of a pain to load, but stops it from being a cloud based thing). It has good image quality and is a lot smaller and less expensive than the other cameras, but isn't quite as nice since the dafang hacks firmware is very minimal.

If you don't want to get very technical about it, you can get some cameras that work with a cloud service, but they will want you to pay for the storage eventually. This may be worthwhile as it's one less thing to worry about (and if your house is robbed they might steal your video recording PC), but it also may not.
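Since the original question was about grabbing images to process yourself, here is a rough Python sketch of pulling a single frame from a camera's RTSP stream with the ffmpeg command line tool. The camera address and stream path are hypothetical placeholders (every vendor uses a different path, so check the camera's documentation), and the sketch assumes ffmpeg is installed and on the PATH.

```python
import shlex


def snapshot_command(rtsp_url: str, out_path: str) -> list[str]:
    """Build an ffmpeg command that saves one frame from an RTSP stream."""
    return [
        "ffmpeg",
        "-y",                      # overwrite the output file if it exists
        "-rtsp_transport", "tcp",  # TCP tends to be more reliable than UDP over wifi
        "-i", rtsp_url,
        "-frames:v", "1",          # stop after a single video frame
        out_path,
    ]


# Hypothetical address and path; substitute your camera's real RTSP URL.
cmd = snapshot_command("rtsp://192.168.1.50:554/stream1", "frame.jpg")
print(shlex.join(cmd))
```

To actually run it, pass `cmd` to `subprocess.run(cmd, check=True)` from a scheduled script, then hand the saved image to whatever processing you have in mind.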

|

|

|

|

Can USB WiFi adapters be a limiting factor in the speeds you get?

I've got a 5G home Internet connection, and when I sit a certain distance away from the router with my Dell XPS 15 laptop, I get speeds up to 400 Mbit. It has built-in WiFi. However, my Plex server (a refurbed OptiPlex), an equal distance away, has a dongle in a USB 2.0 port (if that's what the non-blue ones still are). It gets a pretty consistent 170 Mbit.

They are in different rooms, so the OptiPlex has maybe a fraction more barriers between it and the router if we're talking direct line of sight, but the router is in an open hallway both rooms attach to, with nothing between the devices and the doorways in each room.

It just seems like the speed is consistent enough on the OptiPlex that it feels artificially capped, if you know what I mean. Whereas on the XPS 15, it will eventually get up to 400 Mbit and then fluctuate.

|

|

|

|

Yes, different adapters have different chips and with that varying speed capabilities. There are some pretty good options out there, so it may be a matter of buying something else. That said, do you know what kind of adapter you have now?

|

|

|

|

|


|

nitsuga posted: Yes, different adapters have different chips and with that varying speed capabilities. There are some pretty good options out there, so it may be a matter of buying something else. That said, do you know what kind of adapter you have now?

Based on when I last ordered one, I'm pretty sure it's this: Foktech Wifi Dongle, AC600... https://www.amazon.co.uk/dp/B06XZ5B5G9

Edit: that says it can do up to 433. If the USB 2.0 port is a problem, I'm pretty sure there's an ethernet port on the back of the machine. Is there such a thing as a USB 3.0 to ethernet cable? Like, I could stick the dongle onto that cable and then plug it into the ethernet port. And if that was possible, would it likely be faster?

Kin fucked around with this message at 16:17 on Jul 2, 2022
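A rough way to reason about where the cap comes from is to take the slowest link in the chain. The Python sketch below does that; the 480 Mbit/s USB 2.0 signalling rate and the adapter's advertised 433 Mbit/s are known figures, but the 50% efficiency factor is only an assumed rule of thumb for how much of a WiFi link rate shows up as real throughput, not a measurement of this adapter.

```python
USB2_SIGNALLING_MBIT = 480  # USB 2.0 high-speed bus rate, before protocol overhead


def expected_ceiling_mbit(wifi_link_mbit: float, wifi_efficiency: float,
                          bus_mbit: float) -> float:
    """Estimate the throughput ceiling as the slower of the radio and the bus."""
    return min(wifi_link_mbit * wifi_efficiency, bus_mbit)


# 0.5 is an assumed efficiency factor, purely for illustration.
ceiling = expected_ceiling_mbit(433, 0.5, USB2_SIGNALLING_MBIT)
print(f"Estimated ceiling for an AC600 dongle on USB 2.0: ~{ceiling:.0f} Mbit/s")
```

Under those assumptions the dongle's own radio, rather than the USB 2.0 port, looks like the tighter limit, which would be consistent with a steady reading somewhat under the advertised 433.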

|

|