|

repiv posted:epic did a seriously impressive flex by loading up a scene from disney's moana and rendering it in realtime through nanite v1ld posted:There have to be really good reasons why this isn't done, it's pretty obvious, but would be good to know why a movie studio which has no realtime constraint on rendering a scene wouldn't pursue those kinds of approaches. On the GPU side, batching and re-ordering can be non-trivial parallelize in a way that makes GPUs happy, so perhaps people have just been avoiding it. steckles fucked around with this message at 03:36 on Nov 6, 2022 |

|

|

|

|

| # ? Jun 4, 2024 05:10 |

|

I was watching that same video and what you said about scene size simply not fitting in memory struck home: trying to divvy the work across boxes won't work for path tracing since you still need to fit the full scene in on each box. Right? So you get better compute distribution, but you're still bumping into per-box memory limits? E: Guess what I'm asking is if the fundamental bottleneck of pathtracing re: multi-machine parallelization is the full scene has to be on each machine? Rays can hit any part of the scene, so that would seem to be the case? v1ld fucked around with this message at 03:34 on Nov 6, 2022 |

|

|

|

v1ld posted:E: Guess what I'm asking is if the fundamental bottleneck of pathtracing re: multi-machine parallelization is the full scene has to be on each machine? Rays can hit any part of the scene, so that would seem to be the case? VVVVV: Typically paths are traced hundreds or thousands of times per-pixel and averaged in off-line rendering. Fundamentally, path tracing would be impossible without averaging. Tracing even double digit numbers of rays per pixel and getting decent results requires nutty space magic like ReSTIR and Deep learning driven de-noising filters. steckles fucked around with this message at 03:53 on Nov 6, 2022 |

|

|

|

From writing raytracers before in school and on a whim thereís issues with basic trivial parallelism methods in ray tracing due to the sheer noise issues as scene complexity increases (more bounces = more error amplification). Some rays also get really, really complex that can really screw with timings and blowing out caches and invalidating pipelines as you get bad spatial jitter issues on top. So some approaches include over-sampling and averaging which is similar to the antialiasing issues just the same as in raster tracing approaches. John Carmack had a good (long) talk on all the physics and lighting rendering systems from 9 years ago that is still relevant to the discussion https://www.youtube.com/watch?v=P6UKhR0T6cs

|

|

|

|

I was interviewing for a job earlier in the year helping test CXL memory breakout boxes, so I imagine that will become a big deal for render farms in the next couple years. https://en.wikipedia.org/wiki/Compute_Express_Link if anybody hasn't heard about it. I guess you could call it an industry-standard take on stuff like NVLink or vendor-specific fiber channel links.

|

|

|

|

v1ld posted:Genuinely asking here, not being skeptical. It just seems like such a different problem space to games. it is a very different problem space to games of course, we're talking many orders of magnitude difference in scale and processing power, the main point i was driving at was just that raytracing isn't just good for absolute photorealism (in response to truga) traditional realtime rasterization techniques are unrealistic, but they're not unrealistic in a way that an artist would deliberately deviate from reality to achieve a stylized look most of the time, unless they are working backwards by art-directing around the limitations of tech

|

|

|

|

The incoherence issue is part of what shader execution reordering is supposed to solve in the 40-series, right? Obviously it won't eliminate the issue, but it's supposed to provide a pretty big speedup to ray tracing just by sorting the rays beforehand.

|

|

|

|

steckles posted:Tracing even double digit numbers of rays per pixel and getting decent results requires nutty space magic like ReSTIR and Deep learning driven de-noising filters. speaking of nutty space magic, some of the nerds here may find this overview interesting, from a channel that popped up out of nowhere with improbably high production values https://www.youtube.com/watch?v=gsZiJeaMO48 goes over the fundamentals, then RIS (the foundational trick Q2RTX used) and then ReSTIR (the new improved trick to be used in Portal RTX/Racer RTX/Cyberpunks RT update)

|

|

|

|

repiv posted:speaking of nutty space magic, some of the nerds here may find this overview interesting, from a channel that popped up out of nowhere with improbably high production values This is an interesting video but I could do without the talking dog.

|

|

|

|

Lol front page of Reddit

|

|

|

|

shrike82 posted:Lol front page of Reddit Lot goin on right there

|

|

|

|

shrike82 posted:Lol front page of Reddit happy for them

|

|

|

|

shrike82 posted:Lol front page of Reddit Dudes absolutely rock

|

|

|

|

Why do other countries get the cool 4090s

|

|

|

|

infraboy posted:Why do other countries get the cool 4090s it doesn't look like a box, more like a bag though

|

|

|

|

Snagged one ex-miner A4000 for cheap. Should be good fit for either of my cases (Fractal Design R6, early rev NCase M1) as FE's were not available around here and everything else is huge gaming poo poo. 140 W TDP

|

|

|

|

While the West struggles to figure out real time path tracing, China has pioneered the real time integration of 2D and 3D waifus.

|

|

|

|

Are there any Intel Arc benchmarks for Diretide around? Most of what I can find are youtubers bitching about 4090 perf.

|

|

|

|

MarcusSA posted:This is an interesting video but I could do without the talking dog. vtubers are the future of graphics tech im afraid your videos will be narrated by dogs and your drivers will be written by animes

|

|

|

|

shout out to that furry who made the ray tracing video though.

|

|

|

|

Kivi posted:Snagged one ex-miner A4000 for cheap. Should be good fit for either of my cases (Fractal Design R6, early rev NCase M1) as FE's were not available around here and everything else is huge gaming poo poo. 140 W TDP Can you use those with the consumer drivers? A 16GB 3070 sounds kinda great.

|

|

|

|

shrike82 posted:Lol front page of Reddit why is the 4090 box so huge, does it come with an extra waifu statue or something

|

|

|

|

The A4000 doesn't quite match the 3070 performance, though it can get kind of close if you overclock it (source: steve walton's review). It seems there's some decent overclocking headroom there, even with the locked voltages. Just be prepared for the card to be very loud, even at stock. It's a typical noisy workstation blower gpu.

|

|

|

|

Arivia posted:why is the 4090 box so huge, does it come with an extra waifu statue or something https://www.youtube.com/watch?v=0frNP0qzxQc

|

|

|

|

Dr. Video Games 0031 posted:The A4000 doesn't quite match the 3070 performance, though it can get kind of close if you overclock it (source: steve walton's review). It seems there's some decent overclocking headroom there, even with the locked voltages. I don't mind the loudness. My current card is already loud so I already game with headphones or in another room so it's fine. I plan to do some airflow mods to exhaust the air bit better.

|

|

|

|

repiv posted:speaking of nutty space magic, some of the nerds here may find this overview interesting, from a channel that popped up out of nowhere with improbably high production values This video was great, thanks a bunch for linking it.

|

|

|

|

Kivi posted:Wait, is HWU same as TehcSpot? I watched this before buying https://www.youtube.com/watch?v=HEagFvmjW4w Yep. Hardware Unboxed's video reviews are TechSpot's written reviews or if you prefer vice versa.

|

|

|

|

Subjunctive posted:This video was great, thanks a bunch for linking it. There's also a great book that goes into details into all the nerdy details including math and code in a fairly understandable way. It's not exactly new so might not address the most recent developments but still fascinating. https://www.amazon.com/Physically-B...ps%2C187&sr=8-1

|

|

|

|

v1ld posted:I was watching that same video and what you said about scene size simply not fitting in memory struck home: trying to divvy the work across boxes won't work for path tracing since you still need to fit the full scene in on each box. Right? I was thinking about this and I guess it would be possible to divide the scene to separate boxes and put every box in their dedicate computer. When I ray travels between these boxes the computers would communicate the ray information between them. This would be quite a bit more complicated than every computer calculating one full frame, but it would reduce the memory demand quite a bit. Hard to say how big the IO demands between the computers would be and if this would reduce the accuracy of the rays. It would probably be necessary to adjust how big of a box every computer would have to take care of, or some computers would handle several fixed size boxes, maybe adjusting this dynamically.

|

|

|

|

mobby_6kl posted:There's also a great book that goes into details into all the nerdy details including math and code in a fairly understandable way. It's not exactly new so might not address the most recent developments but still fascinating. I believe I own a hardback of the first printing of that book, but have never taken the time to read it.

|

|

|

|

repiv posted:speaking of nutty space magic, some of the nerds here may find this overview interesting, from a channel that popped up out of nowhere with improbably high production values Saukkis posted:I was thinking about this and I guess it would be possible to divide the scene to separate boxes and put every box in their dedicate computer. When I ray travels between these boxes the computers would communicate the ray information between them.

|

|

|

|

Subjunctive posted:I believe I own a hardback of the first printing of that book, but have never taken the time to read it.

|

|

|

|

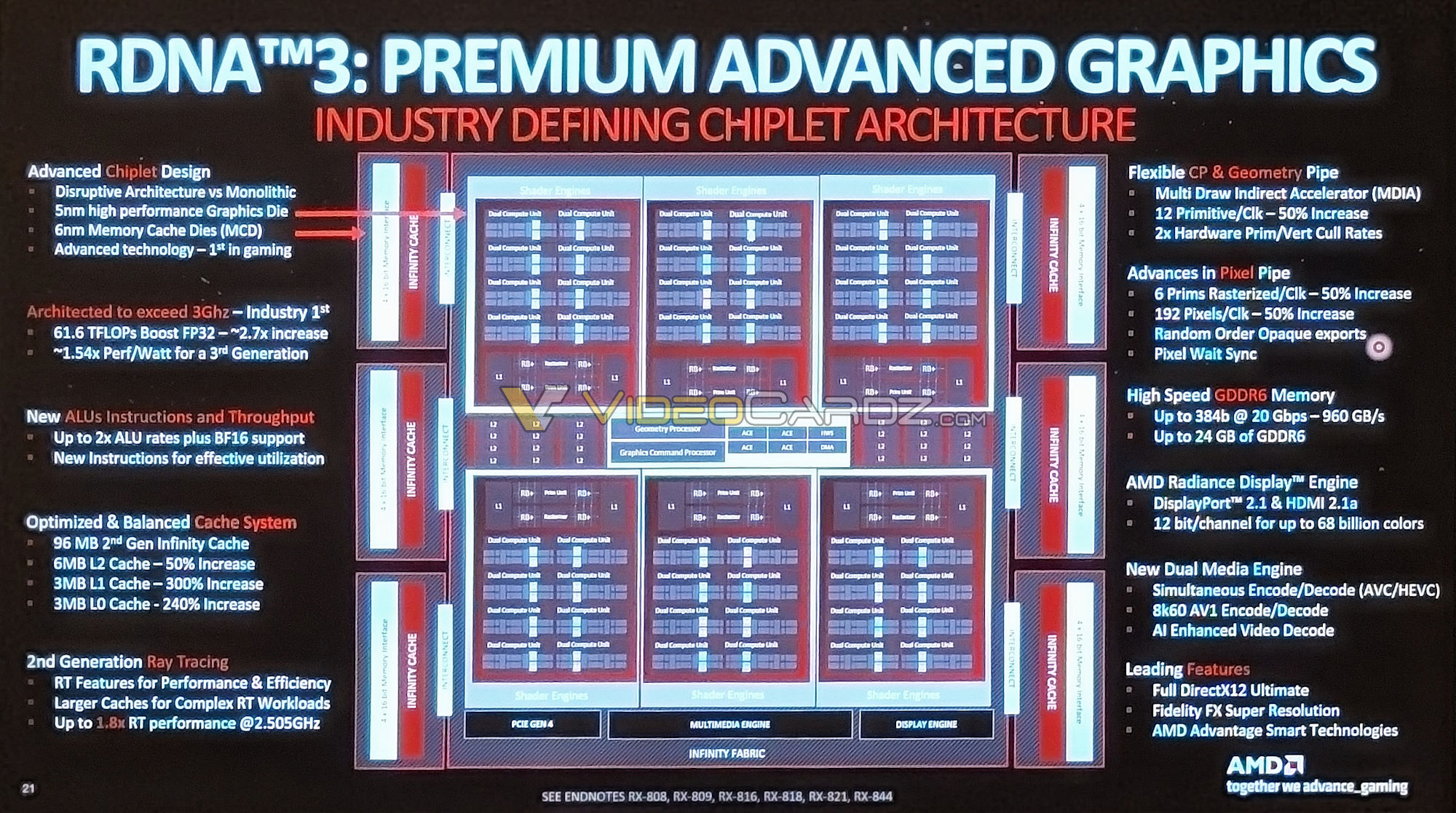

Rumors about Navi31 having problems at the last moment to achieve the '3.3GHz-3.7GHz' range it was designed for. Fixed for Navi32, which will hit near 90% of Navi 31's compute performance. It'll make for interesting 7800 series performance. Let's see what pricing they go for for their fully enabled and operational Navi32 cards.

|

|

|

|

karoshi posted:Rumors about Navi31 having problems at the last moment to achieve the '3.3GHz-3.7GHz' range it was designed for. Fixed for Navi32, which will hit near 90% of Navi 31's compute performance. It'll make for interesting 7800 series performance. Let's see what pricing they go for for their fully enabled and operational Navi32 cards. I find this hard to believe. It more sounds like some AMD fans are having a hard time accepting that RDNA3 isn't as powerful as they were hyping it up to be. edit: lmao, I went to see who was spreading this rumor and found that greymon55, one of the previous AMD "leakers" (who was wrong about most things) deleted their account. Who was the leaker who first leaked the SKU names and memory/GCD configs (20GB for the XT and 24GB for the XTX)? edit 2: if this can be believed (the leaker himself says to take it with a grain of salt), then it seems like 3 GHz was the original target, and you shouldn't take max bios limits seriously.

Dr. Video Games 0031 fucked around with this message at 00:17 on Nov 7, 2022 |

|

|

|

karoshi posted:Rumors about Navi31 having problems at the last moment to achieve the '3.3GHz-3.7GHz' range it was designed for. Fixed for Navi32, which will hit near 90% of Navi 31's compute performance. It'll make for interesting 7800 series performance. Let's see what pricing they go for for their fully enabled and operational Navi32 cards. people are just speculating because some leaks (though not the only really reliable one which didn't have anything about clock speed) had been saying >3GHz for ages and then in the end we just got "the architecture is designed to reach 3GHz" with lower stock speeds, and the performance is a little underwhelming overall. the 3.7GHz claims always seemed wildly unreasonable and i'd seen it counterclaimed previously that it was just the max in the bios so not going to be anything like the actual speed but who knows how reliable any of that is it's possible the 3GHz leaks were correct in so much as they were about the target & architecture design but they didn't actually have firmer info so it got overhyped, or just that people were just making poo poo up. the ones claiming higher certainly were. the names + memory configs of the skus were first leaked by wccftech i think so no clear sources beyond that lih fucked around with this message at 00:31 on Nov 7, 2022 |

|

|

|

SwissArmyDruid posted:

Note that infinity cache (L3) is moved to the MCD - that's the red boxes. This implies the cache is segmented per MCD - what you have isn't 1 big cache, it's really 6 little caches. Cache/SRAM does not shrink at 5nm so pushing those to the 6nm dies is beneficial too. The infinity cache did not get larger, but, it's higher-bandwidth. Since typically memory bits are interleaved across the lanes (right? each access is hitting all memory lanes?) I think they'd always evict in unison, so there's no reason a segmented cache wouldn't work, but, maybe that also has implications as far as the ability to scale GCDs or memory/cache coherence model (I haven't really thought this fully through). I really think based on the patents that multi-GCD is going to follow the same "cache-coherent interconnect paradigm" that CPUs have been using for ages, but, it's possible they may go to a different coherence state-machine model since the GPU model is very read-heavy and write-seldom (not really my area and I also haven't though this fully through either). One interesting tidbit that was in the patents was that the cache was not shown as part of the memory controller in the multi-GCD patents - it was rather conspicuously on the other side of the diagram next to the interconnect. I think the short-term implication is that the GCD will explicitly manage what it wants evicted (is that a side-cache?) and maybe it broadcasts that to other caches too. Also, you can think of the cache as potentially being another client on the interconnect - the chip just has two 1tbps links (let's say) and one of them normally goes to cache and the other to the interconnect, so you can talk to the other GCD at basically cache-speed and it can talk to its cache at cache-speed. Or perhaps the cache would just be another client of the interconnect and each GCD treats its cache like a fully remote thing that it is pushing/pulling data from, and thus could ask for the other GCD's cache data directly. Maybe if you have 2 lanes what you connect to is the other GCD's cache and you communicate through the caches like a PCIe aperture/window. Or maybe since it's segmented it's really six mini-caches that the GCD manages? They also did increase L0, L1, and L2 a lot, but, remember that's per-GCD and the GCD has to feed more shaders (each with dual-issue) as well as a lot of other units that are faster as well. NVIDIA also significantly increased L2 on Turing when they moved to dual-issue and added tensor/RT. Obviously RT is not very cacheable (although Shader Execution Reordering/Ray Binning may help a little bit) but maybe it's particularly beneficial for tensor (larger working set vs scalar execution?) or just necessary for dual-issue to perform in general. It is coincidental that both AMD and NVIDIA did dual-issue and bigger low-level caches at the same time (obviously NVIDIA could not do super giant L3 without the SRAM density of TSMC N7 - that is an unsung hero behind all of AMD's 7nm designs, they are just taking advantage of the huge cache density advantage that TSMC gave them) and I wonder what the exact synergy is there. I just am not a believer in stacking GCD-style dies yet. I was skeptical about even stacked cache this generation when I heard it come up with Ada - "boy that's gonna be fun to pull heat through". But maybe you could do it underneath, as you say. I am also skeptical about pulling out random parts of the graphics pipeline and moving them off-die - memory/cache are a nice clear delineation, but, the "RT accelerator chiplet"/"tensor accelerator chiplet" idea never made sense to me. Those are clients of the SM engines even on NVIDIA's uarch and in AMD's model they're built into something else too iirc, it's an "accelerator instruction". These GPU partitions have been engineered forever as a huge integrated thing with lots of shared resources and scheduling etc, it seems almost impossible to pull it apart after the fact, but, maybe specific functional blocks can be pulled out. But it would have to avoid any significant performance anomalies, which gets back to things like caches - a lot of the improvement of Maxwell/Pascal/etc was making sure the right data was sitting in cache when you were done, so you could move to the next stage. If it lives on another die, that's harder, obviously if you have superfast interconnects that can link a lot of smaller caches it gets easier but I generally think having things being processed off-die (even stacked) is more challenging in a lot of subtle ways. Maybe with the side-cache idea of the patents, AMD is just moving towards the Command Processors explicitly managing what they think needs to be in the cache and that might avoid some of the problem with pulling random pieces off the GPU pipeline. I still think the first couple multi-GCD gens are going to be more or less full GCDs with a cache-coherent interconnect like multi-socket processors. We could see stacked cache (again, especially under the GCD) and we could see multi-GCD, perhaps even together (no reason why not). But I just think first-gen multi-GCD will be two separate GCDs on a PCB, using the long-distance multisocket style links and not the low-power on-chip ones that you use with TSV/etc. It will of course be much much wider - you have to be talking almost the same speed as native memory to get good scaling in graphics, I think. That's not a inherent problem, you can just do a wider IF link, but, it's a lot of data to move. And if you can just jump up to an actually-big GCD you can push off the multi-GCD thing for a little while longer. NVIDIA couldn't respond if AMD dropped a 600mm2 or 800mm2 GCD, with a monolithic design with the IO still on-die you can't devote enough area to "GCD". And I think it would also significantly ease some of the PCB routing problems that come along with superwide buses in the GDDR6+ era - Hawaii went to 512b and everything since has been either HBM or narrower. Maybe a 768b bus is feasible with 12xMCD because the GDDR trace routing will be a lot more "diffuse" rather than being crammed in one place. Paul MaudDib fucked around with this message at 00:58 on Nov 7, 2022 |

|

|

|

the Angstronomics leak, which was spot on, said there is a stacked cache variant in the works that has 192MB of cache, but performance benefits are limited for the cost increase (which makes sense given how much cache & memory bandwidth it already has). will probably show up on a stupidly priced 7950 XTX in a year or something

|

|

|

|

Im looking to grab a 3080 or 3080ti if the price is right. Are there any brands that are prefered? Any to avoid?

|

|

|

|

ZombieCrew posted:Im looking to grab a 3080 or 3080ti if the price is right. Are there any brands that are prefered? Any to avoid? Honestly not really at this point. Iím completely happy with my FE 3080. Iíd just look for the best deal you can.

|

|

|

|

|

| # ? Jun 4, 2024 05:10 |

|

I mean, probably not EVGA.

|

|

|

|