|

If they are just using parts which were mainly developed for commercial use, why do they need to be manufactured in the US? I am honestly uninformed of the situation w.r.t. Taiwan and China but could see why the situation might be precarious and why the American government might be worried. In the case of other international suppliers, what is the big deal? Is this just the US gov’t contingency planning for the case where they are at war with the rest of the world?

|

|

|

|

|

| # ? May 18, 2024 04:23 |

|

silence_kit posted: If they are just using parts which were mainly developed for commercial use, why do they need to be manufactured in the US?

Yes, but also, with things like platform security modules/Intel Management Engine and similar, there is a potentially “invisible” back door that could be tampered with in an untrusted supply chain.

|

|

|

|

silence_kit posted: In the case of other international suppliers, what is the big deal? Is this just the US gov’t contingency planning for the case where they are at war with the rest of the world?

Yes. The US government already mistrusts electronics produced overseas because of security concerns (backdoors or other foreign government strategies to compromise devices). For anything that is security critical, they likely only use US-sourced electronics and employ only US natural citizens to minimize the risk of foreign interference.

|

|

|

|

Also, what happens when your relationship with a foreign government changes and you can no longer source defense-critical materials? You don't need to be at war with someone to restrict their access to sensitive components.

|

|

|

|

Thanks to everyone for your posts on this topic.

Kibner posted: Yes. The US government already mistrusts electronics produced overseas because of security concerns (backdoors or other foreign government strategies to compromise devices). For anything that is security critical, they likely only use US-sourced electronics and employ only US natural citizens to minimize the risk of foreign interference.

Are you saying that a computer on a classified network is totally made up of US designed and manufactured electronics? Is that even possible?

|

|

|

|

silence_kit posted: Thanks to everyone for your posts on this topic.

Maybe not 100%, but government contracts try to ensure that there are as few foreign-produced products that could conceivably be used to compromise security as possible.

|

|

|

|

For something like a resistor or a capacitor it doesn't matter. But a lot of embedded CPUs don't need to be on the newest manufacturing nodes anyway, so there's a decent number of US fabs doing older stuff IIRC. That goes into defense stuff too.

|

|

|

|

Kazinsal posted: Since the "Network and Edge" category is so vague and barely in the black, but every server board made still has a smattering of Intel controllers on it, I wonder if they're taking a bath on Barefoot.

About that, https://www.tomshardware.com/news/intel-sunsets-network-switch-biz-kills-risc-v-pathfinder-program

|

|

|

|

in a well actually posted: About that, https://www.tomshardware.com/news/intel-sunsets-network-switch-biz-kills-risc-v-pathfinder-program

I was just coming to post this, that’s a real bummer. I think the local Intel office here is a lot of the network switch stuff too so that would suck. Them getting into RISC-V was surprising even if it was a merely token effort, but it doesn’t surprise me it’s one of the first things they kill.

|

|

|

|

silence_kit posted: Does the US military/government ACTUALLY design custom ASICs for their applications? The only ones I am aware of are the RF chips for the RF front-end electronics in military radios/RADARs, which are comparatively much lower tech than computer chips.

Yes -- all the time, especially for rad-hard stuff that an FPGA just won't be performant enough for. Crypto HW is another major category. I thought at one point the NSA had its own little mini-fab... these things don't need to be cutting-edge deep-EUV though. "90nm should really be enough for anyone" (it really is a sweet spot IMO for most ICs that aren't cutting-edge processors/logic/etc)

|

|

|

|

NSA did have a mini fab before, yes. Not sure if it was removed from Google Earth / Google Maps way back.

|

|

|

|

Paul MaudDib posted: They're genuinely in the reverse position of AMD all those years ago, and coming into a recession just the same.

Intel has one thing going for it now that AMD did not back then: When Intel makes announcements of new product, not even good product, just "not bad", their stock does not go down 10%-15% because automated internet-scraping HFT algorithms are so dumb they can't tell the difference between Anne and Berkshire. Or used to, before the mainstream proliferation of machine learning. Which is just another way of saying that the stock market is inherently irrational and Intel could go undead before people drop it as a blue chip.

SwissArmyDruid fucked around with this message at 00:38 on Jan 28, 2023

|

|

|

These are pretty bad results but not the end of the world as long as they can actually unfuck things within the next generation or two.

There's a review of an Alder Lake-N N100 PC, the guy is a bit awkward but it's a decent overview. https://www.youtube.com/watch?v=IL00YmcTV0g There's also one in Chinese with subs: https://www.youtube.com/watch?v=atFViD1-R7o Seems like a pretty big boost, especially compared to the previous entry level models. It does seem like they're all single-channel but this model is also DDR4, so a fast DDR5 version might be able to offset that disadvantage.

|

|

|

|

I haven't been paying attention, Intel entered the consumer desktop GPU market to compete with AMD and Nvidia? Why? That seems like a gigantic investment for a limited market. Are they really betting on GPUs for buttcoin mining and AI stuff being a massive growth market?

|

|

|

|

icantfindaname posted: I haven't been paying attention, Intel entered the consumer desktop GPU market to compete with AMD and Nvidia? Why? That seems like a gigantic investment for a limited market. Are they really betting on GPUs for buttcoin mining and AI stuff being a massive growth market?

They are trying to get into the server compute market and the desktop GPUs are a bit of a side hustle that could earn money, if executed well. Same/similar silicon, different software stack.

|

|

|

|

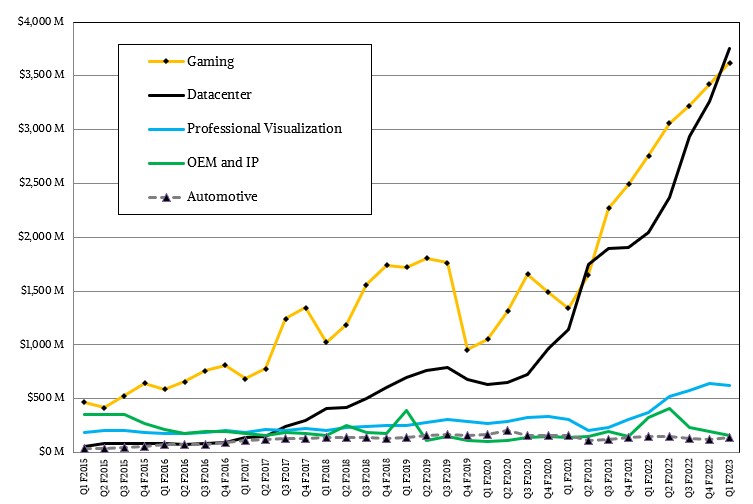

icantfindaname posted: I haven't been paying attention, Intel entered the consumer desktop GPU market to compete with AMD and Nvidia? Why? That seems like a gigantic investment for a limited market. Are they really betting on GPUs for buttcoin mining and AI stuff being a massive growth market?

It is a massive growth market, datacenter makes up the majority of nvidia's revenue now and Intel reasonably wants a part of that

|

|

|

|

icantfindaname posted: I haven't been paying attention, Intel entered the consumer desktop GPU market to compete with AMD and Nvidia? Why? That seems like a gigantic investment for a limited market. Are they really betting on GPUs for buttcoin mining and AI stuff being a massive growth market?

As several people have mentioned they're desperate for a datacenter GPU, but I'm still pretty sure the desktop GPUs will be shitcanned sooner rather than later. The driver development costs have to be astronomical and getting to a point where the cards are actually competitive is going to require years and years of running at a massive loss.

|

|

|

|

mobby_6kl posted: It is a massive growth market, datacenter makes up the majority of nvidia's revenue now and Intel reasonably wants a part of that

"gaming"

|

|

|

|

TheFluff posted: As several people have mentioned they're desperate for a datacenter GPU, but I'm still pretty sure the desktop GPUs will be shitcanned sooner rather than later. The driver development costs have to be astronomical and getting to a point where the cards are actually competitive is going to require years and years of running at a massive loss.

Intel can't afford to miss the boat again. They fucked up on mobile, they fucked up on wireless chipsets, Optane, and even flash memory. There's no way that Intel can continue to extract fat margins from x86 server chips in the future, because competition from AMD and ARM will prevent Intel from being able to keep high prices. You know what has really high margins right now? GPUs. Desktop included.

|

|

|

|

Twerk from Home posted: Intel can't afford to miss the boat again. They fucked up on mobile, they fucked up on wireless chipsets, Optane, and even flash memory. There's no way that Intel can continue to extract fat margins from x86 server chips in the future, because competition from AMD and ARM will prevent Intel from being able to keep high prices.

This is true, but Intel is really good at making really poor decisions, and big companies love short-term thinking.

|

|

|

|

Paul MaudDib posted: (except for that tiny national-security tsmc fab that will be years behind even intel let alone TSMC taiwan when it comes online)

Are you talking about the Arizona fab? Because TSMC pivoted to turning it into a leading-edge fab and it's further along than has been publicly indicated.

|

|

|

|

TheFluff posted: This is true, but Intel is really good at making really poor decisions, and big companies love short-term thinking.

Also being godawful at timing, both Optane and GPUs came to market just as the prices of DDR and other graphics cards fell.

|

|

|

|

Optane failed for a variety of reasons, from business decisions to technical issues to bad luck/timing. Even if they had managed to stick to the originally planned schedule, and if it had managed to scale and yield the way they hoped it would, CXL may have still killed it, and Optane on CXL is a much less interesting business proposition for Intel.

|

|

|

|

icantfindaname posted: I haven't been paying attention, Intel entered the consumer desktop GPU market to compete with AMD and Nvidia? Why? That seems like a gigantic investment for a limited market. Are they really betting on GPUs for buttcoin mining and AI stuff being a massive growth market?

https://i.imgur.com/zXNExip.mp4 https://www.youtube.com/watch?v=j6kde-sXlKg

|

|

|

|

F.D. Signifier posted: It's gonna take them a while to catch up in the consumer GPU market even with their resources

I feel like you didn’t watch that LTT video.

e: like most of that video is Luke and Linus going “yeah stuff was broken but they are obviously working very hard to fix it, even weird shit like active cables not working” and ending on a note of “most people seem to be happy with their cards and for good reason” which is the exact opposite of what would prove your point

Arivia fucked around with this message at 00:52 on Jan 31, 2023

|

|

|

Ordered an Arc A750, with the A770 being constantly out of stock. They appear to be selling well enough. Please keep the updates and fixes rolling. I need an affordable option in this fucked up GPU market.

|

|

|

|

Are the Arc drivers completely from scratch? The UHD/Iris stuff seemed to work fine without any weird issues. Like I could run Cyberpunk slowly, but without crashes or glitches, on an 11th gen laptop chip.

|

|

|

|

i forgot where they said it, but someone from intel said the arc implementation of directx was built from scratch, not derived from their old iGPU driver

|

|

|

|

mobby_6kl posted: Are the Arc drivers completely from scratch? The UHD/Iris stuff seemed to work fine without any weird issues. Like I could run Cyberpunk slowly, but without crashes or glitches, on an 11th gen laptop chip.

pretty sure they were basically from scratch.

|

|

|

|

TheFluff posted: As several people have mentioned they're desperate for a datacenter GPU, but I'm still pretty sure the desktop GPUs will be shitcanned sooner rather than later. The driver development costs have to be astronomical and getting to a point where the cards are actually competitive is going to require years and years of running at a massive loss.

Also, availability problems have almost entirely passed; the sole reason you'd buy an Intel GPU right now is if you needed a cheap card that can do AV1 encoding. At the time of the initial announcement it felt like Intel were trying to appear as the savior of the GPU industry when everything was out of stock, but by the time their cards came out there were better AMD cards available for less. Plus there are the driver issues, and everyone expects them to abandon the whole line within a few years.

|

|

|

|

It's difficult to put a lot of faith in Intel committing to these GPUs when they're bleeding so much money, and given their prior track record. Even if the team at Intel is 100% committed and trying their best in good faith, it doesn't matter if leadership / shareholders axe them. I would argue, however, that Intel should probably try to sell that division off, potentially instead of doing layoffs, because it would do more for their share value.

|

|

|

|

I read the posts about Intel being on the back foot, bleeding money, etc., but what does that really mean? Like, I'm pretty sure they're Too Big To Fail, so what happens if Intel stays on a downward trajectory? We get stagnation of products? Harder availability of products as they can't produce as much?

mobby_6kl posted: There's a review of an Alder Lake-N N100 PC, the guy is a bit awkward but it's a decent overview.

thanks for this, btw - the benchmark numbers were interesting and I think it might be a worthy replacement for my old 2016 i3

|

|

|

|

gradenko_2000 posted: I read the posts about Intel being on the back foot, bleeding money, etc., but what does that really mean? Like, I'm pretty sure they're Too Big To Fail, so what happens if Intel stays on a downward trajectory? We get stagnation of products? Harder availability of products as they can't produce as much?

Realistically we get some type of protective government tariffs or subsidies, and also Intel becomes just one of a bunch of options for both client and server CPUs. Honestly, the 2nd part of that is already happening. AMD is eating Intel's lunch in the server space, and Ampere Altra or Amazon's own ARM designs are really competitive too. In the client space, phones and tablets sell much more than laptops do, and ARM Chromebooks are a small but growing slice of the market. Intel's stagnation of products already happened, that's what we call 2015-2021.

|

|

|

|

I wouldn't be surprised if they slide into a state sort of similar to Bulldozer-era AMD, selling shitty products and bleeding money for a number of years until they manage to restructure the organization into a less dysfunctional state. Big orgs have a ton of inertia though and take forever to turn around. One of the reasons I have so little faith in the desktop GPUs is that when you're under pressure to restructure and stop bleeding money it's really hard for leadership to defend a long-term project like that even if it's critically important to the future of the business, because everyone in the org will be trying to save their own ass, and in doing so they'll attempt to get anything that isn't their own project killed.

|

|

|

|

I'm an idiot so I bought an Arc A770 LE. I'll report back when I'm really pissed off.

|

|

|

|

I have a theory that Intel killed a bunch of their lines over and over again (Phi, Optane, three or four network companies, etc.) because they weren’t as profitable as the Xeon lines, and because of other internal politics, but now that they’re eating shit on Xeon the GPUs are critical to high-margin growth and might have a longer runway. OTOH rumors are that the third gen consumer GPU dev is cancelled (the A770 being first gen).

|

|

|

|

I can see them killing the consumer GPUs if they think the driver situation is completely desperate but it'd be pretty stupid to bail on a strategically important segment. So yeah I can see some idiot shareholders pressuring them into that.

|

|

|

|

what are cloud companies likely to do for the switchover to av1 encoding? just roll their own hardware without using nvidia/amd/intel, or what's the move?

|

|

|

|

I'm going to cry a lot if they shitcan the damn thing after I bought it.

|

|

|

|

|


|

kliras posted: what are cloud companies likely to do for the switchover to av1 encoding? just roll their own hardware without using nvidia/amd/intel, or what's the move?

On-device encoding is becoming the rage, with the controllers on NVMe devices able to do differing levels of encoding/decoding on the fly and in the background. A lot of custom options exist already, it’s pretty neat.

|

|

|