|

Each one of your inputs should have a unique name that you specified on the front end (the input's name attribute; an id on its own is not submitted). When you POST data to an endpoint by submitting a form, your browser grabs everything you put in the form and sends it to the server (Starlette in this case) in a request. Each named field in the form, visible or not, gets pushed up in that request as a key-value pair. The key will be the name you gave your input, and the value will be whatever's been entered on the page. When you handle that request in Starlette, the form data is available through the request parameter of your handler function. Each web framework is a little different, but that request object should contain everything that was submitted in the form somewhere within it. Your inputs will likely be stored in a dict-like object, with the key matching whatever the name was on the front end.
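A minimal, stdlib-only sketch of the key-value idea above, assuming a hypothetical form with two fields named `username` and `token` (both names are made up for illustration). It builds the body a browser would send for a plain form POST and parses it back the way a server would. In Starlette, the same pairs should be available from `await request.form()` inside an async handler.

```python
from urllib.parse import parse_qs, urlencode

# Hypothetical form fields: each key is the name attribute of an input.
submitted = {"username": "sam", "token": "abc123"}  # "token" could be a hidden input

# The body of an application/x-www-form-urlencoded POST.
body = urlencode(submitted)

# Roughly what a web framework does before handing you the form data.
# parse_qs returns a list per key because a name can appear more than once.
form = {key: values[0] for key, values in parse_qs(body).items()}

print(body)
print(form["username"])
```

The point is only that the handler looks values up by field name; nothing here is specific to Starlette.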

|

|

|

|

|

| # ? May 14, 2024 08:36 |

|

nullfunction posted: Each one of your inputs should have a unique name that you specified on the front end. When you POST data to an endpoint by submitting a form, your browser grabs everything you put in the form and sends it to the server (Starlette in this case) in a request. Each named field in the form, visible or not, gets pushed up in that request as a key-value pair. The key will be the name you gave your input, and the value will be whatever's been entered on the page.

ah, ok.

|

|

|

|

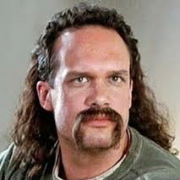

What I'm using to generate text from the GPT models I trained works, but it needs to be fixed in a couple of ways and I don't know how; I'm not good at this. Most of this was written by another guy on SA and I'm just tweaking it to get it to do what I want. code: user2: daosays: formatting Like this; code:

|

|

|

So, this is the example given by Starlette on requests: code:

|

|

|

|

samcarsten posted: So, this is the example given by Starlette on requests: code:

code: code: code:

|

|

|

|

Alright, I've put this together and think it is correct. Please advise if you see anything wrong. code:

|

|

|

|

Just what do you think content contains?

|

|

|

|

Jabor posted: Just what do you think content contains?

key:value pairs. It's a dictionary, right? I'm trying to get the value input into a text box. The name of the object is the key, and the content of the text box is the value.

|

|

|

|

Look at the line where you assign a value to content.

|

|

|

|

marshalljim posted: Look at the line where you assign a value to content.

Am I right in saying it's only reading the URL?

|

|

|

|

So I'm trying my hand at this. I'm trying to hoover real estate addresses, prices, etc. from this site: https://www.royallepage.ca/en/on/toronto/properties/

Here's what I have so far: code:

Now if I do code:

I haven't been slamming the site with requests, so I don't think they're blocking me. Thoughts? Ideas?

|

|

|

|

If I remember right, the items returned by find_all have to be unpacked; try calling .string on each result.

|

|

|

|

Seventh Arrow posted: I haven't been slamming the site with requests, so I don't think they're blocking me. Thoughts? Ideas?

The problem is how you've specified the classes on your find_all. What you've written asks for all <li> elements that have a class that matches that whole big long string of classes. The only place I see those classes is applied to the top-level HTML element, not to any of the <li> elements. Digging around in the DOM, I was able to find the <li>s that correspond to the listings, they look like this: code: Python code:
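To illustrate the class-matching point, here is a small sketch that swaps BeautifulSoup for the standard library's `html.parser` so it runs with no extra installs. The markup and class names (`card`, `card--listing`, `nav-item`) are made up, since the real listing classes aren't shown in the thread. As far as I know, passing a single class name to BeautifulSoup's `find_all("li", class_=...)` behaves like the token check below.

```python
from html.parser import HTMLParser

# Hypothetical markup: the long class string sits on <html>, not on the <li>s.
HTML = """
<html class="js flexbox canvas">
  <ul>
    <li class="card card--listing">123 Example St</li>
    <li class="card card--listing featured">456 Sample Ave</li>
    <li class="nav-item">About</li>
  </ul>
</html>
"""


class ListingParser(HTMLParser):
    """Collect the text of every <li> whose class list contains wanted_class."""

    def __init__(self, wanted_class):
        super().__init__()
        self.wanted_class = wanted_class
        self.in_match = False
        self.matches = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            classes = (dict(attrs).get("class") or "").split()
            # Match on one class token, not on the whole class string.
            self.in_match = self.wanted_class in classes

    def handle_endtag(self, tag):
        if tag == "li":
            self.in_match = False

    def handle_data(self, data):
        if self.in_match and data.strip():
            self.matches.append(data.strip())


parser = ListingParser("card--listing")
parser.feed(HTML)
print(parser.matches)
```

Searching for the full class string copied from the `<html>` element would match none of these `<li>` elements, which is the symptom described above.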

|

|

|

|

Thanks guys, I will take a look into that.

|

|

|

|

If you don't care about speed, I'd suggest scraping with Selenium in a Chrome browser. Many, many websites have various anti-scraping mechanisms, and when they see your user agent is python/requests they're gonna say lol, no. Selenium is easier for a newbie to debug because you can see an actual browser window and grab XPaths from that window, and while it'll still trigger some anti-scraping things, you'll at least see that happening. You can still use BeautifulSoup to parse the HTML if you really want.

|

|

|

|

samcarsten posted: Am I right in saying it's only reading the URL?

Yeah, content is a string that you created from the incoming request. Try interrogating the request object to see what else you can dig out of it.

|

|

|

|

I'm looking to replicate OpenSSL aes-256-cbc decryption of a .zip file using an RSA .pem public key file. We've got a script that just runs the command line executable and that works fine, but they want to port it to an AWS Lambda function. I've looked over a variety of pyopenssl and pycryptodome examples and I just cannot seem to dig up a working example that properly converts the .pem format to AES with the right results. Has anyone had any experience they can share on this?

|

|

|

|

I don't, and this is an unsatisfying answer, but what if you just deploy a container image that runs that script?

|

|

|

|

QuarkJets posted: I don't, and this is an unsatisfying answer, but what if you just deploy a container image that runs that script?

It's not out of the realm of possibility. I've managed to convert all their other processes to Python functions or classes so at least as a learning exercise I'd like to make it happen, but I'm not above taking the easy way out.

|

|

|

|

I'd like to read streaming data in with Python and have it live update in a line graph. Does anyone have experience with this? Plotly and Matplotlib have animate functions, but I worry that my use case is a little outside what they normally handle. The Plotly examples I see use static buffers, and I need to handle streaming data.

|

|

|

|

A very common way to handle streaming data is to accumulate it into a buffer, do what you need to with it, rinse and repeat when you get the next chunk of streaming data. Plotly doesn't care how you get the data you want it to graph, nor should it care. Plugging the two blocks together is your job as a programmer.
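A rough sketch of the accumulate-then-draw loop described above, using only the standard library. The `stream` generator and `redraw` function are hypothetical stand-ins for the real data source and for whatever Plotly or Matplotlib update call ends up being used; the window size of 50 is arbitrary.

```python
from collections import deque
import random


def stream(n_chunks, chunk_size, seed=0):
    """Hypothetical stand-in for a live data source: yields lists of readings."""
    rng = random.Random(seed)
    for _ in range(n_chunks):
        yield [rng.random() for _ in range(chunk_size)]


def redraw(points):
    """Placeholder for the plotting call; here it only reports the window size."""
    return len(points)


WINDOW = 50  # keep only the most recent 50 readings on screen
buffer = deque(maxlen=WINDOW)  # old readings fall off the left automatically

frames = []
for chunk in stream(n_chunks=8, chunk_size=10):
    buffer.extend(chunk)           # accumulate the new chunk
    frames.append(redraw(buffer))  # hand the current window to the plot

print(frames)
```

A bounded `deque` is one way to keep the plotted window from growing forever; an unbounded list works too if the full history is wanted.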

|

|

|

|

CarForumPoster posted: If you don't care about speed, I'd suggest scraping with Selenium in a Chrome browser. Many, many websites have various anti-scraping mechanisms, and when they see your user agent is python/requests they're gonna say lol, no.

I did use BeautifulSoup, but maybe I'll use Selenium next time. This is what I came up with: code:

The rest of its suggestions were pretty lol, but I did like the idea of using "time.sleep(1)" to keep the script from nuking the website (if in fact that's what it's actually doing; I've never used time.sleep before). Next step is to try using pyspark to clean the data... after I get my hands on every pyspark tutorial I can find.
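For what it's worth, `time.sleep(1)` just pauses the script for one second, so putting it between requests does space them out. A minimal sketch, assuming a hypothetical `fetch` function standing in for the real `requests.get(...)` call so it runs offline, and using example.com URLs rather than the actual site:

```python
import time


def fetch(url):
    """Hypothetical stand-in for requests.get(url).text, so the sketch runs offline."""
    return f"<html>{url}</html>"


def polite_fetch_all(urls, delay=1.0):
    """Fetch each URL in turn, pausing between requests."""
    pages = []
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay)  # blocks this script for `delay` seconds
        pages.append(fetch(url))
    return pages


urls = [f"https://example.com/page/{n}" for n in range(1, 4)]
start = time.monotonic()
pages = polite_fetch_all(urls, delay=0.1)  # short delay just for the demo
elapsed = time.monotonic() - start
print(len(pages), round(elapsed, 1))
```

Three pages mean two pauses, so the run takes at least roughly twice the delay.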

|

|

|

|

More dumb newbie questions. Say I've got the following dict: code:

gradelist = list(student_grades.values()) gives me a list of lists, but how do I iterate through these to grab what I actually want? I'm guessing I'm not describing this terribly well; it's breaking my brain a little.

|

|

|

|

student_grades['Andrew'][4] will get you that list item. If you want to loop through each item in the dictionary, the official docs have some guidance: https://docs.python.org/3/tutorial/datastructures.html#looping-techniques
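A small sketch of both ideas, with made-up scores since the actual dict isn't shown in the thread (only the student names come from the posts):

```python
# Hypothetical scores; the real dict from the post is not shown in the thread.
student_grades = {
    "Andrew": [50, 60, 70, 80, 90],
    "Nisreen": [80, 70, 90, 60, 100],
    "Alan": [90, 100, 80, 100, 80],
}

# Direct lookup: key first, then list index (index 4 is the fifth grade).
fifth = student_grades["Andrew"][4]
print(fifth)

# items() yields (key, value) pairs, so each student's list arrives with its name.
totals = {}
for name, grades in student_grades.items():
    totals[name] = sum(grades)

print(totals)
```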

|

|

|

|

The thing that I think is confusing me is how to loop through each student, grab the nth item, and output that to a new list for each n. The value being a list was initially what I thought was throwing me, but I think I'm misunderstanding something more basic.

|

|

|

Do you mean you want to make a list containing grade #n from every list? That can just be code:
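A minimal sketch of that idea as a list comprehension, again with made-up scores because the real dict isn't shown here:

```python
# Hypothetical scores for illustration only.
student_grades = {
    "Andrew": [50, 60, 70, 80, 90],
    "Nisreen": [80, 70, 90, 60, 100],
    "Alan": [90, 100, 80, 100, 80],
}

n = 0  # which grade to pull from every student's list (0 is the first exam)

# One entry per student: the nth element of each value in the dict.
nth_grades = [grades[n] for grades in student_grades.values()]
average = sum(nth_grades) / len(nth_grades)

print(nth_grades)
print(round(average, 1))
```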

|

|

|

|

Try writing it out as a simple loop first: Python code:

If this data was ingested into a pandas dataframe it'd be even easier, but this implementation requires no new dependencies. There are more succinct implementations, but this should help you figure out how to cleanly iterate over these objects.

|

|

|

|

Kinda, yeah. I want to grab each student's grades for the first exam and find the average grade for that exam, then do that for each exam. I'm just trying to figure out in general how I could grab the first item from multiple lists and create a new list with that data, and do that for however many items exist in the list. It seems like once I've got that data out and in their own lists I can mutilate it however I need in order to get something out, in this case averages. I've come up with: code:

|

|

|

Soylent Majority posted: Kinda, yeah. I want to grab each student's grades for the first exam and find the average grade for that exam, then do that for each exam. I'm just trying to figure out in general how I could grab the first item from multiple lists and create a new list with that data, and do that for however many items exist in the list. It seems like once I've got that data out and in their own lists I can mutilate it however I need in order to get something out, in this case averages.

Would something like this work? I've just typed it up here, haven't run it myself. Python code:

|

|

|

|

well gently caress that doesn't work after all, those last 4 averages can't be right. Nope, I'm an idiot who didn't finish copy/pasting properly.

Write a program that uses the keys(), values(), and/or items() dict methods to find statistics about the student_grades dictionary. Find the following:

Print the name and grade percentage of the student with the highest total of points.

Find the average score of each assignment.

Find and apply a curve to each student's total score, such that the best student has 100% of the total points.

code:

Highscore is Tricia: 94.8

Average score for grade1: 82.8

Average score for grade2: 80.4

Average score for grade3: 80.4

Average score for grade4: 80.4

Average score for grade5: 80.4

{'Andrew': 0.6265822784810127, 'Nisreen': 0.7827004219409283, 'Alan': 0.9388185654008439, 'Chang': 0.8881856540084389, 'Tricia': 1.0}

|

|

|

OK, here's the slightly unfucked version that I think meets the requirements as written, but it's hardcoded to only work with up to 5 grades. Still trying to figure out how I'd allow it to be more flexible; somehow create the gradex lists based off the count of grades in one of the dict values? Python code:

Highscore is Tricia: 94.8

Average score for grade1: 82.8

Average score for grade2: 81.8

Average score for grade3: 86.0

Average score for grade4: 70.6

Average score for grade5: 80.4

Andrew's curved score is 62.66%

Nisreen's curved score is 78.27%

Alan's curved score is 93.88%

Chang's curved score is 88.82%

Tricia's curved score is 100.0%
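The curve step on its own can be sketched like this. The scores are made up (the real dict isn't shown in the thread), so the numbers won't match the output above; the idea is just to divide every total by the best total.

```python
# Hypothetical scores for illustration only.
student_grades = {
    "Andrew": [50, 60, 70, 80, 90],
    "Nisreen": [80, 70, 90, 60, 100],
    "Alan": [90, 100, 80, 100, 80],
}

# Total points per student.
totals = {name: sum(grades) for name, grades in student_grades.items()}

# The student with the highest total defines 100%.
best_name = max(totals, key=totals.get)
best_total = totals[best_name]

# Curved score: each total as a fraction of the best total.
curved = {name: total / best_total for name, total in totals.items()}

for name, fraction in curved.items():
    print(f"{name}'s curved score is {fraction:.2%}")
```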

|

|

|

Soylent Majority posted: OK, here's the slightly unfucked version that I think meets the requirements as written, but it's hardcoded to only work with up to 5 grades. Still trying to figure out how I'd allow it to be more flexible; somehow create the gradex lists based off the count of grades in one of the dict values?

If you can trust that all the lists are the same length, you can do something such as enumerate over the first student, using that index as the key to handle all the others. Python code:

Edit: vvvv - Gah, I knew there was a simpler tool for this; just don't have to do this often enough to remember.
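A sketch of that approach, assuming every student has the same number of grades and using made-up scores. The number of averages follows from the length of the first student's list, so nothing is hardcoded to five.

```python
# Hypothetical scores for illustration only; all lists are the same length.
student_grades = {
    "Andrew": [50, 60, 70, 80, 90],
    "Nisreen": [80, 70, 90, 60, 100],
    "Alan": [90, 100, 80, 100, 80],
}

# Use the first student's list only to learn how many grades there are.
first = next(iter(student_grades.values()))

averages = []
for i, _ in enumerate(first):
    # Grade number i from every student.
    column = [grades[i] for grades in student_grades.values()]
    averages.append(sum(column) / len(column))

for i, avg in enumerate(averages, start=1):
    print(f"Average score for grade{i}: {avg:.1f}")
```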

|

|

|

No mention of zip() as a way to grab items in order from a collection of iterables? Python code:

You can also use itertools.zip_longest() if you wanted to handle replacing missing grades with zeroes, for example: Python code:
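A short sketch of both calls with made-up scores, where one student is deliberately missing a grade so the difference between `zip()` and `itertools.zip_longest()` shows up:

```python
from itertools import zip_longest

# Hypothetical scores; Andrew is missing his fifth grade on purpose.
student_grades = {
    "Andrew": [50, 60, 70, 80],
    "Nisreen": [80, 70, 90, 60, 100],
    "Alan": [90, 100, 80, 100, 80],
}

# zip(*lists) pairs up the 1st items, the 2nd items, and so on.
# It stops at the shortest list, so the fifth grade is dropped here.
columns = list(zip(*student_grades.values()))

# zip_longest keeps going and fills the gaps, here with 0.
padded = list(zip_longest(*student_grades.values(), fillvalue=0))

averages = [sum(column) / len(column) for column in padded]

print(len(columns), len(padded))
print(padded[4])
print(averages[4])
```

Whether a missing grade should count as zero is a grading decision, not something the code can settle.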

|

|

|

|

nullfunction posted: No mention of zip() as a way to grab items in order from a collection of iterables?

hot drat, zip() does exactly what I was aiming for there, thanks

|

|

|

|

I use a JupyterHub environment with my team members, but some of them are just learning Python and coding in general. I'd like to make the onboarding a bit easier / automated. For example, right now they need to use the terminal and bash to create SSH profiles so they can connect to GitLab, and I'd like them to have a curated conda env kernel that has all the stuff they need installed. What's the best way to do this? Can I save a conda env config in a central place in JupyterHub so others can use it? I've seen some onboarding-type .sh bash scripts that look interesting; anyone got any good tips on this? I'm also fairly new to Python and the coding world.

|

|

|

|

Oysters Autobio posted: I use a JupyterHub environment with my team members, but some of them are just learning Python and coding in general. I'd like to make the onboarding a bit easier / automated. For example, right now they need to use the terminal and bash to create SSH profiles so they can connect to GitLab, and I'd like them to have a curated conda env kernel that has all the stuff they need installed.

FWIW: Another way to tackle this, if automating it isn't simple, is to try and write clear, easy-to-follow documentation for how to perform those tasks. If they need to do similar tasks in the future, this isn't a bad way to be like 'hey here's some stuff and why we're doing it'.

|

|

|

|

I keep having SQL questions which I cannot seem to locate the answer to:

This code works just fine and returns the blood glucose values which fall in the 6 hour interval: Python code: Python code:

I know I can write this query using f-strings (because I am the one writing the query, so there is not any user input to worry about sanitizing): Python code:

|

|

|

|

Jose Cuervo posted: I keep having SQL questions which I cannot seem to locate the answer to:

Not even the SQL thread?

|

|

|

|

I just finished building a project for my class; it's essentially a Tkinter interface with a bunch of inputs for my music collection that saves the inputs to a .json file. I'm having a go at using PyVis to map out some of the relationships of the items in the collection. Here's my code: Python code: code:

|

|

|

|

|

|

|

Jose Cuervo posted: I keep having SQL questions which I cannot seem to locate the answer to:

Just guessing, but I bet your SQL driver is (correctly, imo) not interpolating the tss variable here: code: code:
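The original query isn't shown here, so this is only a guess at the shape of the problem, sketched with the standard library's `sqlite3` (the actual database and driver may differ). The `glucose` table, its columns, and the timestamps are made up. The point is that a `?` inside a quoted SQL string is literal text rather than a placeholder, so the whole interval modifier gets built in Python and bound as a single parameter.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE glucose (ts TEXT, value REAL)")  # hypothetical schema
conn.executemany(
    "INSERT INTO glucose VALUES (?, ?)",
    [
        ("2023-02-20 01:00:00", 5.4),
        ("2023-02-20 05:30:00", 6.1),
        ("2023-02-20 09:00:00", 7.0),
    ],
)

start = "2023-02-20 00:00:00"
hours = 6

# Writing '+? hours' in the SQL would not work: the ? sits inside a string
# literal. Build the modifier here and bind it like any other value.
modifier = f"+{hours} hours"

rows = conn.execute(
    "SELECT ts, value FROM glucose "
    "WHERE ts >= ? AND ts < datetime(?, ?) ORDER BY ts",
    (start, start, modifier),
).fetchall()

print(rows)
```

The f-string here only formats a value that is then passed as a parameter; the SQL text itself stays fixed.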

|

|

|