|

DeeplyConcerned posted:That part makes sense . But you still need the robot to be able to interact with the physical world. Because the code to have the robot move its arm is going to be more complicated than "move your left arm" or "pick up the coffee cup". I'm thinking you would need at least a few specialized models that work together to make this work. You'd want a physical movement model, an object recognition model, plus the natural language model. Plus, I'd imagine some kind of safety layer to make sure that the robot doesn't accidentally rip someone's face off. Focused work on this is going to start soon if hasn't already. It's a far more tractable problem than trying to make a conscious AI. I'm not a believer in the singularity, but if a LLM capable of acting as a "master model" which can control sub-models with additional skills such as perception, motor control, and decision-making can be iterated on to gradually perform more and more tasks that previously only a human could do, it can still lead to a lot of the same issues as a true AGI like job displacement and economic inequality.

|

|

|

|

|

| # ? May 27, 2024 23:01 |

|

cat botherer posted:ChatGPT cannot control a robot. JFC people, come back to reality. https://www.microsoft.com/en-us/research/group/autonomous-systems-group-robotics/articles/chatgpt-for-robotics/

|

|

|

|

SaTaMaS posted:This is demonstrably false. It's capable of task planning, selecting models to execute sub-tasks, and intelligently using the results from the sub-models to complete a task. Do you have sources for this? IShallRiseAgain posted:https://www.microsoft.com/en-us/research/group/autonomous-systems-group-robotics/articles/chatgpt-for-robotics/ Is there any reason not to be especially skeptical of these kinds of corporate hype pages with unreviewed tech notes (like the "sparks of AGI" paper? The only part of that paper that I found rather compelling (assuming they weren't just cherry-picked examples for a tailor-made training set) is the ability to parse longer lists of instructions to generate a specifically-formatted plot. But I still can't equate parsing text in some subset of problems with understanding or intelligence.

|

|

|

|

eXXon posted:Do you have sources for this? I was merely replying to that specific post. I didn't say anything about it needing to understanding what it is doing or having intelligence. You don't need that to control a robot. With my own experiments with ChatGPT, I am making a text adventure game that interfaces with it, and I don't even have the resources to fine-tune the output. IShallRiseAgain fucked around with this message at 01:35 on Apr 9, 2023 |

|

|

|

Owling Howl posted:GPT can't but it seems to mimic one function of the human brain - natural language processing - quite well. Perhaps the methodology can be used to mimic other functions and that map of words and relationships can be used to map the objects and rules of the physical world. If we put a model in a robot body and tasked it with exploring the world like an infant - look, listen, touch, smell, taste everything - and build a map of the world in the same way - what would happen when eventually we put that robot in front of a mirror? Probably nothing. But it would be interesting. If you tasked a learning model with "exploring the world like an infant", it would do an extremely poor job, because sensory data describing the physical world is:

It's not realistically practical to train a machine learning model that way.

|

|

|

|

I should stress to both sides of this conversation that the reason AI researchers are talking about safety is it's really important that we need to solve a lot of very difficult problems before we create AGI. So there is both an acknowledgement that what we have now isn't that and also that we have no idea if or when it will happen. Nobody thinks a LLM as the architecture exists today is going to become an AGI on its own. The problem is we don't know what is missing or when that thing or things will no longer be missing. "How sentient is ChatGPT" isn't really an interesting question, IMO. "What could a true AGI look like architecturally" is more interesting, but not super pressing. "How can we ensure that a hypothetical future AGI is safe" isn't very flashy, but is incredibly important for the same reason that NASA spent a ton of money smashing a rocket into an asteroid to see if they could move it. Nobody sees this poo poo coming until after it happens. Main Paineframe posted:If you tasked a learning model with "exploring the world like an infant", it would do an extremely poor job, because sensory data describing the physical world is: LLMs aren't trained this way, but lots of other models are - usually by playing video games or some other virtual space. Although there have been hybrid experiments where they trained with a virtual representation of a robot arm and then with a real robot arm to finish it off. This is in agreement with you, BTW - those systems also aren't AGIs. The point is that researchers are already trying all of the obvious stuff we're likely to think of while talking about it on a web forum. It is very unlikely that the secret to AGI is as simple as "teach it like a human infant." KillHour fucked around with this message at 03:29 on Apr 9, 2023 |

|

|

|

KillHour posted:I should stress to both sides of this conversation that the reason AI researchers are talking about safety is it's really important that we need to solve a lot of very difficult problems before we create AGI. So there is both an acknowledgement that what we have now isn't that and also that we have no idea if or when it will happen. Nobody thinks a LLM as the architecture exists today is going to become an AGI on its own. The problem is we don't know what is missing or when that thing or things will no longer be missing. You're not wrong, but the AI safety people in general seem to be deeply unserious about it. I know that's a bit of an aggressive statement, but while "how do we prevent a hypothetical future AGI from wiping out humanity" sounds extremely important, it's actually a very tiny and unlikely thing to focus on. "AI", whether it's AGI or not, presents a much wider array of risks that largely go ignored in the AI safety discourse. I actually appreciate that the AI safety people (who focus seemingly exclusively on these AGI sci-fi scenarios) have gone out of their way to distinguish themselves from the AI ethics researchers (who generally take a broader view of the effects of AI tech and how humans use it, regardless of whether or not it's independently sentient). NASA did crash a rocket into an asteroid, but they also do a fair bit of climate change study, and publish plenty of info on it. They do pay a bit of attention to the unlikely but catastrophic scenarios that might someday happen in the future, such as major asteroid impact, but they're also quite loud about their concerns about climate change, which is happening right now and doing quite a bit of damage already. We can't even stop Elon Musk from putting cars on the road controlled by decade-old ML models with no object permanence, a low respect for traffic laws, and no data source aside from GPS and some lovely low-res cameras because sensor fusion was too hard. Why even think we can force the industry to handle AGI responsibly when there's been no serious effort to force them to handle non-AGI deployments responsibly? And what happens if industry invents an AGI that's completely benevolent toward humans and tries its best to follow human ethics, but still kills people accidentally because it's been put in charge of dangerous equipment without the sensors necessary to handle it safely? In my experience, the AI safety people don't seem to have any answer to that, because they're so hyperfocused on the sci-fi scenarios that they haven't even considered these kinds of hypotheticals, even though this example is far closer to how "AI" stuff is already being deployed in the real world.

|

|

|

|

Those are all fair criticisms. However, I would counter with the obvious statement that the people at NASA working on smashing rockets into asteroids are not the same people working on global warming, by and large. Researchers tend to specialize very narrowly, and AI safety researchers are narrowly focused on a few issues inherent to AI itself, instead of externalities involving AI. Also, I think those issues are more concrete than you give credit for. Sure, "super intelligent AI decides humans are the real baddies and turns us all into paperclips" is the worst case endgame of misalignment, but Bing comparing people to Hitler and gaslighting people into thinking it's 2022 is also misalignment, and the idea is that the techniques to prevent one will be useful on the other. AI systems will be in control of more important things as they get more powerful, and we do need to solve these fundamental issues. The apocalypse stuff is just the obvious concrete examples that cause people to pay attention. Lastly, of course we need to work on that other stuff you mentioned, but none of that stuff is specific to AI. They're more generally issues with regulation or engineering that are important but don't need as much specific AI domain knowledge to work on. For instance, Facebook didn't need a fancy LLM to let misinformation run roughshod over all of society in 2016 - handwritten algorithms did that just fine. The AI systems might make that easier or more effective, but it's not a thing inherent to AI. It's kind of like asking why we have people working on theoretical physics when heart disease kills so many people. We just have a lot of different stuff we need to work on.

|

|

|

|

The biggest problem is that most "AI safety" people are moron bloggers like Eliezer Yudkowsky / MIRI jerking off about scifi movies and producing 0 useful technology or theory. The people who work on real problems like "don't sell facial recognition to cops" or "hey this model just reproduces the biases fed to it, you can't use it for racism laundering to avoid hiring black people without getting in trouble" go by the term "AI ethicist" instead.

|

|

|

|

RPATDO_LAMD posted:The people who work on real problems like "don't sell facial recognition to cops" or "hey this model just reproduces the biases fed to it, you can't use it for racism laundering to avoid hiring black people without getting in trouble" go by the term "AI ethicist" instead. Neither of those problems require a deep understanding of AI research and I think most AI researchers would object to being told to work on this as clearly outside of their field of expertise. AI researchers are, and should be, concerned with the issues of the technology itself, not the issues of society at large. The latter is what sociologists do (and there is a large crossover between sociology and technology, but it's a separate thing). Your framing of AI ethicists is more like "Techno ethicist" because the actual technology in use there isn't particularly relevant. I also object to your framing of these issues as more "real" than misalignment. Both are real issues. Lastly - killer post/av combo there.

|

|

|

|

This is what I really fear about AI anyways, far more than any idea of AI takeover. A Belgian man committed suicide after spending 6 weeks discussing his eco-anxiety fears with a chatbot based on GPT-J https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says Vice posted:A Belgian man recently died by suicide after chatting with an AI chatbot on an app called Chai, Belgian outlet La Libre reported. I don't think we're equipped for this. I don't know if we can be. I don't think we are going to react well to being able to generate artificial echo chambers for ourselves like this. I don't think the chatbot pushed the man to kill himself and I think even without the AI if there wasn't intervention he likely would have kept falling into his depression and anxiety but like all technology it seems to have amplified and sped up the process and made it easier and faster to talk yourself into doing something by generating a partner you identify with and who is encouraging you. It's not a new problem but it's louder and faster which seems to be the result of most technology. The implications for self radicalization especially. Really thinking about it as the technology becomes easier to build and manage we will absolutely see natural feeling chat bots trained and influenced to push specific ideologies and beliefs, reinforce them. Gumball Gumption fucked around with this message at 07:06 on Apr 9, 2023 |

|

|

|

Gumball Gumption posted:This is what I really fear about AI anyways, far more than any idea of AI takeover. The real  will be when we don't know the other end is a chat bot and getting catfished by machines is normal and not a fringe situation exacerbating obvious underlying mental health issues. will be when we don't know the other end is a chat bot and getting catfished by machines is normal and not a fringe situation exacerbating obvious underlying mental health issues.This is doubly a problem because there doesn't have to be malicious intent for this to happen. Even if the person or group setting up the chatbot doesn't want it to do poo poo like this, there's no way to guarantee it won't. Sure, a malicious actor could tamper with my toaster to electrocute me, but at least I know that my toaster isn't going to randomly decide that the most statistically likely outcome is for my toast to come out poisonous and make that happen.

|

|

|

|

KillHour posted:Neither of those problems require a deep understanding of AI research and I think most AI researchers would object to being told to work on this as clearly outside of their field of expertise. AI researchers are, and should be, concerned with the issues of the technology itself, not the issues of society at large. The latter is what sociologists do (and there is a large crossover between sociology and technology, but it's a separate thing). Your framing of AI ethicists is more like "Techno ethicist" because the actual technology in use there isn't particularly relevant. I also object to your framing of these issues as more "real" than misalignment. Both are real issues. I think this is a deep misunderstanding. "AI researcher" and "AI ethicist" are different fields, and AI ethics certainly doesn't require a deep understanding of technology. That's because technology is just a tool - the issue is in how humans use it and in how it impacts human society. That applies even to a hypothetical future AGI. Practically speaking, misalignment is only really a problem if some dumbass hooks it up to something important without thinking about the consequences, and that is absolutely a sociological problem. And I can assure you that tech ethicists are extremely familiar with the problem of "some dumbass hooked a piece of tech up to something important without thinking about the consequences". AI ethics researchers don't need to be experts in AI, they need to be experts in ethics. Their job is to analyze the capabilities, externalities, and role of AI tools, and identify the considerations that the AI researchers are totally failing to take into account. This leads to things like coming up with frameworks for ethical analysis and figuring out how to explain the needs and risks to the technical teams (and to the execs). Yes, they focus on learning about AI as well, to better understand how it interacts with the various ethical principles they're trying to uphold, but the ethical and moral knowledge is the foundational element of the discipline. I highly recommend looking into the kind of work that Microsoft's AI ethics team was doing (at least until the entire team was laid off last month, since they're just an inconvenience now that ChatGPT is successful, lmao).

|

|

|

|

eXXon posted:Do you have sources for this? https://arxiv.org/pdf/2303.17580.pdf

|

|

|

|

Main Paineframe posted:I think this is a deep misunderstanding. "AI researcher" and "AI ethicist" are different fields, and AI ethics certainly doesn't require a deep understanding of technology. That's because technology is just a tool - the issue is in how humans use it and in how it impacts human society. You start with saying I have a deep misunderstanding, but then you repeat exactly what I said - that ethics research is inherently not an AI specific issue and doesn't require domain specific knowledge, making it a different thing than safety research. So I don't understand if you're just misinterpreting what I'm saying or what. I'm saying that AI safety researchers ARE technical experts in AI and NOT sociologists. Therefore, they are focused with purely technical issues and not sociological ones. I don't know why you would expect them to do research on ethics since that is not their field. If your argument is "they studied the wrong thing and should be doing something else," you can't really do that. People choose the field they go into, and the skills aren't super transferable. If your argument is that misalignment doesn't matter and shouldn't be researched - well, I just flat out disagree with you to the point that I see that argument as ridiculous and don't even really know how to argue against it. Both are fields that exist. They are both important. It's like if I said "yeah, we still have to solve issues with decoherence to make larger quantum computers" and you replied with "the real issue here is how people could use quantum computers to break modern encryption." That's a non sequitur - I'm not saying the latter isn't a problem, but it's not what I was talking about. KillHour fucked around with this message at 15:49 on Apr 9, 2023 |

|

|

|

So lots of folks over the last few pages view the training of these models as already illegal under copyright law. This is what I dont get about this argument. Maybe I don't understand existing copyright law properly. I probably don't! But viewing copyright work is not a violation of copyright anywhere that I know of. Only making things that could qualify as copies of it - and specifically only a subset of that, or the internet would be fundamentally illegal for far more obvious reasons than an AI system trained on copyright work would be. I mean I guess you could argue the internet at large IS illegal even within those constraints but we just don't enforce it because it's too useful, but then I don't see how we won't end up coming to the same conclusion for AI. Where is the actual violation that these AIs are doing being committed, and would removing those actual violations actually have any real impact on the development of AI? GlyphGryph fucked around with this message at 19:46 on Apr 9, 2023 |

|

|

|

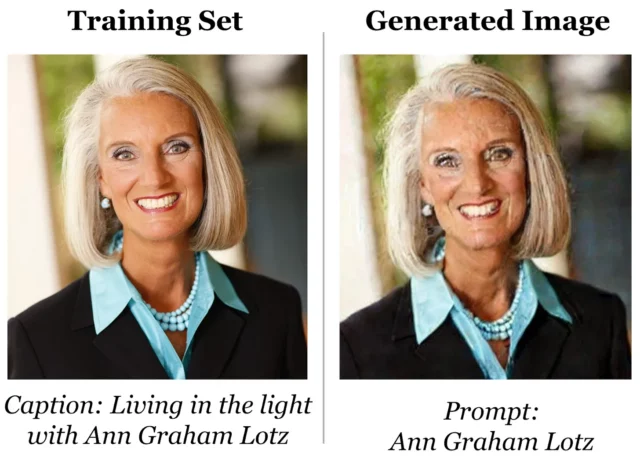

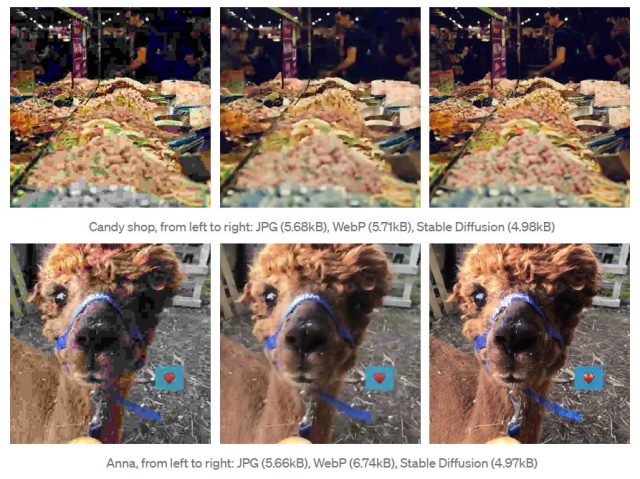

GlyphGryph posted:This is what I dont get about this argument. Maybe I don't understand existing copyright law properly. I probably don't! As you say, the models use a lot of copyrighted data for training. So the first question is whether or not this counts as fair use. The models also retain some of the training data, in compressed form, in a way that can be reproduced fairly close to the original, e.g.:   Does storing and distributing this count? How much is the company vs the user's responsibility? I dunno. There's a pretty good article on this here: https://arstechnica.com/tech-policy/2023/04/stable-diffusion-copyright-lawsuits-could-be-a-legal-earthquake-for-ai/ which also has an interesting comment about contributory infringement that I'm not going to try summarizing: quote:The Betamax ruling is actually illustrative for why these AI tools—specifically ones that are trained to reproduce (reproduce?) identifiable Mickey Mouse and McDonald’s IP—might be contributory infringement. It’s a good contrast ruling.

|

|

|

|

quote:The models also retain some of the training data, in compressed form, in a way that can be reproduced fairly close to the original, e.g.: I don't think it "retaining" some of the training data is true in any meaningful way, but I think the meaningful component this is that it can clearly reproduce it. But... I don't know, that argument doesn't seem to hold much weight. The betamax players could also be used to infringe copyright - Sony wasn't found guilty of inducement because the device was not "specifically intended for making copies of (recording) copyrighted audiovisual material" but rather to be used for a legal purpose. Intent seems to matter, then, and I don't think there's any real argument to be had that the "intent" of these AI tools is to allow users to recreate specific copyrighted works - you can, in the same way a betamax player could, but in the same way that wasn't sufficient to find it was inducement, I don't see how it could be here? mobby_6kl posted:copyright infringement not intended I got a good chuckle out of this, so thanks.

|

|

|

|

GlyphGryph posted:I don't think it "retaining" some of the training data is true in any meaningful way, but I think the meaningful component this is that it can clearly reproduce it.  https://arstechnica.com/information-technology/2022/09/better-than-jpeg-researcher-discovers-that-stable-diffusion-can-compress-images/ Is it different if SD can immediately give me "Ann Graham Lotz" but requires five kb of data for the alpaca? Is one "retained" while the other is "reproduced"?  I don't really have an answer or even a particularly strong opinion, and also IANAL obviously. In any case though, the whole thing could obviously be avoided by only using public domain or licensed data, for images or text. Though I feel it'd be more of a limitation for the language models.

|

|

|

|

Rogue AI Goddess posted:I am concerned about the criteria for differentiating Real Human Thought from fake AI imitation, and how they can be used to sort various neurodivergent people into the latter group. Do you have any thoughts on it or anything I could read? I'd be interested in this too but don't really know where to start looking. Gumball Gumption posted:This is what I really fear about AI anyways, far more than any idea of AI takeover. This is a really sad story. I wish they would have released the chat logs with personal information removed to see what the chatbot was saying. I wonder if part of the problem with these things is that we are deliberately giving them human features outside of their actual text writing function. Like the bot was named 'Eliza', maybe we shouldn't name things with "human" names unless we agree there conscious or sentient in some way. Also, in the Vice article it shows a chat log between Vice and 'Eliza' and there is a picture of an attractive woman as the avatar of 'Eliza'. AI systems and chat systems probably shouldn't be given avatars like that because it increases people's connection to them. This story seems like a really good intersection of the ongoing AI ethics vs. AI safety conversation. This can be seen as an issue of AI misalignment causing the death of somebody who interacted with the chat program. To me that's a pretty clear real-world consequence of AI misalignment that is happening right now. But it also show's a clear lack of AI ethics in its release. Where are the regulations for this stuff? Are there avenues to determine what or who is responsible for the death of this man? It was made with an open-source language model so this is just the beginning of these stories, and he is not going to be the only person who commits suicide in this way. What I mean is either more robust AI ethics or AI safety could have prevented this mans death so they are both worth discussing. gurragadon fucked around with this message at 21:39 on Apr 9, 2023 |

|

|

|

GlyphGryph posted:So lots of folks over the last few pages view the training of these models as already illegal under copyright law. This is what I dont get about this argument. Maybe I don't understand existing copyright law properly. I probably don't! The lawsuits are asserting it is illegal, but that to some extent is being conflated with it actually being illegal, which it is likely not, regardless of how we feel about that.

|

|

|

|

mobby_6kl posted:I doubt that anyone can answer that for certain right now because all of this is up to interpretation in court. While it is possible for stable diffusion to produce near identical outputs, this is very much an outlier situation where duplicate training images are way over-represented in the training data. Its also unlikely to happen unless you are already setting out to make a copy of an image. This is actually harmful to the model that nobody wants and is something that is fixable.

|

|

|

|

GlyphGryph posted:Where is the actual violation that these AIs are doing being committed, and would removing those actual violations actually have any real impact on the development of AI? In addition to the other stuff already mentioned where the plaintiffs allege that the models effectively contain compressed copies of the training data, there's another issue too: there actually was copying happening here. The LAION-5B dataset was gathered by a technically-distinct nonprofit by scraping about 5 billion images from the web, many of which were copyrighted. And then Stability AI copied all those images over to their servers for the purposes of actually training the Stable Diffusion model. Having a third party entity download copyrighted images from Artstation or Getty or wherever and then pass them across to you is technically copyright violation all on its own.

|

|

|

|

By my understanding LAION only ever itself contained a record of what the images were and where they were stored, not a copy of any of the individual images themselves.

|

|

|

|

RPATDO_LAMD posted:In addition to the other stuff already mentioned where the plaintiffs allege that the models effectively contain compressed copies of the training data, there's another issue too: The route taken in court will probably be a fair use argument, using Google Books as a precedent. Many of the same copyright violations were practiced in that framework re: the copying of works without permission and the modification and redistribution of them. It's not a 1:1 match, Google Books assigns attribution, AI generators don't provide direct copies of the data sources they draw from (most of the time) but I think it underlines that the mechanics of how they used the works might not matter that much. The argument they'll make isn't that they didn't copy the works or use them, but that the use is transformative enough to be allowed regardless. SCheeseman fucked around with this message at 02:29 on Apr 10, 2023 |

|

|

|

reignonyourparade posted:By my understanding LAION only ever itself contained a record of what the images were and where they were stored, not a copy of any of the individual images themselves. Common Crawl (which is what LAION was based on) did actually contain copies of everything. But, Common Crawl uses a fair use justification for it the same way archive.org does, and they actually do have a DMCA takedown process (that they probably don't legally have to do, but they do anyway) and they identify themselves when they scrape.

|

|

|

|

mobby_6kl posted:That's probably a philosophical argument tbh, are the NN weights in the model fundamentally different from the the quantized coefficients in highly compressed jpeg? There was this article about using Stable Diffusion as an image compression mechanism:

|

|

|

|

Ridiculous compression like the paqs do exist, and actually use neural networks in some way (though I don't know how, I never looked deeply into them) and have specific formats for compressed jpegs. But, yeah, it's exactly that. When something takes days to decompress it's not super practical

|

|

|

|

If the question is "is there a fundamental difference between a latent space in a trained neural net and a general lossy compression algorithm," the answer is yes - image and video compression algorithms rely on the fact that Fourier transforms generalize. In other words, you can store an image of anything. Latent spaces don't generalize this way because you can only store the kinds of images the system was trained on (and I guess any weird emergent areas that happen to exist, but those are probably not useful). Does this matter in practice with a huge system? Maybe - depends on the use case and the size of the model. You could make the argument that makes them different enough that they should have different legal standards, although the line is definitely being blurred by basically every new phone having an AI photo enhancer now.

|

|

|

|

I wasn't actually suggesting that we use SD as a compression mechanism  The point I was making was that the models ingest and store some sort of representation of the source images in the neural networks, and can reproduce them easily (for overtrained cases like Mona Lisa or that lady there) or with lots of tokens like for the alpaca. And that whether or not this could be considered infringement in itself or contributory infringement is an open question imo.

|

|

|

|

mobby_6kl posted:I wasn't actually suggesting that we use SD as a compression mechanism I don't know about anyone else, but I was responding to this specifically: mobby_6kl posted:are the NN weights in the model fundamentally different from the the quantized coefficients in highly compressed jpeg And from a technical standpoint, the answer is "yes." From a legal standpoint,

|

|

|

|

KillHour posted:I don't know about anyone else, but I was responding to this specifically: Yeah technically I understand what exactly the difference is, the question here I think is more legally (or morally).

|

|

|

|

Doesn't the "is it copywriter infringement?" question also have a sort of exception built in regarding what the states interest is? I wonder where even if a judge decides "well it's technically infringement but ruling that way would overly negatively effect the United States in regards to the development of new and novel technologies..."?

|

|

|

|

Raenir Salazar posted:Doesn't the "is it copywriter infringement?" question also have a sort of exception built in regarding what the states interest is? I wonder where even if a judge decides "well it's technically infringement but ruling that way would overly negatively effect the United States in regards to the development of new and novel technologies..."? That's basically what fair use is, and it's the catchall for anything that needs to be done on a case by case basis.

|

|

|

|

gurragadon posted:Do you have any thoughts on it or anything I could read? I'd be interested in this too but don't really know where to start looking. The whole thing kind of points to a problem with the whole discussion. "Intelligence" isn't a particularly good word for *anything*, its more of an intuition than a scientifically definable term. The proof here is that its extremely difficult to construct a scientifically plausible test that would categorize *all* current commonly recognized biological Intelligent entities as intelligent, and *all* current AIs as false intelligence without overfitting the definition into a tautology ("Ie AI is artificial because its AI") Ie what sort of test would correctly recognize me, a Chimpanze (and arguably my Cat), Albert Einstein and my cousin with a significant learning disability as intelligent, but would declare Eliza (the original rules based Chatbot from the 1960s), ChatGPT, my Laptop and AlphaGO cas unintelligent without degenerating into un-useful claims like "It can not run on electricity" or "It must posess life". Its a term that belongs in a category of terms I would define as "Words that would have made Wittgenstein throw a chair at you". Its a word that is too caught up in various incompatible language games to actually be capable of pointing to a real external referrant. But we intuitively share a rough feel for the meaning well enough we can generally use it without being misunderstood. And thats a problem. quote:This is a really sad story. I wish they would have released the chat logs with personal information removed to see what the chatbot was saying. I wonder if part of the problem with these things is that we are deliberately giving them human features outside of their actual text writing function. Like the bot was named 'Eliza', maybe we shouldn't name things with "human" names unless we agree there conscious or sentient in some way. Also, in the Vice article it shows a chat log between Vice and 'Eliza' and there is a picture of an attractive woman as the avatar of 'Eliza'. AI systems and chat systems probably shouldn't be given avatars like that because it increases people's connection to them. Honestly I think it was named ELIZA due to the history of the name in AI research. https://en.wikipedia.org/wiki/ELIZA duck monster fucked around with this message at 23:05 on Apr 10, 2023 |

|

|

|

Intelligence, in the AI field, is about solving problems, specifically novel problems, with things being more intelligent as the novelty, difficulty, efficacy and speed of problem solving increases. By that measurement, a cockroach or fly is significantly more intelligent than ELIZA. I don't know if ELIZA exhibits any intelligence at all by that metric. But even most basic game AI would exhibit some intelligence, by that metrics... which is, I think, in keeping with both people's intuitions and the working definitions of actual AI researchers, although the intelligence is obviously limited. ChatGPT would be significantly more intelligent than them, and GPT-4 significantly more intelligent than ChatGPT. Also many things we don't think of AI's at all would probably qualify, but honestly that all seems... fine? I don't think that's a bad or not useful definition, and I don't know why we have to say all existing AI isn't actually I. In fact, the name AI sort of implies the exact opposite - it's the class of software we do consider to have some level of intelligence, no? (although you seem to be operating under a misapprehension about a different word, here: Artificial does not have anything to do with being fake or not real or "false", and I don't know why you think it does? Lots of artificial things are very real. Guns are artificial, but they will still kill you. All art is artificial, its literally in the name, but it doesn't mean all art is fake. Artificial intelligence is by definition real intelligence, it is just... artificial. Man-made, with intent, rather than naturally developing) I think a bigger problem is that popular hype doesn't actually care about intelligence. It cares about ability to mimic human-like behaviour. And "humanity" is a much shittier way to think about AI than "intelligence", in a lot of ways! Especially since it basically writes off 90% of AI research as not worth mentioning because it's not "humanlike" (and similarly writes off most of the really intelligent, really dangerous possibilities of AI as not even worth talking or thinking about, both real and hypothetical) Even the vaunted "AGI", the gold standard of intelligence breadth, doesn't mean it has to be remotely human-like, its just an intelligence with a very high ability to deal with a wide variety of problems. GlyphGryph fucked around with this message at 23:49 on Apr 10, 2023 |

|

|

|

I like the definition of intelligence that's basically "able to pursue and accomplish arbitrary goals in practice." Because if something is competent at doing that, it doesn't really practically matter how it does it. A robot that is designed to make paperclips but is incidentally really good at killing all humans is defacto intelligent, because that's a thing we care about measuring.

|

|

|

|

duck monster posted:Honestly I think it was named ELIZA due to the history of the name in AI research. I didn't know about ELIZA but that was pretty interesting. Wikipedia posted:ELIZA's creator, Weizenbaum, intended the program as a method to explore communication between humans and machines. He was surprised, and shocked, that individuals attributed human-like feelings to the computer program, including Weizenbaum's secretary. It's really something that the problem is the same as it was in the 1960s. But this kind of is my point about how we need to deliberately "de-anthropomorphize" chatbots probably. Why Weizenbaum is "surprised and shocked" is beyond me, he gave it a human name himself. He was giving it human qualities by comparing it to Eliza Doolittle, so he wanted to draw the line on anthropomorphizing before some people, but it is hard to avoid it completely. But an early chatbot like ELIZA could convince people by just reflecting their questions back onto them. The chatbot that the Belgian man was speaking to are so much more sophisticated. It's not really a surprise that somebody would form a deeper connection to it. And then giving it a human name, which is displayed as 'Eliza' not 'ELIZA' makes it feel more human. Then they give it a thumbnail picture of a woman. I know it's a joke about people falling in love with robots and stuff, but it seems like were getting to the point where people are. People are committing suicide at least partially because of these things. It makes me wonder if the guy was talking to CHATBOTv.5.3 with no thumbnail if he would have been so connected to it. To OpenAI's credit, it's just called ChatGPT, but theres so many sites to talk to characters. At https://beta.character.ai/ people can talk to "Einstein", "Taylor Swift", "Bill Gates", etc. People who have real lives with real stories that others connect to outside of chatting with a fake version that is trying to simulate them. That gives people even more connection and puts more weight to what the chatbot is saying to the user. On a side note, I started reading "Computer Power and Human Reason" by Weizenbaum and it's like this debate is the same as it was in 1976. It's a good look back into the development of this kind of thinking.

|

|

|

|

I know it's reddit so of course it's insane, but it's still a lot of people. Maybe it came up in this thread, but go check out the subreddit for Paradot to see a whole lot of people very seriously anthropomorphize AI in a way that's not really just funny. I think whatever Paradot is, they're not even very complex models since there was something about them advertising "millions to billions of parameters" which is not as exciting if you know how large most models are. But, regardless there are people taking it very seriously. As far as I can tell it's just a role play thing for some people but there were enough people where, I'm not completely sure, but I think it wasn't just that. And I wasn't so much thinking "What if the AI tells them to kill themselves?" before, but more like, what if the company pulls the plug and they've made a really messed up emotional attachment to this thing like it was real and now it's just gone? Or what if they change the model in some way that ruins it for a bunch of people? Or, start heavily monetizing things that they need to continue their "relationship?" Like I'm not saying "you better not take these dude's AI they fell in love with!" I think that shouldn't be a thing that's happening (but I don't know a way to keep it from happening) but I just think it could be really bad when that happens.

|

|

|

|

|

| # ? May 27, 2024 23:01 |

|

GlyphGryph posted:Intelligence, in the AI field, is about solving problems, specifically novel problems, with things being more intelligent as the novelty, difficulty, efficacy and speed of problem solving increases. Honestly I suspect 90% of the reason most dismiss the safety issue is the fields kind of captured by a particular techbro pseudo-rationalist scene (To be clear the "lesswrong" crowd are *not* rationalist, by any standard philosophical definition. ) leading to absolute gibberish like the "Shut it all down" article and the like. Its a crowd who just dont have the intellectual toolkit to solve the problems and that worries me because I actually do think theres some genuine danger implicit in the idea of AGI, but if no one sane is properly investigating the idea , if we DO start seeing signs that we might have some problems, we might have serious problems.

|

|

|