|

I'm working my way up to it; the goal is ~120TB after I buy all my drives. I'm not using anywhere close to what some of you guys are, but I can still see it. For me it's not just movies and TV shows, it's archives of things. You can go out there and download the whole waffleimages archive; it's quite large (though not really, since my idea of "large" has started changing as I get into the tens of TB). I do this for basically everything: if an archive of some ancient game or website or whatever exists, I will download it. And that stuff adds up. When you have massive amounts of storage, things like "well, I might as well download every single DOS game ever commercially released, who knows which one I'll wanna play" don't seem too crazy anymore. I also like operating system betas. There are quite a few, and people collect and hoard the rarer builds because it's an incredibly weird, toxic community. Those add up too, but it's fun to have.

|

| # ? Jun 6, 2024 21:04 |

|

Due to a fun experience with my power company doing some work in the area, I'm looking at having to redo my setup. Are there any obvious pitfalls I should be aware of when getting something like a cheap second-hand Dell T330 or T620, sticking Unraid on it and setting up the *arrs etc.? I know that there can be issues with refurb hard drives and that the drive controller (HBA?) may need to be flashed or replaced, but I'm not sure if there's anything else I've missed. I've got minimal Linux and no Docker experience, but I was basically only using my NAS as a file server, so worst case I can run the *arrs etc. off a Windows install pointed at the network share if Unraid is too much of a pain for me to work out (I really wish there was an easy way to test it in a VM or something without having to commit the hardware). Mainly I'm looking at doing something like this because replacing the Synology box by itself would be as expensive as the ex-server plus a bunch of refurb drives.

|

I think you'll be fine with Unraid as far as touching the console goes; you won't need to much, if ever, to get the *arrs and other stuff installed in Docker via the app store. No comments on HW, but I'd imagine those old workstation boxes are pretty power hungry.

|

You could join the ML30 gang! https://www.ebay.com/itm/1252452495...emis&media=COPY

I think out of the box with Unraid it would meet your needs, but several of us have upgraded the CPU, RAM, and other parts, and it works great and is still cheap as hell. I know basically zero Linux and I've been able to set mine up pretty easily.

SpartanIvy fucked around with this message at 15:08 on Aug 11, 2023

|

Tornhelm posted: "Due to a fun experience with my power company doing some work in the area, I'm looking at having to redo my setup. Are there any obvious pitfalls I should be aware of when getting something like a cheap second-hand Dell T330 or T620, sticking Unraid on it and setting up the *arrs etc.? I know that there can be issues with refurb hard drives and that the drive controller (HBA?) may need to be flashed or replaced, but I'm not sure if there's anything else I've missed."

I came into Unraid with almost zero Linux experience, and there was no point in my setup of the NAS and all the *arr apps where I felt like I needed it. It's very easy to do almost anything you need from the GUI. The only thing I've run into is that some enterprise drives won't have their drive cache enabled without issuing a command to them, but this only affects their performance, not their functionality, so you don't actually need to fix it (and if you do want to, there are scripts people have already written to do that for you). Honestly, my bigger caution is that if you're hoping to use Unraid to start learning Linux or Docker, it's actually not great for that, because it's so easy to use without having to get into the CLI or a Docker Compose .yaml. If you're someone like me who struggles to learn unless forced to, it's not ideal.

Scruff McGruff fucked around with this message at 15:29 on Aug 11, 2023

|

Is the ZFS thing about needing more memory for more TB true?

|

hogofwar posted: "Is the ZFS thing about needing more memory for more TB true?"

If you have the RAM to let ZFS use, it'll definitely help performance, especially where spinning rust is involved, but it's absolutely not required. For what it's worth, my server has 96TB of disks in RAIDZ1 for 64TB usable, with 32GB total RAM in the system. ZFS has requested half of that and is using only half of what it requested. AFAIK dedupe is the only case where lots of RAM is actually required, and there are so many catches and caveats to dedupe that it's firmly in the "if you have to ask, you shouldn't be using it" category.
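The raw-versus-usable figures above are simple arithmetic once you know the vdev layout. The post doesn't give the disk count, so this sketch assumes one hypothetical layout that happens to match the numbers: two 3-wide RAIDZ1 vdevs of 16TB disks. `raidz_usable_tb` is an illustrative helper, and it ignores metadata, padding, slop space and TB-vs-TiB, so real usable space comes out somewhat lower.

```python
def raidz_usable_tb(disks_per_vdev: int, parity: int, disk_tb: float, vdevs: int = 1) -> float:
    """Rough usable capacity of a pool built from identical RAIDZ vdevs.

    Each RAIDZ vdev gives up `parity` disks' worth of space (1 for RAIDZ1,
    2 for RAIDZ2, 3 for RAIDZ3). Metadata, padding and slop space are ignored.
    """
    if not 1 <= parity <= 3:
        raise ValueError("RAIDZ parity is 1, 2 or 3")
    if disks_per_vdev <= parity:
        raise ValueError("a vdev needs more disks than parity")
    return vdevs * (disks_per_vdev - parity) * disk_tb


# Hypothetical layout matching the post: two 3-wide RAIDZ1 vdevs of 16TB disks.
raw_tb = 2 * 3 * 16
usable_tb = raidz_usable_tb(disks_per_vdev=3, parity=1, disk_tb=16, vdevs=2)
print(f"{raw_tb}TB raw -> {usable_tb}TB usable")  # 96TB raw -> 64TB usable
```

For comparison, the same six disks as a single 6-wide RAIDZ1 vdev would give `raidz_usable_tb(6, 1, 16)`, i.e. 80TB, trading some redundancy per disk for space.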

|

Just don't turn on dedupe or L2ARC

|

ZFS needs a 64-bit OS and about 512MB of memory (including the kernel and active userspace). Everything else just makes it faster.

|

BlankSystemDaemon posted:ZFS needs a 64-bit OS and about 512MB of memory (including the kernel and active userspace).

|

wolrah posted: "It's probably worth noting that when ZFS first came out these were mildly steep requirements, which is part of why the idea that ZFS needs a lot of hardware spread. Now that 64-bit processors and gigabytes of RAM are standard in phones, it's irrelevant."

It topped out at 32GB.

BlankSystemDaemon fucked around with this message at 00:53 on Aug 12, 2023

|

It's also one guy on the TrueNAS forums and his opinions being taken as fact. See:

- 1GB per TB
- his opinion on ECC
- his opinion on virtualization
- his speculation that scrubbing with non-ECC memory will lead to all of your data being lost

He has some knowledge, but at the same time he has done more damage to ZFS than anyone else.

|

ECC memory is objectively better. You can sorta clean it up after the fact with scrubs in ZFS and BTRFS since you're super unlikely to write the same data wrong in the same way across multiple drives, but it's better to just not gently caress up the data in memory in the first place.
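Why a scrub can repair a bad copy on one drive can be shown with a toy model: every block has a stored checksum, so the scrub finds a copy that matches it and rewrites the copies that don't. This is a simplified sketch, not ZFS's actual code path; SHA-256 stands in for whatever checksum the pool uses, and `scrub_block` is a hypothetical helper.

```python
import hashlib


def checksum(data: bytes) -> str:
    """Stand-in for the per-block checksum ZFS stores (SHA-256 here)."""
    return hashlib.sha256(data).hexdigest()


def scrub_block(copies: list, expected: str) -> int:
    """Toy scrub of one mirrored block.

    Finds a copy whose checksum matches, overwrites any copies that don't,
    and returns how many copies were repaired.
    """
    good = next((bytes(c) for c in copies if checksum(bytes(c)) == expected), None)
    if good is None:
        raise OSError("no copy matches its checksum: unrecoverable block")
    repaired = 0
    for copy in copies:
        if checksum(bytes(copy)) != expected:
            copy[:] = good  # rewrite the bad copy from the good one
            repaired += 1
    return repaired


block = b"family photos"
expected = checksum(block)
mirror = [bytearray(block), bytearray(block)]
mirror[1][0] ^= 0x01  # simulate a single flipped bit on one drive
print(scrub_block(mirror, expected))  # 1
print(bytes(mirror[1]) == block)      # True
```

The catch, and the reason ECC still matters, is that this only works when the checksum was computed over correct data. If a block is corrupted in RAM before it is checksummed and written, every copy matches the wrong checksum and the scrub has nothing to fix.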

|

I bought 512GB of RAM under the impression I needed 1GB per TB. I only found out later it's not really required, just helpful. If you aren't using deduplication, you'll be fine without doing 1GB per TB. The upside is now I can shoot CDs into space

|

I agree with having ECC; I would not use ZFS without it. It's just the guy's speculation that not using ECC will immediately lead to full and unrecoverable data loss. And 1GB per TB started as a recommendation for when you were using deduplication, and then it just turned into a 1GB per TB "rule".
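Why dedup in particular eats RAM can be sketched with back-of-the-envelope arithmetic: the dedup table (DDT) holds one entry per unique block, so its size scales with the number of blocks rather than raw terabytes. The ~320 bytes per in-memory entry below is a commonly quoted rule of thumb and is treated here as an assumption, as are the block sizes; real numbers vary with OpenZFS version and workload. `ddt_ram_gib` is a hypothetical helper.

```python
def ddt_ram_gib(unique_data_tib: float, avg_block_kib: float = 128, bytes_per_entry: int = 320) -> float:
    """Rough in-memory dedup table size for a given amount of unique data.

    Assumes one DDT entry per unique block and ~bytes_per_entry bytes per
    entry (a rule of thumb, not an exact figure).
    """
    blocks = unique_data_tib * 1024**4 / (avg_block_kib * 1024)
    return blocks * bytes_per_entry / 1024**3


print(ddt_ram_gib(1))                    # 2.5  (1TiB stored as 128KiB records)
print(ddt_ram_gib(1, avg_block_kib=64))  # 5.0  (smaller blocks, twice the entries)
```

Without dedup there is no DDT to keep in memory at all, which is why a per-TB RAM rule doesn't carry over to ordinary pools.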

|

BlankSystemDaemon posted: "I'd suggest playing with truss (which outputs SysV-like strace lines) to check where the difference is."

I'm on a Debian system, so I ended up using strace instead of truss/dtruss. For the simple touch /mnt/whatever.lol command I see: [strace output not preserved] Thanks again for the help!

|

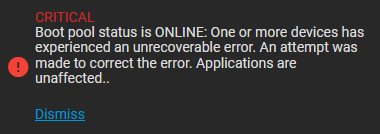

Logged in to TrueNAS for the first time in a while to do some housekeeping and got this. That just means there's an issue with the USB drive that's acting as my boot device, right? All my other pools are fine with no errors, so hopefully all I need is a new thumb drive to boot off of. I thought that the read and write activity on the boot drive was minimal, but I suppose thumb drives aren't typically powered on 24/7 for a decade and counting. It's been so long since my initial setup that I can't remember: does the boot pool need to be on its own dedicated device? I ask because I have an SSD in there that I use to spin up VMs for game servers or to offload my wildlife camera streaming server to. It would be neat if I could use a tiny bit of space on the SSD for the boot pool, but if that's a dead end I'll just go grab another thumb drive.

|

Wild EEPROM posted: "Its also one guy in the truenas forums and his opinions being taken as fact."

- 1GB per 1TB of storage is a recommendation based on production-scale workloads; it's not pulled out of the rear end.
- ZFS was designed for Sun hardware, which you couldn't buy without also getting ECC memory. It can run without it (and does better than any other filesystem), and it can even mitigate the issues of not having ECC by enabling in-memory checksumming.
- No clue what virtualization has to do with filesystems, other than benefiting from using zvols instead of storing files on a filesystem.
- The scrub of death was a solid bit of FUD that's been debunked by no less than Matt Ahrens: https://www.youtube.com/watch?v=rA6YmkxD6QQ&t=151s

Windows 98 posted: "I bought 512gb of RAM under impression I needed 1gb per tb. I only found out later it's not really required, just helpful. If you aren't using deduplication you'll be fine without doing 1gb per tb."

Matt Ahrens has sketched a design for an improvement that'd make dedup 1000x faster and also use a lot fewer resources, but I haven't heard of any company willing to pay for the work, and it's really only the sort of thing companies are interested in.

Wild EEPROM posted: "I agree with having ECC, I would not use zfs without it. It's just some speculation of how not using ecc will immediately lead to full and unrecoverable data loss from the guy."

fletcher posted: "I'm on a debian system so I ended up using strace instead of truss/dtruss."

As for Debian, Linux doesn't do NFSv4 ACLs properly (there have been two attempts to implement them, but neither made it into the kernel), whereas FreeBSD (and therefore TrueNAS Core) does. NFSv4 ACLs were explicitly designed to be compatible with Windows ACLs for interoperability, because they were derived from the Windows 2000 ACLs. Not sure why --inplace would fix it, but I'd imagine that leads to write amplification?

Coxswain Balls posted:

I think iX has started recommending using proper SSDs from reputable manufacturers now, for that very reason. I'd recommend you make a backup of your configuration and look into using the SSD - although I have to admit, I don't know if you can partition the SSD to only use a bit of it, but it's definitely worth looking into since you already have it. As for why it failed, it's still subject to the exact same level of cosmic rays as every other type of electronic device - they probably just don't have as many extra sectors to fix silent data corruption with.

|

Coxswain Balls posted:

Just to say it, back up the config immediately if you haven't already. I'm pretty sure the boot pool really wants to be its own device (or devices; mine is a mirror after I had exactly this happen last year). It might be possible to trick the installer into using only part of another drive, but I wouldn't want to try that if the drive in question already has data on it. I'm also pretty sure TrueNAS explicitly tells you not to use USB devices for the boot pool, that's Unraid's thing, but if it worked until now I ain't gonna say you can't do it again. You should also look into setting up the health monitoring stuff to notify you when this happens. If the boot pool craps out that's one thing, but if you lose one of the drives in a data pool that's something you want to know about as soon as it happens. Having to log in to discover this kind of thing might mean you lose a second drive before you learn about the first one.
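TrueNAS's built-in alert and email settings are the supported way to get those notifications. As a minimal sketch of the underlying check only, assuming a machine with the `zpool` CLI on the PATH: `zpool status -x` prints details only for pools with problems and otherwise prints a single "all pools are healthy" line (worth confirming against your own system's output). `pools_healthy` and `check_pools` are hypothetical helper names, and hooking the result up to email or another notifier is left out.

```python
import subprocess

HEALTHY_MESSAGE = "all pools are healthy"


def pools_healthy(status_output: str) -> bool:
    """True if `zpool status -x` output reports nothing wrong."""
    return status_output.strip() == HEALTHY_MESSAGE


def check_pools() -> bool:
    """Run `zpool status -x` on this machine and report whether all pools are healthy."""
    result = subprocess.run(
        ["zpool", "status", "-x"], capture_output=True, text=True, check=False
    )
    return pools_healthy(result.stdout)
```

Run from cron or a similar scheduler, a `False` from `check_pools()` would be the cue to send yourself a message instead of finding out the next time you happen to log in.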

|

BlankSystemDaemon posted: "The non-volatile flash found in USB devices is typically of quite poor quality, so it's only really a matter of time before they start failing."

Yeah, I looked at their requirements page for what size USB drive to get and noticed that change. Looking into it, best practice is to have boot on a dedicated drive, and while it's possible to start partitioning things up, it's not recommended or supported. I've been doing things the "right" way as much as possible and this thing has been rock solid, so I'd like to avoid going into hackjob territory. If I had even one more SATA port in my ThinkServer I'd be fine with just getting a basic-rear end SSD; this 120GB thing at $22 is only $5 more than a 64GB replacement thumb drive. Heck, for $3 more I can get 240GB (granted, I have no idea how crappy those linked drives might be). To do that, though, would require an expansion card, a new PSU, and an adapter to hook up a standard PSU to the proprietary connector on the motherboard.

power crystals posted: "I'm also pretty sure truenas explicitly tells you not to use USB devices for the boot pool, that's unraid's thing, but if it worked until now I ain't gonna say you can't do it again."

When I first set up my NAS, a USB boot device was definitely considered acceptable. It seems that FreeNAS rarely wrote to the boot drive, but that changed with the switch over to TrueNAS, and I'm guessing it's beginning to hit the wall with the increased I/O and the drive not being very good in the first place. Does USB 3.0 work properly in TrueNAS now? My immediate plan is to get a new Samsung USB 3.0 64GB flash drive, but maybe another option is to grab something like this and toss in the cheapest small SSD I can find at the recyclers. I've got email alerts already set up, but I've been putting off looking at it because I've got other stuff on my plate right now.
I've got an offsite backup, so I'm not terribly concerned about data loss; I'm more trying to avoid the "gently caress, I don't want to spend money I don't have for this" feeling when I'm already dealing with other things. Replacing a boot pool is pretty reasonable, at least.

|

I would not recommend USB boot for anything you care about in 2023. I'd probably spend a little bit more for a higher quality SATA SSD, I am pretty leery about the long-term stability of those A400 drives.

|

Coxswain Balls posted: "When I first set up my NAS a USB boot device was definitely considered acceptable. It seems that FreeNAS rarely wrote to the boot drive, but that changed with the switch over to TrueNAS and I'm guessing it's beginning to hit the wall with the increased I/O and the drive not being very good in the first place. Does USB 3.0 work properly in TrueNAS now? My immediate plan is to get a new Samsung USB 3.0 64GB flash drive, but maybe another option is to grab something like this and toss in the cheapest small SSD I can find at the recyclers."

Instead of that enclosure I would suggest a USB 3.1 M.2 NVMe enclosure and a 16GB Intel Optane.

|

Dang, that seems perfectly suited to this particular use case, where a 250GB boot drive would feel like a total waste. Hopefully I can find somewhere in Canada that has something like that for a similar price. Going that route, I can skip the USB enclosure altogether and use a PCIe adapter card like this one, yeah? If the drive is powered by the PCIe slot, not having a free SATA port on the motherboard or a spare power connector from the PSU won't be a problem. I'm going to use another USB drive as a stopgap for now, but I'll start hitting up the recycling places around the city to see what small/older SSDs I can dig up locally.

|

Saukkis posted:Instead of that enclosure I would suggest a USB3.1 M.2 NVME enclosure and a 16GB Intel Optane.

|

The whole issue I have with non-ECC on a ZFS-based NAS is that ZFS aggressively caches data. If you go balls out with a lot of memory (e.g. the aforementioned 512GB), cached data may stay resident for a very long time. If a bit flip happens, it might not get noticed, because in-memory checksums are off by default, and the error might make it to disk by round-tripping via a computer (re)processing the data, i.e. loading it, doing stuff, and saving it back to disk. If you just store Blu-ray rips and poo poo like that, it may not matter to you. If it's more sensitive stuff, I guess you should consider ECC.

|

Combat Pretzel posted: "The whole issue with non-ECC on a ZFS based NAS that I have is that ZFS aggressively caches data. If you go balls out with a lot of memory (e.g. the aforementioned 512GB), cached data may stay resident for very long times. If a bit-flip happens, it might not get noticed, because in-memory checksums are off by default, and the error might make it to disk by roundtripping via a computer (re)processing the data, i.e. load, do stuff and save back to disk."

Asynchronous writes are written to a memory region called the "dirty data buffer", where they can sit for a maximum of 5 seconds or until X amount of memory is used (I can't remember the exact amount, but it's fairly small). After that they get written to disk in a new record, because on-disk records are never modified in place.

EDIT: What you've fallen for is a slightly restated version of the "scrub of death" FUD that was mentioned upthread, and none of the people who talk about it can explain the actual mechanism behind it without a lot of hand-waving, because they don't understand how ZFS works. This isn't even limited to ZFS or even computers: if someone offers you a scenario and can't explain the detailed mechanism of how it'd work, they probably don't understand it, and therefore aren't a reliable source of information.

BlankSystemDaemon fucked around with this message at 18:45 on Aug 12, 2023
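The batching described in that reply can be sketched as a toy model: async writes accumulate in memory and are flushed together once the oldest one is about 5 seconds old or the buffer hits a size cap. The 5-second figure comes from the post; the 64MiB cap is a made-up placeholder (the post doesn't give the real amount, and it's a tunable), and real OpenZFS transaction-group logic is considerably more involved. `ToyDirtyBuffer` is a hypothetical name.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToyDirtyBuffer:
    """Toy model of async writes being batched in memory and flushed to disk.

    A flush happens once the oldest buffered write is timeout_s old or the
    buffer reaches dirty_limit_bytes.
    """
    timeout_s: float = 5.0                     # the 5-second figure from the post
    dirty_limit_bytes: int = 64 * 1024 * 1024  # hypothetical cap, not the real default
    dirty_bytes: int = 0
    first_dirty_at: Optional[float] = None
    flushes: int = 0

    def write(self, nbytes: int, now_s: float) -> None:
        if self.first_dirty_at is None:
            self.first_dirty_at = now_s
        self.dirty_bytes += nbytes
        self.maybe_flush(now_s)

    def maybe_flush(self, now_s: float) -> bool:
        if self.dirty_bytes == 0:
            return False
        too_old = now_s - self.first_dirty_at >= self.timeout_s
        too_big = self.dirty_bytes >= self.dirty_limit_bytes
        if not (too_old or too_big):
            return False
        # In ZFS this is where buffered data would go to newly allocated
        # blocks on disk; the toy only counts the flush and empties the buffer.
        self.flushes += 1
        self.dirty_bytes = 0
        self.first_dirty_at = None
        return True


buf = ToyDirtyBuffer()
buf.write(4096, now_s=0.0)
print(buf.maybe_flush(now_s=4.9), buf.maybe_flush(now_s=5.0))  # False True
buf.write(64 * 1024 * 1024, now_s=6.0)  # big enough to flush immediately
print(buf.flushes)  # 2
```

The point the toy makes is the time bound: dirty data lives in memory for seconds, not for the long stretches that cached read data can.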

|

Ol' Dirty Data Buffer is a decent YOSPOS name change.

|

Matt Zerella posted:Ol' Dirty Data Buffer is a decent YOSPOS name change.

|

BlankSystemDaemon posted: "Stuff isn't written from the ARC - it's an Adaptive Read Cache."

- Data resides in ARC for whatever reason.
- Data gets damaged by a cosmic ray.
- A computer on the network reads the data "by opening the file" on the NAS.
- Said computer does stuff with the data.
- Said computer writes the file back to the NAS.

|

Combat Pretzel posted: "???"

Anything in ARC gets its checksum verified when it's read; if the checksum fails, it gets re-read from disk. Then it gets modified in memory, and the new records get written to a new place on disk. If you use snapshots like you should, you can still access the old copy.
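The "new records go to a new place, and snapshots keep the old copy" part can be illustrated with a toy copy-on-write file. This is a minimal sketch, not how ZFS lays out data: `ToyCowFile` is hypothetical, a dict stands in for the disk, and unlike real ZFS the toy never frees old blocks (ZFS frees them once nothing, snapshots included, references them).

```python
from typing import Optional


class ToyCowFile:
    """Toy copy-on-write file: writes never overwrite existing blocks.

    `blocks` stands in for the disk (location -> contents). A snapshot just
    remembers which location the file pointed at when it was taken.
    """

    def __init__(self, data: bytes) -> None:
        self.blocks = {0: data}
        self.live = 0
        self.next_location = 1
        self.snapshots = {}

    def snapshot(self, name: str) -> None:
        self.snapshots[name] = self.live

    def write(self, data: bytes) -> None:
        location = self.next_location  # always allocate a fresh location
        self.next_location += 1
        self.blocks[location] = data
        self.live = location

    def read(self, snapshot: Optional[str] = None) -> bytes:
        location = self.live if snapshot is None else self.snapshots[snapshot]
        return self.blocks[location]


f = ToyCowFile(b"original")
f.snapshot("daily")
f.write(b"garbled by a bad round trip")
print(f.read())         # b'garbled by a bad round trip'
print(f.read("daily"))  # b'original'
```

Even in the round-trip scenario from upthread, where a client writes back damaged data, the snapshot still points at the untouched original blocks.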

|

So what now? Are in-memory checksums enabled or disabled by default?

--edit: Holy poo poo, I'm trying to google an answer to this (i.e. ARC read checksumming), and I get all sorts of irrelevant poo poo.

Combat Pretzel fucked around with this message at 19:06 on Aug 12, 2023

|

Isn't memory checksumming with non-ECC memory, like, super resource-intensive, and doesn't it result in significantly slower write and recall speeds for your memory?

|

ZFS can verify the checksum of a data block in memory when it's read straight from ARC instead of from disk, but that functionality can be disabled, and so far I'm convinced that it's disabled by default for performance reasons. Which is why I'm arguing about this. I think I read about it on the TrueNAS forums, but my google-fu isn't helping.

|

Combat Pretzel posted:--edit: Search engines are bad again

|

I just remembered I have an mSATA 850 Evo sitting in one of my old ThinkPads. Is any old mSATA to USB enclosure/adapter I find on Amazon going to be fine? Since mSATA was a dead end this looks to be my only route for converters. It also looks like there's tons of small mSATA drives around the same size as those Optane ones on eBay so I could get a new boot drive without feeling like ~200GB is sitting around doing nothing. https://www.amazon.ca/s?k=msata+usb

|

I don't feel bad about having unused space on SSDs - just means they'll (likely) live longer, as write wear is spread around better.

|

An mSATA-to-SATA adapter should just be a cheap pin-to-pin thing, and then you can use any old regular SATA-to-USB bridge.

|

Wibla posted: "I don't feel bad about having unused space on SSDs - just means they'll (likely) live longer, as write wear is spread around better."

But for a tenner I can get a grab bag of ten Intel 20GB mSATA drives. I've got six USB 3.0 ports on this thing, think of the redundancy!

|

Combat Pretzel posted: "So what now? Is in-memory checksums enabled or disabled by default?"

Yeah, search engines are getting more and more hosed each year.

Nitrousoxide posted: "Isn't memory checksumming with non-ecc memory like super resource intensive and results in significantly slower write and recall speeds for your memory?"

Combat Pretzel posted: "ZFS can check the checksum of the data block in memory, when read straight from ARC instead of disks, but that functionality can be disabled, and so far I'm convinced that it's disabled by default for performance reason. Which is why I'm arguing about this."

You're conflating things, though. When ZFS reads something into ARC, the checksum is also read into memory, and when something later goes to use that memory, the checksum is checked again to ensure it matches before anything else takes place. If I understand what you're worrying about, there's still no actual proposed mechanism of action for it, but if you want to argue it, I suspect you might need to talk to folks who understand it better than I do (or watch the clip I linked where Allan quotes Ahrens on why it can't happen, because I have a feeling that that really is what you're looking for).

|

Got a backup USB drive running now, but the only other drives I have lying around are 8GB, which causes the install to fail on 13.0. FreeNAS 11 installed fine, so I started from a fresh install of 11 and did the upgrade chain 11 ➡ 12 ➡ 13 to match my config file, then upgraded to current. It took me longer than it should have, because man, does TrueNAS Core not like my USB 3.0 drives/ports for some reason. Whenever I had it on a USB 3.0 port there was some issue or another during the installation or upgrade paths, but when I upgrade using a 2.0 port, then power down and move the boot drive to a blue SS port, it seems to run just fine and at full speed. It seems to like locking up and failing during the upgrade script that runs on reboot after installing an update. Is Scale any better with USB 3.0 support? I'd like to leverage the available USB 3.0 ports with enclosures, but not if it's going to be as finicky as it seems to be in Core.

|