
I can't remember if I've posted this before, but I'm just gonna do it again if so: The READ column counts the number of failed READ commands issued by ZFS to the kernel's disk driver subsystem. The WRITE column is the same, but for WRITE commands. The CKSUM column counts the number of times the drive returned, in response to a READ command, something other than what ZFS knows should be there, based on the checksum.

BlankSystemDaemon fucked around with this message at 00:42 on May 8, 2024
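
A minimal sketch of reading those three columns programmatically. It assumes the usual `zpool status` layout (a `NAME STATE READ WRITE CKSUM` header, one row per pool, vdev, and device, and a blank line ending the table); the function name `devices_with_errors` is hypothetical. The counters are compared as text because `zpool status` can abbreviate large counts.

```python
def devices_with_errors(status_text: str) -> list[tuple[str, str, str, str]]:
    """Return (name, read, write, cksum) for every config row with a non-zero counter.

    Assumes the usual `zpool status` layout: a NAME/STATE/READ/WRITE/CKSUM
    header, one row per pool/vdev/device, and a blank line ending the table.
    """
    flagged = []
    in_table = False
    for line in status_text.splitlines():
        fields = line.split()
        if not in_table:
            # Skip everything until the column header row.
            in_table = fields[:5] == ["NAME", "STATE", "READ", "WRITE", "CKSUM"]
            continue
        if not fields:
            break  # a blank line ends the config table
        if len(fields) < 5:
            continue  # section labels like "spares", or rows like "ada2 AVAIL"
        name, _state, read, write, cksum = fields[:5]
        # READ/WRITE: failed I/O commands; CKSUM: data that failed checksum verification.
        # Counters can be abbreviated (e.g. "1.2K"), so compare them as text.
        if (read, write, cksum) != ("0", "0", "0"):
            flagged.append((name, read, write, cksum))
    return flagged
```

Feed it the text of `zpool status` (for example `subprocess.run(["zpool", "status"], capture_output=True, text=True).stdout`); every row it returns has at least one non-zero counter worth a closer look.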


| # ? May 25, 2024 18:53 |


PitViper posted: Read errors, nothing in the cksum column. Surprisingly, swapping cables on the two disks in question seems to have "resolved" the issue, in that I'm 60% of the way through replacing one of those two disks with not a single error reported. Still replacing them regardless.

Honestly, I'd do another scrub once all the resilvering is done and see what you can see.


Got myself another Synology NAS, gonna use it for btrfs snapshots and move the old one offsite with a wireguard tunnel and use it as a restic target. Anyone else done this before?


I am not a pro computer person and have a weird problem that's probably got a completely obvious answer I'm just missing; help appreciated. I've got to download a bunch of large (1-10 GB) *.gz files amounting to around 300-500 GB. They are only accessible through a web browser interface, though, which lets me download either the entire folder or the files individually. I just need the entire folder. However, the website itself seems to be throttling the downloads to about 1-10 Mb/s, meaning it's going to take several hours or more. Ultimately I want these files on my home NAS, which is a headless Unraid server. The ideal solution would be to just fire up FTP from the NAS, but I can't, given the way it's accessible (only through the website). tl;dr - Does anyone know of a way to download data from a webpage interface directly from Unraid?


That Works posted: tl;dr - Does anyone know of a way to download data from a webpage interface directly from Unraid?

Kinda hard to tell from your description. There are FTP web interfaces; if that's the case, you'd be able to use FileZilla to connect. Can you connect to the web page in multiple tabs and download? If so, does it double the bandwidth? It's possible the website isn't hosted on a fast upload connection.


deong posted: Kinda hard to tell from your description.

Nothing that I can connect with via FTP. I'll try downloading in multiple tabs, but I think that's still gonna be a lot of tedious work if I can't just get it directly to the NAS. It's a good backup solution if nothing else, though; thank you.


I should think that, assuming you have the URLs for the files, you could run a wget or curl script from the Unraid console, which would let you specify the output directory directly on the NAS.
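
The same idea as a wget/curl loop, sketched in Python's standard library, assuming Python 3 is available somewhere that can write to the share (stock Unraid doesn't necessarily ship it, so a container or plugin may be needed). The function name `download_all` is hypothetical, and it assumes each URL's last path component is a usable filename.

```python
import os
import shutil
import urllib.parse
import urllib.request
from typing import Optional


def download_all(urls: list[str], dest_dir: str,
                 opener: Optional[urllib.request.OpenerDirector] = None) -> list[str]:
    """Stream each URL into dest_dir; return the paths written on this run."""
    opener = opener or urllib.request.build_opener()
    os.makedirs(dest_dir, exist_ok=True)
    saved = []
    for url in urls:
        # Assumption: the last URL path component is a usable local filename.
        name = os.path.basename(urllib.parse.urlparse(url).path)
        target = os.path.join(dest_dir, name)
        if os.path.exists(target):
            continue  # already fetched on an earlier run
        partial = target + ".part"
        with opener.open(url) as resp, open(partial, "wb") as out:
            # Copy in 1 MiB chunks so multi-GB files never sit in memory.
            shutil.copyfileobj(resp, out, 1024 * 1024)
        os.replace(partial, target)  # rename only after a complete download
        saved.append(target)
    return saved
```

Something like `download_all(["https://example.com/files/part1.gz"], "/mnt/user/downloads")` (URL and share name are placeholders) writes straight to the array. Files already present are skipped, so rerunning after an interruption only fetches what's missing; half-finished files stay as `.part` and get fetched again.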


Scruff McGruff posted: I should think that, assuming you have the URLs for the files, you could run a wget or curl script from the Unraid console which would allow you to specify the Output directory directly on the NAS.

Of course... This is the simple thing I was not thinking of. I gotta read up on my wget syntax, but I am sure I have done this in the past.

e: Will wget let you authenticate etc. for an HTTPS / password-protected site? I've literally only messed with it a few times ever.


I’m planning on moving my docker containers off of my Synology and onto a dedicated server. I’m assuming I can just mount shared folders as needed from the server and update the docker compose file to the new path, right? Or are there shenanigans of some kind involved?


You could try using the Network tab of your browser's developer tools to get a curl command that I think might include the required cookies. Open dev tools > Network and download a file. Find the file in the list on the Network tab and right-click it. Somewhere in the context menu will be the option to copy the URL as a curl command (Windows or bash/POSIX). Depending on exactly how the source website has been written, you can probably generalise the command into a script for all the files.


That Works posted: Of course...

I can't speak for every website, but I know you can use --user to set a username in the header and --ask-password to get the command to prompt you to enter a password so that you're not storing it in plaintext.


That Works posted: Of course...

The username/password command-line option is for sites that use the old-school HTTP authentication method. If the site has its own web login script (i.e. the majority of sites), it generally just sets a cookie (or two), like SA does (e.g. bbsession), which you'll need to copy from a logged-in session into a text file (in the Netscape cookies.txt format) and load with the --load-cookies=file option. And hope that it's IP-agnostic.
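
Both cases sketched with Python's standard library, as an alternative to the wget flags: `http.cookiejar.MozillaCookieJar` reads the same Netscape cookies.txt format that `--load-cookies` expects (the file has to start with a `# Netscape HTTP Cookie File` header line), and `getpass` plays the role of `--ask-password` for old-school HTTP auth. The function `make_opener` and its parameters are hypothetical; whether a copied session cookie works from the NAS still depends on the site.

```python
import getpass
import http.cookiejar
import urllib.request
from typing import Optional


def make_opener(cookie_file: Optional[str] = None,
                user: Optional[str] = None,
                base_url: Optional[str] = None) -> urllib.request.OpenerDirector:
    """Build an opener that sends saved login cookies and/or HTTP Basic auth."""
    handlers = []
    if cookie_file:
        # Netscape-format cookies.txt exported from a logged-in browser session.
        jar = http.cookiejar.MozillaCookieJar(cookie_file)
        # Session cookies are usually flagged "discard", so keep them anyway.
        jar.load(ignore_discard=True, ignore_expires=True)
        handlers.append(urllib.request.HTTPCookieProcessor(jar))
    if user:
        if not base_url:
            raise ValueError("base_url is required for HTTP auth")
        # Prompt for the password instead of storing it in plaintext.
        passwords = urllib.request.HTTPPasswordMgrWithDefaultRealm()
        passwords.add_password(None, base_url, user,
                               getpass.getpass(f"Password for {user}: "))
        handlers.append(urllib.request.HTTPBasicAuthHandler(passwords))
    return urllib.request.build_opener(*handlers)
```

`make_opener(cookie_file="cookies.txt").open(url)` then sends the saved cookies with every request, the same way wget would after `--load-cookies`.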


Scruff McGruff posted: I can't speak for every website but I know you can use --user to set a username in the header and --ask-password to get the command to prompt you to enter a password so that you're not storing it in plaintext.

Stack Overflow led me to this also; gonna try it first and, if unsuccessful, try the post above. Thanks all.


Harik posted: My old NAS is getting fairly long in the tooth, being cobbled together from a recycled Netgear ReadyNAS motherboard (built circa 2011, picked it up in 2018) and a pair of old Xeon X3450s.

Took nearly a year because my dog got sick and wiped out my toy fund (he's fine now, good pupper). I don't remember if anyone answered the question about PCIe -> U.2 adapters? I want to throw in a used enterprise U.2 for my torrent landing directory (nocow, not mirrored because I'm literally in the process of downloading Linux ISOs and can just restart if the drive dies) and, dunno, a mirrored pair of small Optanes for service databases and other fast, write-heavy stuff. Maybe ZFS metadata? I've got 2 weeks before the last of the main hardware arrives and I can finally do this upgrade.


Those adapters exist; StarTech is a fairly reliable option in that space. They're kind of expensive for what they are (pretty much just a PCB with traces on it), and there are dodgy Chinese options too. But if the StarTech says Gen 4 capable, you can be pretty confident it'll work at that rate.


FAT32 SHAMER posted: I’m planning on moving my docker containers off of my Synology and onto a dedicated server. I’m assuming I can just mount shared folders as needed from the server and update the docker compose file to the new path, right? Or are there shenanigans of some kind involved?

Some containers don't like having their databases/specific files on a network drive, if you are thinking of doing that.


Harik posted: took nearly a year because my dog got sick and wiped out my toy fund (he's fine now, good pupper)

I've used StarTech U.3 to PCIe adapters at work, and they seem to be fine; I guess U.2 would be very similar.


Hello. I have a question. My dad has a NAS server setup for his music collection and is having difficulty playing music from it. Previously he has been using Sonos, but he has run into problems with Sonos having a hard track limit (something like 64,000 songs, which is not nearly enough for his entire collection) and also their app is currently hosed from a recent update. He is wondering if there is a better solution to playing music directly off a NAS server than Sonos? Any insight would be greatly appreciated thanks!!


fridge corn posted: Hello. I have a question. My dad has a NAS server setup for his music collection and is having difficulty playing music from it. Previously he has been using Sonos, but he has run into problems with Sonos having a hard track limit (something like 64,000 songs, which is not nearly enough for his entire collection) and also their app is currently hosed from a recent update. He is wondering if there is a better solution to playing music directly off a NAS server than Sonos? Any insight would be greatly appreciated thanks!!

What is he streaming the music to? If there is a client compatible with what he is using, I've heard Navidrome mentioned frequently.


Computer viking posted: I've used Startech U.3 to PCIe adapters at work, and they seem to be fine; I guess U.2 would be very similar.

On another note entirely, I'm having trouble understanding the arc_summary code:


It's KiB, MiB, GiB etc. for data volumes, and K, M, G etc. for counts.
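
To illustrate the convention (a sketch, not arc_summary's actual code): data volumes use binary prefixes, where each step is a factor of 1024, while counts use plain decimal suffixes, where each step is a factor of 1000. The helper names `fmt_bytes` and `fmt_count` are hypothetical.

```python
def fmt_bytes(n: float) -> str:
    """Data volumes: binary prefixes, each step is a factor of 1024."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB"):
        if abs(n) < 1024:
            return f"{n:.1f} {unit}"
        n /= 1024
    return f"{n:.1f} PiB"


def fmt_count(n: float) -> str:
    """Counts (hits, misses, ...): decimal suffixes, each step is a factor of 1000."""
    for suffix in ("", "K", "M", "G", "T"):
        if abs(n) < 1000:
            return f"{n:.1f}{suffix}"
        n /= 1000
    return f"{n:.1f}P"
```

So a figure shown as `2.0G` in a hits/accesses line means two billion events, not 2 GiB of data.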


OK, that's reasonable. Is that all reads? I'm skeptical that a fairly busy server with ~10 active VMs is only doing 2 billion reads in a month.


ARC total accesses? I presume so. As for whether that's a lot or a little, you have to consider that these VMs run their own disk caches.


fridge corn posted: Hello. I have a question. My dad has a NAS server setup for his music collection and is having difficulty playing music from it. Previously he has been using Sonos, but he has run into problems with Sonos having a hard track limit (something like 64,000 songs, which is not nearly enough for his entire collection) and also their app is currently hosed from a recent update. He is wondering if there is a better solution to playing music directly off a NAS server than Sonos? Any insight would be greatly appreciated thanks!!

Plex with Plexamp, but that might be on the overkill side.


hogofwar posted: What is he streaming the music to?

At the moment he is streaming to Sonos speakers with the Sonos app, which is where he's running into the aforementioned issues, but he is not averse to buying new hardware/devices/speakers etc. I'll have a look at Navidrome, thanks.


IOwnCalculus posted: Plex with Plexamp but that might be on the overkill side.

Overkill how? I'm not sure my father understands the meaning of overkill when it comes to his music collection.


That Works posted: I am not a pro computer person and have a weird thing that's probably got a completely obvious answer I am just missing, help appreciated.

HTTrack (https://www.httrack.com), the CLI version.


hogofwar posted: Some containers don't like their databases/specific files on a network drive, if you are thinking of doing that

Yeah, anything related to the container itself would be on the server, but for something like Plex I'd like to keep the data on the NAS.


Harik posted: took nearly a year because my dog got sick and wiped out my toy fund (he's fine now, good pupper)

Also, cute pupper. Please pet him from me.

Harik posted: I wasn't even aware there was U.3 and lol it exists because the SAS lines weren't shared with PCIe on U.2. Why do we have to keep dragging SATA/SAS into every interface?

So of course now's the time for the hyperscalers to move to E1.L or E3.S, or even using NVMe-over-PCIe for spinning rust, because it simplifies the design of the rack servers.

For ARC, the only thing that really matters is the hit/miss ratio. If you're below 90% and haven't maxed your memory, download more RAM. If you've maxed your memory, you can look into L2ARC. Just remember that L2ARC isn't MFU+MRU like ARC (it's a simple LRU-evict cache), and that every LBA on your L2ARC device will take up 70 bytes of memory that could otherwise be used by the ARC (meaning you can OOM your system if you add one that's too big).

Combat Pretzel posted: ARC total accesses? I presume so.
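
On Linux, OpenZFS exposes the raw ARC counters in `/proc/spl/kstat/zfs/arcstats` (FreeBSD has them under the `kstat.zfs.misc.arcstats` sysctls instead). A minimal sketch that computes hits / (hits + misses) from that file's text, assuming its usual three-column `name type data` layout; `arc_hit_ratio` is a hypothetical helper, and the 90% cutoff is just the rule of thumb from the post.

```python
def arc_hit_ratio(arcstats_text: str) -> float:
    """ARC hit ratio = hits / (hits + misses), parsed from arcstats kstat text."""
    stats = {}
    for line in arcstats_text.splitlines():
        fields = line.split()
        # Data rows look like "<name> <type> <value>"; the two header rows
        # (a kstat summary line and "name type data") don't match this shape.
        if len(fields) == 3 and fields[2].isdigit():
            stats[fields[0]] = int(fields[2])
    hits, misses = stats["hits"], stats["misses"]
    return hits / (hits + misses)
```

Read `/proc/spl/kstat/zfs/arcstats` into a string, pass it in, and compare the result against 0.90; the counters are cumulative since boot, so a freshly rebooted box will look worse than it really is.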


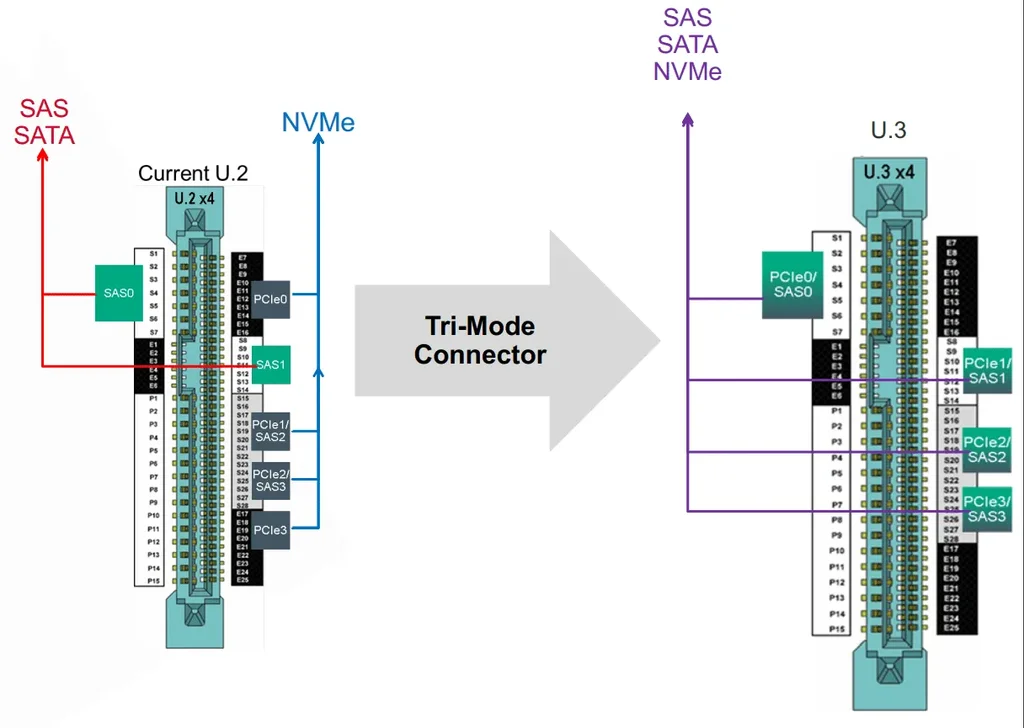

BlankSystemDaemon posted: For PCIe, M.2 (with the right keying), U.2, and U.3 are all compatible - and unless you need bifurcation, can all be electrically coupled to work with the right adapter.

Pupper pet.

Yes, I get that they can all be mashed together on the same wires, but why tho? These are new drive designs for these new exotic connectors, so just make a PCIe interface to spinning rust and call it a day. It's ridiculous to make these hyper-complex interfaces, especially when drives are already incorporating flash caches and can benefit from the simplified bulk transfer of data to begin with! They make a profit selling $15 NVMe drives, so it's not like the interface is stupidly expensive to implement. You don't even need the latest Gen5 PCIe stuff for drives that can't transfer that fast anyway.

In short, I hope all these "legacy interests demand their special snowflake chip implementing a protocol from 1979 still be commercially viable" decisions blow up in their faces and the NVMe-everything faction wins out. It'd be good for the industry overall to stop subsidizing Adaptec.

e: Only brand-new U.3 drives can be used with U.3 hosts; SAS, SATA, and U.2 drives are all physically or electrically incompatible, so you need all-new designs, and we need all these new designs so... Adaptec can still sell SCSI chips. In 2024.

BlankSystemDaemon posted: For ARC, the only thing that really matters is the hit/miss ratio.

BlankSystemDaemon posted: ARC is better than the virtualized guest OS' caching, though.

Harik fucked around with this message at 13:16 on May 10, 2024


tugm4470 was out of the H11SSL-i after I ordered and offered me a free upgrade to the -C; I asked him to throw in the SAS breakout cables since I need all 16 ports, and he agreed. Let's see how this goes. I may actually have the SAS cables already, though; I think they're the same breakout cables as my previous board. I just need to be ready to flash the controller to IT mode when it arrives, I guess.

E: Drat, it's here already; he upgraded me to priority shipping for free as well (it was originally going to be here in 2 weeks). It beat most of the rest of the parts; I don't have a CPU cooler, PSU, or NVMe for it yet.

Harik fucked around with this message at 01:41 on May 14, 2024


BlankSystemDaemon posted: and that every LBA on your L2ARC device will take up 70 bytes of memory that could otherwise be used by the ARC

code:


The reason for U.3 is that you could have drive slots that accept NVMe, SAS, or SATA drives, because the controller's high-speed signals can switch between them; this is usually referred to as "tri-mode PHY". I forget why U.2 wasn't like this from the start, annoyingly.


Combat Pretzel posted: FFS, you keep claiming this. It's 70 bytes per ZFS data block.

The problem is, records in ZFS are variable-size, and there's no real way to get the distribution across an entire pool. You should still max out your memory before using L2ARC, though.


Unless you go bonkers with L2ARC, or you're severely memory-limited, the trade-off may be worth it. In my case, it's limited to pool metadata and ZVOLs with either 16KB or 64KB volblocksize. I'm giving away 0.61GB of the 52GB of ARC to keep 400GB of data warm on a Gen3 NVMe SSD. It works fine for running games on Steam (judging by the fast loads after a reboot has cleared the ARC). If you're working with the default ZFS record size of 128KB (or bigger), you might get better ratios of headers vs. data. Compression reduces it only so far (which I'm using on these ZVOLs, too).

Combat Pretzel fucked around with this message at 23:42 on May 10, 2024
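
The header cost is simple arithmetic: one header per cached block, so the RAM spent is roughly (L2ARC bytes / average block size) × header size. A sketch using the 70-byte figure quoted in the thread (the real header size depends on the OpenZFS version, so treat it as an assumption); `l2arc_header_overhead` is a hypothetical name.

```python
# Per-block L2ARC header size as quoted in the thread; the real value
# depends on the OpenZFS version, so treat it as an assumption.
L2ARC_HDR_BYTES = 70


def l2arc_header_overhead(l2arc_bytes: int, avg_block_bytes: int) -> int:
    """Approximate ARC memory (bytes) spent on headers for a full L2ARC device.

    One header per cached ZFS block, so smaller blocks mean more headers.
    """
    blocks = l2arc_bytes // avg_block_bytes
    return blocks * L2ARC_HDR_BYTES
```

For 400 GiB of L2ARC that works out to about 229 MB of headers at 128 KiB blocks, versus about 1.8 GB at 16 KiB, which is why small-volblocksize ZVOLs cost more RAM per gigabyte cached than default-recordsize datasets.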


I'm hoping that Microsoft (and whoever else "spies") are going to release data on whether these extreme coronal mass ejections this weekend led to an increased number of system crashes.


My 6TB WD Red is on its last legs, so I need to replace it.

- Are there any noise issues with WD vs. Seagate worth worrying about? I got a Seagate as a backup drive, and I had to script it to manually write to a file or the read head would make a clicking sound when it returned.
- Is anything beyond WD Red worth getting nowadays? I don't see a lot of Blues, and Black and Gold just sound like "gamer" upsells.
- Anything interesting coming up, or should I just buy a new 12 or 18TB drive?

Typical use cases are just various media and storage that don't get accessed that often.


WD drives under 8TB use SMR instead of CMR, making them basically useless for any kind of RAID or ZFS.


I think they make both and you gotta check carefully which one you're ordering.


WD Red Plus and higher are CMR. Standard Red 6TB and below are SMR.
