|

Another look at the Talos Principle Vulkan renderer at AnandTech. Their results are even worse than ComputerBase's, with Vulkan losing to OpenGL in some cases.

|

|

|

|

|

| # ? Jun 4, 2024 15:49 |

|

repiv posted: Another look at the Talos Principle Vulkan renderer at AnandTech

I don't think the intention of getting Talos running on Vulkan on day 1 was to beat another API in performance.

|

|

|

|

Croteam: "Hey look, we hacked our game to work on Vulkan, this thing is real now, you can even try it out"

Everyone else: "Holy poo poo performance sucks"

|

|

|

|

Truga posted: Croteam: "Hey look, we hacked our game to work on Vulkan, this thing is real now, you can even try it out"

Khronos Group: Stop giving us bad press

|

|

|

|

FaustianQ posted: Khronos Group: Stop giving us bad press

Everyone else: Oh wait, you're the OpenGL guys. Congrats on the improvement!

|

|

|

|

They should have pushed out their ARM demos to the public; it's obviously working much better there.

|

|

|

|

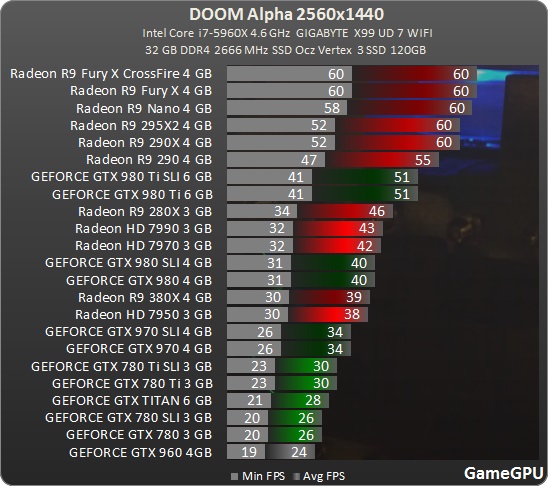

Going with what this thread is all about, after AMD dies,  nvidia nvidia

http://gamegpu.com/action-/-fps-/-tps/doom-alpha-test-gpu.html

Honestly though, I wonder what's going on here. Obviously SLI isn't working, but Nvidia seems to be performing really oddly there, pre-optimization or not... Then again, it is Bethesda now; I don't know how many, if any, id dudes they have left.

|

|

|

|

Doom is using the id Tech 6 game engine, which is an OpenGL/Vulkan-based engine. Makes sense that AMD would have better optimization, given the Mantle-to-Vulkan connection.

|

|

|

|

The 290 outdoing the 980ti again

|

|

|

|

Reminds me of when Doom 3 was in development and Carmack came out and praised the 9700 Pro relentlessly. And rightly so at the time, but the engine ended up running faster on Nvidia by the time the next generation came out (X850 vs 6800 Ultra) and people could max out AA settings. In retrospect, R300 was such a good chip.

|

|

|

|

Tanreall posted: Doom is using the id Tech 6 game engine, which is an OpenGL/Vulkan-based engine. Makes sense that AMD would have better optimization, given the Mantle-to-Vulkan connection.

I honestly didn't know that. I figured Bethesda wasn't interested in keeping a big engine like that around after their rockstar dev left.

vvvv: It won't, but I'd like to see that happen just to take some of the dumb smug out of Nvidia fanboys' faces.

|

|

|

|

I will buy someone a forums upgrade if that chart ends up being true 24 hours after release day.

Truga posted: I honestly didn't know that. I figured Bethesda wasn't interested in keeping a big engine like that around after their rockstar dev left.

The data is on my side. I'd better start embracing being an Nvidia fanboy, even though all I feel like I'm doing is being bitter and critical of AMD's performance. Do I have to sign up somewhere, or what's the deal? (For real though, I think it bodes very well that the 290 is showing almost 60 fps at 1440p[!])

penus penus penus fucked around with this message at 22:45 on Feb 17, 2016

|

|

|

Truga posted: Going with what this thread is all about, after AMD dies,

I made a joke post about Doom, Nvidia and API transitions being a bad mix last year, did not expect reality to get ahead of me.

|

|

|

|

Stanley Pain posted: That's why you get NvInspector and force SLI on for games that don't have it. You can usually get things working pretty well that way.

It's great when that works, but it usually doesn't, at least not without some degree of glitchiness, in my experience. It sucks to have to play with flickering textures when you specifically paid for a system to make games look pretty.

AzureSkys posted: By not working on SLI or needing proper support, does that mean the game won't run, graphics problems, or is there a performance decrease?

Well, by default, if the driver doesn't have an SLI profile built in, or if the profile flags the game as SLI-incompatible, it'll just use a single card. You wouldn't notice a performance drop because the additional performance was never there in the first place - and if the game's well-optimized (Dragon's Dogma PC and Metal Gear Solid V come to mind) you might not even need the second card for it to run well. You'd only notice if you ran monitoring software.

In AC: Syndicate's case, there was an active SLI profile but it was badly implemented, and people actually got worse performance with SLI enabled. At least Ubisoft got it fixed, eventually. I heard Warner Bros. completely gave up on getting it to work in Arkham Knight.

|

|

|

|

Awwwww yissss, scored another B-stock 780 Ti. I'm gonna need a different mobo to run SLI but I'm thinking of doing that anyway. I could also flip them and buy a 980 Ti, although that seems dumb given the next-gen cards aren't too far off. Or I could just swap this in instead of my ref blower card and flip the old one, and save the money for a couple months. I could definitely use more GPU power right now, just worried about taking the depreciation hit over the course of a couple months instead of a year or two.

|

|

|

|

Classified reference what

|

|

|

|

Truga posted: https://lists.freedesktop.org/archives/mesa-dev/2016-February/107932.html

Well, yeah, they already showed DOTA 2 running on an early version of Vulkan at GDC last year. They also put a huge amount of money and manpower into Vulkan. Most of the header files, tool sources and other code have "Copyright (c) 2015-2016 Valve Corporation" at the top along with Khronos, LunarG and Google.

The_Franz fucked around with this message at 02:38 on Feb 18, 2016

|

|

|

Truga posted: http://gamegpu.com/action-/-fps-/-tps/doom-alpha-test-gpu.html

Google Translate posted: Apparently the game will automatically adjust settings, not allowing the user to manipulate (not amenable to logic, but apparently setting is determined based on the volume of video adapter) . It is possible for AMD cards also used several lower settings than the NVIDIA older, but that we learn to release games that will be available free access to the graphic settings of the game. So we advise not to use our test as a basis for making a decision on buying a video card for this game.

Forced dynamic quality scaling pretty much invalidates the results, although the disparity between NV and AMD is still weird.

|

|

|

|

repiv posted: Forced dynamic quality scaling pretty much invalidates the results, although the disparity between NV and AMD is still weird

It really is too bad not being able to run modern games at 120 fps on a 120 Hz refresh rate with smooth-as-glass gameplay, especially when you have the hardware available. Even though it was already doable almost two decades ago in games like Quake 3 with a GeForce 256 DDR and a 21" Sony CRT.

|

|

|

|

DaNzA posted:Are they going to have the awful fps cap @ 60fps and basically nullify the benefit of having 120/144hz?

|

|

|

|

Don Lapre posted: Classified reference what

Apparently it's a Classified non-ref board with a chip running at stock clocks. Possibly with the difference of an 8+6 instead of 8+8 connector. Not sure what to expect from it - the Classified is a premium board and you wouldn't think they'd put low-end chips on it, but maybe they had surplus boards. Or maybe they were regular boards that got down-clocked for TDP or market segmentation.

Guess I'll see how this goes. Can't be worse than my reference card, since it's got the custom board.

Even weirder: after I bought this there were some Kingpin Reference Edition cards too. Wat?

|

|

|

|

DaNzA posted: Are they going to have the awful fps cap @ 60fps and basically nullify the benefit of having 120/144hz?

The idTech architecture is heavily geared towards consoles and the 60 Hz refresh rates of TVs. The new Wolfenstein games sported dynamic resolution on the X1 and PS4 to maintain 60 FPS, along with megatextures, etc., so it's apparent to me that these are intended for a majority console audience. I think DOOM will continue this trend. It's not that surprising to me that at this stage AMD has performance gains, knowing that the consoles all use AMD GPUs.

|

|

|

|

DrDork posted: Depends on if they lock physics to framerate (ahem, Bethesda).

A lot of games lock physics to framerate and it's not a big deal, because most games only use physics for eye-candy with no gameplay impact. Bethesda actually bound all animation to framerate, i.e. at 120hz you'd fire twice as fast, run twice as fast, etc.
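To illustrate the point above, here is a minimal sketch (not taken from any actual engine) of why frame-bound animation speeds up with the frame rate. The function names, the per-frame step and the speed constant are all made up for illustration: `simulate_frame_locked` moves a fixed amount every rendered frame, while `simulate_time_based` scales each step by the frame's duration.

```python
def simulate_frame_locked(fps: int, seconds: float, step_per_frame: float = 0.1) -> float:
    """Advance a fixed distance on every rendered frame (hypothetical values).

    The distance covered depends on how many frames were rendered,
    so a higher frame rate means faster movement.
    """
    frames = int(fps * seconds)
    position = 0.0
    for _ in range(frames):
        position += step_per_frame
    return position


def simulate_time_based(fps: int, seconds: float, speed_per_second: float = 6.0) -> float:
    """Advance by speed * delta-time on every frame (hypothetical values).

    Each step is scaled by the frame duration, so the distance covered
    in a given wall-clock time does not depend on the frame rate.
    """
    frames = int(fps * seconds)
    dt = 1.0 / fps
    position = 0.0
    for _ in range(frames):
        position += speed_per_second * dt
    return position


if __name__ == "__main__":
    for fps in (60, 120):
        print(fps, simulate_frame_locked(fps, 1.0), simulate_time_based(fps, 1.0))
```

In this toy model the frame-locked version covers about twice the distance at 120 fps as at 60 fps, which is the "run twice as fast" effect described in the post, while the time-based version covers roughly the same distance at both rates.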

|

|

|

|

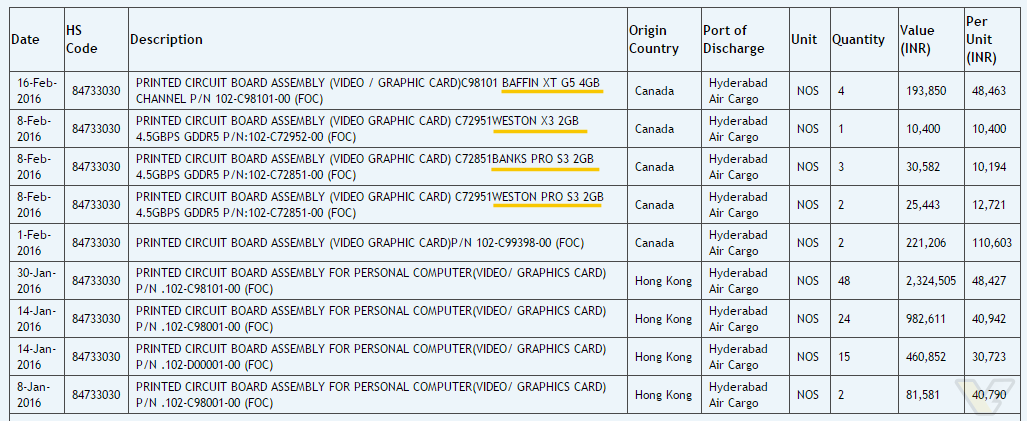

Some speculation for the thread. Seems the codenames are: Greenland, Ellesmere, Baffin, Weston and Banks. Baffin XT is apparently the previously spotted GPU that everyone thought might be Polaris10/Greenland/Vega (it shares the same board model, which may not be definitive). Weston and Banks seem to be low-end cards, and there is some speculation that they are the new 28nm GloFo design and/or effectively a rebrand.

|

|

|

|

Definitely look like rebrands or die shrinks, from the memory size. Baffin looks like the x90 successor though

|

|

|

|

Maybe a shrunk Fury with its whopping 4GB HBM? Good luck trying to sell that dud again in a few months for anything over $199.

More likely 480X or even 470X.

|

|

|

|

sauer kraut posted: Maybe a shrunk Fury with its whopping 4GB HBM? Good luck trying to sell that dud again in a few months for anything over $199

Wouldn't they be able to do a real Fury with HBM2, or is there a big difference in the layout of HBM1/2?

|

|

|

|

Michymech posted: Wouldn't they be able to do a real Fury with HBM2, or is there a big difference in the layout of HBM1/2?

There is some difference in layout. HBM1 is actually smaller in footprint than HBM2. SK Hynix's numbers are:

- HBM1 is 5.48 mm × 7.29 mm (39.94 mm²)
- HBM2 is 7.75 mm × 11.87 mm (91.99 mm²)

HBM2 stacks also have different heights, based on capacity/number of stacks (0.695 mm/0.72 mm/0.745 mm vs. HBM1's single specification of 0.49 mm). This would require a heatspreader on top of the theoretical Fury with HBM2.

So, assuming no die shrink, using a layout like the present Fury cards is completely out of the question. Fortunately, since we know that Samsung is building HBM2-specced 8 Gb DRAM layers, we can probably get away with just two stacks, with the die moved off to one side, and the stacks to the right. Until we know the actual footprint of "What Fury Was Supposed To Be", inferences going any further would have no basis in reality.
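The footprint figures quoted above can be checked with a few lines of arithmetic. This is only a sketch: the dimensions are the ones given in the post, while the comparison of four HBM1 stacks (the count used on the existing Fury cards) against the two HBM2 stacks the post speculates about is added here for illustration.

```python
# Package dimensions in millimetres, as quoted in the post above.
HBM1_MM = (5.48, 7.29)
HBM2_MM = (7.75, 11.87)


def footprint_mm2(dims):
    """Return the rectangular footprint (width * length) in mm^2."""
    width, length = dims
    return width * length


hbm1_area = footprint_mm2(HBM1_MM)
hbm2_area = footprint_mm2(HBM2_MM)

# How much larger a single HBM2 stack is than a single HBM1 stack.
area_ratio = hbm2_area / hbm1_area

# Illustrative comparison: four HBM1 stacks vs. the two HBM2 stacks
# speculated about in the post.
four_hbm1 = 4 * hbm1_area
two_hbm2 = 2 * hbm2_area

if __name__ == "__main__":
    print(f"HBM1: {hbm1_area:.2f} mm^2, HBM2: {hbm2_area:.2f} mm^2")
    print(f"HBM2 / HBM1 area ratio: {area_ratio:.2f}")
    print(f"4x HBM1: {four_hbm1:.2f} mm^2, 2x HBM2: {two_hbm2:.2f} mm^2")
```

Under these numbers a single HBM2 stack takes more than twice the area of an HBM1 stack, so even two HBM2 stacks occupy somewhat more interposer area than four HBM1 stacks, which is consistent with the claim that the current Fury layout could not simply be reused.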

|

|

|

|

Some currency conversions there from INR (Indian rupee) to USD:

- Baffin XT board: $700 per unit
- Weston Pro board: $185 per unit
- Weston board: $150 per unit
- Banks Pro board: $150 per unit

Honestly not sure what's going on there. MSRP of $1000, $500, $350, $250 respectively?

|

|

|

|

Anime Schoolgirl posted: Definitely look like rebrands or die shrinks, from the memory size.

Considering AMD's naming scheme, Baffin looks like a Fiji shrink and is likely the 480 and 480X IMHO, as there would be a titanic gulf between the projected Greenland and even a shrunk Fury. 470 and 470X look to be Ellesmere; Weston and Banks are likely the 440 and 450, which leaves the 490 and 460 open. Greenland might be the 490 and 490X, with Fury either being dropped or being full Greenland.

I think it's possible Ellesmere is Tonga, shrunk and unshrunk, for the 470, 470X, 460 and 460X. My logic is dumb but is thus: both are GCN4, but while 14nm Ellesmere has all features, 28nm Ellesmere will be stripped of its compute capability and async shaders, like Maxwell. Same for Weston and Banks; on such low-end cards it's not required and they won't really be able to make use of such features. I can't back up my GDDR5X claims, but it would mean greater separation in capability between XT and Pro.

- Fury (Crimson? Fits the initiative) is Greenland XTX, 16GB HBM2, 14nm
- 490X is Greenland XT, 16GB HBM2, 14nm
- 490 is Greenland Pro, 8GB HBM2, 14nm
- 480X is Baffin XT with 4GB HBM1, 14nm
- 480 is Baffin Pro with 4GB HBM1, 14nm
- 470X is Ellesmere XTX with 6GB GDDR5X, 14nm
- 470 is Ellesmere XT with 6GB GDDR5, 14nm
- 460X is Ellesmere Pro with 3GB GDDR5X, 28nm (TSMC)
- 460 is Ellesmere LE with 3GB GDDR5, 28nm (TSMC)
- 450X is Weston XT with 2/4GB GDDR5, 28nm (GloFo)
- 450 is Weston Pro with 2/4GB GDDR5, 28nm (GloFo)
- 440 is Banks Pro with 2/4GB GDDR5, 28nm (TSMC)

AMD doesn't do dedicated cards below the 440, or it's just integrated chips slapped on a PCB. I mean, with DDR4 even 8 CUs will catch up to the 730/740/250, so an R5 430 is near pointless.

Evidence to support my 28nm claims

I think the die size and how Pitcairn is looking to be low end this generation (comparable die sizes) makes me think this is Pitcairn Rev.2 for better power consumption and an update to GCN4.

However, 250 mm² is a bit small for Tonga, which runs counter to my claim. I don't know how much space you'd get from gutting Tonga though.

|

|

|

|

Prototypes are probably valued higher than production examples.

|

|

|

|

xthetenth posted: Prototypes are probably valued higher than production examples.

Yep, Fiji XT was valued around $1100 on Zauba.

|

|

|

|

repiv posted: Yep, Fiji XT was valued around $1100 on Zauba.

Which puts, if the ratio stays true, Baffin XT at an MSRP of ~$420, Weston at ~$110 and Banks at ~$60. For what they sound like, those prices actually make sense. $420 puts Baffin in 490 range though.
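The ratio argument above can be sketched as a simple scaling. The post does not say which retail price it paired with Fiji XT's Zauba valuation; the sketch below assumes the Fury X launch price of $649, and takes the per-unit board values from the currency-conversion post earlier in the thread. The function and variable names are hypothetical.

```python
FIJI_XT_ZAUBA_USD = 1100.0  # prototype valuation quoted in the thread
FURY_X_MSRP_USD = 649.0     # assumption: Fury X launch price as the retail reference


def estimate_msrp(zauba_value_usd: float,
                  ref_zauba: float = FIJI_XT_ZAUBA_USD,
                  ref_msrp: float = FURY_X_MSRP_USD) -> float:
    """Scale a Zauba valuation by the reference MSRP-to-valuation ratio."""
    return zauba_value_usd * ref_msrp / ref_zauba


# Per-unit values (USD) from the currency-conversion post in the thread.
listed_values = {
    "Baffin XT": 700.0,
    "Weston Pro": 185.0,
    "Weston": 150.0,
    "Banks Pro": 150.0,
}

estimates = {name: estimate_msrp(value) for name, value in listed_values.items()}

if __name__ == "__main__":
    for name, price in estimates.items():
        print(f"{name}: ~${price:.0f}")
```

With these assumptions the Baffin XT and Weston Pro estimates land close to the ~$420 and ~$110 figures in the post; the ~$60 Banks figure is not reproduced, so it presumably came from different inputs.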

|

|

|

|

SwissArmyDruid posted:

Out of curiosity, where'd you get those numbers for Hynix HBM2?

|

|

|

|

Durinia posted: Out of curiosity, where'd you get those numbers for Hynix HBM2?

Sourced from AnandTech. http://www.anandtech.com/show/9969/jedec-publishes-hbm2-specification

|

|

|

|

Kinda wish AMD and Nvidia would just move to 4GB on their lower end cards already as the starting point, doesn't seem worthwhile to go with a 2GB card unless it's something for low end/low power usage. I'd say keep 2 low or mid tier cards max with 2GB, the rest in 4/6/8/16GB configurations in upper mid-range and enthusiast/high end.

|

|

|

|

The 950 and up are all available in 4gb.

|

|

|

|

Durinia posted: Out of curiosity, where'd you get those numbers for Hynix HBM2?

Ah, thanks. I had missed the Semicon slide in there. Interestingly, the HBM2 bump map is the same as HBM1, such that with a small amount of care, any HBM2-capable design should be able to also support HBM1 as an alternative. Probably not interesting as a product offering, but could be nice for testing/development.

|

|

|

|

Don Lapre posted: The 950 and up are all available in 4gb.

lol a 4gb 950

|

|

|

|


Ozz81 posted: Kinda wish AMD and Nvidia would just move to 4GB on their lower end cards already as the starting point, doesn't seem worthwhile to go with a 2GB card unless it's something for low end/low power usage. I'd say keep 2 low or mid tier cards max with 2GB, the rest in 4/6/8/16GB configurations in upper mid-range and enthusiast/high end.

I don't understand this. I've seen modern games running acceptably on a 1GB GTX 750, and 4GB seems to be fine for the current high end. The Fury is somewhere between a 980 and 980 Ti with only 4GB of VRAM available.

|

|

|