It looks like the reviews will come out on launch day at this point? It's annoying not knowing when the embargo ends.

|

| # ? Jun 8, 2024 19:09 |

|

hobbesmaster posted:Maybe it'll force reasonable motherboard prices? Evil Trout posted:It looks like the reviews will come out on launch day at this point? It's annoying not knowing when the embargo ends. Yeah these embargoes suck balls, but whatever, I never pre-order anything anyway. Some "leaked" tests suggest it's quite a bit faster and more efficient, but we'll see soon enough.

|

hobbesmaster posted:Maybe it'll force reasonable motherboard prices? There are $130 z690 boards, and z790's only changes are turning some downstream PCIe lanes from 3.0 to 4.0 and adding one 20Gb USB port. Intel has a big platform price advantage, but that platform likely dead-ends with this launch.

|

Cygni posted:There are $130 z690 boards, and z790's only changes are turning some downstream PCIe lanes from 3.0 to 4.0 and adding one 20Gb USB port. Intel has a big platform price advantage, but that platform likely dead-ends with this launch.

there's always launch gouging and then supply ramp-up (especially on the cheaper models, they like to launch flagships first), and this is a major socket change and a new memory standard. in hindsight there's no way this wasn't gonna be bad. LGA1700 was real expensive on introduction for a while too, and it was hard to find the DDR4 models people wanted. right now, even if you want in, it's an absolute no-brainer to sit and wait for the 7800X3D or even 7950X3D... if you can stomach the likely-astronomical prices. 7950X price plus $300 for 2 cache dies? And board prices will come down in the meantime. And if you wait for next gen I bet X3D is just the standard SKU, plus you get a second-gen memory controller (probably?).

It's still gonna be an expensive platform though. Intel really has a huge range of capabilities too: some boards have two 5.0x16 slots, down to boards with PCIe 4.0 and DDR4. AMD's B650E, X670, and X670E all fall into that "PCIe 5.0 with DDR5" category, so the proper comparison is this... and I can see the partners and AMD bringing that down to $200-250 for a decent not-super-cost-reduced board, which is basically where Intel is right now.

that said, the decision to pull the plug on AM4 development is baffling. You could do a DDR4 IO die (if the current one didn't work) pretty easily, it all talks Infinity Fabric. Going entirely DDR5-only for the near future is baffling, and seems like something they might reverse on. Newegg has PCIe 5.0 DDR4 boards for $115 and basic-bitch H610/B660M PCIe 4.0 DDR4 for $80-90. There's a lot of demand for budget stuff right now, and having a "crossover generation" is useful for targeting that budget price point. And for a lot of users (gaming) it doesn't make a ton of difference. Yeah, there are some circumstances where it matters, but maybe not enough to go from a cheap 16GB/32GB DDR4 build to a DDR5 motherboard and new expensive RAM. DDR5 is still more expensive than DDR4, and a lot of people can pull forward a 3200C16 or 3000C15 kit that at least isn't gonna be complete trash, and even if you buy some new stuff it's super cheap compared to DDR5 (still).

AMD doing the "buy a Zen4, get 32GB of DDR5 free" is a smart play there to neutralize the "reuse your RAM" approach, plus subsidize some (platform-)early-adopters with a component they almost 100% certainly don't have. It's a lot harder for retailers to play price games with component bundles, although it may not be exactly what you wanted. And Sapphire Rapids got pushed back again, which leads to (comparatively) cheap memory modules for client, so it's not as expensive a promo as it might otherwise be.

But it's also a sign of sales and market pressure: rumors came out recently that AMD is cutting Zen4 production, and it's a tough sell for a recession-minded public to buy a $300 motherboard and new memory and a $300 6-core. Oh, and if you want X3D that's gonna be another $150 a chiplet probably. That's to compete with Intel, who just rolled it in as a generational increase and is rumored to no longer be increasing most prices (except 1 SKU iirc). Like, Zen4-X3D is gonna be good, I'm just expecting the prices to be lol, let alone Threadripper. But I guess they'll come down to wherever they need to be, eventually.

And apparently (if AMD really is cutting Zen4 production) server's not gonna just absorb it all either. I think at this point it's starting to bleed into enterprise purchase patterns too: not hyperscalers, but a lot of mid/large businesses are pulling back. (but if wafer sales are fixed already, do cuts in Zen4 production mean increases in RDNA3? maybe that bodes well for AMD going for volume in the GPU market while NVIDIA fucks around with their inventory and price-fixing scheme. Or maybe they can "delay" some wafers like TSMC counteroffered to NVIDIA?)

If they were going to put Zen4 on AM4 they'd still need Zen4 dies. So they are going to keep doing Zen3 on AM4, I guess? Baffling, way to misread the room: the people who aren't flagship warriors want cheap, the PC market has been hosed for 30 months now, and everyone's tired of price increases at this specific moment. I really really figured they'd loosen up and do maybe one more gen on AM4.

Paul MaudDib fucked around with this message at 03:14 on Oct 19, 2022

|

they did announce they're going to keep doing low-end AM4 stuff but there's no details yet. some rumours a while ago of more X3D Zen 3 parts too but nothing's come from that yet. also rumours of Zen 4 chips for AM4 being a possibility but nothing substantial yet either

|

https://videocardz.com/newz/intel-claims-core-i9-13900k-will-be-11-faster-on-average-than-amd-ryzen-9-7950x-in-gaming we're getting all this pretty soon anyway but here's intel's own 13900k/7950x comparison

|

Was hoping reviews would drop today but I guess I'll impatiently wait until tomorrow.

|

any thoughts on a 13600 for a pure gaming rig? i wouldn't go for a 6C CPU these days but noticed that the 13600 has 6P+8E (20T) and i don't know how that works out for gaming
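
For what it's worth, the 20T number is just arithmetic, assuming (as on Alder Lake and Raptor Lake) that each P-core runs two threads via Hyper-Threading and each E-core runs one. A quick sketch using the core counts quoted in this thread; `thread_count` is just an illustrative helper:

```python
def thread_count(p_cores: int, e_cores: int) -> int:
    """Hardware threads on a hybrid Intel CPU.

    Assumes 2 threads per P-core (Hyper-Threading) and 1 per E-core (no SMT).
    """
    return p_cores * 2 + e_cores


# Core configurations as quoted in this thread.
for name, p, e in [("13600", 6, 8), ("12700", 8, 4)]:
    print(f"{name}: {p}P+{e}E -> {thread_count(p, e)}T")
```

Both come out to 20 threads, so the thread count alone doesn't separate them; the difference is how many of those threads sit on P-cores.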

|

The 12700's 8P+4E is probably better for gaming but the reviews will be out soon enough.

|

shrike82 posted:any thoughts on a 13600 for a pure gaming rig? i wouldn't go for a 6C CPU these days but noticed that the 13600 has 6P+8E (20T) and i don't know how that works out for gaming

as always, wait for reviews, but... you don't really need more than six cores for gaming, though I'm also not going to argue if you really want to go beyond 6C for whatever reason

the thing about low-end Raptor Lake is that everything below the 13600K is re-badged Alder Lake, including the 13600 non-K

this is still technically an incremental* improvement: the 12400 goes from having no E-cores to the 13400 having four E-cores (because the 13400 is a re-badged 12600K), plus whatever extra clocks they can squeeze out, but if you actually want the architectural improvements of Raptor Lake (if any), you'll want the 13600K specifically

____

* the loser here is the i3-13100 still being a 4P0E CPU, with just 100 MHz more clocks over the i3-12100, though this is not unprecedented, given that this is also what they did from 10th gen's i3-10100 going to the i3-10105 that came out contemporaneously with Rocket Lake

|

time to dust off the fermi housefire memes https://www.youtube.com/watch?v=yWw6q6fRnnI

|

https://www.youtube.com/watch?v=P40gp_DJk5E 493W in Blender

|

That really is a lot of watts! So we're at a 450W GPU and a 330W CPU; we're past the point where even the best 850W PSUs can power a high-end single-GPU system.
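
Rough budget math with the peak figures from the posts above (450W GPU, 330W CPU), before counting the motherboard, drives, fans, or transient spikes. A back-of-the-envelope sketch; `psu_load` is a hypothetical helper, not any real tool:

```python
def psu_load(psu_watts: int, loads: dict[str, int]) -> tuple[int, float]:
    """Return (watts left over, fraction of the PSU rating used) for the given loads."""
    total = sum(loads.values())
    return psu_watts - total, total / psu_watts


headroom, fraction = psu_load(850, {"GPU": 450, "CPU": 330})
print(f"{headroom} W left, {fraction:.0%} of rating used")
```

That's 780W for just the two chips, leaving 70W for everything else in the box.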

|

absolutely ridiculous. a lot of people are going to learn about the joys of AIOs over air cooling soon (to be clear, intel still throttles on the Arctic Liquid Freezer II 420, but imagine this poo poo on air lol)
kliras fucked around with this message at 14:56 on Oct 20, 2022

|

Hooly gently caress, they made the power worse than Zen4?! I guess being on top of the benchmark charts, even if the wins are often by degrees too small to matter, really brings the sales in. edit: wow, even with top-end AIOs it's thermal throttling quite a bit. Usually down to the mid-4GHz range or less, even in games.
PC LOAD LETTER fucked around with this message at 14:56 on Oct 20, 2022

|

intel's power efficiency has long been much worse than amd's & this time they're wildly blowing it out even further to stay competitive on performance, which is pretty silly. they've claimed the 13900K at 80W performs about the same as the 12900K though, so at least there's possibly that

|

Intel cranking the wattage for performance is stupid, but at least in games, by default, the usage isn't too bad:

|

So it's the same "oh no at unlimited power it pulls as much as it can, the horror!". It makes sense to test it as-is of course but did anyone test setting actually reasonable power levels?

|

that's still +50% over the 12900K & 7950X, & if you're buying it you're probably buying it for production workloads that will seriously stress it and more often draw much more power than gaming. i haven't seen anyone test power limits yet, but intel is claiming it's still decent under relatively low limits

|

https://www.youtube.com/watch?v=H4Bm0Wr6OEQ der8auer's video makes it look a lot more reasonable. e.

Jeff Fatwood fucked around with this message at 16:47 on Oct 20, 2022

|

^^^ Ok seems like there's at least one guy who tested it, watching it now: https://www.youtube.com/watch?v=H4Bm0Wr6OEQ

|

der8auer did some power-limited testing: https://www.youtube.com/watch?v=H4Bm0Wr6OEQ he capped the 13900K to 90W in BIOS, to match Ryzen's Eco mode, and found that it performed about the same as the 12900K... while the 12900K was pulling 110W more, if I recall correctly. this was almost exactly what Intel was marketing: that Raptor Lake was actually an efficiency gain because you could get it to match Alder Lake while pulling less power. but again, the problem is capitalism, where this isn't going to be turned on by default and you'll have to configure it yourself
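
Taking those remembered figures at face value (about equal performance, 13900K capped at 90W, 12900K pulling roughly 110W more, so ~200W), the perf-per-watt gap works out as below. A back-of-the-envelope sketch, not a measurement; `perf_per_watt_ratio` is a hypothetical helper:

```python
def perf_per_watt_ratio(watts_a: float, watts_b: float,
                        perf_a: float = 1.0, perf_b: float = 1.0) -> float:
    """How many times more performance per watt chip A delivers than chip B."""
    return (perf_a / watts_a) / (perf_b / watts_b)


# Equal performance assumed; 13900K at 90 W vs. 12900K at roughly 90 + 110 W.
ratio = perf_per_watt_ratio(90, 90 + 110)
print(f"13900K @ 90W: ~{ratio:.2f}x the perf/W of the 12900K")
```

With equal performance the ratio is just the inverse of the power ratio, 200/90, or a bit over 2.2x.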

|

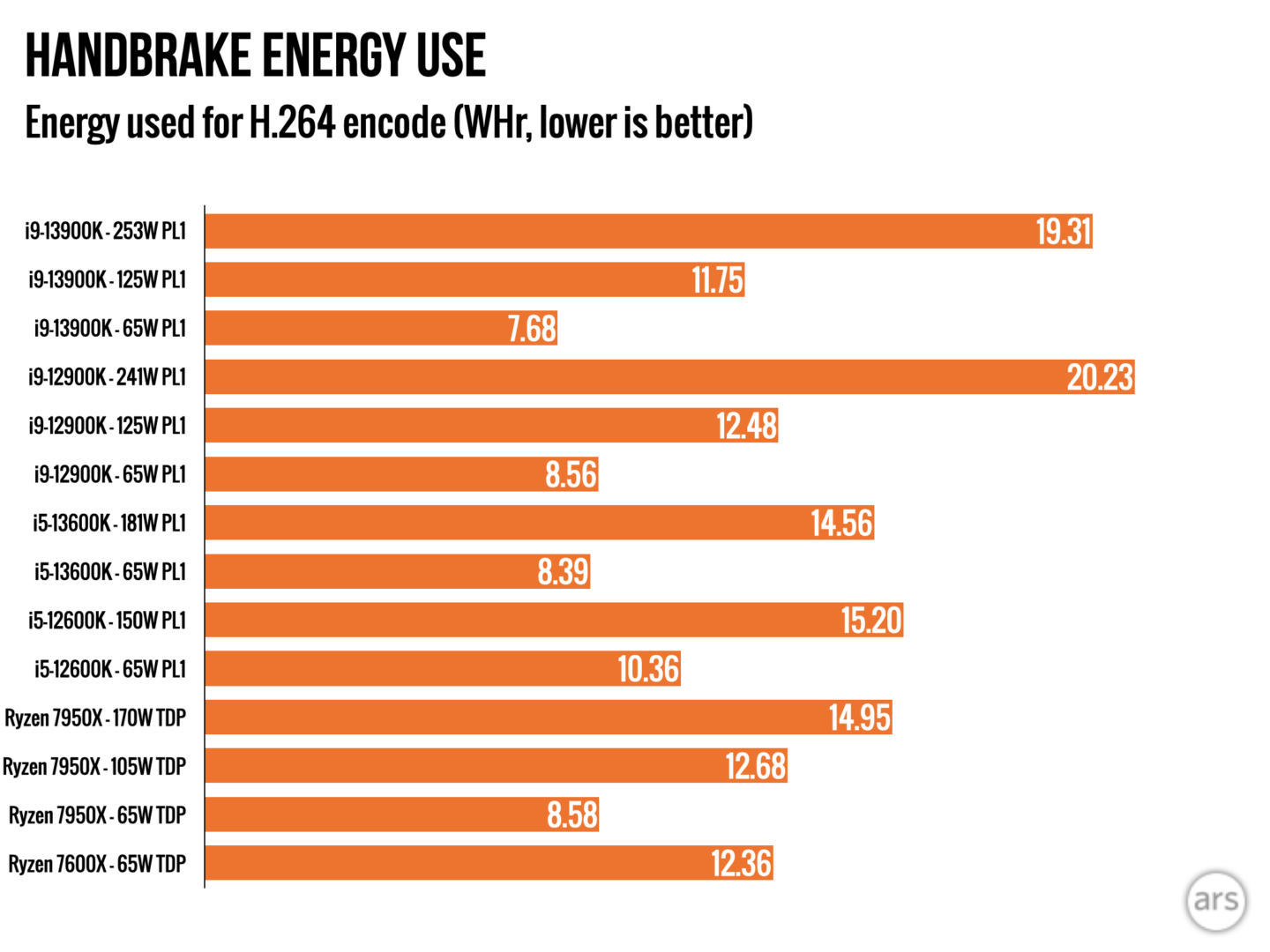

I think this is a good graph

|

lih posted:that's still +50% over the 12900K & 7950X Intel claimed better than decent: their announcement said that a 13900K at 65W was faster than a 12900K at 241W. I want to see those numbers. We'll also get a 65W review when the $100-cheaper 13900 non-K drops. Well, if anybody bothers to review it. Almost nobody reviewed the 12900.
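
Back-of-the-envelope, taking Intel's claim at face value: with P as performance, the claim is that the 13900K at 65W scores at least as high as the 12900K at 241W, so the performance-per-watt gain has a floor you can read straight off the two wattages:

$$
\frac{P_{\text{13900K}} / 65\,\mathrm{W}}{P_{\text{12900K}} / 241\,\mathrm{W}}
= \frac{P_{\text{13900K}}}{P_{\text{12900K}}} \cdot \frac{241}{65}
\ge \frac{241}{65} \approx 3.7
$$

i.e. at least ~3.7x the performance per watt at those two power limits, if the claim holds.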

|

wargames posted:I think this is a good graph This is exactly what I was looking for, thanks Hardware Unboxed for actually building a power/performance curve. Here's AMD's node advantage. AMD is going to have a huge win in server platforms with this!

|

wargames posted:I think this is a good graph

|

wargames posted:I think this is a good graph Look at what they do to match a fraction of our power

|

That doesn't seem to match up with der8auer's testing like at all

|

I happened to need to order a gaming system today, so I got a 13th-gen 13700K w/ DDR5-5600. Let's see how big of a debacle this ends up being for me. I never buy on day 1.

|

mobby_6kl posted:That doesn't seem to match up with der8auer's testing like at all Yeah the only difference is R20 vs R23. Does R23 really favor AMD that much more?

|

wargames posted:I think this is a good graph I mean, the explicit purpose of the 13900K and its power tuning is to win at gaming benchmarks after all, not really PPW (performance per watt) in Cinebench. The Cinebench test is interesting from an architectural and future Zen 4 server perspective though. The gaming performance is pretty mental: It looks like only smaller sites are testing 13700Ks, but ComputerBase did test one. It and the 13600K are lookin real good vs the identically priced 7700X and 7600X: the 13600K getting the big cache bump is def paying dividends, and the 8 E-cores will probably make it age better than the 12600K. Really seems like the best chip from either company this generation.
Cygni fucked around with this message at 16:03 on Oct 20, 2022

|

Those are using different RAM, but the 13600/700 are still going to be pretty good cost/benefit. I'm leaning towards the latter for moar productivity coars though.
E: gently caress, the 13700 is $200 more for two extra P cores [and a shitload of cache]. Uhhh. I'll wait until someone makes those charts for me.
E3: This has to be a mistake. Ars listed the 13700 as $490 / $384 (F), there's no way it's $100 between these versions.
E2: Ars Technica has some reasonable PL (power limit) tests too, though not super comprehensive: https://arstechnica.com/gadgets/2022/10/intel-i9-13900k-and-i5-13600k-review-beating-amd-at-its-own-game/
mobby_6kl fucked around with this message at 17:43 on Oct 20, 2022

|

lol what the hell are ComputerBase doing with the inconsistent RAM

|

mobby_6kl posted:Those are using different RAM drat, didn't notice that, and there really aren't any other 13700K reviews out there worth a drat aaaaa

|

How does the 13600k compare to the 12th-gen CPUs on value once the older stuff gets discounted? I'm looking to upgrade from an 8700k and the bar charts are telling me the 13600 would be the best bang for the buck.

|

well it's really going to depend on what sort of discount there is on 12th gen as to where the value is. the 13600k seems to be pretty clearly the best value current gen option. the ryzen 5600, which is last gen, is the best value at a lower price tier than that right now.

|

https://twitter.com/Buildzoid1/status/1583130564889935878 Board vendors up to the old tricks it seems.

|

how easy is it to rein in the power usage on newer intel platforms? can you just set a power limit in watts in the BIOS?

|

repiv posted:how easy is it to rein in the power usage on newer intel platforms? can you just set a power limit in watts in the BIOS? yeah
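
The BIOS is the usual place. On Linux there's also a software route via the kernel's powercap (intel-rapl) sysfs interface; a minimal sketch, assuming the CPU package shows up as zone `intel-rapl:0` (numbering can differ per system) and that you're running as root. `set_package_power_limit` is a hypothetical helper, not part of any library:

```python
from pathlib import Path

# Assumed zone: intel-rapl:0 is usually CPU package 0, but numbering can vary per system.
RAPL_ZONE = Path("/sys/class/powercap/intel-rapl:0")


def set_package_power_limit(watts: float, constraint: int = 0,
                            zone: Path = RAPL_ZONE) -> Path:
    """Write a package power limit through the Linux powercap (intel-rapl) interface.

    constraint 0 is normally the long-term limit (PL1) and 1 the short-term limit (PL2);
    check the zone's constraint_<N>_name file to confirm. The kernel expects microwatts,
    writing needs root, and the value does not persist across reboots.
    """
    target = zone / f"constraint_{constraint}_power_limit_uw"
    target.write_text(str(int(watts * 1_000_000)))
    return target
```

Usage would be something like `set_package_power_limit(90)` as root to cap the long-term limit at about 90W; a BIOS setting is still the way to make it stick.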

|

Inept posted:Yeah the only difference is R20 vs R23. Does R23 really favor AMD that much more? I saw the Hardware Unboxed review before the GN review, and GN points out that motherboard vcore draw can differ by 40W for no perf gain. It's possible HUB's testing setup was just extra hot, but I feel like his data is still in line with the other reviews. The 13900K uses lots and lots of power, idk if anyone else has seen it thermal throttle as hard though.
