SYSV Fanfic posted:My parents have a core 2 duo with a gt 710 added to it ($25 on sale) for video decode/browser acceleration. If there is a free as in beer benchmark for general use I can run on it, let me know. Most of the people I know that own PCs do not game, and are quite happy with their celeron/pentium branded bay trails/braswell CPUs that have a weaker per core performance than a core 2 duo.

You put a graphics card from this year in, of course that's going to help things, and even though it's based on an "old" core design it's still one 4 years newer than the CPU and system. That's hardly a standard setup for a Core 2 Duo, which is more likely by far to have either ancient, terrible, Intel integrated graphics or low end cards from that time. You're just reinforcing how unacceptable a Core 2 Duo system is - and I'm not sure why you think gaming is relevant, the modern internet and browsers are quite intensive now that everything's gone to poorly optimized HTML5 that demands acceleration support. And no, those modern systems are nowhere near as slow as a Core 2 Duo based system.

| # ¿ May 13, 2024 23:45 |

Anime Schoolgirl posted:a n3050 is only very slightly behind a core 2 duo e6600, which is really impressive once you realize that the n3050 uses less than a tenth of the power

It's even more impressive when you notice that an e6600's stock clocks are 2.4 GHz while the N3050's stock frequency is 1.6 GHz and only clocks up to 2.16 GHz with turbo.
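To make the clock-speed point concrete, here's a minimal Python sketch of the per-clock comparison. The clock speeds are the ones quoted above; the 0.95 score ratio is purely an assumption standing in for "very slightly behind", not a measured result, and the function and constant names are made up for illustration.

```python
# Clock speeds from the post, in GHz.
E6600_CLOCK = 2.4
N3050_BASE_CLOCK = 1.6
N3050_TURBO_CLOCK = 2.16

# ASSUMPTION: "very slightly behind" is taken to mean the N3050 reaches
# 95% of the E6600's benchmark score. Illustrative only, not measured.
ASSUMED_SCORE_RATIO = 0.95


def per_clock_ratio(score_ratio, clock, reference_clock):
    """Work done per GHz relative to the reference chip.

    score_ratio is the chip's score divided by the reference chip's score.
    """
    return score_ratio * reference_clock / clock


if __name__ == "__main__":
    at_base = per_clock_ratio(ASSUMED_SCORE_RATIO, N3050_BASE_CLOCK, E6600_CLOCK)
    at_turbo = per_clock_ratio(ASSUMED_SCORE_RATIO, N3050_TURBO_CLOCK, E6600_CLOCK)
    print(f"per-clock vs E6600 if running at base clock:  {at_base:.2f}x")
    print(f"per-clock vs E6600 if running at turbo clock: {at_turbo:.2f}x")
```

Under that assumed ratio the N3050 comes out ahead per clock whether it sits at base or at turbo, somewhere between about 1.05x and 1.4x. Plug in a real benchmark ratio to get a real answer.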

PBCrunch posted:

No it is not, it is basically the minimum that won't grind to a halt on modern websites people use, but it's not good enough. Modern low end Intel systems absolutely perform better all around for normal people usage.

SYSV Fanfic posted:I could put a 610 (which is a 520 - 2011) and get the same hardware acceleration for rendering and decode that the c2d lacks built in. If it wasn't clear, my point was that as a CPU the core 2 duo was still good enough for 80% of users.

And your point is wrong. Because it isn't good enough.

SYSV Fanfic posted:

That guy started with a quad core, not a dual core. In case you were asleep for 10 years, all Core 2 Duos are dual core. Core 2 Duo systems simply aren't fast enough for normal users these days, unless you pile in a bunch of other equipment they don't have already.

Captain Hair posted:

Yeah this is the reason I'm so down on the Core 2 duo for the normal user these days. Technical people have the expectation that "normal users" just run one thing at a time, or maybe a word processor and a browser tab simultaneously. But what you actually see happening is they'll open 20 complex tabs like facebook or whatever and have the word processor open and so on. Then they complain everything's so slow, but they're also not going to change their usage pattern.

redeyes posted:This is only because the machine is running out of RAM. 8GB usually fixes it.

If the machine even accepts 8 GB. And if someone's willing to toss the money into upgrading in the first place. And only if that particular case is the system running out of RAM, when it's just as often all those little things demanding too much CPU time when you add it all up. And what you've got in the end is something that's at the margin of acceptability right now, and will soon be outside that as things continue getting more resource intensive in ways it's not easy to expand it for.

SwissArmyDruid posted:I'm not sure they even need to spy. Intel puts all their ideas there on the table, you could do a lot worse than copying the number of ALUs and AGUs, or cache sizes, or whatever. I thought the info out now is just that it can kinda hit Haswell under very tightly designed benchmarks, and probably doesn't manage that performance in more typical workloads?

mediaphage posted:I like this idea, but I'm going to be pretty cross if the dock doesn't actually make it look nice on the TV (that is, true alternate GPU rendering rather than some upscaling nonsense). I'm honestly not sure if this console is a replacement for the Wii U or the 3DS, or maybe both. I am a little skeptical of their ability to retain AAA 3rd party titles if they once again choose to poo poo the graphics bed, but whatever. Well they already don't have much in the way of third party AAA titles on the Wii U to begin with, so they're not really losing out. Most Wii U third party stuff is ports from last-gen console versions, when the Wii U is supported at all, due to Wii U basically being an Xbox 360 with 2 GB of RAM and a slightly faster GPU.

Potato Salad posted:Nintendo's next console going to the NX really doesn't help AMD, does it? Global Foundries is making their Tegras.

The new Doom only runs on x86-64 systems. There are no ARM builds so it can't run natively on the Shield or any Tegra device. You were either watching people streaming it from a nice computer, or maybe a heavily stripped down tech demo.

Like you grossly overestimate the chipset Nvidia is offering here. It's just a particularly good tablet/smartphone SOC, maybe, which still puts it far behind the performance of the Xbox One or PS4, let alone the upgraded Xbox One and PS4 models that will be coming out next year. The current Nvidia Shield K1 tablet, for instance, has a quad core 2.2 GHz 32 bit ARMv7-A CPU with a 192 core Kepler based GPU setup, with 2 GB of RAM for the system. The Xbox One's AMD APU is eight 1.6 GHz x86-64 cores, with 768 GPU cores that don't have to be downclocked so as to prevent overheating in a non-fan-equipped mobile device, and there's 8 GB of total RAM. PS4 is similar.

What Nintendo's on track to do is, for the third console in a row, end up as the slowest/least powerful system out there. The Wii was of course noticeably faster than the previous generation of consoles but was still quite a bit behind the 360 and PS3: it could only render in standard def, had only 91 MB of RAM total (PS3 and 360 both have 512 MB), and was only single core PowerPC when the 360 was triple core/6 thread PowerPC and the PS3 was a weird setup with 1 main PowerPC core and 7 different cores used for software as well.

The Wii U is basically just an Xbox 360's design with more RAM - 2 GB specifically. The CPU is similarly triple core/six thread PPC, although at ~1.25 GHz instead of the 360's 3.2 GHz it has actual performance on most things about the same because of improvements in instruction set and the like. The GPU is only slightly more powerful than the 360's as well. So basically it launched as a 7 year old design when it came out in 2012.

Now if Nintendo's next regular console really will be the NX, and the NX really will be any sort of current or near future Nvidia Tegra chipset - it's not even going to be as fast as the 2013 Xbox One and PS4. It'll be a decent bit faster than the Wii U but that's a very low bar. Depending on which particular Tegra setup they use, since it'll supposedly end up handheld too, it might even be only the performance of the Wii U! It's a very bad sign. Their only real option for coming out ahead of the current Xbox One and PS4, let alone the upgraded models coming next year, would be securing a high core count Intel or AMD setup.

Anime Schoolgirl posted:That 1080/60fps demo was on Doom 3, actually

If that's what he saw, then yeah that's just showing the hardware can handle a 12 year old game which ran in 480p on the original Xbox. Not exactly impressive!

fishmech fucked around with this message at 17:54 on Aug 24, 2016

EdEddnEddy posted:The current Tegra X1 in the Shield TV is a 8 core (4 A57 + 4 A53 cores) and a 256 core Maxwell GPU that is actively cooled and while it still isn't quite to XBox One or PS4 level, it is drat close. It runs War Thunder's flying parts almost better than the PS4 does. And can handle video like a champ up to 4K/60FPS H265. It's nowhere close to the AMD GPU performance in the Xbox One or PS4, and being able to handle a video codec doesn't tell you much about games performance, it just tells you they have hardware codec support. Also, the 8 cores don't get used simultaneously, they switch between the sets of 4 cores based on system load - great at keeping battery draw or heating load down when you're doing non-intensive things, but you can't use them simultaneously to increase performance. Plus the NX is supposed to be able to be used portably if the same rumors listing it as a Tegra chipset are true, which places serious constraints on what sort of graphics performance the games can expect, unless you've got the most amazing cooling tech for a handheld system in the world and a really good battery on it. You consider all this stuff combined and getting performance out of the thing that's as good as the 3 year old XBO/PS4 is a distant hope, let alone anything better, and once again improved CPU/GPU XBO/PS4 are due out next year. Both of those are expected to be able to have real time gameplay at at least ~3K horizontal resolution if not full 4K horizontal resolution, and of course better performance at 1080p regardless.

Paul MaudDib posted:I also find it impressive that Nintendo is re-releasing N64/Gamecube/Wii games onto the 3DS. What were once console games are now handheld, and also running faster in some cases. Uh, what? They ported some N64 games sure (they also ported some N64 games to the original DS), but they sure don't have straight up N64 games to buy on the 3DS or New 3DS, and they definitely don't have GameCube or Wii games on there either, again outside complete recodes/ports. I've really got no idea where you got this notion from. Did you mishear a rumor about what the NX might be able to do? Like honestly I can't even find popular rumors about the 3DS/new 3DS running GameCube and Wii games which aren't just wild speculation from before it released. There simply isn't enough power in the thing to handle it. fishmech fucked around with this message at 23:34 on Aug 24, 2016 |

Paul MaudDib posted:"Recodes and ports" are exactly what I'm referring to.

So nothing to do with actually putting games from other systems on there. Again: the regular old DS was full of N64 ports too. And half the Wii games they list are extremely multi-platform games that were also released on PSP or Vita, 360, PS3, PC. It's like being impressed that they poo poo out the yearly Madden games to every system. And there's absolutely 0 emulation, low level or high level, involved with these ports, despite what you originally claimed in your post - doing that would require quite a bit more processing power than the platform provides! The Nintendo 3DS is a very weak system, and the New 3DS really doesn't improve matters much.

Edit: Another aspect is that the DS and 3DS are very low resolution systems: the DS is 256x192 on both screens, the 3DS is 400x240 (optionally 800x240 when the 3D mode is on, but still rendering the same aspect ratio) on the top screen and 320x240 on the bottom screen. And most of the time the bulk of 3D rendering is on only one screen, due to the hardware limitations, the other screen then being dedicated to very low intensity 2D stuff. Most of the games ported from the regular consoles to it thus only need to run at a reduced resolution compared to the original console, and even when it's the same resolution (many N64 games rendered at 320x240) you can get away with reduced detail in many aspects because they aren't noticeable on the small screens regardless.

fishmech fucked around with this message at 03:05 on Aug 25, 2016
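To put rough numbers on the resolution point, here's a minimal Python sketch that counts pixels per frame for the screen sizes mentioned above. The dictionary and function names are made up for illustration; only the resolutions themselves come from the post.

```python
# Screen resolutions quoted in the post, as (width, height).
SCREENS = {
    "DS (each screen)": (256, 192),
    "3DS top": (400, 240),
    "3DS top, 3D mode": (800, 240),
    "3DS bottom / many N64 games": (320, 240),
}


def pixel_count(width, height):
    """Pixels rendered per frame at a given resolution."""
    return width * height


def relative_to(name, baseline="3DS bottom / many N64 games"):
    """Pixel count of `name` as a fraction of the baseline resolution."""
    return pixel_count(*SCREENS[name]) / pixel_count(*SCREENS[baseline])


if __name__ == "__main__":
    for name, (w, h) in SCREENS.items():
        print(f"{name}: {w}x{h} = {pixel_count(w, h)} pixels "
              f"({relative_to(name):.2f}x of 320x240)")
```

Pixel count is only a crude proxy for rendering load, but it shows the scale: a DS screen is about two thirds of the 320x240 that many N64 games rendered at, and the 3DS top screen in 2D mode is only a quarter more.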

The main thing that the upgraded PS4/Xbox One are targeting is the ability to reliably render games at better than 1080p resolution, because 4k is going to be the new high end for a while. It'll probably be quite some time before the upgraded models get replaced.

Arsten posted:I disagree simply because they make a lot of money on their consoles. By the end of the 5 years, people aren't buying them anymore because everyone had a PS3 when the PS4 was released. Short of intentionally making lovely consoles, which didn't work out so great for Sony, they'll keep an upgrade cycle of some sort rolling along. No, they usually don't make much money on the consoles themselves. The lion's share of the money comes from the royalties on software sales. Making money directly on the console sales takes multiple years, since it happens once they've had time to reduce costs of production. When you're making upgraded systems, you lose that ability for a while.

Haquer posted:The PS4 was profitable from launch, however the XBox One All In One Entertainment System from Microsoft had several severe price slashes right off the front with them trying to loss-lead their way to victory like in the 360 days and it fell flat since it's a piece of poo poo The Xbox One is doing plenty fine, also the PS4 is the first console Sony's released in a long time that had a margin of profit at launch - and even then it was a very small one. If you want to talk about a console that's actually tanking, try the Wii U which still hasn't sold as much as the XBO despite a year extra on sale and always being the cheapest.

SwissArmyDruid posted:Other people have pretty much covered everything, but of particular note:

The slim version of the Xbox One came out over the summer, and it's pretty much just a die-shrink (which they took advantage of to overclock it a little, which allows it to handle UHD Blu-Ray playback but has minimal impact on gaming performance). The upgraded consoles that are coming out next year, though, are quite a bit beyond that. They'll have actual improved everything, though they'll also take advantage of die-shrink/process-shrink stuff. It's also worth remembering that even though the Wii U is a full 4 years old, coming out in 2012, its hardware is basically the 11 year old Xbox 360 hardware in terms of performance, albeit helped by having 2 GB of RAM to work with instead of 512 MB.

Anime Schoolgirl posted:not even that, it's 2gb, of which 1gb is used by the OS

It's a lot more complicated than that (games can access up to 1.5 GB of the RAM in certain situations, and a lot of that "OS RAM" is actually used to make sure the tablet display can be handled at the same time as the main TV display, so the true amount of RAM reserved to the OS is lower than it first appears), but there was OS-reserved memory in the 360 as well. Specifically, the 360 always has at least 32 of its 512 MB of RAM reserved for OS use, to support the common "guide" UI that lets you switch games, return to dashboard, view achievements, play external music, etc. Depending on some of those features it can then take additional RAM and the game has to deal with it. The PS3 reserved a similar amount, and in certain conditions would use up to 96 MB of its own 512 MB of RAM for the OS while a game was in progress, though usually it hung around 32 MB like the 360.
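As a rough illustration of those reservation figures, here's a small Python sketch that turns them into percentages. The function names are hypothetical; the 32 MB, 96 MB and 512 MB values are the only inputs, and they come straight from the post.

```python
TOTAL_RAM_MB = 512  # total RAM in both the 360 and the PS3, per the post


def os_share_percent(reserved_mb, total_mb=TOTAL_RAM_MB):
    """Percentage of total RAM reserved for the OS."""
    return 100 * reserved_mb / total_mb


def game_ram_mb(reserved_mb, total_mb=TOTAL_RAM_MB):
    """RAM left over for the running game, in MB."""
    return total_mb - reserved_mb


if __name__ == "__main__":
    cases = [("typical (32 MB)", 32), ("PS3 worst case (96 MB)", 96)]
    for label, reserved in cases:
        print(f"{label}: {os_share_percent(reserved):.2f}% reserved, "
              f"{game_ram_mb(reserved)} MB left for the game")
```

So the usual 32 MB reservation is 6.25% of the total, and the PS3's 96 MB worst case is 18.75%, leaving 480 MB and 416 MB for the game respectively.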

Twerk from Home posted:Admittedly, the PS4 Pro is sure cheaper than building an equivalent small form factor Windows gaming PC, and SteamOS isn't quite there yet. SteamOS is never going to be "there" because it's a backburner linux distro that pretty much no one tests against.

Boiled Water posted:This is really interesting. I wonder if we'll see a return to console manufacturers subsidizing their own consoles again.

What made you think they stopped? Both of the current gen consoles and the Wii U launched at prices that required taking a loss at launch, even though all of them are now profitable. In the case of the Wii U, I believe it was their first regular console that sold at a loss at launch. The PS4 Pro might be lucking into launching at a profit, but it's also a weird half-step system like the Xbox One's half-step console due next year will be. And that means a lot of the normal costs of a "new" system are already paid in the existing base system.

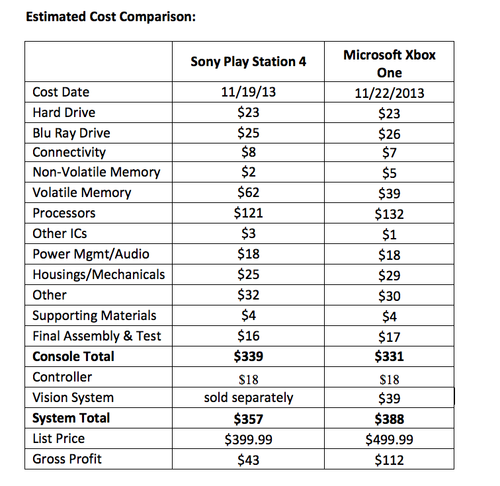

SwissArmyDruid posted:No, both consoles were made at a profit this time around. Both companies saw what that did for Nintendo and the Wii. However, The XBone's profit margin was much larger compared to the PS4's, and so Microsoft's early price cuts forced Sony's hand.

Just seeing raw bill-of-materials costs doesn't really tell the whole story. Also what do you mean "what it did for the Wii"? What it did for the Wii, in actuality, was mean that the system was already dead within 3-4 years of launch and there were very low software sales per unit sold (i.e. very small ongoing profits even though there were respectable initial profits on units sold). That's not what they're going for. And the reputation of the Wii as a dead gimmick system didn't do anything to help the Wii U, which is on track to be Nintendo's worst selling home console.

Edit: also your wording isn't clear, but I just want to remind you that while the already released Xbox One S is a plain "slim" revision, the PS4 Pro and the upcoming matching Xbox One release are full on improved hardware for the games etc to use. The Xbox One S has a minor overclock to handle 4K video decode, but it's still the same everything else.

Edit 2: And let me be clearer about what I meant with the Wii stuff: So it comes out in 2006, and Nintendo decides to aim for a lower price, as we remember. One of their biggest means for doing that is deciding to go standard definition only, and to correspondingly use weaker hardware than the other, HD-capable consoles. And sure that makes sense for 2006, when HD sets are still rare. The problem is that's a very temporary situation, because by 2009 the majority of United States households own at least one HDTV. With the weak system specs turning off a lot of game developers besides those making shovelware, this means very few games are sold past about 2009 or 2010 or so, outside of a very few big Nintendo titles.

And Nintendo is also hurt by the fact they haven't had multiple generations of experience making HD games for their next console (remember: the original Xbox and the PS2 both supported 720p and 1080i output and had some games that did each, as well as the 360 and PS3 doing full 1080p output on some games). This is why the Wii U is so hosed, on top of it being 2005 hardware (because it's essentially a 360 with more RAM) at its release in 2012.

fishmech fucked around with this message at 20:53 on Sep 14, 2016

SwissArmyDruid posted:What it did for the Wii was this: BOM for a Wii was $160. Retail for a Wii was $250. Even as they were putting out new versions with bundles, they were still maintaining roughly that $250 dollar mark. Therefore, regardless of the Wii's relative success in terms of market share, every Wii sold was a net profit for Nintendo throughout its entire lifetime. Any games they sold were also, obviously, at a profit. Nintendo has all that money because at the same time their mobile devices were doing very well - first the DS and then after a rough first year the 3DS. The Wii U is an utter failure in sales, and their first couple months it wasn't even profitable for the console sale - because the odd hardware choices ran up against their pricepoint (iirc the tablet controller screen was one of their big sourcing issues). The Wii also did really poorly for them once it started flatlining - they would have greatly preferred a PS2 situation where it continues to sell well into the next generation. Plus they shouldn't need to afford a big failure, nobody wants to be in the situation of being hosed on one of their main product lines for a good 5 years straight!

FuturePastNow posted:Doesn't the built-in USB3 being janky just mean motherboard makers will have to put another chip on the board? It's something nerds care about but no consumer will ever know the difference. It means a lot of cheap motherboard/laptop makers are going to save money by not putting another chip on board, and the consumer's going to end up having a computer with randomly broken USB.

JediTalentAgent posted:I've got a spare Win 8.1 license that I bought that I read won't work with Zen, anyway The actual thing there is that Windows 8.1 may not support all new features of the new chips, similarly to how it also wouldn't support all the new features of Intel chips. Windows 8.1 will still run just as well as it did on current chips. This sort of thing has always happened.

SwissArmyDruid posted:TL;DR: Microsoft really REALLY loving want people off of 7 so they can harvest your delicious, delicious user metadata so they can use it to make even more money lifetime than someone shelling out for a license one time. Uh, no? All that stuff is in Windows 7 anyway and has been for over a year now. And very very few people actually purchase Windows licenses intentionally, instead of just getting it with a new computer. The last time regular Joe Public buying a Windows license on his own was a major cash driver was like the Windows 95 launch - and pretty much just that. Sure there's still millions of people buying their own licenses, but there's billions of people using Windows computers, so it ends up being a drop in the bucket.

pixaal posted:I don't think this can legally happen due to monopoly laws, but what would this do to AMDs stock price? That's not how monopoly laws work. There are a lot of legal monopolies and near-monopolies. Anyway Intel doesn't really have much incentive to take over all of AMD no matter what - they'd probably prefer just to take the things they really want and not the dead weight.

I think dude meant to say a PC with a TV tuner built in. Anyway yeah it's been illegal for nearly a decade to sell a TV without a digital TV tuner in the US or Canada, in regular stores for consumers. If a manufacturer doesn't want to include one, it has to be sold as a monitor.

turn left hillary!! noo posted:That's weird, at least some Vizio models I've seen come without tuners, I wonder how they get around that.

You sure they weren't just monitors, or maybe some really old TVs that were sitting around unsold for a while? Federal code placed the cutoff for manufacturing new TVs for sale in the US at March 1, 2007 for all sizes (larger sets had to do it in 2005 or 2006 depending on screen size): https://www.gpo.gov/fdsys/pkg/CFR-2006-title47-vol1/pdf/CFR-2006-title47-vol1-sec15-117.pdf

Canada's rules technically still allow new TVs with nothing but an analog tuner to be sold, but strongly encourage digital tuning to be present, and besides manufacturers don't tend to want to manufacture a separate model just for Canada that lacks the feature.

Boiled Water posted:Being good might not be enough with windows 10 arm x86 emulation rolling out Just lol if you think slow rear end ARM chips further slowed down by doing x86 emulation are going to be relevant. Not even the worst current AMD chips are slow enough to be seriously threatened by that.

Boiled Water posted:It'll be good enough for most people who buy facebooking laptops that it won't matter. Turbonerds are another matter but that market isn't as large. No it absolutely will not. I don't know where this idea keeps coming from but it's not 2002 anymore, average users aren't using sites that are mostly static text with a few images, they're using websites with javascript poo poo and HTML5 video splattered all over the place and it's quite performance sensitive. Especially when they're on Facebook and playing Facebook games. It's "just a browser" but it's a browser running so much stuff. Maybe someone who hadn't gotten their hands on a new computer in 10 years wouldn't notice the difference, but that's not going to be a lot of people. ARM CPUs for consumer applications remain quite proportionally slow, and x86 emulation on top of that is a major slow down. Just ask anyone how nice it was to use the initial version of Office for those discontinued ARM Surface devices, when it was first available and it was just x86 office mostly running in an emulation layer! Hint - it was really slow just working with simple powerpoints and word documents, let alone excel.

The Lightning connector is garbage for reliability, even beyond the lack of pins it has. And it definitely couldn't carry the 5 amp/20 volt (that's 100 watt) power that a USB-C connector can be specced for under USB Power Delivery.

EdEddnEddy posted:Does GloFo do business with any other company and product? If so Who/What?

They build the actual chips for tons of companies, including Qualcomm and Broadcom.

apropos man posted:Do AMD have the equivalent of Intel's microcode running on their CPU's? Yes, it's pretty much the only way to implement the modern x86-64 instruction set with necessary compatibility for old programs, without doing much more complicated hardware designs. apropos man posted:With microcode being proprietary I'd expect that if AMD 'open sourced' their CPU code it'd add to the reasons for people to switch. People who would care about that sort of thing are probably going to demand all the other parts in the system be "open source" in a similar way before they really care to buy a system over it. It would also likely cost a lot of money/time with lawyers to go over all of AMD's current CPU microcode and ensure that it's in a state where it can be legally released into a proper open source license. There may be contracted or otherwise purchased work in there from an outside company that AMD can't unilaterally release for open source use.

Platystemon posted:Is open source microcode something that even Richard M. Stallman would care about?

For a while he used a Chinese MIPS-clone based laptop specifically because it was the only one with open source firmware, now he uses an old ThinkPad with an open source replacement BIOS. He probably would go out and upgrade to something new that had open source microcode if it could also support an open source UEFI boot environment.

apropos man posted:But why would Intel require this amount of access?:

"Intel" doesn't have this access. You, the computer owner or company sysadmin, have this access. On older systems you needed DRAC or other similar remote management add-ons from various vendors to get this functionality, on newer systems it's simply built into the CPU.

apropos man posted:Well I was insinuating that, although a car needs steering and power delivery, does a CPU need closed-source firmware?

Yes? They literally need firmware to work just like most other components in your computer. And no one's funded an open source replacement project, so it's gonna be closed source.

fishmech fucked around with this message at 22:30 on Jan 21, 2017

apropos man posted:How do you know for sure? I would be interested to see the result of fitting some kind of breakout board onto a network cable to see if there's any traffic from the motherboard's NIC which could be attributed to the ME. It might, or even most probably, produce no extra traffic but it'd be good to know. What do you mean, "how do you know?" It's literally what it is. It's intended for use on a local/corporate network, just like the older Dell DRAC, HP iLO, IBM RSA or American Megatrends MegaRAC, although an incompetent network setup could expose it to the wider internet just as any sort of controls can accidentally be exposed to the internet (for instance, say you had remote desktop serving set up for your corporate intranet, but some networking was hosed up and now all those are exposed to the internet where someone can attempt to use it). But when we're considering that scenario, anyone could try to get in. There's no indication that Intel themselves would have special access that no one else does.

Have there been any leaks of Zen CPUs that are useful for laptops?

Boiled Water posted:This leaves the question why pay for eight lovely cores when four would've done the job? Because 4 cores wouldn't be available fast enough to do what they wanted, at a price the console makers could stand while keeping the console affordable. And don't forget that the Xbox 360 was already 3 core/6 thread. So many common game engines already had optimization for at least that many threads. This sometimes even caused problems for PC ports: games like GTA IV on the PC just plain refused to run well on single core or dual core systems that people had at the time the ports came out, but ran great on higher core/thread counts afterwards.

WhyteRyce posted:I thought Intel's deal let them charge the same price throughout the life of the console. So instead of the cost going down over the life of the console, Intel kept getting to charge the same price for a laughably out-dated CPU That's very normal for console CPUs. The initial price usually is less than what the CPU maker could have had, but then they get to keep the same price for 5-10 years of manufacture and come out ahead once production really ramps up.

My L7500 @ 1.6 GHz does 214/425.

Do any of the people with early preview hardware have results from browser-based benchmarks (like SunSpider, JetStream, etc)? I've been trying to search for them and not coming up with anything, and frankly being able to handle various in-browser tasks is pretty important.
