|

I'm looking for Python courses that I can do fully from an IDE, REPL or failing that through Jupyter notebooks. About 6-7 years ago I did this great interactive course in R that was delivered entirely through R itself as a package you ran, and all of the tutorials and exercises were done right from inside RStudio. I really enjoyed the "immersive" nature of it. I tried searching for something like this for Python but all I can find are interactive courses online that are taught through a web browser IDE. Maybe it's a bit picky but I found something appealing about learning a language while getting familiar with the tools themselves, and about it being fully automated and even possible offline. Something that I could run in VS Code would be fantastic. I've found a few courses taught over Jupyter notebooks which will be fine too obviously, but I'm just curious if anyone knows any Python courses that run fully independently as packages.

|

|

|

|

|

| # ¿ Apr 28, 2024 20:45 |

|

I use a jupyterhub environment with my team members, but some of them are just learning python and coding in general. I'd like to make the onboarding a bit easier / automated: for example, automating the terminal and bash steps they need to create SSH profiles so they can connect to gitlab, and giving them a curated conda env kernel that has all the stuff they need installed. What's the best way to do this? Can I save a conda env config in a central place in jupyterhub so others can use it? I've seen some onboarding-type .sh bash scripts that look interesting, anyone got any good tips on this? I'm also fairly new to the python and coding world.

|

|

|

|

C2C - 2.0 posted: More questions: Gonna build a question on your question because I realized that I have no idea why I don't use matplotlib. Is there a reason specifically why matplotlib is your choice for viz? My go-to has always been more high-level packages, and I'm particularly fond of Altair or plotly or seaborn. Throwing your JSON into a pandas dataframe and then just declaring your chart type and which columns to encode as x and y markers is just so easy. Though I guess if your JSON is heavily nested then you'd have to flatten it or remodel it to work easily in a pandas df. Is there more interesting control that comes from matplotlib that makes it worth it? I'm really into data viz, so I've been debating whether to learn d3js or maybe its higher-level bindings in vega, but man I really wish I didn't have to get out of Python to do interactive and dynamic viz stuff, so I'm always on the lookout for something better. Oysters Autobio fucked around with this message at 03:45 on Mar 2, 2023 |

|

|

|

Thanks for the answers about matplotlib. Would love any suggestions folks might have on courses, books, videos or other learning content where I can start learning a bit more about actual Python development beyond the basics of understanding dicts, loops, etc., and something that's focused on the data analytics, engineering or science domain. I'm very interested in learning more design patterns, but what I would particularly love is to find tutorials that teach a given design pattern with examples that are more aligned with data-related domains like data engineering, data analytics or data science. A lot of the stuff I've been able to find seems more angled towards building a web app for like, a company or inventory system or something, but I'm interested in learning how I could apply these patterns for building out applications that fit my day to day. I'm a data analyst (though more like BI analyst in terms of actual job functions) whose main deliverable is basically tableau dashboards and maybe other ad-hoc visualisations or reports in Jupyter. But, like many places, my infrastructure is a shitshow/joke so it's just a bunch of csv files being emailed around. Would love to learn how to actually build applications that could make my life or my team's life a bit better. Like, there's only like maybe 3-4 different csv structures we get in each dashboard product we make, so sure I could bring each one in and use pandas to clean it up each and every time, but it seems like it'd be worth the effort (and more interesting frankly) to make an actual tool rather than only learning python for one-off stuff. I'd love to do a tutorial that taught some design patterns and had you build one program end-to-end along with tests and the like.
I've seen some that are for like, making a to-do list, but I'm having trouble finding examples that are like, okay we're gonna build a small app to automate cleaning a csv, or more advanced like, building a pipeline that takes data from these databases/APIs, does some things to it, then sends it to this database. Oysters Autobio fucked around with this message at 22:21 on Mar 4, 2023 |

|

|

|

Thanks, yeah I think my ask is a bit too hyper-specific so I'll widen my question a bit. Other than SA, what other social media or platforms are people using for learning python and programming in general? I've been really frustrated lately that my go-to learning strategy is still opening up Google first. With Google I realized that I just keep finding the same SEO-padding blogs that say next to nothing in terms of actually useful tutorials. It just feels like they're all banal generalities about why x is better than y. And because of the SEO algo, every single startup, open source project or even indie dev has to min-max social media marketing with just banal blog posts about how "LoFi-SQL is totally the best new approach. LoFi-SQL is a database approach adopted in repos like <OPs_Project>". Reddit and HN feel the same way because the useful threads are buried deep in scattered posts. Say what you want about megathreads but at least they're oriented around categories and topics. Reddit et al just feels like having to sort through endless small megathreads generated by the same repos, or on the other hand by lurkers who are just trying to figure out wtf LoFi-SQL is and why it's part of the neomodern data stack. Any good subreddits, forums, bulletin boards, or hell I'll get into listservs or mailing lists or w/e, but just some places where people actually discuss how they do stuff in python. Can't believe this dumb web 1.0 comedy forum has some of the best technical resources I've been able to find, so I'll take any suggestion even if it's a sub forum for New York renters that happens to have a decent tech community. Oysters Autobio fucked around with this message at 17:10 on Mar 6, 2023 |

|

|

|

Falcon2001 posted: I've also hung out in the Python discord community which seems to be pretty reasonable in terms of people asking questions and walking through stuff. Oh hey both of these are awesome resources too, thanks for sharing. Someone should set up an open source project that assigns issue tickets and WIPs as code kata challenges that independently look random but actually combine to build a codebase, lol. Definitely very useful for reusability and practice. Zoracle Zed posted: my #1 suggestion would be to start contributing to open source projects you're already using. I've wanted to do this but I'm feeling a bit intimidated and overly anxious (which is silly now that I say it out loud, I know) about looking like an idiot when submitting an MR. Worried my Python abilities aren't "meta" enough for actually contributing somewhere. But, you're right actually. Plus if it's something I think I could use at work then it actually might be significantly easier to try and build a couple of features I might find useful into an existing codebase rather than trying to re-make whatever edge-case and being intimidated by the whole initial setup. And yeah SEO really is sad. Like, the "dead internet" theory as a literal actual existing thing is a paranoid conspiracy theory (everyone's a bot!! 🤖) but as a way to look at Google advertising-driven content it's pretty much allegorical to websites creating this padded blog poo poo to game the SEO algo. I'm sure in the last few years some of these are either sped up or assisted by NLP generation of some kind. It's sadly only going to get worse I imagine. Oysters Autobio fucked around with this message at 17:52 on Mar 6, 2023 |

|

|

|

I'm guessing you're talking about GUIs without getting into any web dev, right? Cause if you're willing to go the web app route, Flask is a pretty great way to use python with some basic bootstrap html and CSS (it uses Jinja templates for parameterizing html). Outside web dev, I've been curious to try out kivy for this since it claims to have a bit more modern looking UI.

|

|

|

|

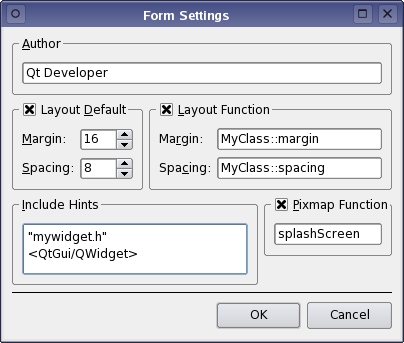

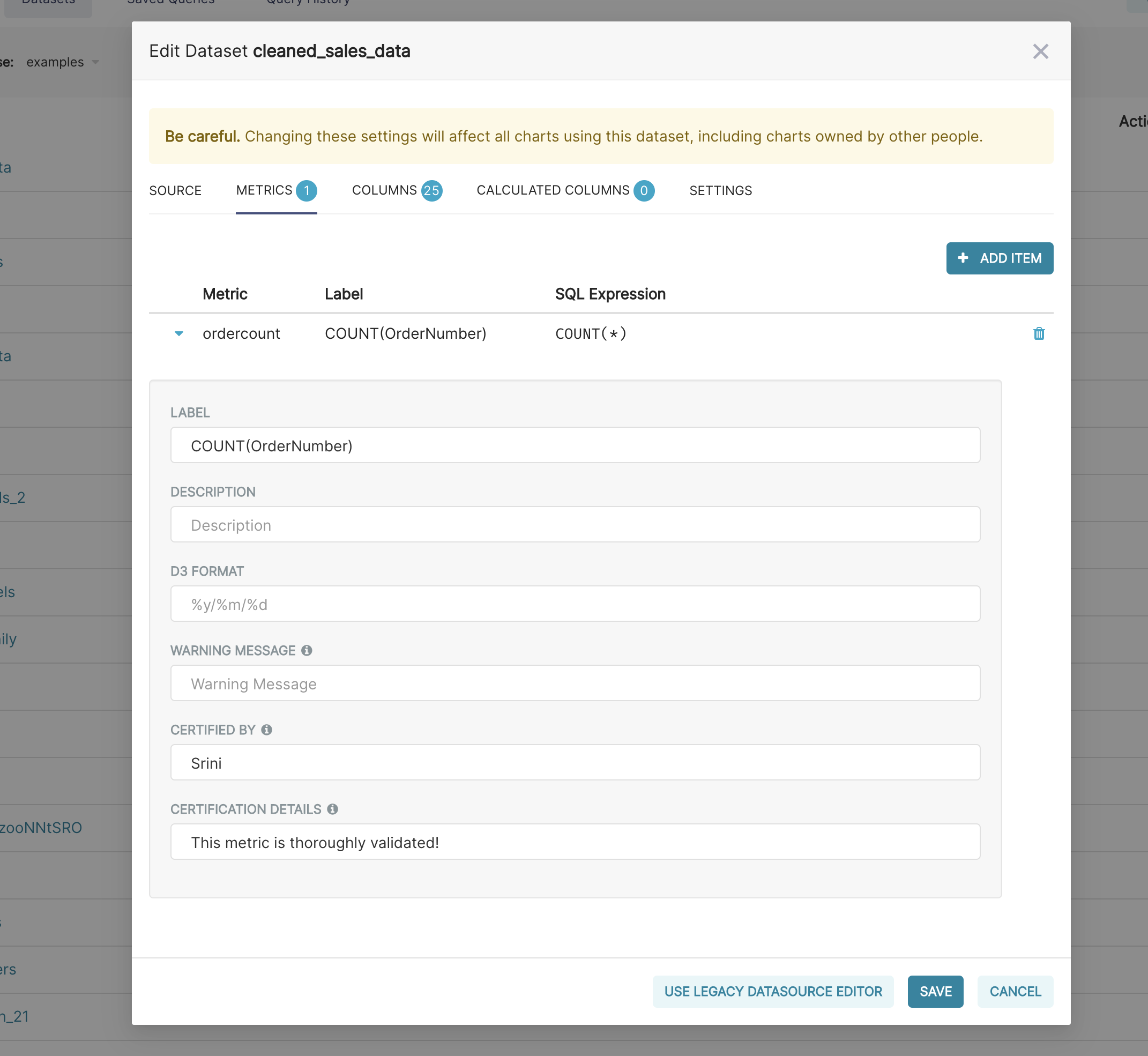

Yeah I've yet to see any Python based GUI not just look like a slightly more modern version of Visual Basic. Like all the examples in qt designer or tkinter all just scream Microsoft office modal box. I think unfortunately we've all been exposed to too many web based GUIs which have defined the aesthetic and UX expectations in the past decade of "everything-as-a-webapp". Though it's surprising because all that it takes is some thicker/heavier input boxes, minimal gradients/colors/container lines, some highlight responsiveness and heavy padding so that your GUI looks less like this and more like this:  Granted the latter is a React front end, but it's still surprising you need these godawful webpack javascript monstrosity compilers to do anything in the front end, but that's the web for you 🤷. The ppl who work on this as their day job all use javascript now so it's the most supported even if it's garbage. The only exceptions I've seen as far as python goes are a few front end libraries like plotly Dash and streamlit. Granted these are aimed at the data world so I dunno how useful they are for anything more complex than a demo app. If it's just an internal tool, another, somewhat easier option is Jupyter notebooks with ipywidgets and other ipython based widget libraries. ipyvuetify for example looks great. Google's Colaboratory makes the best looking notebooks though they're all cloud based. If you still like working out of an IDE you can get VS Code extensions for them too. Oysters Autobio fucked around with this message at 08:45 on Mar 9, 2023 |

|

|

|

QuarkJets posted:Qt defaults to using the local system theme, so by default it looks Windows-like on Windows, or Mac-like on Mac, etc. That's actually a big part of the curb appeal, you get a lot of professionalism by default because that's usually what a GUI developer wants. But it's not limited to system themes, you can drop in anything you want (there are tons of cool looking themes in *nix) and make entirely custom things too. Ah okay that makes sense, though even Windows has moved away from the windows-like GUI and into something more minimalistic and modern. If I was a generalist developer (i.e. not exclusively frontend / GUI) then I could see the appeal since it just inherits the default look and therefore is functional without completely looking like a hacked together Excel VBA form. I imagine with Qt you could do custom theming?

|

|

|

|

I'm a bit stuck on how to fix this beyond saying gently caress it and waiting for a sane data warehouse or API or something. I'm trying to automate some processing of spreadsheets that eventually we turn into a Tableau dashboard but I'm completely stuck with password protection on Excel. It's one of these "encrypted password" level of passwords so unlike the Protect Workbook or Protect Worksheet I can't seem to remove the password from even within Excel. I've run into this poo poo before so I decided to go for working this into pandas and openpyxl. According to google and stack overflow, openpyxl should be able to read an .xlsx file even if "password protected", and then just saving it is enough to remove the password protection. Not clear if this also refers to the "encrypted password" setting or just the Protected Workbook passwords. Problem is whenever I try to read one of the .xlsx files it outputs a "Bad Zip file error." Tried this both with using openpyxl as a read engine on pandas and openpyxl on its own. According to google this is usually because the sheets aren't actually configured as tables so when openpyxl tries to read the .xlsx file (which is basically a .zip) it doesn't see anything. Lo and behold on manual inspection none of the sheets are formatted as tables, they're just text on cells. Now, the solution in this case is usually just to read it in as a .csv, but I can't even open it in openpyxl to begin with so aside from doing it manually I don't really know what other ways I could actually work with these stupid things. Luckily aside from the formatting, the tables themselves are actually fairly clean.
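One possible cause worth ruling out before blaming the sheet layout: a workbook saved with an "encrypt with password" (password-to-open) setting is no longer a plain zip archive. It is stored inside an OLE compound file, so any zip-based reader, openpyxl included, can fail with a bad zip file error no matter what is in the sheets. The sketch below uses only the standard library to check which container a file really is; `sniff_container` is a hypothetical helper name, not part of openpyxl or pandas.

```python
import zipfile

# Leading bytes of an OLE2 / Compound File Binary container, which is how
# password-to-open (encrypted) Office files are stored on disk.
OLE_MAGIC = bytes.fromhex("D0CF11E0A1B11AE1")


def sniff_container(path):
    """Return 'ole', 'zip', or 'unknown' based on the file's actual contents."""
    with open(path, "rb") as fh:
        head = fh.read(8)
    if head == OLE_MAGIC:
        return "ole"  # likely encrypted (or a legacy .xls renamed to .xlsx)
    if zipfile.is_zipfile(path):
        return "zip"  # ordinary .xlsx that openpyxl should be able to open
    return "unknown"
```

If this reports `ole` for an `.xlsx` file, the file probably has to be decrypted with its password before openpyxl or pandas can read it. The third-party `msoffcrypto-tool` package is one option people use for that step; treat that as a pointer to investigate rather than a tested recipe for these particular files.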

|

|

|

|

CarForumPoster posted: I’m having a hard time understanding the particulars here. By unzipping it do you mean with just like 7zip or something? I'll take a look and see if that's something I can do. I have come across pywin32 but I wanted to exhaust more standard or common approaches and make sure I wasn't missing some more obvious solution.

|

|

|

|

Sigh, all this talk about the shittiness of Excel just keeps reminding me that while I really love UX/UI and data viz stuff, just thinking about JavaScript, CSS, HTML/DOM and bundle packages poo poo gives me nightmares. C'mon, give me a full Python web dev stack. I know I've already complained about this but it still just doesn't make sense given Python's ubiquity, especially with ML and DS being all the rage. Is it too much to ask for an entire industry of professionals to completely replace a tech stack? Huh???

|

|

|

|

Oh ya true, I haven't fully explored Dash enough and I really should.

|

|

|

|

I think I've already come in here with similar questions, so I apologize in advance if there's some repetition. I'm a data analyst who mainly works in GUIs like Tableau, PowerBI etc. but I'm interested in upskilling with more skills in python and dev-work in general around data analysis (also "data science", but realistically only the simple applications of ML, since my day-to-day responsibilities aren't in building models. I also don't have a CS, heavy stats or engineering background.). I want to learn how to do more of my work in a "devops" or software developer way, both for my own general upskilling/interest and because I really enjoy the consistency and reproducibility of working with the same set of tools that aren't based on GUIs (love the concept of TDD and design patterns, and interestingly they feel less daunting than having to tackle some giant-bloated Tableau dashboard because templating in BI tools absolutely blows). My work will pay for some online learning, so I really want to pick the best platform for me, but I've been having trouble finding something that is both super interactive (i.e. has some sort of "fake" web dev IDE that simulates what actually doing the skills would "feel" like) while not being so abstracted that it's completely like mad-libs / fill-in-the-blanks (DataCamp often feels like this), and that is still based around actual projects and not trying to teach you python through rote memorization (yes, I understand I need to just "write python", but my ADHD brain doesn't work with just "pushing through" something, I have to be actually doing something tangible for my focus to stay on enough that I'm actually writing code). I'd much prefer to learn how to build a python package end-to-end and at first only understand like 20% of the python techniques themselves, so that when I'm applying something it's actually being done in the context of a tangible end goal.
The whole "import foo and baz" poo poo is absolutely useless to me because I'll never remember that unless I've applied it and built some sort of memory of what this was doing in the context of a problem. I get frustrated seeing the kind of examples that should only be in documentation, not an actual tutorial. poo poo like this:

code:

If the examples were stupid but still "practical" they would work 1000% better, like "John is writing an app for a lemonade stand and needs to write a function that groups lemonade drinks by brands. He has a csv with all the brands and all the drinks, here is what he would do..." It's also tricky to find "realistic" material for using python in a low-level "applied" sort of way. I'm not a web developer, so while I don't need to learn how to build an entire app, I still don't want all the code I'm writing just to be scratch notebooks filled with pandas queries, especially since very often what I'm asked to do is very repeatable and wouldn't take much work to parameterize, or at least to be made in such a way that other colleagues could re-use it. Anyways, bit of a long rant, sorry, had to just get it out on screen here. If anyone knows of anything that's near the combo of what I'm talking about (data-oriented, interactive / simulated environment and project/contextually focused) then it would be much appreciated for my stupid overthinking ADHD brain.
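For what it's worth, the lemonade-stand example described above might look something like the sketch below. The column names (`brand`, `drink`) and the sample data are made up for illustration, and it sticks to the standard library so it runs anywhere.

```python
import csv
import io
from collections import defaultdict

# Made-up sample data standing in for John's CSV file.
SAMPLE_CSV = """brand,drink
SunnyCo,Classic Lemonade
SunnyCo,Pink Lemonade
ZestWorks,Mint Lemonade
"""


def group_drinks_by_brand(csv_file):
    """Read rows with 'brand' and 'drink' columns and list the drinks per brand."""
    groups = defaultdict(list)
    for row in csv.DictReader(csv_file):
        groups[row["brand"].strip()].append(row["drink"].strip())
    return dict(groups)


if __name__ == "__main__":
    # io.StringIO lets the sample text behave like an opened file.
    grouped = group_drinks_by_brand(io.StringIO(SAMPLE_CSV))
    for brand, drinks in grouped.items():
        print(brand, "->", ", ".join(drinks))
```

With a real file, the same function would be called as `group_drinks_by_brand(open("drinks.csv", newline=""))`, where `drinks.csv` is a placeholder name.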

|

|

|

|

Sometimes I want to go back to R and its tidyverse, but everything at work is python so I know I don't have the same luxury. Two questions here. First, does anyone have good resources for applying TDD to data analysis or ETL workflows? Specifically something that is dataframe friendly. Love the idea of TDD, but every tutorial I've found on testing is focused on objects and other applications, so I'd rather not try and reinvent my own data testing process if there's a really great and easy way to do it while staying in the dataframe abstraction or pandas-world.

Second question: is there a pandas dataframe-friendly wrapper someone has made for interacting with APIs, like the requests library but abstracted with some helper functions for iterating through columns? Or are people just using .apply() and .map() on a dataframe column they've written into a payload? I'm still rather new with pandas, and I can see the little footguns in accidentally using a method that results in a column being processed without maintaining order or the key:value relationship with a unique identifier. If there was a declarative dataframe-oriented package that just let me do something like:

Python code:

Where 'column2' is a column from a pandas dataframe that I want to POST to an API for it to process in some way (translation or whatever) and the append boolean tells it to append a new column rather than replacing it with the results. With requests I always feel uneasy or unsure of how I'm POSTing a dataframe column as a dict, then adding that results dict back as a new column. Totally get why from a dev perspective all this 'everything as a REST API' microservices stuff makes sense, but I've been finding it difficult for us dumbass data analysts who mainly work with SQL calls to a db to adapt. cue Hank Hill meme: "Do I look like I know what the hell a JSON is? I just want a table of a goddamn hotdog"
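On the first question, one common shape for dataframe-friendly tests is: build a tiny input frame by hand, run one transformation, and compare against a hand-written expected frame. The sketch below assumes pandas is installed; `clean_sales` and its columns are hypothetical stand-ins for a real cleaning step, and the `test_` functions follow the naming pytest collects by default.

```python
import pandas as pd
from pandas.testing import assert_frame_equal


def clean_sales(df):
    """Hypothetical cleaning step: tidy column names, drop rows with no amount."""
    out = df.rename(columns=lambda name: name.strip().lower())
    out = out.dropna(subset=["amount"])
    # assign() returns a new frame, so the caller's input is never modified.
    out = out.assign(amount=out["amount"].astype(float))
    return out.reset_index(drop=True)


def test_clean_sales_drops_missing_amounts():
    raw = pd.DataFrame({" Region ": ["east", "west"], "Amount": ["10.5", None]})
    expected = pd.DataFrame({"region": ["east"], "amount": [10.5]})
    assert_frame_equal(clean_sales(raw), expected)


def test_clean_sales_amount_is_numeric():
    raw = pd.DataFrame({"Region": ["east"], "Amount": ["3"]})
    assert clean_sales(raw)["amount"].dtype == "float64"
```

Each test covers one small, nameable behaviour of one function, which is roughly what a "unit" tends to mean for dataframe code.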

|

|

|

|

DoctorTristan posted:I'm having a hard time parsing what you're actually trying to do here because this post is jumping around all over the place. I'm sorry you're completely right. Thanks for being so patient and kind with your response. Bit flustered as you could tell. quote:

Thanks for the detail on this, and your example is right on what I'm looking at. My biggest confusion has been how to define testable units when handling dataframes but I didn't realize it could be as simple as what you said about testing a column. I just need to find some examples or tutorials of what this looks like in pandas or pyspark.

quote:AFAIK there is no generic package that saves you the work of doing

Sorry, let me specify what I mean here a bit better. Consider in my case that dataframes (and their rows and columns) are the only data structures that would ever be used with this web API. I'd like to make or use an interface for a user who:
- has a dataframe
- selects a column
- has that column iterated over, with each row being POSTed to a web API
- gets the results appended as a new column in the same order so they match

Consider a scenario like a translation API, where I want to translate each row of data in a single column and have the translation appended as a new column with the rows matching. Because translation using ML-based models is influenced by all of the tokens being passed through it, I can't send the entire column as a single JSON array and need to send and return the data row by row.

quote:If you want to work with web APIs you need to know what JSON is.

I don't *want* to work with web APIs, I'm *forced* to work with web APIs because most of the devs supporting our data analyst teams have never supported a data warehouse and only understand making web apps and microservices for everything. I wouldn't be doing this myself in the first place if we had someone responsible for ETL and having the data available in an analytically useful format alongside other data I need to analyze it with.
I understand JSON a little myself but I have other analyst colleagues who like me only know SQL and a little bit of python, so I figured hey if I have to figure out how to do this why not spend the effort taking that knowledge and making it a little more reproducible so in the future it's super easy to do each time with a new dataframe. Oysters Autobio fucked around with this message at 16:05 on Jun 10, 2023 |
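The interface described above (dataframe in, one column sent row by row, results appended as a matching new column) can be sketched in a few lines. This is a minimal illustration assuming pandas is installed, not an existing package: `post_json`, `add_column_from_api`, the endpoint URL and the `"translation"` response key are all hypothetical names. The HTTP call uses the standard library's `urllib` so the block is self-contained; `requests` would work the same way.

```python
import json
import urllib.request

import pandas as pd


def post_json(url, payload):
    """POST one JSON payload and return the decoded JSON reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def add_column_from_api(df, source, target, call):
    """Run `call` on every value of df[source]; return a copy with df[target] added.

    Series.map keeps the original index, so each result stays on its own row.
    """
    results = df[source].map(call)
    return df.assign(**{target: results})


def translate(text):
    # Hypothetical endpoint and response shape; adjust to the real API.
    reply = post_json("https://example.invalid/translate", {"text": text})
    return reply["translation"]


# Example call (commented out because the endpoint above is a placeholder):
# translated = add_column_from_api(df, "column2", "column2_translated", translate)
```

Passing the per-row function in as `call` keeps the dataframe logic separate from the network code, so the helper can be tested with a plain Python function instead of a live API.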

|

|

|

Macichne Leainig posted: You can also define the new column on the dataframe ahead of time and then just do like row.field = value as well, but now we're getting down into "how do you prefer to write your pandas" Absolutely nothing wrong with sharing (at least to me that is, lol) advice on "how do you prefer to write your pandas". It's much appreciated. The one part I'm not sure of is how to ensure or test that the new column I've created is "properly lined up" with the original input rows. Can I somehow assign some kind of primary key to these rows so that when my new column is added I can quickly see "ok awesome, input row 43 from the original column lines up with output row 43"? Does that make sense at all? Or am I being a bit too concerned here? I'm still fairly new to Python, coming from mainly a SQL background, so the thought of generating a list based off inputs from a column and then attaching the new outputs column and knowing 100% that they match is for some reason a big concern of mine. Oysters Autobio fucked around with this message at 03:06 on Jun 15, 2023 |
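In pandas the index can play the role of that primary key: when a Series is assigned to a dataframe column, values are matched by index label, not by position. A small sketch of the idea, assuming pandas is installed; `row_id`, the sample data and `fake_api` are made up for illustration.

```python
import pandas as pd

df = pd.DataFrame(
    {"row_id": [101, 102, 103], "text": ["uno", "dos", "tres"]}
).set_index("row_id")  # explicit key, much like a primary key in SQL


def fake_api(value):
    """Stand-in for a real API call (hypothetical)."""
    return value.upper()


# Build the results keyed by row_id rather than by list position.
results = pd.Series({key: fake_api(value) for key, value in df["text"].items()})

# Shuffle on purpose: column assignment aligns on the index, so order is irrelevant.
df["text_out"] = results.sample(frac=1, random_state=0)

# Sanity checks: keys are unique, every row got a result, and a spot check matches.
assert df.index.is_unique
assert df["text_out"].notna().all()
assert df.loc[102, "text_out"] == "DOS"
```

The risk of misalignment mostly appears when results are attached as a plain Python list or NumPy array, since those carry no labels and are matched purely by position.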

|

|

|

I'm helping out a data analysis team adopt python and jupyter notebooks for their work rather than Excel or Tableau or whatever. The DAs aren't writing python for it to be deployed anywhere (i.e. not for ETL or ongoing pipelines), they're mainly using various libraries like pandas and the like for data cleaning and analysis. Their end products are reports, or identified ETL needs that they can prototype and then hand off to a data engineer to productionize into a datamart or whatever. I want to introduce some standards for readability and maybe even convince them to use our in-house gitlab for VC. But all the resources I've seen on these topics are understandably related to SWE best practices for maintainable codebases. I don't want to go in heavy handed by imposing all of these barriers, since the goal isn't to impose python or jupyter on them but rather to facilitate its introduction and the benefits that come from adopting the tools. That's the number one priority here, since everything is already set up for them with a jupyterhub instance (though this could use some love in terms of default configs). So, does anyone know of any decent data sciency or data analysis oriented python code style books aimed at those just using python as scripts, which still nudge them to write descriptive classes / variables / functions and follow other readability standards but strip away the SWE stuff that's less important for people just writing jupyter notebooks? Similarly, any suggestions for tools or packages I should consider would be great. Secondly, is it a bad idea to abstract different python packages and capabilities behind a monolithic custom library as a set of utilities for them? Like I posted earlier about using requests, would it be a bad practice to create a python package like "utilitytoolkit" and then, when they ask for new use cases they can't quite figure out or think might be nice to simplify because they do it a lot, just publish new modules and add on to it?
Like say there's a really common API they use, I could abstract using python requests to run a GET call and transform the JSON payload into a pandas dataframe into a module so all they need to do is "utility.toolkit()"? I don't want to monkey around with APIs or microservices when everyone is just using python anyways. Oysters Autobio fucked around with this message at 12:35 on Aug 1, 2023 |
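A wrapper like that can be quite small. The sketch below assumes pandas is installed and that the API returns JSON; `get_frame`, `records_to_frame` and the idea of a `record_path` key are illustrative choices, not an established package. It uses the standard library for the GET so it has no extra dependencies.

```python
import json
import urllib.request

import pandas as pd


def records_to_frame(payload, record_path=None):
    """Flatten a decoded JSON payload into a dataframe.

    Nested dicts become dotted column names such as 'owner.name'.
    """
    return pd.json_normalize(payload, record_path=record_path)


def get_frame(url, record_path=None):
    """GET a JSON document from `url` and return it as a dataframe."""
    with urllib.request.urlopen(url) as resp:
        payload = json.load(resp)
    return records_to_frame(payload, record_path=record_path)
```

Splitting the fetch from the flattening means the flattening can be tested with a literal dict, and analysts only ever see one call, for example `get_frame("https://example.invalid/items", record_path="items")`, where the URL is a placeholder.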

|

|

|

CompeAnansi posted: What's wrong with Tableau for data analysts? I understand maybe wanting to be able to explore the data in python if you want, but visualizations generally look better in Tableau and they're more interactive. The problem with Tableau that I find is that it's the worst software ever designed in terms of reusability. You could work on one component or dashboard sheet for days, but you can't just swap out the data for anything similar, so nothing is modular or re-useable. That lack of modularization makes it only good if you decide to deploy and maintain a standing dashboard that serves a recurring need. Once you sink your time into it though, don't ever expect to be able to gain anything back. It's very frustrating to see old dashboards with what look like (from PDF printouts) really awesome visualizations, but the moment you open one, it throws up connection errors. I find that doesn't make it a good platform for quick visualization for a one-off report or product. Aside from those things, I am also just not a fan of the "developer experience" if you will. In addition to not being able to re-use components and hot-swap data, I hate how none of the UI is declarative. Instead of telling the GUI what you want to see, you have to randomly drop in esoteric combinations of dimensions and measures until you get the result you were looking for. Absolutely hate that design. Finally, it's expensive so we have limited licenses for even viewers, so fewer people can use your products. quote:If they're just using python for cleaning data, shouldn't that be the job of the data engineers? Hahahaha, yeah I ask myself that every day. No, our data engineers don't clean data, they just ETL (I guess without the 'T') into the data lake and "there you go, have fun". Most of our engineers are working on greenfield replacement projects and don't want to support our legacy self-rolled on-prem hadoop stuff, nor were any of them around when it was deployed.

|

|

|

|

I'm getting a little lost here in the "meta" of developing an internal utility app which is essentially just a wrapper of a few existing packages and some existing 3rd party APIs, along with some custom extensions I write using the built-in extension functions for one of these packages. The goal of the app is basically to take some existing business-specific workflows that currently only I do in Python on an ad-hoc basis, and turn them into an app. These workflows are very domain specific and each currently requires knowledge of a bunch of org policy, legal stuff etc. to do, but I'm the only one who knows how to do it. Luckily for my managers, I'm a data analyst who's also been messing around with python and thought hey, why not learn some dev skills and upskill a bit from just being an adhoc script monkey, so that my colleagues who might be forced to do the same work don't have to read and materialize poorly documented esoteric business logic and policy that should've been developed into an app years ago. I could see this app being extended or used down the road by more experienced developers as part of some ETL pipelines or a microservice. But this isn't certain. So my thinking here was that I wanted to keep this mostly in Python and not deal with webservers and HTTP and all that, because the current usecase is just for other analysts comfortable in jupyter notebooks to be able to import this as a python package into their workspace to write reports. After a lot of analysis paralysis I've narrowed it down to making this in a "modulith" style because, while my initial usecase is just as a python package, I could see myself extending this into a streamlit, plotly dash or flask app to learn a little GUI stuff. As I understand it, moduliths have you separating your code into separate components, each of them containing its own application and domain/business logic, with internal APIs to talk to each other. Would something like this be an okay use of a modulith pattern?
It's basically my internal compromise between "gently caress it, this is a tool for me and my team and I don't need to gently caress with web frameworks for a working MVP" and "but I'm trying to learn best practices, and if it works well it could very likely be extended by some real devs who would be stuck with my spaghetti bullshit." Part of the reason "I care" is because in our codebase I can see that other analysts also tackled these exact same requirements but never took the time to make them into an actual application; instead it's just a bunch of notebooks and example tutorials. So while I read through them because I'm a nerd, most of my other colleagues aren't interested in python or dev, so they want to just focus on the business workflow parts they're being asked to do. Up until now new folks have always come to me for help, but so much of my "knowledge" is esoteric recreated archeology from past docs of people who did this but never bothered to actually coherently design these workflows into something easily repeatable. I know others are frustrated too because I'm pretty much the only one who knows this stuff, and so when they turn to me I don't really have much to show for it. FYI, I know I'm overthinking this. This post is more of a way to organize my planning and thoughts, so please ignore if it's word salad. Oysters Autobio fucked around with this message at 18:48 on Aug 31, 2023 |

|

|

|

Are there any decent regex generators for Python? I'm talking here about a library that has functions for constructing common string searches in a "pythonic way" and outputs a raw regex pattern. Terrible written-on-phone example:

Python code:

Similarly, are there any decent "reverse engineering" regex tools in python? Like a semi-supervised ML-based library that lets you input a bunch of similar strings and train a model to generate a regex string to parse them? Oysters Autobio fucked around with this message at 15:32 on Oct 12, 2023 |
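To make the first idea concrete, here is a toy sketch of what such a builder could look like. It is not an existing library: `Pattern` and its method names are invented for illustration, and it only relies on `re.escape` from the standard library to keep literal text safe.

```python
import re


class Pattern:
    """Tiny, hypothetical fluent builder that emits a raw regex string."""

    def __init__(self, parts=()):
        self._parts = tuple(parts)

    def _add(self, fragment):
        # Return a new builder each time so partial patterns can be reused.
        return Pattern(self._parts + (fragment,))

    def literal(self, text):
        return self._add(re.escape(text))

    def digits(self, count=None):
        return self._add(r"\d+" if count is None else r"\d{%d}" % count)

    def one_of(self, *options):
        return self._add("(?:" + "|".join(re.escape(o) for o in options) + ")")

    def build(self):
        return "".join(self._parts)


# Matches things like "INV-2023" or "PO-0042".
pattern = Pattern().one_of("INV", "PO").literal("-").digits(4).build()
print(pattern)
print(bool(re.fullmatch(pattern, "PO-2023")))
```

The output of `build()` is an ordinary pattern string, so it can be passed to `re.compile`, pandas string methods, or anything else that accepts a regex.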

|

|

|

Falcon2001 posted:Anyone have any experience with one of the Python-only frontend frameworks like Anvil or JustPy? I have some internal tooling I'd like to build with not a lot of time, and all things being equal, it'd be nice to not have to learn JS and a modern frontend library. The alternative would just be a bunch of CLI stuff using Click/etc, which isn't awful (or Textual if I decide to get really fancy) but it'd be nice to be able to just link someone to a URL. The Python front-end world seems pretty experimental to me at this point. Would absolutely love to be proven wrong because as someone who loves Python but also loves UI/UX and data viz, I'm sort of just delaying learning any JS frameworks. Are Jupyter notebooks at all in your POC here? Cause there's some half decent looking Vuetify extension for ipywidgets. Otherwise the only other one I'm familiar with is Plotly Dash which was made for data science dashboards rather than any UI. Seems all the cool kids who want to avoid the JavaScript ecosystem are using HTMX + Flask/Django. Dunno how well that would work for an internal tool.

|

|

|

|

Any good resources on managing ETL with Python? I'm still fairly new to Python, and I'm struggling to find good resources on building ETL tools and on design patterns that help automate different data cleaning and modeling requirements. Our team is often responsible for taking ad hoc spreadsheets or datasets and transforming them to a common data model/schema, as well as some processing like removing PII for GDPR compliance. I'm struggling to conceptualize what this would look like. We have a mixed team of data analysts: some are comfortable with Python, others only within the context of a Jupyter notebook. I've seen other similar in-house projects that created custom wrappers (i.e. a "dataset" object that can then be passed through various functions), but I'd rather use tried-and-true patterns or, even better, a framework/library made by smarter people. Really what I'm looking for is inspiration on how others have done ETL in Python.
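For what it's worth, the shape I keep picturing is a handful of small functions chained together. This is only a sketch under assumptions: the column names, the `COLUMN_MAP` mapping, and the idea of swapping PII values for a salted hash are all placeholders I made up, and whether hashing is actually enough for a given compliance rule is a separate question.

```python
import hashlib

# Hypothetical mapping from an incoming spreadsheet's headers to a common schema.
COLUMN_MAP = {"Full Name": "name", "E-mail": "email", "Amt": "amount"}
PII_FIELDS = {"name", "email"}  # assumed PII columns in the target schema

def rename_columns(row: dict, column_map: dict) -> dict:
    """Keep only mapped columns, renamed to the common schema."""
    return {
        target: row[source]
        for source, target in column_map.items()
        if source in row
    }

def pseudonymise(row: dict, pii_fields: set, salt: str) -> dict:
    """Replace PII values with a salted SHA-256 hex digest."""
    out = dict(row)
    for field in pii_fields:
        if field in out:
            raw = (salt + str(out[field])).encode("utf-8")
            out[field] = hashlib.sha256(raw).hexdigest()
    return out

def coerce_types(row: dict) -> dict:
    """Convert the amount column from text to a float."""
    out = dict(row)
    out["amount"] = float(out["amount"])
    return out

def run_pipeline(rows: list, salt: str) -> list:
    """Apply each step in order to every row."""
    return [
        coerce_types(pseudonymise(rename_columns(row, COLUMN_MAP), PII_FIELDS, salt))
        for row in rows
    ]

raw_rows = [
    {"Full Name": "Ada Lovelace", "E-mail": "ada@example.com", "Amt": "12.50", "Notes": "x"},
]
cleaned = run_pipeline(raw_rows, salt="demo-salt")
print(cleaned)
```

A new spreadsheet layout would then only need a new mapping rather than new code, which is roughly what I'd hope a proper framework gives you out of the box.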

|

|

|

|

BAD AT STUFF posted:I'd agree with the advice that other folks gave already. One thing that makes it hard to have a single answer for this question is that there are different scales that the various frameworks operate on. If you need distributed computing and parallel task execution, then you'll want something like Airflow and PySpark to do this. If you're working on the scale of spreadsheets or individual files, something like Pandas would likely be a better option. But there are certain data formats or types that probably don't lend themselves to Pandas dataframes. Thanks a bunch to you and everyone else for the advice. Thanks especially for flagging pydantic. I think what it calls type coercion is very much what I need, though I'll need to see an actual example ETL project using it to make full sense of it. I am a bit stuck in tutorial hell on this project at the moment and struggling to start writing actual code, because I don't really know what the end state should "look" like in terms of structure.

|

|

|

|

QuarkJets posted:I'd recommend dask or spark if you predict that you'll need a solution that works with more than 1 cpu core. These frameworks work great on a single computer, in fact they're probably the easiest way to escape from the GIL - arguably with fewer pitfalls than concurrent.futures. But if you're doing something that takes 5 minutes once per month then yeah, do something simpler imo Yeah, and to be honest I'm not a big fan of pandas in general and actually prefer pyspark / sparksql. It's very much a subjective thing, but the whole "index" and "axes" abstractions are a bit annoying to my brain since I'm coming from SQL-world. I only really need the distributed processing like 50% of the time, but that's enough that I'd rather spend my time in one framework than split it between both.

|

|

|

|

Adding to the idea about a data engineering thread. I don't really follow many threads right now in SH/SC because the data stuff spans multiple megathreads.

|

|

|

|

Question for experienced folks here. I'm not a dev, just a data analyst who started picking up Python for pandas and pyspark. For the past few months I've felt like, beyond the initial basic tutorials and now using Python basically for interactive work in a Jupyter notebook, I'm sort of stuck in tutorial hell again when it comes to building better skills at making Python code and packages for *other* people to use. It's a mix of analysis paralysis (too many options and too much freedom) and a good hefty dose of imposter syndrome / anxiety over how other "real" developers and data scientists at work will judge my code. I know it's all irrational, but one practical part I'm having trouble with is the framing and design part of things. Having ADHD doesn't help either, because it's easy to accidentally fall into a rabbit hole and suddenly you're reading about pub/sub and Kafka when all you were trying to look up was how to split a column by delimiter in pandas, lol. Outside of Python I lean heavily on structure, to-do lists, framing / templates and the like to stay on track, but I'm having trouble applying any of that to Python work. For example, I love design patterns, but all of them seem to be oriented towards "non data engineering" problems, or I can't figure out how to apply them to my problems. Like, I love the idea of TDD and red/green but have no clue how I would build testing functions for data analysis or ETL. I can't seem to find more opinionated and detailed step-by-step models for creating data pipelines, ETL or EDA packages that generate reports. A lot of stuff feels like "step one draw two circles, step two draw the rest of the loving owl". A lot of this is just venting, so please ignore if it's obnoxious or vague, but any advice or thoughts on this would be great.
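To pin down what I mean by red/green for data work, here's my best guess at what it might look like, using the split-a-column-by-delimiter case from above. It's a sketch under assumptions: `split_column` is a hypothetical helper working on plain lists of dicts instead of a DataFrame, and the tests are ordinary functions with bare `assert` statements that a runner like pytest would pick up.

```python
def split_column(rows, column, delimiter, new_columns):
    """Split rows[column] on delimiter into new_columns.

    Missing trailing parts become None; the input rows are not modified.
    """
    result = []
    for row in rows:
        parts = str(row[column]).split(delimiter, maxsplit=len(new_columns) - 1)
        parts += [None] * (len(new_columns) - len(parts))
        new_row = {key: value for key, value in row.items() if key != column}
        new_row.update(zip(new_columns, parts))
        result.append(new_row)
    return result

# In red/green terms, these tests get written first and fail until the function exists.

def test_splits_on_delimiter():
    rows = [{"id": 1, "location": "Ottawa|ON"}]
    expected = [{"id": 1, "city": "Ottawa", "province": "ON"}]
    assert split_column(rows, "location", "|", ["city", "province"]) == expected

def test_missing_part_becomes_none():
    rows = [{"id": 2, "location": "Ottawa"}]
    expected = [{"id": 2, "city": "Ottawa", "province": None}]
    assert split_column(rows, "location", "|", ["city", "province"]) == expected

def test_input_rows_are_not_mutated():
    rows = [{"id": 3, "location": "Hull|QC"}]
    split_column(rows, "location", "|", ["city", "province"])
    assert rows == [{"id": 3, "location": "Hull|QC"}]

if __name__ == "__main__":
    test_splits_on_delimiter()
    test_missing_part_becomes_none()
    test_input_rows_are_not_mutated()
    print("all tests passed")
```

Each test is a tiny hand-made input plus the exact output I expect, so the "test data" is just a few rows covering one edge case each.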

|

|

|

|

Sorry for the crosspost, but I realized this question might be better suited here. I'm trying to set up my local VS Code (launched from Anaconda on Windows) to use the notebook kernel and terminal from a JupyterHub instance we use at work. The reason is that this Hub has a default conda env and kernel that's set up to abstract working with our Spark and Hadoop setup. I followed some online tutorials which had me generate a token from my JupyterHub account and then simply append it to a Jupyter extension setting that lets you set a remote Jupyter server to use as a notebook kernel. Great, it works. Problem is that this seems to only connect the notebook to the server. The VS Code terminal is still just my local machine. So how do I connect that to the remote JupyterHub? The goal here is to fully replicate my work from the JupyterHub instance in VS Code to leverage all the IDE tools it has. The notebook works fine, but I don't want to have to work between both VS Code and JupyterLab in Chrome to get all the functionality. Side note: I use the "Show Contextual Help" feature in JupyterLab a lot, since it shows docstrings and info for any given object in the IDE. What's the equivalent of this in VS Code?

|

|

|

|

Falcon2001 posted:Wanted to jump back to this question, as I was in a somewhat similar place, and definitely have the same ADHD coping mechanisms and problems. Here's some notes... This is really fantastic info, thanks so much for sharing. Your point about functional programming makes a lot of sense, and I think it's probably key to some of my problems finding applicable ETL and data engineering patterns. You've already provided way more than enough, but if you or anyone else has some good resources on functional programming for data engineering in Python, that'd be great. Your specific advice also makes a lot of sense. I've always been really bad at breaking things down into chunks, so that seems like the best place to start. OnceIWasAnOstrich posted:Hmm, this is kind of a big ask. Your setup works by connecting to the remote Jupyter kernel over HTTP when you are using a Notebook in VS Code via the JupyterHub extension. You of course can get a remote terminal via Jupyter, Jupyter lab does it, but it does it with (I think) XTerm.js or something similar running on the host communicating over websockets. To make this work over a Jupyter kernel link, you would have to rewrite (or re-implement in the form of an extension) the VS Code terminal to use the Jupyter kernel protocol and possibly add software to your kernel environments to run the terminal session and transfer data over websockets or some other protocol. The extension just isn't set up to do that, as far as I know. Thanks for explaining. Yeah, reworking the extension the way you describe sounds far too onerous. Though it does leave me scratching my head that no one else has tackled this, because if you can only connect a remote notebook but not a terminal/console, you may as well not connect to a remote Jupyter server through VS Code to begin with.
quote:You can, of course also do remote terminals (and editors, and extensions, and everything else), but those are implemented over SSH. To make that work you would not really be using JupyterHub and would instead connect to the host running the Jupyter kernels over SSH and then access both the terminal and notebook kernels over that tunnel. So this kind of setup is what I was thinking was more doable, but I don't know what SSH access I have, so I'll see what I can do. It doesn't help that my VS Code is on a Windows machine, because our workstations aren't connected to the Microsoft Store for me to install a WSL Linux distro. Adding PuTTY to the mix isn't appealing. quote:Individual extensions for various languages provide this via language servers. This works in the normal editor interface, but with notebooks I imagine it would be the responsibility of the notebook extension. I don't use that, perhaps there is a feature in settings you could enable? That feature might just not be available in notebooks. Thanks, that makes sense. I'll dig into the notebook extension settings and see if I can find anything about a language server -- quote:Another thing I know is possible is to run a web version of VS Code (or code-server) remotely and access it via the Jupyter proxies, something like https://github.com/betatim/vscode-binder or https://github.com/victor-moreno/jupyterhub-deploy-docker-VM/tree/master/singleuser/srv/jupyter_codeserver_proxy. You've still got a separate interface, but at least they're both running on the same host in the same browser.

|

|

|

|

Thanks for that. I watched a YT series that went over the standard FP functions like filter, map and reduce, and it really clicked for me how useful this could be in ETL or data work.
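Here's the sort of thing that clicked, as a minimal sketch with made-up records: `filter` drops rows with no sales value, `map` converts the text to integers, and `functools.reduce` folds the rows into per-region totals. The field names and data are assumptions for illustration only.

```python
from functools import reduce

# Made-up input rows, as they might come out of a CSV reader.
records = [
    {"region": "east", "sales": "120"},
    {"region": "west", "sales": ""},
    {"region": "east", "sales": "80"},
    {"region": "west", "sales": "45"},
]

def has_sales(row):
    """Keep only rows that actually have a sales value."""
    return row["sales"] != ""

def to_int(row):
    """Return a copy of the row with sales converted to an integer."""
    return {**row, "sales": int(row["sales"])}

def add_to_totals(totals, row):
    """Fold one row into a new running per-region totals dict."""
    region = row["region"]
    return {**totals, region: totals.get(region, 0) + row["sales"]}

totals = reduce(add_to_totals, map(to_int, filter(has_sales, records)), {})
print(totals)
```

None of the steps mutates its input, which is a big part of why each one is easy to reason about and test on its own.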

|

|

|

|

Yeah if you're doing science / analysis stuff and already familiar with it I've yet to hear about any killer feature to justify moving from conda to something dev oriented like poetry or whatever.

|

|

|

|

Any pandas experts here? I've got an ad hoc spreadsheet I need to clean for ETL (why this is my job as a data analyst, I don't know), but I'm having trouble even articulating the problem well enough to leverage ChatGPT or Google. The spreadsheet (.XLSX file) is structured like this code: Reading it in as a data frame with defaults has pandas assigning the "category" column as the index, but despite reading docs and googling I still can't really wrap my head around multi-indexes. There are lots of tutorials on creating them or analysing them, but I can't find anything for the reverse (taking a multi-level index and transforming it into a repeating column value). The target model should be something like this: code: edit: I should specify that we'll likely receive these types of spreadsheets with different labels and values, so I'd like to write it to be somewhat parameterized and reusable, which is why I'm not just hacking through this for a one-time ETL. Oysters Autobio fucked around with this message at 02:32 on Feb 21, 2024 |

|

|

|

WHERE MY HAT IS AT posted:I don't think I'd call myself an "expert" so maybe someone will weigh in with a better solution, but using ffill seems like it would work: Awesome! This is perfect, exactly what I was looking for. This sort of mask + ffill method would probably work in a lot of use cases for these kinds of tables.
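For anyone landing here later, this is my own reconstruction of the mask + ffill idea, not the code from the quoted post. The layout is an assumption: category names sit in the same column as the item labels, on rows where the value column is empty. The column names (`label`, `value`, `category`, `item`) are placeholders.

```python
import pandas as pd

# Assumed layout: category header rows have no value, item rows do.
raw = pd.DataFrame(
    {
        "label": ["Fruit", "apple", "banana", "Vegetable", "carrot"],
        "value": [None, 3, 5, None, 7],
    }
)

# Mask: True on the rows that are really category headers.
is_header = raw["value"].isna()

# Keep the label only on header rows, then forward-fill it down to the items.
with_category = raw.assign(category=raw["label"].where(is_header).ffill())

# Drop the header rows and tidy up the column names.
tidy = (
    with_category.loc[~is_header, ["category", "label", "value"]]
    .rename(columns={"label": "item"})
    .reset_index(drop=True)
)
print(tidy)
```

To reuse this on spreadsheets with different labels, the column names and the rule that builds the mask are the two things I'd turn into parameters.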

|

|

|

|

Vulture Culture posted:I agree that it should usually be a separate pipeline step, but you should feel fine doing whatever is idiomatically familiar to the people who need to maintain it while being reasonably performant Thanks for all the examples. I'll admit that as a data analyst, I'm more comfortable with pandas than with Python itself. To your point about idiomatic, not gonna lie, the pandas-based masking and then forward filling method made much more sense to me than the pure Python method. But since my question was geared towards ETL, I can understand why people might recommend pure Python methods for maintainability's sake. I am still very much learning. Though to be honest I've found myself drawn towards functional approaches (like using map(), apply() etc). Sometimes people's really deeply nested loops are hard for me to understand, because I'm really new to imperative programming and mainly familiar with SQL and such as a data analyst. Absolutely love me a nice CASE...WHEN...THEN syntax.

|

|

|

|

Notebooks live in an entirely different use of coding. There's using code to make a tool, a package or something reusable: that's creating a system. Then there's using code to analyze data, explore, test, or share lessons and learning: that's creating a product, something to be consumed once by any number of people. But after recently working in a data platform designed by data scientists who were inspired by Netflix circa 2015, I think using notebooks for everything is hell. Every ETL job is mashed into a notebook. Parameterized notebooks that generate other notebooks. Absolutely zero tests. If I'm writing an analytical report, a notebook is fantastic because I can weave in visualizations, text and images. Or often if I'm testing and building a number of functions, it's nice to call them in the REPL on demand and debug things that way. But once that's finished, it goes into a Python file. VS Code has a great extension that can convert .ipynb to a .py file. For straight code, though, a notebook is a mess and frankly I find it slows you down. With a plain .py file I just navigate up and down, comment as I need, etc. Finally, once you've tried a full-featured IDE like VS Code, you'll never want to go back to JupyterLab. The tab completion is way snappier, you've got great variable viewers, and you can leverage a big ecosystem of extensions. I'm a complete amateur at Python and am only a data analyst, but I'm so glad I moved to VS Code.

|

|

|

|

Fairly new to Python here so excuse the naive question: are you trying to extract these strings entirely only using regex and if so, why? By no means am I judging you on this, god knows we all have to do hacky poo poo all the time. Seems like trying to do this in pure regex is just a massive headache given the complexity of the pattern you're trying to hit. Personally, I'd do what someone else mentioned above and first filter for your keys (ie the strings you have), then extract the timestamps. Only benefit I could see is if the keys themselves are complex strings too where you're going to have to create something complex in regex to run searches on them too. If you just have the keys already then sounds far easier to go with that option. Alternatively, what's the format of the files? Log files? JSON? Are these in such a non standard format you couldn't leverage an existing python wrapper package? edit: sorry for the blahblahblah, I know you came here just looking for a quick regex answer about conditionals but...this is the python thread so, kind of expected to get pythonish answers. Oysters Autobio fucked around with this message at 15:31 on Mar 5, 2024 |

|

|

|

Zoracle Zed posted:Anyone got opinions about API docs generation? I kinda hate sphinx for being insanely over-configurable and writing rst feels like unnecessary friction. Sphinx with numpydoc is manageable but I still feel like there's got to be something better. FastAPI's Swagger page generation is pretty cool, but to my knowledge it's not a drop-in or anything (i.e. you have to build the API with it from the beginning). I've been exploring Python docs stuff and have found that MkDocs and Material for MkDocs have a big ecosystem of plugins and the like, all built on Markdown instead of rst. They might have some API docs generation options.

|

|

|

|

Just export to HTML unless they're rigidly demanding a PDF. I think nbconvert can also embed assets (images etc.) in the HTML itself, so a single file works basically the same as a PDF. (I know this is an unwinnable battle and yet another example to flag when silicon valley ballhuggers go on and on about how the free market only creates the best standards...)

|

|

|

|

Yeah sorry, don't take my advice as a reason to buck against whatever stupid arbitrary thing you're asked to do. nbconvert is exactly what you're looking for to export to PDF or other formats.

|

|

|

|

|


|

Just getting into learning flask and my only real question is how people generally start on their HTML templates. Are folks just handwriting these? Or am I missing something here with flask? Basically I'm looking for any good resources out there for HTML templates to use for scaffolding your initial templates. Tried searching for HTML templates but generally could only find paid products for entire websites, whereas I just want sort of like an HTML component library that has existing HTML. i.e. here's a generic dashboard page html, here's a web form, here's a basic website with top navbar etc. Bonus points if it already has Jinja too but even if these were just plain HTML it would be awesome. Oysters Autobio fucked around with this message at 00:13 on Mar 20, 2024 |

|

|