|

NewFatMike posted:If Raven Ridge can deliver 1080p60+Freesync at medium settings for current AAA titles, I'll be happy as a clam. Medium now is a lot nicer than it used to be, and that'll probably be really good for getting more folks on PC. What would that run, like $300 with a monitor and an HDD? Pitcairn performance would be nice, but even with DDR4 and TBR I don't think they'll get rid of the memory bottleneck entirely.

|

|

|

|

|

| # ¿ May 2, 2024 07:39 |

|

Why the hell are you even talking about the 1050? Leaked specs say it's pretty much an RX460*0.6, and if the arch delivers, we might even see it come close to that and not get choked to poo poo by memory bandwidth.

|

|

|

|

Truga posted:Yeah, does this thing feature HBM? If not, then it's poo poo. Nope but New Zealand can eat me posted:Is the new geometry pipeline/draw stream binning rasterizer stuff only for Vega or does that also apply to the APUs? Raven Ridge is using Vega tech so all the features should be included. That along with DDR4 *should* be enough.

|

|

|

|

PC LOAD LETTER posted:Geez if they can just put a "lousy" 1GB of HBM1 on their APU's it'd be a huge win for iGPU performance. It could probably compete fairly well with some of the mid-ish range dGPU's at 1080p or less resolution with that. Seems like that isn't gonna happen though. At least not for 2017. As much as I'd like to see a Zen quad with an RX480-level GPU on die paired with a stack of HBM, it doesn't make any sense for Raven Ridge when the memory controller can push 30-40 GB/s on DDR4 at average clocks. The GPU part is absolutely tiny. Arzachel fucked around with this message at 12:00 on May 31, 2017 |
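For a rough sense of where the 30-40 GB/s figure comes from, here is a back-of-the-envelope sketch of theoretical peak DDR4 bandwidth. It assumes a dual-channel setup with 64-bit channels; the function name and the list of speeds are picked for illustration only, and real-world throughput lands below these peaks.

```python
def ddr4_peak_bandwidth_gbs(transfer_rate_mts, channels=2, bus_width_bits=64):
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes).

    transfer_rate_mts: the DDR4 speed grade in MT/s (e.g. 2400 for DDR4-2400).
    channels / bus_width_bits: assumed dual-channel, 64 bits per channel.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


# Illustrative speed grades, not measurements of any particular system.
for speed in (2133, 2400, 2666, 3200):
    print(f"DDR4-{speed}: {ddr4_peak_bandwidth_gbs(speed):.1f} GB/s")
```

Dual-channel DDR4-2133 to DDR4-2400 works out to roughly 34-38 GB/s on paper, which is the ballpark quoted above.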

|

|

|

SwissArmyDruid posted:If there is a criticism that I have continually levelled at AMD, it is that they appear to be hell-bent on leaving as much money on the table as possible. When you're charged for not making chips thanks to the WSA, it kind of skews the pricing curve.

|

|

|

|

NewFatMike posted:This is all coming from my use case, but I'm tired of my MSI laptop. $1600 two years ago and I got a good i7-5700HQ, GTX 970M, but the body is all plastic and aluminum sheeting over it. If I can side grade because performance is exactly what I need to 1080p60APU/Freesync/metal bodied/good battery life thing sign me up. Raven ridge will essentially be the same CPU I've got now, I'm just hoping the GPU is, too. Raven Ridge uses a cut-down RX460. It's not going to compete with semi-recent 100W dGPUs.

|

|

|

|

Measly Twerp posted:So in fact a top mount rad is much worse, yikes. (Unless you intake from the top which would probably be similar to a front intake.) Hot air rises up, so front mounted is still slightly better.

|

|

|

|

BurritoJustice posted:HBM is super high bus width super low clock, it's even further away from viable CPU use than GDDR which is another order of magnitude from DDR4. Doesn't HBM actually have less latency than GDDR5 since the stacks are on-package?

|

|

|

|

SwissArmyDruid posted:...no, no, I call bullshit. There is *no* goddamn way they are pricing TR _THAT_ loving low! Goddamnit, AMD, what happened to not leaving money on the table anymore!? You don't have to do that in order to make Intel hurt anymore, your product is- A wafer unused is a wafer you still have to pay GF for, everything must go!  Besides, it makes sense to get everyone on the platform if they plan to provide an upgrade path.

|

|

|

|

NewFatMike posted:Still great for SFF stuff, hopefully the ****HQ or ****HK equivalent models for mobile will be 80-90% of the desktop models combined with Freesync 2 panels. GF's process nodes seem to be way more efficient at low voltage and clocks and I don't think Vega will change that one bit, but raw shader power has never been the issue. DDR4 gets us halfway to not choking the iGPU to death, but the hype around Vega for me was the TBR/DSBR implementation, which seems pretty underwhelming right now. It might be down to the HBM2 memory controller severely underperforming and I'd like to see some testing done on this, but I don't think AMD can properly feed 11 CUs at 800 MHz. Edit: now that I've looked at it more, Vega has less effective memory bandwidth than Fiji while still outperforming it by a decent margin. That bodes well for Raven Ridge even if Vega is pretty poo poo. Arzachel fucked around with this message at 07:58 on Aug 15, 2017 |
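As a sanity check on the Fiji vs. Vega comparison, here is a small sketch of the theoretical peak numbers. The bus widths and per-pin data rates are the commonly published specs for the Fury X and Vega 64 and are an assumption here, not something stated in the thread; measured "effective" bandwidth will differ from these paper figures.

```python
def hbm_peak_bandwidth_gbs(bus_width_bits, pin_rate_gbps):
    """Theoretical peak bandwidth in GB/s: bus width times per-pin rate, in bytes."""
    return bus_width_bits * pin_rate_gbps / 8


# Assumed published specs (not taken from the thread):
fiji = hbm_peak_bandwidth_gbs(4096, 1.0)    # Fury X: 4 HBM1 stacks, 1024 bits each
vega = hbm_peak_bandwidth_gbs(2048, 1.89)   # Vega 64: 2 HBM2 stacks, 1024 bits each

print(f"Fiji: {fiji:.1f} GB/s, Vega: {vega:.1f} GB/s, ratio: {vega / fiji:.3f}")
```

On paper Vega ends up a bit below Fiji despite the faster memory, because it has half the bus width.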

|

|

|

From Anandtech's Vega review:quote:On a related note, the Infinity Fabric on Vega 10 runs on its own clock domain. It's tied to neither the GPU clock domain nor the memory clock domain.

|

|

|

|

Munkeymon posted:Is Zen2 the same as Zen+ and are we still talking about AM4 or is this AM4+? Originally the successor to Zen was called Zen+ in AMD's roadmaps. They later changed it to Zen2 but some people use that and Zen+ interchangeably. But now there is also a Zen refresh coming which is sometimes referred to as Zen+. Both should be compatible with AM4.

|

|

|

|

Sormus posted:Wait, instead of ticktock AMD has up-rebrand cycle? :V Ryzen refresh is supposed to be a straight respin with no architectural changes. Think Devil's Canyon except with actually significant clockspeed increases, since we're already seeing 200-300 MHz from minor steppings between Ryzen and Threadripper/Epyc. Arzachel fucked around with this message at 20:43 on Sep 5, 2017 |

|

|

|

Risky Bisquick posted:12NM Zen refresh coming in 2018 Q1 ...That's way earlier than expected considering that GF announced 12LP volume production starting sometime 1H2018.

|

|

|

|

Lolcano Eruption posted:32 Gigs of B-Die RAM that actually runs at 3200 is $100 more than non B-Die. I Bought 32 gigs DDR4-3200 at $250, they were SK Hynix and I can't do over 2666. 32 Gigs of B-Die was, at the time, $350. You don't need B-die sticks to hit 3000 or even 3200 with the newer AGESA revisions unless your motherboard is particularly finicky, but it's still not entirely seamless like on Intel.

|

|

|

|

Nvidia isn't a viable choice for consoles unless Sony and Microsoft decide to swap to ARM CPUs for whatever reason.

|

|

|

|

Seamonster posted:Will there be new chipsets? Yeah, they announced 400 series chipsets alongside the Ryzen refresh.

|

|

|

|

Darth Llama posted:I mostly just lurk this thread, but the discussions of how they make/produce these chips is pretty awesome once I look up all the terms over my head. I have a question though: what happens (errors?) in manufacturing that would keep a chip from using hyperthreading? It's just market segmentation, there are no manufacturing defects that would break SMT specifically.

|

|

|

|

Paul MaudDib posted:AMD pulling some NVIDIA-level naming bullshit right there. Ryzen2 the series, using the Ryzen+ stepping, to be followed by the Ryzen2 uarch... AMD uses Ryzen the same way Intel uses Core; Ryzen 2 is only used on lovely clickbait techsites. Neither Ryzen+ nor Zen+ exist anymore but people still stick to that name for some reason, and the architecture is named Zen(2). Those specs are obvious fakes that have been circulating for months.

|

|

|

|

SwissArmyDruid posted:Yeah, DSBR is already enabled. I think it's the "Next-Generation Geometry" path that you're looking for. Primitive shaders are separate from the tile-based rendering implementation AMD uses, as far as I know. There's no reason to think it's not working anyways, Vega has way more shading power than Fiji with almost the same effective bandwidth.

|

|

|

|

Paul MaudDib posted:Zen is basically Broadwell-level IPC, which is only a few percent behind Skylake/Kaby Lake/Coffee Lake. Intel's lead is pretty much down to clocks and that largely comes down to GF 14nm being a low-power-optimized process that sucks rear end above 4 GHz. I think it's also down to Summit Ridge being AMD's first shot at both the architecture and the process, the stepping used for Threadripper dies moves the scaling wall 200-300 mhz up.
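To make the "IPC is close, the gap is clocks" argument concrete, here is a rough first-order sketch that treats single-thread performance as IPC times clock. The 0.95 IPC ratio and the 4.0 vs. 5.0 GHz clocks are hypothetical placeholders chosen for illustration, not benchmark results, and the model ignores memory latency, boost behaviour, and workload differences.

```python
def relative_performance(ipc_ratio, clock_ghz, baseline_clock_ghz):
    """First-order estimate: performance scales with IPC times clock speed.

    ipc_ratio: this chip's IPC divided by the baseline chip's IPC.
    Returns performance relative to the baseline (1.0 means equal).
    """
    return ipc_ratio * clock_ghz / baseline_clock_ghz


# Hypothetical inputs: "a few percent behind" on IPC, 4.0 GHz vs. a 5.0 GHz baseline.
at_4ghz = relative_performance(ipc_ratio=0.95, clock_ghz=4.0, baseline_clock_ghz=5.0)
# Same IPC deficit, but with the clock wall moved up by 300 MHz.
at_4_3ghz = relative_performance(ipc_ratio=0.95, clock_ghz=4.3, baseline_clock_ghz=5.0)

print(f"4.0 GHz: {at_4ghz:.2f}x, 4.3 GHz: {at_4_3ghz:.2f}x of baseline")
```

Under these assumed numbers most of the deficit comes from the clock term rather than the IPC term, which is the point being made above.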

|

|

|

|

Paul MaudDib posted:Threadripper is the same stepping as Ryzen. Epyc is on the newer stepping. Huh, you're right. No idea why TR overclocks a tad better then, besides binning.

|

|

|

|

Twerk from Home posted:I'm skeptical that price differences among sub-$200 CPUs really matter, especially in the desktop space. The rest of the computer, especially RAM, is really drat expensive too. I'm all for more power to more people, but the CPU doesn't really dictate the price of the whole machine. Shaving 50 bucks off the CPU might let you go from a 1050ti to a 1060, which is a pretty big deal if you're on a tight budget.

|

|

|

|

PerrineClostermann posted:Combined thread when? Roughly the same as Haswell, so 20-25% faster than Sandy Bridge clock for clock.

|

|

|

|

Jago posted:Has there been any actual benchmarks or at least informed discussion on the relative performance between the Intel w/Radeon and HBM2 and EMIB vs the AMD 2400G w/ Vega? There's not much point comparing those two because they're made to serve completely different market segments. That Intel chip is going to cost three times as much.

|

|

|

|

FaustianQ posted:I feel like this pushes the min clock to be expected from Zen+ to 4.3Ghz, since exactly how would a 2800X differentiate itself from a 2700X then? +200mhz on base/boost seems about right, XFR to 4.4Ghz and since it's the top non-TR die, 4.5Ghz all core overclock. Never trust a semiconductor fab, I'll be surprised if Zen2 will be released before June 2019. Risky Bisquick posted:They did, Korean leaks show a huge improvement in latency Speaking of: https://videocardz.com/75185/first-benchmarks-of-ryzen-7-2000-cpu-have-been-leaked Arzachel fucked around with this message at 19:01 on Mar 6, 2018 |

|

|

|

Shy posted:Hey let's say I'll get my hands on the cheapest April model, which mb chip I'm supposed to look for? It's confusing. Probably B450, cheaper than X470 and supports Pinnacle Ridge out of the box unlike X370/B350/A320.

|

|

|

|

Shy posted:Cool, thanks. B450 is released simultaneously with the CPUs, right? It should be but there's no hard release date given and most of the info we have is from leaked slides.

|

|

|

|

Combat Pretzel posted:Those latency improvements don't affect the IF, right?  Sisoftware accidentally put their review up ahead of time. Edit: looks like the CPUs were tested on stock clocks with turbo enabled and with DDR4 at 2400. Arzachel fucked around with this message at 17:29 on Mar 17, 2018 |

|

|

|

Most likely they just didn't have any Coffee Lake chips on hand to run the test suite in time.

|

|

|

|

I kind of doubt that it's the power draw, people usually aren't too fussed if a desktop CPU pulls a bit more. My guess would be that they're running into the same clockspeed wall again except it's 300mhz higher.

|

|

|

|

KingEup posted:I have some questions about the AMD Ryzen 5 2400G and because my use case is unusual I can't find much information anywhere else. Prebuilts with Intel's Hades Canyon (i7-8809G/8705G) are coming out soon-ish and will be a good deal more powerful and significantly more expensive. It doesn't seem like the SoC itself will be available separately any time soon either. I don't think 12nm/7nm AMD APUs have been announced. You're never going to be CPU limited even at a low resolution outside of some extreme corner cases. Dual channel is pretty much mandatory and memory clocks are also a huge deal; I'd aim for at least 3000 with decent timings.

|

|

|

|

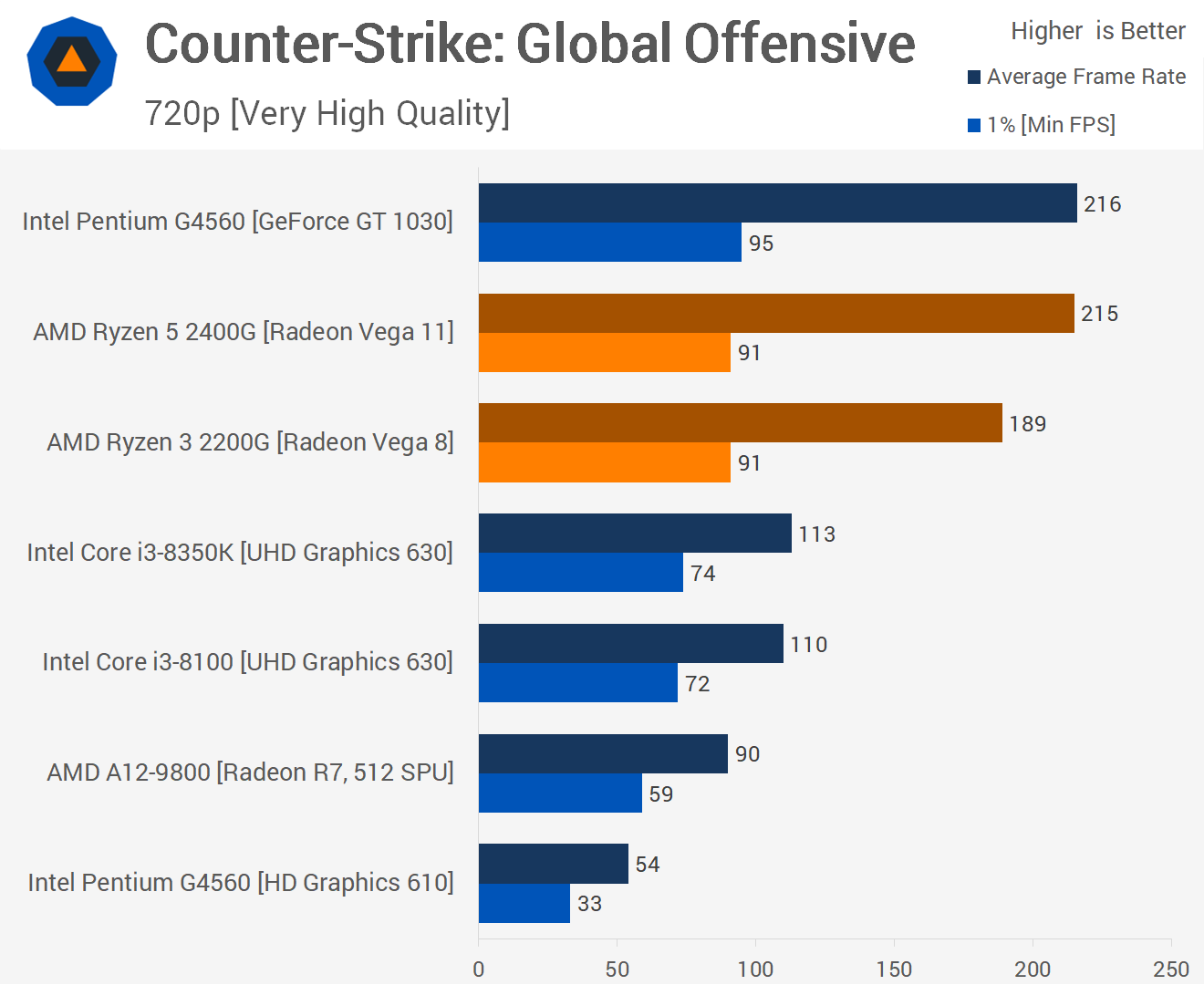

KingEup posted:Can anyone shed some light on why the min FPS at 720p is worse than the min FPS at 1080p? I can't find what settings they used or how they performed the benchmark (some other article mentions them using the tutorial) so I'm going to guess it's just variance in their bench method and nobody sanity checked the results.

|

|

|

|

eames posted:If AMD APUs work like Intel iGPUs (CPU/GPU on one die and dynamically sharing a limited TDP) then I can see some edge cases where 720p could cause lower minimums than 1080p. I could buy this but the Pentium + 1030 also has lower minimums on 720p for some reason.

|

|

|

|

fishmech posted:Ah yes, that thing that was introduced in Windows 10 and totally not 9 years earlier in Vista. I don't think it forced vsync on all windowed applications back then, did it?

|

|

|

|

SwissArmyDruid posted:Ryzen 2 hits 5.8 GHz on LN2. https://www.theinquirer.net/inquirer/news/3030327/amds-ryzen-2-processors-pushed-beyond-5ghz-by-overclocking Didn't someone hit 5.8 with a 1800X on LN2 pretty earl... oh, it's all cores.

|

|

|

|

sauer kraut posted:I wouldn't dance on Intels grave yet until we actually see a finished 7nm high power GloFo product. I'm more than a little skeptical of GloFo's 7nm claims, but at least AMD isn't locked into them anymore and can dual source chips from TSMC.

|

|

|

|

SwissArmyDruid posted:I worded that less-clearly than I should have. Yes, it's a GCN derivative, but what's beyond it is not. The sooner they get Navi out, the sooner they can get to whatever the hell it is that comes after. I mean, that's when the Ryzen money should start bringing results, but I don't think GCN was/is anywhere near their biggest problem.

|

|

|

|

FaustianQ posted:Also Also, people have been pushing R7 2700Xs to about 4.45-4.5Ghz with a very slight bump to BCLK (can't go above 105BCLK or system loses its poo poo). So it seems Pinnacle Ridge isn't limited by the process but maybe through design? It's too bad AMD couldn't have found a way to make the BCLK async, multiplier OC is still better but an async BCLK might have enabled even higher clocks, maybe 4.6 to 4.7Ghz, or a really cold 4.4Ghz. Not sure if it's limited to X470, but boards with an external clock gen should have an async mode. The Stilt posted:Pinnacle Ridge CPUs also support multiple reference clock inputs. Motherboards which support the feature will allow "Synchronous" (default) and "Asynchronous" operation. In synchronous-mode the CPU has a single reference clock input, just like Summit Ridge did. In this configuration increasing the BCLK frequency will increase CPU, MEMCLK and PCI-E frequencies.
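A minimal sketch of what synchronous mode implies, assuming everything hangs off one reference clock so a BCLK bump scales the CPU, MEMCLK and PCI-E clocks together. The function name, the 42.5x CPU multiplier, the 16x memory multiplier (DDR4-3200 at stock) and the 100 MHz nominal reference are hypothetical values picked for illustration, not settings taken from any specific board.

```python
def synchronous_clocks(bclk_mhz, cpu_multiplier, mem_multiplier=16.0,
                       nominal_bclk_mhz=100.0, pcie_ref_mhz=100.0):
    """Derived clocks when a single reference clock feeds everything.

    All multipliers and nominal values are illustrative assumptions.
    """
    scale = bclk_mhz / nominal_bclk_mhz
    return {
        "cpu_mhz": bclk_mhz * cpu_multiplier,      # core clock
        "memclk_mhz": bclk_mhz * mem_multiplier,   # DDR4 data rate is 2x this
        "pcie_ref_mhz": pcie_ref_mhz * scale,      # PCI-E reference drifts too
    }


stock = synchronous_clocks(100.0, 42.5)
bumped = synchronous_clocks(105.0, 42.5)
print(stock)
print(bumped)
```

With these assumed multipliers, a 105 MHz BCLK lands the CPU around 4.46 GHz but also drags memory and the PCI-E reference up by 5%, which is why an asynchronous mode that decouples the CPU clock is attractive.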

|

|

|

|

|


|

eames posted:I do wonder why all 2000 series CPUs seem to hit a wall at 4.2 Ghz, either the process is super consistent or they're binning very aggressively for 2800X/TR2. Hitting the wall at around the same mark even after switching to a HP process points towards a bottleneck somewhere in the architecture, I think.

|

|

|