|

Arzachel posted: I'm guessing that means no curve optimizer undervolting, right?

That's the consensus from leakers, yeah. Basically anything touching the clocks or voltages of the cores.

|

|

|

|

|

| # ¿ May 22, 2024 12:59 |

|

Has the PS4 emulator targeted AVX-512 yet? The best CPU for RPCS3 is an early 12900K with E-cores disabled and AVX-512 enabled. It's not just overkill either, there are games that can only hit 60fps with AVX-512.

|

|

|

|

gradenko_2000 posted: they almost certainly will use it for their server parts, as they already bragged about how that was going to be their next big thing

Lisa Su originally showed a 5900X3D, but it didn't end up making sense as the 3D cache SKUs lose in general application performance and the 5900X/5950X are more productivity SKUs than gaming SKUs. You can see in TPU's testing that the 5800X3D consistently loses by ~5% to the 5800X in almost every test before they get to games, due to the clock deficit.

|

|

|

|

Klyith posted: Spec might say one thing, but people have definitely run systems with BCLK overclocks in the +10% range and had them stable.

Intel has basically all the IO buses decoupled from BCLK now, so you can go hog wild and only really hit the CPU. AMD hasn't yet.

|

|

|

|

HalloKitty posted: To be fair I (and surely many others) took notice of the potential of huge caches with regards to gaming performance back at the time of the 5775C, where disabling the integrated graphics to make full use of the 128 MB of eDRAM really smoothed out minimum frame times. That was effectively L4 cache and not hugely fast, but it was still notable. It was a path I'd hoped Intel would take. They didn't, AMD did, and they're seeing the huge perf gains

The rumour is that the main P-core upgrade with Raptor Lake is 1.5x the L2 and L3 cache, so Intel is catching on a little bit. EMIB seems to me like a pretty fantastic technology, so I imagine if they're working on a cache die that could be great.

|

|

|

|

Arzachel posted: 170W TDP is the maximum the socket is rated for, they haven't said anything about specific SKUs

Robert said on Reddit that Ryzen 7000 will launch with 170W TDP SKUs, it's not just the socket limit. We do know that the 5.5GHz gaming benchmark was running below 170W, however.

|

|

|

|

FuturePastNow posted: I have a Ryzen question. Right now I have two sticks of 3200 RAM and two empty slots, the logical way to add more RAM would be two more identical DIMMs but for years I've seen people complain about Ryzens not working right with four DIMMs. Is this still a problem with 5000-series CPUs (I have a 5800X3D)? I'm not exactly pushing the limits of memory speed here.

The biggest issue with four sticks for Ryzen is motherboard topology. The memory controller, at least on Zen3, can handle 4 ranks (which you'd have with 4 8GB sticks) decently well, but there are a few different ways of connecting 4 slots to two memory channels and only one of them is really conducive to high performance with four DIMMs.

The most common motherboard layout for 2DPC (DIMMs per channel) is daisy chain, where the traces for one channel are first routed to one slot, and then split off to a second slot. This is why most motherboards have you put the DIMMs in the two furthest slots: they are the end of each chain, and filling them prevents parasitics and EMI. The upside of this method is that it is the highest performance with two DIMMs. The downside is that with four DIMMs each channel has the sticks at different distances from the CPU, which causes issues at higher frequencies.

The second most common 2DPC topology is T-topology. This was popular during the X370/X470/Z390 eras, particularly on high end boards where users were most likely to install 4x 8GB sticks. With T-topology, the trace for a channel goes to a point equidistant to the two slots for that channel, and then splits to each in a T shape. The obvious downside of this is that you have an open end on each channel if you only install 2 DIMMs, so in terms of EMI it's like installing your sticks in the wrong slots on a daisy chain board. The upside is that 4 DIMM performance is very competitive with 2 DIMMs in a daisy chain board, and it moves the bottleneck onto the memory controller's ability to handle more ranks.

My motherboard, Z390 Aorus Xtreme Waterforce, is T-topology. It struggles past 3200 with only 2 sticks, but I can push 4400 loosely or 4000c14 with four sticks of good RAM.

Since 2019, 16GB and 32GB sticks have become more common so it's no longer necessary for most users to install 4 DIMMs. For this reason, T-topology has fallen massively out of favour. I don't think a single Z690 or recent X570 board is T-topology. If you have a slightly older board, you can usually tell by checking the QVL and seeing if the highest speeds are for 4 sticks or 2 sticks.

Anyway, sorry for the long post, what is your motherboard? You're not likely to have issues if you're only sticking to 3200. If you do, the benefit of rank interleaving would likely equalise any performance loss if you had to drop to 2933.

|

|

|

|

Combat Pretzel posted: Hmm, I hope people do memory benchmarks of DDR5-4800 vs DDR5-5600+ modules ASAP Zen4 is available. I'm on the look out for ECC UDIMMs, and they're so far all DDR5-4800. Hope that'll change quickly, because something something HPC high bandwidth.

ECC modules at JEDEC 5600 speeds (CL46) were announced a few months ago, and are already available if you're willing to grey-import from China in the same way you get Hynix A-Die (the second gen stuff that can do 7500-8000MT/s at daily voltages). It'll probably have wider availability by the end of the year.

E: you could also try and find some ECC 4800 that has Hynix chips and just run it at 6000, if overclocking isn't off the table. I know it seems counter to using ECC, but it shouldn't be too great an issue if you sufficiently stability test your OC. It's even easier thanks to the error reporting.

BurritoJustice fucked around with this message at 06:36 on Sep 15, 2022

|

|

|

HUB is also the only reviewer I've seen that's found the 7600X, or any Zen4 CPU, to be ahead of the 5800X3D on average in gaming. I guess it's a test suite thing.

|

|

|

|

Combat Pretzel posted: I'm regularly looking around on the web, whether there's finally 5600+ DDR5 ECC modules for this dumb AMD processor, now all this gamer RAM is advertising the mandatory on-die ECC as super awesome feature and consequently loving up web search.

Lol, I had this problem when I was trying to find ECC RAM to reply to you last. I genuinely just gave up on finding a seller because I don't know how to specify "actually ECC" and not the basic bitch one every kit has. I'd probably try to find the product code for specifically the Hynix A-Die 5600 ECC kits, and look at the photos to see if they have one extra memory chip (for proper ECC). Maybe ask Dell's server division, they were the OG place to go to find bare Hynix M-die sticks.

|

|

|

|

BlankSystemDaemon posted: The image here doesn't inspire confidence:

They're not the ECC kits in that review, but gently caress A-Die is insane. They can't even go above 1.435V with their motherboard due to it lacking a BIOS update for the PMIC, but that was enough to run the 2x32GB kit at 7300c32! Shows that the limit was more the dies than the motherboards or CPU controller, you'd previously be hard pressed to hit 6000MT/s with a 2x32GB kit due to the quad ranks.

As an aside, 1.435V is actually not that high. It looks scary compared to the 1.1V stock, but 1.435V is in the JEDEC range for the PMIC and Hynix's own data sheet lists an operating voltage of up to 1.5V as safe. The hardcore folks with aftermarket cooling are running 1.65V, and that's the range that exceeds spec.

|

|

|

|

Khorne posted: They used significantly worse memory for the 5800x. And they listed only one stick on the page showing the setup for the 5800x but 2 for the 5800x3d. Although I suspect they used two for both.

Their 5800X is one of the very rare samples that can run 2000MHz on the infinity fabric (4000MHz RAM speed), which they can't replicate for the 5800X3D. So they went with very tight timings at 3600MHz instead. The 5800X3D build is a new standalone testbed they're using for reviews going forward, whereas the 5800X data is what they already recorded for their 4090 review. I don't really blame them, W1zzard does insanely comprehensive coverage so he has to do thousands of benchmarks weekly. The differences should be minuscule in this case.

Dr. Video Games 0031 posted: Some reviews tested Raptor Lake with DDR5-6800 while Zen 4 was tested with 6000, and TechPowerUp even tested RL with DDR5-7400 lmao.

DDR5-7400 was only used in the standalone, high speed RAM benchmarks, all labelled "i9-13900K (DDR5-7400)" in the bar graphs to reduce confusion. All the other tests that don't specify the RAM speed, and all the data included in other reviews as a comparison, were done with the same 6000C36 that they tested Zen4 with. It was definitely a mistake to include the DDR5-7400 in the system specs next to the DDR5-6000, without a line clarifying it wasn't used for the general testing. Very confusing table.

To your last point, 7000MHz+ is going to come down in a big way. Every single Hynix A-Die DIMM produced can run, at a minimum, 7200C36 and they're Hynix's new mass produced die. It's only starting to trickle into the consumer market in the ridiculously overpriced 7600MHz kits, which are being priced relative to the super binned 6800MHz stuff we had before A-Die, but the price can't hold up long once general supply hits. If you don't care for needing an XMP profile, you can buy 32GB of A-Die for $200 from China in the form of green-PCB server RAM.

|

|

|

|

movax posted: Should be 100% fine; don’t even have to worry about BIOS updates (in the sense that all mobos will have USB-based flash, IIRC).

USB flashback is integrated into the B650 chipset (and X670 is just two of them), so motherboard makers no longer need to add a separate IC with the flashing logic. But it's not mandated that it's supported, so not all budget boards add the required button and dedicated USB port.

|

|

|

|

Cygni posted:

Word is, the 3D VCache CCD can only run up to 5GHz. So the 7950X3D is one VCache CCD, and one regular CCD. So the 5.7GHz clocks are on the regular cores, with the VCache cores running up to 5GHz. It'll be a weird asymmetric design, basically AMD's first foray into big.LITTLE. Except instead of "fast cores, slow cores" it'll be "faster for cache sensitive workloads, faster for cache insensitive workloads".

|

|

|

|

I think I might go crazy and buy a 7950X3D. I do more multicore stuff these days, and even my 12700H laptop embarrasses my 9900K. Plus I mostly play strategy games that love VCache. No idea how to approach the platform upgrade though, going to have to figure out how much to ask for my Z390 Waterforce (weird rear end limited edition waterblock only motherboard) and expensive DDR4.

|

|

|

|

ChickenWing posted: I'd read that there were hardware instructions that had to be done at a software level if you had one of those gen chips (I can't remember the exact nomenclature but it had something to do with security) which is why gen 1 ryzens weren't "officially" supported - they work, but their performance was garbage. Did I misinterpret or was that a weird edge case that's unlikely to come up.

Yeah, Ryzen 1 lacks MBEC (Mode Based Execution Control). Windows 11 by default enables virtualisation based security, and specifically an extension called HVCI (Hypervisor-protected Code Integrity). If you have MBEC, HVCI is only a minor performance cost (single digit percent). If you don't, HVCI slaughters performance (20-40%).

|

|

|

|

Pablo Bluth posted: TR Pro supports 2TB so you can get pretty high in workstation form already. If you're going to spend that much money on dimms, they're going to want you to pay TR Pro levels of money for the CPU and MB.

Over on the Intel side, the Sapphire Rapids workstation Xeons (W-3400) support up to 4TB of DDR5 LRDIMMs. They're roughly price equivalent to TR Pro.

|

|

|

|

BlankSystemDaemon posted: I plan on buying at least 1 stick of DIMM-wide ECC memory, even if I can't run it at the 6000MT/s that's the sweet-spot for Zen4 - just so I have something to validate the rest of the hardware against.

Small correction, the double data rate in "DDR" (which leads to the frequency mismatch) is independent of channel count. If it wasn't, we'd see higher channel count systems marketed with crazy numbers like 19,200MHz. It's actually due to transfers happening on both the rising and falling edge of the clock cycle, which is why the marketed number is more technically referred to as megatransfers per second.

Consumer DDR5 platforms aren't even dual channel anymore, they're quad channel. We just still call them dual channel because A. channels were conventionally 64b (and DDR5 channels are 32b), and B. there are two channels per DIMM so it is transparent to the consumer.
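The relationship between the marketed number, the real clock, and the channel layout can be sketched in a few lines of Python. This is a rough illustration rather than anything from a spec sheet: the function name `ddr_summary` and its parameters are made up for the example, and the bandwidth it returns is the theoretical peak, not what you'd measure.

```python
def ddr_summary(transfer_rate_mts: int, channel_bits: int, channels: int) -> dict:
    """Relate a marketed DDR rating to its I/O clock and peak bandwidth.

    Hypothetical helper for illustration only.
    """
    # Data moves on both the rising and falling clock edge, so the clock is
    # half the marketed transfer rate no matter how many channels there are.
    clock_mhz = transfer_rate_mts / 2
    # Total bus width is what changes with channel count, not the rating.
    bus_bits = channel_bits * channels
    # Peak bandwidth = transfers per second * bytes moved per transfer.
    bandwidth_gbs = transfer_rate_mts * 1e6 * (bus_bits / 8) / 1e9
    return {"clock_mhz": clock_mhz, "bus_bits": bus_bits, "bandwidth_gbs": bandwidth_gbs}


# Two DDR5 DIMMs: each DIMM carries two 32-bit channels, so four channels.
ddr5 = ddr_summary(6000, channel_bits=32, channels=4)
# Two DDR4 DIMMs for comparison: one conventional 64-bit channel each.
ddr4 = ddr_summary(3200, channel_bits=64, channels=2)

print(ddr5)
print(ddr4)
```

Both setups end up with the same 128-bit total bus, which is why "dual channel" still works as shorthand even though the DDR5 system is technically four narrower channels.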

|

|

|

|

Klyith posted: Fuckin' tech jesus, holy crap.

This definitely happens on MSI boards as well, Asus and Gigabyte are just the two most focused on because Steve bought already exploded CPU/motherboard combos from both of those manufacturers. Literally every AM5 board will pump vSOC to high heavens if you turn on a >=6000MHz EXPO profile. There had to have been a failure of communication, or a straight mistake, from AMD for all the partners to make the same mistake.

I don't like that the GN video focuses really hard on the Asus failure, because it makes it seem like it is isolated to Asus. Steve's implication that while other motherboards will kill the CPU, only Asus boards will continue to pump power into them until they melt, just isn't true because there are CPUs that have melted down in this way in boards from every major manufacturer. The first melted CPU was the one that der8auer did a video on a month ago, and that one had the solder drip out from under the heatspreader running in an X670E Aorus Master.

The AGESA changelog leaked by Igor, posted a page ago, lists PROCHOT as being non-functional in prior AGESA revisions. In the GN video at 13:30 he says that PROCHOT should be triggering at this point to prevent the meltdown, so if that's really the cause then it's not an ASUS problem but an AGESA one.

Hopefully the followup video from GN is a bit more directed, he mentioned their lab found a whole host of broken features and protections in AM5 that they will cover separately.

E: in the time it took me to slowly phone-type this I got semi-beaten by a helpful post from DR.VG about AGESA and PROCHOT

|

|

|

|

karoshi posted: How so?

They've only guaranteed support through 2025, and 2026 is coincidentally when they're changing over from AGESA to OpenSIL for their system firmware. So the educated guess is AM6 in 2026.

|

|

|

|

Dr. Video Games 0031 posted: The return of non-pro threadrippers?

They're on SP6, so they'll be based on Zen4 Siena, which is decently cut down from the full-size Threadripper Pro on SP5. It's a similar split to W2400 vs W3400 Xeon-W: you get lower core counts, half the memory channels and less PCIe. Siena also caps out at 225W, which is a bit of a limitation for 64-core CPUs, but I dunno if that is a socket hard cap that will actually limit Threadripper in any meaningful way.

It'll still be cool though. Apparently they're dropping the OC support they added with Zen3 TR Pro and that'll be TR non-Pro exclusive again.

|

|

|

|

ConanTheLibrarian posted: AMD released the 3D v-cache and 128 core epycs today. First party comparison between the 3D v-cache chips and sapphire rapids max (i.e. 64GB of in-package HBM):

These benchmarks are against regular Sapphire Rapids; the Xeon Max CPUs are 94xx, not 84xx. So no HBM.

|

|

|

|

repiv posted: fanboys that are so deranged that they make their preferred company look bad by association are the best

That twitter thread is my favourite thing to come out of hardware enthusiasts in the last year. Lost my poo poo when the lead dev of the renderer that @AMDGPU_ was spreading FUD about replied, and the guy doubled down. The lead dev of the project was correcting him and he called fake news! All because AMD is bad at RT, apparently it has to be a big NVIDIA conspiracy that RT is useful.

|

|

|

|

Boris Galerkin posted: Would it be a mistake to buy that Microcenter exclusive 5600X3D for $230 for a brand new pc build for gaming (targeting 1440p/1xxHz)? I know future proofing is a waste of money but that 5600X3D apparently uses a dead socket vs the newer AM5 socket.

Other things to consider if you're already at Microcenter: the 5800X3D is only fifty bucks more and hits the 8-core sweetspot of the consoles. If you want similar gaming performance, better MT, and more upgradeability at the cost of power consumption you can get a 13600K combo for a similar price too.

|

|

|

|

Rinkles posted: i wonder if those are ms store codes

I'd be really surprised, all the physical copies come with Steam codes and it seems like the only reason people will play the Xbox version is getting it from Game Pass.

|

|

|

|

Hasturtium posted: Eww, yeah, it's gotta be about controlling power usage. Alder Lake already took criticism for using more power than its predecessors in absolute terms. And drat, that feature set chart is bonkers.

AVX-512 isn't a power hog anymore. The first generation in Skylake-EP was kinda busted for power and it's ruined the perception forever. Golden Cove cores don't see any power increase, and in most cases see no clock decrease, running AVX-512 code. If you have a 12900K that can still use AVX-512, it's better at it than Zen4, and the extra 512b FPU makes Sapphire Rapids absolutely dominate in AVX-512 benchmarks.

Twerk from Home posted: Why do all the work to use hardware features that aren't common? In the one place where AVX512 should have mattered, Intel Xeons, they've hosed that up too.

AVX-512 is really good on Sapphire Rapids. Basically the only good thing about it is that it absolutely crushes FP benchmarks.

|

|

|

|

Dr. Video Games 0031 posted: Yeah, ASRock's new bios allows for really high memory speeds, but it may not help performance much in most things because the uclock goes into 2:1 mode with the memclock. As seen in that screenshot, 4000 memclock with 2000 uclock, while on a DDR5-6000 EXPO kit, you'll get 3000 memclock and uclock.

It's not an ASRock thing, it's the newest AGESA 1.0.0.7b. An AMD employee spoke about it here. The main change is the new "DDR5 Nitro Mode" (very AMD name), which is the frequency-at-all-costs 2:1 mode. I fiddled around with it on my X670E Hero, and it's not just a binary setting, there is a whole page of settings that you can tweak. The descriptions mention latency costs for Nitro mode settings even beyond the cost of dropping the UCLK, so it remains to be seen how viable it is for gaming. Plus the training times as soon as you enable Nitro mode are absurd, like 5 minutes to train 7200 on my board.

The other part that the employee mentions is tweaks to enable 6400 in 1:1 on more CPUs, and sure enough, I can now run 6400C28 1:1 with my 2x32GB M-Die kit. Was impossible to stabilise above 6200 on 1.0.0.7A Patch A.
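To make the 1:1 versus 2:1 arithmetic concrete, here is a small Python sketch. The function name `am5_memory_clocks` and the `uclk_divider` parameter are hypothetical; the code only encodes the two relationships described above (MEMCLK is half the DDR rating, and UCLK is MEMCLK divided by the ratio), and says nothing about what any particular CPU can actually stabilise.

```python
def am5_memory_clocks(ddr_rating: int, uclk_divider: int = 1) -> dict:
    """Return MEMCLK and UCLK in MHz for a given DDR5 rating.

    uclk_divider=1 models 1:1 mode, uclk_divider=2 models 2:1 mode.
    Illustrative helper only; the names are not from any AMD tool.
    """
    if uclk_divider not in (1, 2):
        raise ValueError("only 1:1 and 2:1 are modelled here")
    memclk = ddr_rating // 2          # double data rate: clock is half the rating
    uclk = memclk // uclk_divider     # memory controller clock
    return {"memclk_mhz": memclk, "uclk_mhz": uclk}


# The two cases from the quoted post.
print("DDR5-8000 in 2:1:", am5_memory_clocks(8000, uclk_divider=2))
print("DDR5-6000 in 1:1:", am5_memory_clocks(6000, uclk_divider=1))
# The 6400 1:1 result mentioned above, for comparison.
print("DDR5-6400 in 1:1:", am5_memory_clocks(6400, uclk_divider=1))
```

The point it illustrates is that a much higher DDR rating in 2:1 can still leave the memory controller running slower than a modest kit in 1:1.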

|

|

|

|

Kazinsal posted:Multi-channel width vs. speed has always been a tradeoff, and AM5 is no exception. You can do 64GB at 6000 right now, you just need to go 2x32GB. You can even do 6000 at up to 96GB if you use 2x48GB. I'm running 2x32GB 6400c28, using inexpensive greenstick Hynix memory I bought from a server supplier.

|

|

|

|

Shaocaholica posted: Is "480mb" threadripper pro cache usable by all cores?

It's cache coherent, so yes, but it is non-uniform due to the chiplet design. Accessing the cache of a separate CCD is about as fast as accessing main memory, so it's better expressed as 12x32MB L3.

|

|

|

|

Reusing the full size IO die with memory channels disabled has the funny side effect of each NUMA node in TR non-Pro only having a single memory channel. If you put a 7995WX in a TRX50 board you'll have three whole CCDs sharing a single local memory channel. It's a good thing the NUMA penalty is relatively small.

|

|

|

|

Cygni posted:

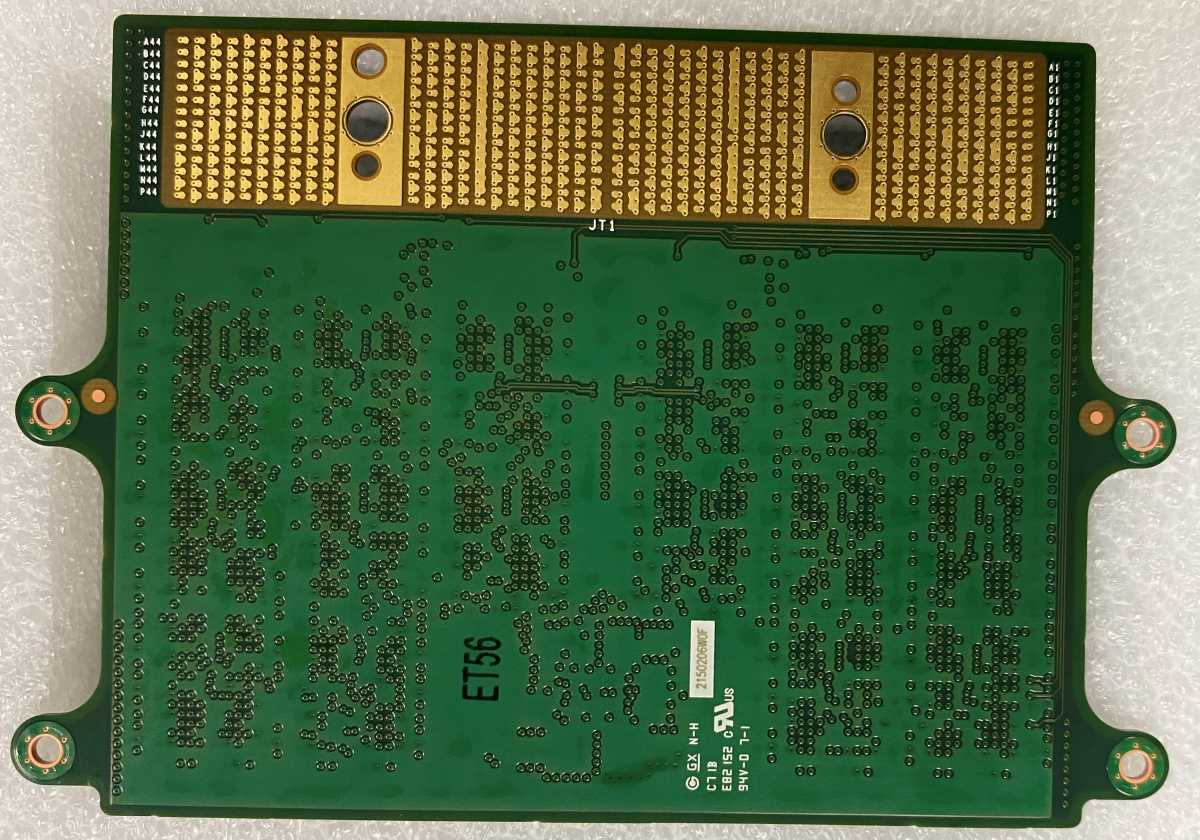

LPCAMM is actually, amusingly, a competing standard to CAMM. It's not just LPDDR on a CAMM module, which is in the spec, but a separate LPDDR-only compression attached memory module that isn't a JEDEC standard and isn't compatible with CAMM. LPCAMM is basically just a socket for LPDDR chips, the compression mount is directly under the dies:

Compare that to CAMM where the dies are on another part of the PCB:

|

|

|

|

SpaceDrake posted: I think there's a little confusion here because of the 5600X3D. Those were chips that were explicitly manufactured as 5800X3Ds, but not all their cores met specification while their L3 cache did. It was therefore not too difficult to disable those cores and add software support to read those chips as "5600X3Ds" and have them operate as hexa-core CPUs.

VCache is added after the dies are fabbed and tested for defects, so the 5600X3D isn't a physically defective 5800X3D at all. Stacking is a relatively expensive process (compared to the cost of a die), so they don't waste time stacking onto faulty dies. It's just artificially cut to hit a lower end of the market, like the vast majority of CPUs.

|

|

|

|

hobbesmaster posted: The 5600x3d was supposedly CPUs that failed the VCache addition step. Whether something caused some cores to have physical damage or perhaps their temperature/voltage curves are just terrible.

Isn't the only quote we have to this effect from a Microcenter rep to GamersNexus? I still think it only makes sense as an artificial part. I have no idea why adding the stacked cache would fail in a way that kills only two of the cores, whereas it makes a lot of sense to just have a cut down 6 core with good performance to challenge the lower end of the market. It's not a unique thing, almost all CPUs are cut down due to segmentation instead of binning. Especially tiny chiplet CPUs on an extremely mature node.

|

|

|

|

SpaceDrake posted: What specifically happens is that the extra cache only reads into half the cores on the 7950X3D, largely for heat purposes. To prevent the overall CPU from overheating, the software will disable one of the Core Complex Dies™, leaving you with only six active cores (as I understand it).

This isn't really what is happening at all.

AMD has 8 core CCDs, which are physically separate dies. Each die has L3 cache that is on the die, which sum together from multiple dies to form the total L3 cache. Every core can access cache lines stored in the L3 cache on any die, but the non-local L3 cache is very slow. It's equally as slow to access the cache on another CCD as it is to go out to main memory, and the only reason that it's possible at all is so you can maintain cache coherence (basically, correctness of cache). It's better to understand the cache on AMD CPUs not in terms of the total, but the groups of cache. A 7950X has 2x32MB, an Epyc CPU can have 384MB of cache but it is really 12x32MB and doesn't provide a larger cache for each group of cores.

VCache is the only way that you get an AMD CPU with more than 32MB of cache per 8 cores, but there is a strict voltage limitation imposed by the way that the cache is connected to the base die using through-silicon vias (TSVs). A 7950X will happily boost using 1.5V, but you can't put more than about 1.3V through TSVs before they burn off the CPU. The clock limitation of VCache is a voltage one, not a thermal one.

The thing about the way the cache works is that for most games, you don't want your game to spill between CCDs. Typically the extra compute isn't worth your hottest cache lines being spread between two NUCA nodes. A double VCache CPU wouldn't have much benefit for gaming, because you only want to be on one CCD anyway.

By keeping a CCD without VCache, AMD gets to save some money on packaging, win back some extra productivity performance, and still get that 1.5V 5.75GHz single core boost that prevents it from losing too hard to Intel in the single core charts.

The software disabling one CCD while gaming isn't for overheating, it's a really dumb and simple way of forcing games to stay on the cache die. The Windows scheduler hates putting things on the cache cores, because they clock 500MHz lower, and games aren't smart enough to know the difference between the types of cores.

The issue is that AMD's VCache driver is the dumbest pile of poo poo I've ever used. It's extremely simple, and is basically just two rules. If the Windows Game Bar thinks a game is open, it parks CCD1 (clocks). Then if CCD1 is parked, and the load on CCD0 exceeds a certain threshold, it unparks CCD1. That's it. This means it doesn't work if a game isn't a game in Game Bar's eyes (this is why it doesn't work in Factorio), and it also doesn't work if a game loads up a bunch of threads on the loading screen but then still wants to stay on the cache die to get proper performance (this is why it doesn't work in Starfield). It also means you can't use your 8 extra cores to run background tasks without tanking game performance, as there is nothing keeping the game on the cache cores when the other die is unparked.

The only way to ensure it's always working properly is to manually do core affinity. If you're willing to do that, it'll always be better than a 7800X3D because the cache cores on the 7950X3D run +200MHz faster across the whole VF curve (the max clock on a 7800X3D is 5.05GHz vs 5.25GHz on the 7950X3D).

In terms of Factorio, with large bases it is completely memory bottlenecked. This means that the VCache still helps, as cache increases effective memory bandwidth, but Intel's much faster memory subsystem gives them the win in general.

Amusingly, I do actually have the world record on the FactorioBox 50K benchmark (61UPS), despite using a 7950X3D, but I cheated a little by using Linux and a bunch of kernel tweaks that help massively (custom malloc with huge pages and much more). You can get similar performance using Intel on bone stock Windows.
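The two-rule behaviour described above is simple enough to model in a few lines of Python. To be clear, this is a toy model of the rules as described in this post, not AMD's actual driver code: the function name, the 0.8 load threshold, and treating the decision as stateless are all assumptions made for illustration.

```python
def ccd1_is_parked(game_detected: bool, ccd0_load: float,
                   unpark_threshold: float = 0.8) -> bool:
    """Toy model of the two parking rules for the non-cache CCD (CCD1).

    game_detected: whether Game Bar thinks a game is open.
    ccd0_load: load on the cache die, from 0.0 to 1.0.
    unpark_threshold: made-up value; the real threshold is not given here.
    """
    # Rule 1: CCD1 is only ever parked when Game Bar reports a game.
    if not game_detected:
        return False
    # Rule 2: once CCD0 load exceeds the threshold, CCD1 is unparked again.
    return ccd0_load <= unpark_threshold


scenarios = {
    "recognised game, light load": (True, 0.40),
    "game that Game Bar does not recognise": (False, 0.40),
    "recognised game, heavy loading screen": (True, 0.95),
}

for name, (detected, load) in scenarios.items():
    state = "parked" if ccd1_is_parked(detected, load) else "unparked"
    print(f"{name}: CCD1 {state}")
```

Only the first scenario keeps the game pinned to the cache die. The other two correspond to the two failure modes described above (a game Game Bar doesn't recognise, and a game that spikes thread count while loading), which is why manual core affinity ends up being the reliable option.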

|

|

|

|

Twerk from Home posted: Is Factorio more memory latency sensitive or bandwidth sensitive? Does it do any better on 4-8 channel DDR5 setups, or does the higher latency that bigger memory controllers and potential RDIMMs bring hurt performance?

Memory bandwidth, mostly. Which is why Intel with DDR5-8000 can get 60UPS while the Intel CPUs in the HWUB benchmarks listed above are sitting around 45UPS.

You won't get better performance from higher memory channel AMD CPUs, as fundamentally the bottleneck is in the GMI link from the IO die to the CCD. It's slow enough that you're already exceeding its maximum bandwidth with just dual channel DDR5-6000. Higher channel counts just let you saturate more threads at once, across more CCDs, but Factorio is very single threaded and never scales outside the one CCD.

The only CPUs, at least from AMD, that could be a theoretical improvement are the low core count Epyc CPUs that use GMI-Wide to provide greater bandwidth to each CCD. GMI-Wide is where you connect two GMI channels to the one die, and is done on the 16-32 core Epyc CPUs because they have leftover GMI links on the IOD that are meant for more CCDs. That combined with more memory channels could give an improvement.
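A back-of-the-envelope Python sketch shows why extra memory channels don't help a single CCD. The link figures here are assumptions that do not come from this post: a GMI read width of 32 bytes per fabric clock and a 2000MHz FCLK are commonly quoted numbers for Zen 4, so treat them as placeholders and substitute your own. The function names are hypothetical too.

```python
def dram_peak_gbs(transfer_rate_mts: float, bus_bits: int = 128) -> float:
    """Theoretical DRAM bandwidth in GB/s for a given rating and bus width."""
    return transfer_rate_mts * (bus_bits / 8) / 1000


def gmi_read_peak_gbs(fclk_mhz: float = 2000, bytes_per_clock: int = 32,
                      links: int = 1) -> float:
    """Theoretical read bandwidth into one CCD in GB/s.

    fclk_mhz and bytes_per_clock are ASSUMED values, not from the post.
    links=2 models GMI-Wide, where two links feed a single CCD.
    """
    return fclk_mhz * bytes_per_clock * links / 1000


dram = dram_peak_gbs(6000)                 # dual channel DDR5-6000
narrow = gmi_read_peak_gbs(links=1)        # ordinary single GMI link
wide = gmi_read_peak_gbs(links=2)          # GMI-Wide

# What a single CCD can actually pull is capped by the slower of the two.
print(f"DRAM peak:            {dram:.0f} GB/s")
print(f"One CCD, GMI-Narrow:  {min(dram, narrow):.0f} GB/s")
print(f"One CCD, GMI-Wide:    {min(dram, wide):.0f} GB/s")
```

Under those assumed numbers the single link is the limit, so adding memory channels raises the DRAM figure without changing what one CCD sees, while doubling the links moves the cap back to the memory itself.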

|

|

|

|

Gatac posted: So, I recently did a build with a 7800X3D on an Asus ROG STRIX B650E-F GAMING WIFI mobo with G.Skill Ripjaws S5 32 GB (2 x 16 GB) DDR5-6000 CL30 memory. It works in general, but does *not* want to run the RAM at 6000 - it goes up to 5800 fine, but putting it at 6000 hangs at boot. This is somewhat disconcerting because it means I can't use the DOCP settings for this RAM, which would automatically put it at 6000. And it is a hang, it's not overlong memory training as far as I can tell. I've let it sit for 30ish minutes without progress at 6000 while 5800 is up after a minute or so.

Have you updated to the latest BIOS? That RAM kit is guaranteed Hynix, so it isn't a RAM binning issue as every stick of Hynix DDR5 on earth can do 6000C30. Memory stability has massively improved with newer BIOS patches, with 6000 being a bit touchy on the launch BIOSes.

|

|

|

|

The AV1 encoder in RDNA3 is really fundamentally broken. It encodes in 64x16 pixel tiles, which isn't that unusual, but the hardware support for outputting subtiles is broken and it will only output resolutions that fit in 64x16 tiles (with black pixel padding). They added a special case for 1080p to minimise the impact but it still outputs 1920x1082, which means you have to manually trim the 2 pixels from videos and for streaming a number of streaming services just straight up won't accept a 1082p stream. Naive VMAF scores are in the 30s because of the extra pixels, but even if you trim them out you're still getting decently lower VMAF than NVENC or QSV. The other common resolution that doesn't fit the tile size is 3440x1440, but that's less important than 1080p. An AMD Dev on the Mesa gitlab said it is fixed in the RDNA3+ encoder block, but that's not coming to desktop anymore, so you've got to wait until RDNA4 to get a functioning AV1 encoder from AMD. Hopefully they use RDNA3+ in APUs.
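The tile-size arithmetic is easy to check with a short Python sketch. It only models the plain "round up to a whole number of 64x16 tiles" rule; the function name is hypothetical, and the 1080p special case mentioned above (which gives 1082 rather than the 1088 that strict rounding would produce) is deliberately not modelled.

```python
def padded_resolution(width: int, height: int,
                      tile_w: int = 64, tile_h: int = 16) -> tuple[int, int]:
    """Round a resolution up to the next whole number of tiles."""
    def round_up(value: int, tile: int) -> int:
        # Ceiling division, then scale back up to pixels.
        return -(-value // tile) * tile

    return round_up(width, tile_w), round_up(height, tile_h)


for w, h in [(1280, 720), (1920, 1080), (2560, 1440), (3440, 1440), (3840, 2160)]:
    pw, ph = padded_resolution(w, h)
    note = "fits" if (pw, ph) == (w, h) else f"padded by {pw - w}x{ph - h}"
    print(f"{w}x{h} -> {pw}x{ph} ({note})")
```

Of the common resolutions listed, only 1920x1080 (height is not a multiple of 16) and 3440x1440 (width is not a multiple of 64) fail to fit, which matches the two problem cases called out in the post.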

|

|

|

|

Cygni posted: Phoenix is listed with ECC support on TechPowerUps database. They also show 20 PCIe 4.0 lanes from the CPU, so that might mean a full 16x to the first pcie slot. They could also be playing games with how they count the lanes. The 5700G shows 16 3.0 lanes, for comparison.

It depends on which lanes they wire up when they put it on an AM5 substrate. Most AM5 boards wire up two M.2 slots from the CPU, and if they put all 16 usable lanes (4 go to the chipset) into the PCIe slot then you won't have your M.2 slots. It'll likely be 8x to the primary PCIe slot and 4x/4x to the M.2 slots. You'll lose a PCIe slot this way if they're wired 8x/8x, but otherwise moving down to 8x on the primary isn't a big deal.

The bigger deal is the CPUs based off the 2+4 small Phoenix die (8500G/8300G), they only have 10 usable lanes so allocation gets really tricky. They might end up going 4x slot, 4x/2x M.2.
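The lane budgeting above is just addition, but a small Python sketch makes the constraint explicit. The lane counts come from the post (20 total with 4 reserved for the chipset, and 10 usable on the small die); the function name and the layout labels are made up for the example, and the layouts are the guesses from the post, not confirmed board designs.

```python
def lanes_left(usable_lanes: int, allocation: dict[str, int]) -> int:
    """Return spare lanes after an allocation; negative means over budget."""
    return usable_lanes - sum(allocation.values())


PHOENIX_USABLE = 20 - 4        # 20 CPU lanes, 4 of them go to the chipset
SMALL_PHOENIX_USABLE = 10      # 2+4 die (8500G/8300G), per the post

layouts = {
    "Phoenix, x16 slot + 2x M.2": (PHOENIX_USABLE, {"slot": 16, "m2_a": 4, "m2_b": 4}),
    "Phoenix, x8 slot + 2x M.2": (PHOENIX_USABLE, {"slot": 8, "m2_a": 4, "m2_b": 4}),
    "Small Phoenix, x8 slot + 2x M.2": (SMALL_PHOENIX_USABLE, {"slot": 8, "m2_a": 4, "m2_b": 4}),
    "Small Phoenix, x4 slot + x4/x2 M.2": (SMALL_PHOENIX_USABLE, {"slot": 4, "m2_a": 4, "m2_b": 2}),
}

for name, (budget, alloc) in layouts.items():
    spare = lanes_left(budget, alloc)
    verdict = "fits" if spare >= 0 else f"over by {-spare}"
    print(f"{name}: {verdict} (spare lanes: {max(spare, 0)})")
```

It shows why a full x16 slot and two CPU-attached M.2 slots can't coexist on the bigger die, and why the small die has to cut both the slot and one of the M.2 links.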

|

|

|

|

BlankSystemDaemon posted: What's AMDs HMP design like, comparatively? I know Intel brags about their Thread Director that's part of the microcode, but that seems like a highly complex solution, and what little I've read suggests that AMDs solution is "simpler" (though maybe not easier..)

4C cores are 35% smaller and have a much lower fmax, but there aren't any differences in functional units this generation. For reference on clocks, 4C in servers maxes out at 3.1GHz and on desktop the highest they're clocking them stock is 3.7GHz 1t boost. The 3.7GHz boost is way out of the efficiency curve for the core though, it takes as much voltage to take a 4C core to that clock as it does to take a Zen 4 core to 5GHz.

Next generation, Zen5 will have full width AVX-512 (2x512b per cycle), while 5C will keep the same double pumped design from Zen4 (2x256, running over two cycles), and so there will also be a gap in FP performance to worry about.

|

|

|

|

|


|

gradenko_2000 posted: half the L3 makes me think this is a Cezanne 5700G with the iGPU turned off?

The 5600 is a 5600X minus X, so the 5600G minus G is called the 5500. The issue with the 5700G minus G is that they can't just drop 100 from the name, so they just said gently caress-it and sent it anyway.

|

|

|