|

animist posted:okay, so, first thing you gotta understand is what people mean by "deep neural networks". a neural network is made up of "neurons", which are functions of weighted sums. that's it. here's a "neuron":

hey now posting convnets is cheating
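for reference, the "neuron" in the quoted post (a function of a weighted sum) can be sketched in a few lines of plain Python. this is only an illustration: the names `neuron` and `relu`, the bias term, and the choice of activation are assumptions, not something from the quoted post.

```python
import math


def relu(z):
    """Rectified linear unit: pass positive values through, clamp the rest to 0."""
    return max(0.0, z)


def neuron(inputs, weights, bias=0.0, activation=math.tanh):
    """Apply an activation function to a weighted sum of the inputs.

    `bias` and `activation` are illustrative choices; the core idea is
    just activation(sum(w_i * x_i) + bias).
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(weighted_sum)


# Three inputs, three weights, one output.
output = neuron([1.0, 2.0, 3.0], [0.5, -1.0, 0.25], bias=0.1, activation=relu)
```

a "deep" network is then many of these stacked in layers, with each layer's outputs feeding the next layer's inputs.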

|

|

|

|

|

| # ¿ May 11, 2024 22:31 |

|

also i feel bad for recognizing some of those from illustrations alone

|

|

|

|

it's my world now fortunately/unfortunately

|

|

|

|

tbh that can be said of pretty much any technology

|

|

|

|

eschaton posted:if I were asked to make an "object recognizer," I wouldn't train one huge network on a million images of the object I want to recognize and allow steganography to break everything, I'd train a large number of smaller networks on different characteristics to recognize, and use additional separate networks to determine confidence according to recognitions, etc. finally arriving at the one confidence value

This is actually basically Fast R-CNN - except object classification was done by SVMs instead of NNs

really all the convnet did for that is feature encoding

it's called fast rcnn but it's pretty slow compared to the approaches that supplanted it

|

|

|

|

oh and also fast rcnn was doing that all given that you were already giving it proposals for where objects in the image were

|

|

|

|

well resnet is more of a particular convnet architecture, like the densenet illustration at the end of that earlier post, than a particular machine vision objective

|

|

|

|

like pre-convnet image classification and object detection (which are overlapping tasks really) was all about using something like sift vectors, which was pretty much taking an image and encoding it into this vector where each dimension represented some particular human chosen function output

and you hoped that the vectors were distinct enough that images of different things would encode distinctly but that also the encoding was general/robust enough that changes in pose or scale/distance wouldn't prevent things from matching up

so an objective like object detection was a bunch of sliding various windows of subsections of the image and searching your database of sift vectors to see if anything matched

and of course there was research into how to partition and search through that database of sift vectors faster etc
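a rough sketch of that pipeline in plain Python: slide a window over the image, encode each window with hand-chosen functions, and look for the nearest entry in a database of known vectors. to be clear, `encode` here is a toy three-number descriptor standing in for something like SIFT, and every name below (`encode`, `sliding_windows`, `detect`, the brute-force database search) is a hypothetical choice for illustration, not how any real system did it.

```python
import math


def encode(patch):
    """Toy hand-crafted descriptor (NOT real SIFT) for a 2D patch of floats.

    Each dimension is the output of one human-chosen function: mean
    brightness, mean horizontal change, mean vertical change.
    Requires a patch of at least 2x2.
    """
    h, w = len(patch), len(patch[0])
    mean = sum(sum(row) for row in patch) / (h * w)
    dx = sum(abs(row[j + 1] - row[j]) for row in patch for j in range(w - 1))
    dy = sum(abs(patch[i + 1][j] - patch[i][j]) for i in range(h - 1) for j in range(w))
    return (mean, dx / (h * (w - 1)), dy / ((h - 1) * w))


def distance(a, b):
    """Euclidean distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def sliding_windows(image, size, stride=1):
    """Yield (top, left, patch) for every size x size window in the image."""
    h, w = len(image), len(image[0])
    for top in range(0, h - size + 1, stride):
        for left in range(0, w - size + 1, stride):
            yield top, left, [row[left:left + size] for row in image[top:top + size]]


def detect(image, database, size, threshold):
    """Return (label, top, left) for windows whose nearest database vector is close enough."""
    hits = []
    for top, left, patch in sliding_windows(image, size):
        vec = encode(patch)
        # Brute-force nearest neighbour; the research mentioned above was
        # largely about replacing this linear scan with something faster.
        label, ref = min(database.items(), key=lambda kv: distance(vec, kv[1]))
        if distance(vec, ref) <= threshold:
            hits.append((label, top, left))
    return hits


# A 4x4 image with one vertical-edge patch at row 1, column 2.
image = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 0.0, 0.0],
]
database = {"edge": encode([[0.0, 1.0], [0.0, 1.0]])}
hits = detect(image, database, size=2, threshold=0.1)
```

the fragile part is exactly what the post describes: the descriptor has to separate different objects while staying stable under pose and scale changes, which a toy like this does not attempt.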

|

|

|

|

rchon posted:don't ask me about deploying mask r-cnn models to production systems with no quantifiable validation metrics or model versioning

bonus if it's just the Facebook repo

|

|

|

|

suffix posted:should i be blindly copying code i don't understand from random repositories

I mean you're doing machine learning aren't you?

|

|

|

|

all the ethics courses in the world won't matter when each individual is just some alienated contributor to a greater machine, and who can rationalize away their own involvement in anything horrible which may result from their work

|

|

|

|

It's pretty hard to predict if anything you might work on could be weaponized

Actually it's pretty easy: it most likely will be

Plenty of people working for google/amazon/whatever probably honestly didn't think their work would be picked up by the MIC but it was

Plenty of people doing research not even funded by one of the ARPAs might catch their interest out of the blue later and suddenly they're pumping money into it

Chemists and life science folks probably didn't expect chemical and biological weapons to come out of their stuff

Hell some of the early nuclear weapons folks probably didn't realize how insane that would become

|

|

|

|

animist posted:

lol the other day while something I was working on wasn't working I joked about having the GPUs just producing heat instead of anything useful

|

|

|

|

I mean, the fact that the features picked up aren't necessarily the same ones a human might consciously choose is a pretty well known phenomenon in machine vision; the amusing bit being that people sometimes use this to get huffy about things when people sometimes unconsciously or consciously also do ridiculous things and we have much better sensors in some ways

|

|

|

|

Winkle-Daddy posted:oh look, the handiwork of Summly, the "AI News Summarizing Product" built by some kids that Marissa gave like a billion dollars to. Working at yahoo under marissa was the fuckin worst. "guys, guys, don't you see how valuable tumblr is???"

if by "built by some kids" you mean farmed out building an application around some algorithms licensed from SRI

|

|

|

|

you think open sores has issues thanks to a culture of tribal knowledge and gatekeeping, then you discover people's trash ML repos that are horribly broken out of the box

|

|

|

|

quote:Ray Phan, a senior computer-vision and deep-learning engineer at Hover, a 3D software startup based in San Francisco, told The Register that the lectures were confusing and contained mistakes. The online projects were too simplistic to be useful in the real world, and Raval was absent and unsupportive.

Ah yes the senior AI engineer that needs to sign up for an intro to AI course

|

|

|

|

animist posted:there's a bunch of "black box attacks" that work this way, only seeing inputs and outputs

it also helps that transfer learning is common in vision so you can expect whatever you're dealing with to be some fine tuned head on an imagenet base

|

|

|

|

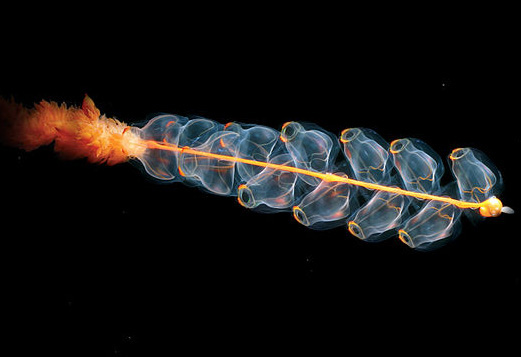

gently caress me for recognizing this

|

|

|

|

animist posted:

don't snipe my new convnet arch

|

|

|

|

there are so many new DSLs masquerading as languages I just don't keep track

|

|

|

|

I mean it's true

|

|

|

|

power botton posted:if you want to do math in a dumb dead language use fortran

which is exactly why there are python-FORTRAN bindings

|

|

|

|

Some of our computational physicists still use FORTRAN

And these are people like my age

Living FORTRAN physics libraries

|

|

|

|

I can't tell if things are less horny now or different horny

|

|

|

|

A NN classifier for fizz buzz but unironically

|

|

|

|

statisticians amirite?

|

|

|

|

yeah by the Stanford numbering scheme that's a graduate class

e:fb

quote:Numbering System

|

|

|

|

Sagebrush posted:ah well then it's probably exactly what it says on the box. "grad students, go make a thing, if you need more information here's a list of papers to read, i'll be writing grants. if you make anything cool i'm putting my name as first author"

don't give away the sausage factory!!

|

|

|

|

I remember when my weeder class in my engineering program was 1st semester, freshman year

I still feel like I made the right decision but for the wrong reason

|

|

|

|

DELETE CASCADE posted:what kind of hosed up PI doesn't let the main grad student on the project be first author? how else will he ever graduate

you're.... you're being facetious right?

|

|

|

|

academics is like the mob, credit kicks up to the top and never [graduating | getting tenure | moving on from being a postdoc] shits downhill

|

|

|

|

are you suggesting that the tenured faculty at "elite" institutions are anything but the epitome of humility?

|

|

|

|

that's certainly a good formalization of things

|

|

|

|

I remember when I did that last one by accident in college at my student job and got in trouble because we had to claw back and reprint a bunch of reports

|

|

|

|

animist posted:also tbh if you're trying to understand data I'd reach for visualizations wayyy before ML because ML will just spit black boxes back at you. that's because I like to understand things tho

actually I think you'll find ml has a lot of trouble with black boxes

|

|

|

|

obviously just shove it into tf-idf vectors
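for anyone who hasn't met them: a tf-idf vector weights each term by how often it shows up in a document, scaled down by how many documents contain it. a minimal sketch in plain Python, assuming whitespace tokenization and the unsmoothed idf `log(N / df)`; `tfidf_vectors` is a made-up helper name, and real libraries usually smooth and normalize differently.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return (vocab, vectors) with one tf-idf vector per document.

    Hypothetical helper: tf is the term count divided by document length,
    idf is log(N / document frequency) with no smoothing.
    """
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({term for tokens in tokenized for term in tokens})
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log(n_docs / doc_freq[term]) for term in vocab}

    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1  # avoid dividing by zero on empty documents
        vectors.append([counts[term] / total * idf[term] for term in vocab])
    return vocab, vectors


vocab, vectors = tfidf_vectors(["the cat sat", "the dog sat"])
```

terms that appear in every document (like "the" above) get weight zero, which is the whole point of the idf factor.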

|

|

|

|

m8 wtf are you doing

|

|

|

|

what's your learning rate strategy?

|

|

|

|


rchon posted:this network has learned something but no animal ever has

ultrafilter posted:Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead

idk seems good

|

|

|