
Darko posted: The issue with A.I. and general creative output is that artists/writers/musicians/etc. are going to go broke specifically because people by and large want products, not art. A lot of people don't see actual craft and skill in any art, they just want some nebulous "good" and want as much and as cheap as they can get it. As an artist, I can currently easily see where an A.I. is cobbling stuff together as opposed to where even a mediocre digital artist is actually producing things, and am completely uninterested in having anything produced by an A.I. because it's missing the human touch and the thought and mistakes and improvisation that come from it. But as you saw from social media 6 months ago or whatever, people are just happy to finally have a portrait of themselves that they don't have to pay someone 1k plus for, because it's not about the craft. People by and large just view any art as a product and want to consume it, and A.I. will feed them.

I think a lot of people want AI art because they have their own stories to tell, and they don't have the time and skills to produce it on their own, nor the resources to constantly hire an artist. The human touch also isn't absent: most AI art has a person who is consciously choosing what they want for an image. Just because they don't see much value in the artist's vision doesn't mean they don't care about what the art is representing. Also, let's be honest here, I doubt a lot of artists care about all their commissions either. They might care about certain pieces, but I doubt they care about D&D character #507 they were hired to draw except as a product to sell. There is also nothing wrong with art being seen as a utilitarian tool. There are plenty of situations where the art's purpose is to convey information and it has little artistic value. I'm not going to deny there are some people who are just using AI to produce anime titty girls, but people have always used art for porn. It's not really a flaw in society so much as a result of basic human drives.

AI art was also extremely lacking in tools to control the output at the start, so it was hard to put more meaning into it. It's gotten a lot better with technology like ControlNet, but there is still a lot of room to improve. I'm excited about AI because in the future it's going to give people the opportunity to create things that usually could only be created by large groups of people or by working themselves to death. The technology is not quite ready yet, but I think it will lead to a massive boom in independent projects. Some might argue that it's going to produce a lot of garbage, but that's the price we pay when we give people better tools to express themselves.


| # ¿ May 13, 2024 07:12 |


Honestly, I think once AI reaches the point where it can replace programmers, it's basically capable of replacing any job, except for jobs that rely strongly on social interaction. There might be a slight delay for physical labor, but the tech is almost already there.


Count Roland posted: A side effect of DALL-E and similar programs is that there's a lot of AI art being generated, which shows up on the internet, which is trawled for data, which is then presumably fed back into AI models. I wonder if AI generated content is somehow filtered out to prevent feedback loops.

AI being used to train AI is not the problem that people think it is. In fact, using AI to generate more training data is sometimes desirable because you can better control the input. Midjourney, for example, uses RLHF (Reinforcement Learning from Human Feedback) to improve its model, and Stable Diffusion is going to release a model using the same technique. The controls used to gather the original dataset will work fine with a bunch of AI data, because even before AI, there was a lot of really bad data out there. (You can search the LAION database for a term and see that there is a lot of unrelated garbage.)

As for the full potential of AI, I see a future where anybody can create their own TV show, game, or movie if they are willing to put in the effort, without working themselves to death or relying on a team. I don't mean somebody just types in a prompt and one is generated automatically; I mean they focus on their strengths and use AI as an assistant to generate the rest. Then there is using AI with robots: I could see a future where people have robot housekeepers. Then there are the medical advantages, such as early detection of symptoms and, hopefully, more accurate diagnosis.

My biggest concern is that getting the hardware and training data required for AI will require a large organization, either government or corporate. I don't want a future where you have to pay a subscription for everything, where corporations or governments have firm control over what is acceptable, and where it's very easy for them to know everything about you. I have hope that the open source community won't let that scenario happen. It's been pretty good at keeping up with technological advancements, even though higher-quality LLMs are a bit hard to run on consumer hardware at the moment.

Edit (replying to the post below): Local Stable Diffusion doesn't put a watermark on the images in most GUIs, and it's fairly simple to remove the watermark.

IShallRiseAgain fucked around with this message at 19:11 on Apr 1, 2023


Main Paineframe posted: I'm not talking about obstructing business at all. I'm talking about big business having to pay for the stuff they use in their for-profit products, just like everyone else.

I think AI falls under fair use; it's pretty hard to argue that what it is doing isn't transformative. There are super rare instances when AI can produce near copies, but this isn't desirable and stems from an image being over-represented in the training data. Also, requiring a "copyright-safe" dataset just means media companies like Disney, or governments, will have control over the technology and nobody else will be able to compete. They hold the rights to more than enough copyrighted content that they could build a pretty good model without paying a cent to artists. The only people who will be screwed over are the general public. Hopefully, though, since the models are already out there, no government will be able to contain AI even if it cracks down hard.

IShallRiseAgain fucked around with this message at 23:23 on Apr 4, 2023


StratGoatCom posted: AI content CANNOT be copyrighted, do you not understand? Nonhuman processes cannot create copyrightable images under the current framework. You can copyright human amended AI output, but as the base stuff is for all intents and purposes open domain, if someone gets rid of the human stuff, it's free. The inputs do not change this. Useless for anyone with content to defend. And it would be a singularly stupid idea to change that, because it would allow a DDOS in essence against the copyright system and bring it to a halt under a wave of copyright trolling.

I'm not saying anything about it being copyrighted. I'm saying that AI generated content probably doesn't violate copyright because it's transformative. The only reason it might be found to violate copyright is that fair use is so poorly defined. I agree that AI images made from prompts alone should not be copyrightable, because of the potential issues with that. It only takes a little bit of effort to generate AI content that is actually copyrightable.


StratGoatCom posted: Machines should not get the considerations people do, especially billion dollar company backed ones. And don't bring 'Fair use' into this, it's an anglo weirdness, not found elsewhere.

It's people using AI to produce content, though. Like I said before, it's not an issue for large corporations or governments. They already have access to the images to make their own datasets without having to pay a cent to artists. It's the general public that will suffer, not the corporations. Also, fair use is essential when copyright exists; otherwise companies or individuals can suppress any criticism of the works they produce. Japan is a great example of this: tons of companies there regularly exploit the fact that fair use isn't a thing.


Main Paineframe posted: If Disney makes an image generation AI, I certainly wouldn't expect them to license that out to just anyone. They'd guard that as closely and jealously as possible. If Disney builds a machine designed exclusively to create highly accurate and authentic images of their most valuable copyrighted characters, they're not gonna let anyone outside the company anywhere near it.


SaTaMaS posted: The thing keeping GPT from becoming AGI has nothing to do with consciousness and everything to do with embodiment. An embodied AGI system would have the ability to perceive and manipulate the world, learn from its environment, adapt to changes, and develop a robust understanding of the world that is not possible in disembodied systems.

I think a true AGI would at least be able to train at the same time as it's running. GPT does do some RLHF, but I don't think it's real-time. It's definitely a Chinese Room situation at the moment. Right now it just regurgitates its training data and can't really utilize new knowledge; all it has is short-term memory. Some people have taken an extremely rudimentary step in that direction, though, such as https://github.com/yoheinakajima/babyagi.


cat botherer posted: ChatGPT cannot control a robot. JFC people, come back to reality.

https://www.microsoft.com/en-us/research/group/autonomous-systems-group-robotics/articles/chatgpt-for-robotics/


eXXon posted: Do you have sources for this?

I was merely replying to that specific post. I didn't say anything about it needing to understand what it is doing or having intelligence. You don't need that to control a robot. In my own experiments with ChatGPT, I'm making a text adventure game that interfaces with it, and I don't even have the resources to fine-tune the output.

IShallRiseAgain fucked around with this message at 01:35 on Apr 9, 2023


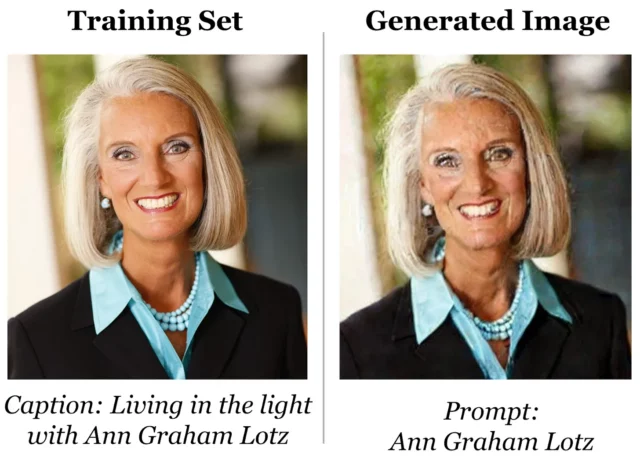

mobby_6kl posted: I doubt that anyone can answer that for certain right now because all of this is up to interpretation in court. While it is possible for Stable Diffusion to produce near-identical outputs, this is very much an outlier situation where duplicate training images are heavily over-represented in the training data. It's also unlikely to happen unless you are already setting out to make a copy of an image. It's a harmful behavior that nobody wants in the model, and it is fixable.
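One common way to reduce that kind of over-representation is to deduplicate the training set before training. A minimal sketch, assuming only exact byte-for-byte duplicates matter: real image datasets generally also need near-duplicate detection (for example perceptual hashes or image embeddings), which this does not attempt. The helper name `dedupe_exact` is hypothetical.

```python
import hashlib
from typing import Iterable, List


def dedupe_exact(images: Iterable[bytes]) -> List[bytes]:
    """Keep the first copy of each byte-identical image and drop later repeats.

    Assumption: duplicates are exact copies. Resized or re-encoded versions of
    the same picture will NOT be caught by a cryptographic hash like SHA-256.
    """
    seen = set()
    unique = []
    for data in images:
        digest = hashlib.sha256(data).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(data)
    return unique


if __name__ == "__main__":
    batch = [b"cat.png bytes", b"dog.png bytes", b"cat.png bytes"]
    print(len(dedupe_exact(batch)))  # 2
```

Order is preserved, so the first occurrence of each image is the one that survives.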


Raenir Salazar posted: From a game design standpoint it'll be really interesting to see if anyone tries to revive the "type in instructions" subgenre of adventure games, using AI to interpret the commands more broadly.

I'm working on that right now. https://www.youtube.com/watch?v=uohn5o0Cgpw


gurragadon posted: This looks fun, definitely a rainy night kind of game that I would play instead of reading creepypastas. Keeping it contained to a haunted house might keep the AI under control a little bit. I want to see how many ways I can die in this mansion. How far along are you and do you have any goals for it or just kind of leaving it open ended? Either way would be cool, I think. Does the AI generating the text make it easier or harder for you to make the game?

Basically, the idea is that it's much more controlled than just asking ChatGPT to simulate a text adventure game. The conversation resets for each pre-defined "room", and the game will handle being the long-term memory for the AI. There are set objectives, including an end objective, but multiple ways to achieve them. I'm making progress, but there is still a lot of writing on my end: I write the initial text for each room, and I also establish the rules for each room.
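The room-by-room design described above could be sketched roughly like this. It is a minimal illustration under stated assumptions, not the actual game's code: the `Room` and `Game` classes, the chat-style `{"role", "content"}` message format, and `call_model` (a stand-in for whatever chat API is used) are all hypothetical. What it shows is that each room starts a fresh conversation seeded with its hand-written intro and rules, while the game itself carries long-term facts from room to room.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Assumed chat-style message format: {"role": ..., "content": ...}
Message = Dict[str, str]


@dataclass
class Room:
    name: str
    intro: str        # hand-written opening text for the room
    rules: List[str]  # hand-written constraints the model is told to follow


@dataclass
class Game:
    rooms: Dict[str, Room]
    memory: List[str] = field(default_factory=list)       # long-term facts, kept by the game
    history: List[Message] = field(default_factory=list)  # short-term context, reset per room

    def enter(self, room_name: str) -> None:
        """Start a fresh conversation for a room; only game-held memory carries over."""
        room = self.rooms[room_name]
        facts = "; ".join(self.memory) or "none"
        system = "\n".join(
            ["You narrate one room of a text adventure."]
            + room.rules
            + ["Known facts: " + facts]
        )
        self.history = [
            {"role": "system", "content": system},
            {"role": "assistant", "content": room.intro},
        ]

    def act(self, command: str, call_model: Callable[[List[Message]], str]) -> str:
        """Send a player command within the current room's conversation."""
        self.history.append({"role": "user", "content": command})
        reply = call_model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


if __name__ == "__main__":
    # Hypothetical stand-in for a real chat API call.
    def fake_model(messages: List[Message]) -> str:
        return f"({len(messages)} messages in context)"

    rooms = {
        "hall": Room("hall", "You stand in a dusty hall.", ["Never reveal the basement key."]),
        "library": Room("library", "Shelves loom over you.", ["Books crumble if touched."]),
    }
    game = Game(rooms)
    game.enter("hall")
    print(game.act("look around", fake_model))  # (3 messages in context)
    game.memory.append("Player found a silver key")
    game.enter("library")
    print(len(game.history))  # 2: the conversation was reset for the new room
```

Keeping the per-room context short also keeps each request small, and the game, not the model, decides which facts are worth remembering.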


Jaxyon posted: My company already announced they've assigned a VP to start looking at how we can use AI to eliminate jobs.

I've got a good target quote: [CEO - Monday 9:00 AM - Executive Meeting]
