Also, if you're going to get into data engineering, it'd probably be a good idea to get a certification for one of the three big cloud platforms: Amazon AWS, Microsoft Azure, or Google GCP. However, don't let job interviewers intimidate you if you have AWS and they're an Azure house. It's all the same stuff with different labels slapped on, and you need to show them that.


May 21, 2024 01:20


If there are two things that you need to have a lock on in the DE space, they're Python and SQL. Here are some Python learning resources I posted in another thread:

Our humble SA thread:
- https://forums.somethingawful.com/showthread.php?threadid=3812541

Subreddits:
- https://www.reddit.com/r/inventwithpython/
- https://www.reddit.com/r/learnpython/
- https://www.reddit.com/r/Python/
- https://www.reddit.com/r/PythonNoobs/

Books:
- Python Crash Course
- Automate the Boring Stuff with Python
- Think Python

YouTube:
- Indently
- Tech With Tim
- mCoding
- ArjanCodes
- Python Simplified

Online Learning:
- Codecademy
- Real Python
- The Official Guide
- DataCamp

Discussion:
- Official Python IRC
- Discord

Brain-busters:
- LeetCode
- Practice Python
- HackerRank
- Edabit


The easiest way to do anything with tabular data in Python is to use pandas dataframes. Import your CSV into a dataframe, then export it to SQLite. Boom, done. There are a zillion pandas tutorials out there, so it won't be hard to find one if you search for things like "import csv into pandas dataframe" or "pandas dataframe export to database", etc.
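A minimal sketch of that CSV-to-SQLite round trip, assuming pandas is installed. The CSV contents, the `movies` table name, and the in-memory database are made up for illustration; in practice you'd pass a file path to `pd.read_csv` and a filename to `sqlite3.connect`.

```python
import io
import sqlite3

import pandas as pd

# Made-up CSV contents; pd.read_csv also accepts a file path like "movies.csv".
csv_text = """movie_id,title,year
1,Toy Story,1995
2,Jumanji,1995
3,Heat,1995
"""

# Import the CSV into a dataframe.
df = pd.read_csv(io.StringIO(csv_text))

# Export the dataframe to SQLite. ":memory:" keeps the demo self-contained;
# pass a filename such as "movies.db" to persist it on disk.
conn = sqlite3.connect(":memory:")
df.to_sql("movies", conn, if_exists="replace", index=False)

# Query it back with plain SQL to confirm the rows landed.
row_count = conn.execute("SELECT COUNT(*) FROM movies").fetchone()[0]
titles = [r[0] for r in conn.execute("SELECT title FROM movies ORDER BY movie_id")]
print(row_count, titles)
```

`if_exists="replace"` drops and recreates the table on each run; `"append"` adds rows to an existing table instead.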


Also, when I originally had thoughts of creating a data engineering thread, the subtitle was going to be "do you want that in CSV, CSV, or CSV?"


Right now I'm working with the three technologies I hate the most: Salesforce, Informatica, and Oracle. However, the team is great, so I don't mind. They're in the process of migrating Informatica to GCP, so I'm currently working on getting my Google cert. I've also let my Python and SQL skills lag, so I need to pick them back up in case I have to do interviews when my contract is done.


Currently we're using Oracle as a dumping ground for Salesforce data, so fortunately I don't have to interact with it much - do some queries, dump metadata to a spreadsheet, that kind of thing. What really drives me up the wall, however, is the query language that Salesforce uses (SOQL)... specifically, how limited it is. You can't do joins, you can't use aliases, you can't do subqueries, and there are no window functions. You can't even do "select *", lol. You have to include each and every field/column by name instead. You can't even do "distinct" on specific fields/columns. Someone wanted me to do some aggregate functions on specific fields with a where clause, so I had to export the whole object to SQL and query it there ಠ_ಠ
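A rough sketch of that export-and-query-in-SQL workaround, using Python's built-in `sqlite3` as a stand-in SQL engine. The object, the field names (`StageName`, `Region__c`, `Amount`), and the rows are all hypothetical; a real export would come out of Salesforce rather than a hard-coded list.

```python
import sqlite3

# Hypothetical rows standing in for an exported Salesforce object;
# field names and values are made up for illustration.
records = [
    {"Id": "006A", "StageName": "Closed Won", "Region__c": "West", "Amount": 1200.0},
    {"Id": "006B", "StageName": "Closed Won", "Region__c": "East", "Amount": 800.0},
    {"Id": "006C", "StageName": "Prospecting", "Region__c": "West", "Amount": 500.0},
    {"Id": "006D", "StageName": "Closed Won", "Region__c": "West", "Amount": 300.0},
]

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE opportunity (Id TEXT PRIMARY KEY, StageName TEXT, "
    "Region__c TEXT, Amount REAL)"
)
# Named placeholders let executemany consume the dicts directly.
conn.executemany(
    "INSERT INTO opportunity VALUES (:Id, :StageName, :Region__c, :Amount)", records
)

# Once the data is in an ordinary SQL engine, table aliases, column aliases,
# and aggregates filtered by a WHERE clause all work as usual.
summary = conn.execute(
    """
    SELECT o.Region__c AS region,
           COUNT(*) AS deals,
           SUM(o.Amount) AS total_won
    FROM opportunity AS o
    WHERE o.StageName = 'Closed Won'
    GROUP BY o.Region__c
    ORDER BY o.Region__c
    """
).fetchall()

# DISTINCT on a specific column is fine here too.
stages = conn.execute(
    "SELECT DISTINCT StageName FROM opportunity ORDER BY StageName"
).fetchall()

print(summary)
print(stages)
```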


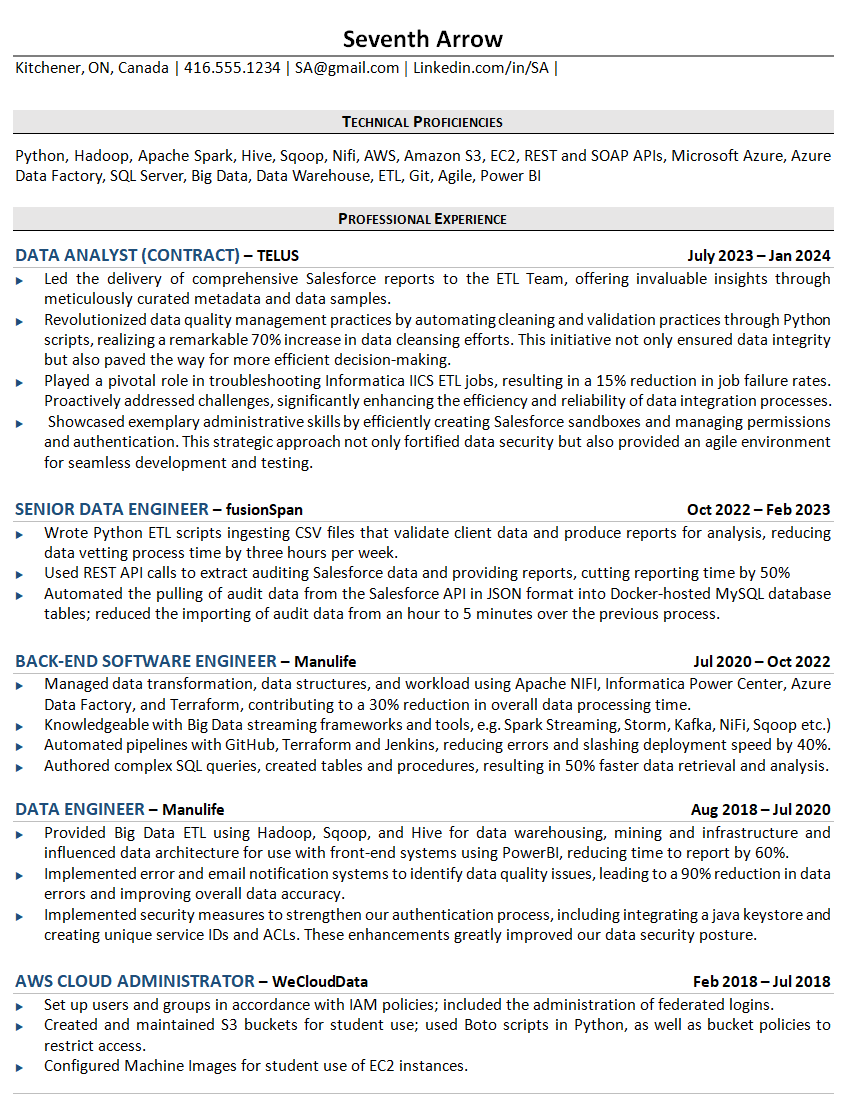

Ok, so my contract with TELUS ended abruptly (because of budget lockdowns, not because I deleted production tables or something), so I'm on the hunt for a new job. Here is my resume; feel free to laugh and make fun of it, but also offer critiques. Mind you, I will usually tailor it to the job I'm applying for, but it's fairly feature-complete as-is (I think). My lack of experience with Airflow, DBT, and Snowflake is a bit of a weakness, but I'm hoping to make up for it with project work. I'm also hoping to get a more recent cloud certification than my expired AWS thang (maybe Azure).


There was a huge upswell in demand for bootcamps back when every tech company was thirsty for more data scientists, but nowadays it seems like it's largely a wasteland of hucksters and profiteers. If there's a bootcamp that you think will teach you something you need to know, then sure, proceed with caution. But under no circumstances should you go to a bootcamp under the impression that it'll give you resume clout. It won't.

A degree in computer science will probably help you understand data structures and algorithms, but there are other ways of learning those things. The resume clout thing is similar, I think - every job opening says they want some sort of CompSci diploma, but I've had loads of interviews with companies that said that, and I don't have a degree.

Honestly, I think having lots of personal projects is what really makes your application pop. I would say that many (but not all) of them should focus on ETL stuff - make a data pipeline with some combination of code, cloud, and some of the toys that every employer seems to want. If you're stuck for ideas, check out Darshil Parmar and Seattle Data Guy for starters.
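If you want a starting point for a pipeline project, here's a bare-bones extract/transform/load skeleton using only the Python standard library. The order data, column names, and cleaning rules are hypothetical, and SQLite stands in for whatever cloud warehouse a real project would load into. The load step uses `INSERT OR REPLACE` on a primary key so re-running the pipeline doesn't duplicate rows.

```python
import csv
import io
import sqlite3

# Hypothetical raw extract; a real project might pull this from an API,
# a cloud storage bucket, or a CSV drop.
RAW_CSV = """order_id,customer,amount,order_date
1001,alice,19.99,2024-05-01
1002,bob,,2024-05-01
1003,alice,5.00,2024-05-02
"""


def extract(text):
    """Extract: parse raw CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with no amount and cast fields to proper types."""
    clean = []
    for row in rows:
        if not row["amount"]:
            continue  # skip incomplete records
        clean.append(
            (
                int(row["order_id"]),
                row["customer"].title(),
                float(row["amount"]),
                row["order_date"],
            )
        )
    return clean


def load(rows, conn):
    """Load: upsert into a table keyed on order_id so reruns are idempotent."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, order_date TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
for _ in range(2):  # run twice to show the load step doesn't duplicate rows
    load(transform(extract(RAW_CSV)), conn)

row_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
totals = conn.execute(
    "SELECT customer, ROUND(SUM(amount), 2) FROM orders "
    "GROUP BY customer ORDER BY customer"
).fetchall()
print(row_count, totals)
```

Swapping the pieces out one at a time (an API for the extract, a cloud bucket or warehouse for the load, an orchestrator to schedule it) is one way to grow this into the kind of project that shows off the tools employers ask for.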


To any Spark experts whose eyeballs may be glancing at this thread: I'm applying for a job and Spark is one of the required skills, but I'm fairly rusty. They sprang an assignment on me where they wanted me to take the MovieLens dataset and calculate:

- The most common tag for a movie title, and
- The most common genre rated by a user

After lots of time on Stack Overflow and YouTube, this is the script that I came up with. At first, I had something much simpler that just did the assigned task, but I figured that I would also add comments, error checking, and unit testing, because rumor has it that this is what professionals actually do. I've tested it and know it works, but I'm wondering if it's a bit overboard. Feel free to roast.

code:
