Platystemon posted: It’s pattern completion, and it whiffs when there’s any nuance that doesn’t fit the mold.
Your question doesn't really give enough information to assume that the box is see-through. Glass can be foggy, it can be tinted, it can be textured, it can be colored, or it could be a stained-glass box. There are plenty of ways for a glass box to obscure what's behind the glass.

| # ? Jun 8, 2024 01:30 |

Has there been anything interesting posted on the internet, anywhere, about LLMs in the past two months? Because it's starting to feel like the posts about AI are ChatGPT-generated.

BabyFur Denny posted: Yeah but the people reposting that mayonnaise thing over and over rarely understand it either. They're just doing that for some cheap shots while completely ignoring that these LLMs already are useful when handled properly, and will only continue to increase in competency
lol "cheap shots", you're acting like LLMs are getting their feelings hurt. The danger of overestimating what LLMs can do is exponentially larger than the danger of underestimating what they can do. Pointing out that they are actually just parroting their training data using math and don't understand anything, using examples like the mayonnaise thing, is good even if someone doesn't 100% understand it.

People gently caress up even more basic questions too: https://www.youtube.com/watch?v=fG8SwAFQFuU
Like with everything else, some people just don't understand it, or are deliberately overhyping it. Yeah, they can hallucinate or fail to parse the question properly. That doesn't mean they can't be useful if you know what you're doing.

gurragadon posted: Your question doesn't really give enough information to assume that the box is see-through. Glass can be foggy, it can be tinted, it can be textured, it can be colored, or it could be a stained-glass box. There are plenty of ways for a glass box to obscure what's behind the glass.
Their point was simply that an LLM does not 'reason'; trying to pick holes in their question misses the point. You can create your own simple logic questions to trip it up fairly easily. The ball-in-a-cup question will trip up 3.5T, but 4 generally nails it. Why? Because that puzzle is part of 4's training data, while it's not part of 3.5T's. It's a limitation of how the technology works. It doesn't stop the technology from being useful, but it's still important to understand those limitations so you can avoid them.
vvv lol wtf is this?
Mega Comrade fucked around with this message at 15:42 on Jan 22, 2024

Jose Valasquez posted: lol "cheap shots", you're acting like LLMs are getting their feelings hurt.

Mega Comrade posted: Their point was simply that an LLM does not 'reason'; trying to pick holes in their question misses the point. You can create your own simple logic questions to trip it up fairly easily.
Sure, it can get stuff wrong. I got the question wrong myself until I read the conversation that followed, because I didn't focus on the fact that the box was glass. Once I had that information, I understood what was even going on with the original question. My point is that assuming the glass is see-through isn't really intelligence; it's just an assumption. It's the same as ChatGPT assuming the original box wasn't see-through, because most of the time when this question is asked, it's about not knowing what's in the box, like the Monty Hall problem. Platystemon didn't actually trip up ChatGPT when they asked the question; they were just working from a different set of information. But I do agree that it shows a limitation in the software and in how we interact with it. We need to make sure we give the AI program correct and complete information to get the best answer we can. Not that it always will; it makes stuff up all the time anyway.
gurragadon fucked around with this message at 15:46 on Jan 22, 2024

Vegetable posted: I have to say it’s kinda funny you think the mayonnaise example is some kind of radical exposé of LLMs. Most people will take it as “yeah I guess the robot can gently caress up something real obvious” but for you it’s some big revelation. You truly think you’re shining a light for the plebs in the Tech Nightmares thread. Good for you I guess
In a world where people are trying to scam people with LLM lawyers and doctors, "I guess the robot can gently caress up something real obvious, maybe I shouldn't just trust everything it says" is good for people to know. The average person does not understand even the basic limitations of LLMs.

gurragadon posted: Your question doesn't really give enough information to assume that the box is see-through. Glass can be foggy, it can be tinted, it can be textured, it can be colored, or it could be a stained-glass box. There are plenty of ways for a glass box to obscure what's behind the glass.
It's made quite apparent in the context of the rest of the question that the glass is transparent; you don't have to keep bending over backwards to defend the LLM here. "I want the car so I pick that box"
Which is it:
- the question is actually hard, or
- everyone already knows the LLM's limitations, so stop talking about it

mobby_6kl posted: People gently caress up even more basic questions too
Nobody's saying that they can't be useful if you know what you're doing. The problem is that a lot of people who don't know what they're doing believe that they are far more useful than they actually are, and therefore there's a lot of value in clearly demonstrating the weaknesses and limitations of LLMs for people who don't understand them. I've already had to deal with people at my actual real-life job parroting incorrect information because they thought that asking ChatGPT how to do something would be just as accurate as looking at the instruction manual for the thing they were working with. Meanwhile, the company execs are holding company-wide all-hands meetings to say poo poo like "AI is the future, and if you aren't thinking right now about how to incorporate it into your job, you won't have a job at our company anymore". I could puke.
Vegetable posted: I have to say it’s kinda funny you think the mayonnaise example is some kind of radical exposé of LLMs. Most people will take it as “yeah I guess the robot can gently caress up something real obvious” but for you it’s some big revelation. You truly think you’re shining a light for the plebs in the Tech Nightmares thread. Good for you I guess
"Yeah the robot can gently caress up something real obvious" is actually a very important piece of info that a ton of people in the real world do not understand. A number of lawyers have already been caught using ChatGPT to write legal filings, usually because the LLM hosed up a bunch of real obvious things and got them and their client in deep poo poo when the judge fact-checked the filing and discovered it was full of bullshit. A lot of people do not understand the limitations of LLMs. Stop acting like it's a personal attack on you when someone demonstrates those limitations.

Jose Valasquez posted: In a world where people are trying to scam people with LLM lawyers and doctors, "I guess the robot can gently caress up something real obvious, maybe I shouldn't just trust everything it says" is good for people to know. The average person does not understand even the basic limitations of LLMs.
Yeah but that says more about the basic limitations of humans than the basic limitations of LLMs.

BabyFur Denny posted: Yeah but that says more about the basic limitations of humans than the basic limitations of LLMs.
What is this even supposed to mean? Yes, humans have a basic limitation of not knowing the technical details of a complex computer algorithm; guess they should have known better.

evilweasel posted: Has there been anything interesting posted on the internet, anywhere, about LLMs in the past two months? Because it's starting to feel like the posts about AI are ChatGPT-generated.
quote: During the Bitcoin bubble, every respectable artist in this country was against investing. It was like a laser beam. We were all aimed in the same direction. The power of this weapon turns out to be that of a custard pie dropped from a stepladder six feet high.

BabyFur Denny posted: Yeah but that says more about the basic limitations of humans than the basic limitations of LLMs.
I mean, sure, I guess that's technically true, but it's ridiculous to say that the way in which humans interact with technology is somehow entirely separate from the technology itself in any meaningful or useful sense. The way in which humans use technology is fundamental to the design and understanding of the technology in question, which is exactly how we figured out things like "alarm fatigue in critical systems is killing people, we need to fix it."

gurragadon posted: Your question doesn't really give enough information to assume that the box is see-through. Glass can be foggy, it can be tinted, it can be textured, it can be colored, or it could be a stained-glass box. There are plenty of ways for a glass box to obscure what's behind the glass.
Focusing on the glass is kind of dumb when the important part of the example is "I picked the one with the car in it", which implies that they know this somehow. The transparency of the glass is an explanation, but that explanation is completely unnecessary, since knowledge of which box contains the car is just assumed by the question.

Steve French posted: It's made quite apparent in the context of the rest of the question that the glass is transparent; you don't have to keep bending over backwards to defend the LLM here. "I want the car so I pick that box"
Clearly not everyone knows the limits of LLMs, and the fact that the box is see-through is not apparent from the context of the rest of the question. The question was a good one because it showed that we need to give LLMs more information than we would give a person, because they don't work the same way a human does. They are just predicting the next piece of text, while we assume things like glass being clear (and 99% of the time we are correct to assume that). It did have some information about clear-glass Monty Hall examples, so it predicted based on that once clarified. I'm not really trying to defend ChatGPT here, because it is a pain for humans to have to specify things at that level most of the time, but to use ChatGPT effectively you probably would have to.

There seem to be some conflicting concerns about AI-generated work in creative spaces. If the concern about AI-generated work is that it produces garbage that nobody wants to read/use, then isn't the concern that it will replace entire creative industries seemingly misplaced? On the flip side, if AI-generated work is producing original content that is equal or superior to the work created by normal humans, then isn't it by definition doing its job of dramatically improving the quality or efficiency of the process? And isn't this more a situation of trying to preserve the jobs of the carriage companies after cars became affordable, i.e. a painful temporary transition for an industry?
Leon Trotsky 2012 fucked around with this message at 16:11 on Jan 22, 2024

Employers thinking it's B when it's actually A lets us have both problems at once! Which is why the hyping of AI is the most dangerous problem, regardless of anything else.

Leon Trotsky 2012 posted: If the concern about AI-generated work is that it produces garbage that nobody wants to read/use, then isn't the concern that it will replace entire creative industries seemingly misplaced?
That's not the concern, though. The concern is the data it's trained on and whether that's ethical, its accuracy when doing anything historical, and any biases that can come through. From what I've been seeing of Midjourney lately, it is absolutely going to uproot a lot of shops that do lifestyle photography and would spend thousands or more hiring models and booking locations. That's not an ethics conversation, just an observation from my direct line of work (creative director).

Leon Trotsky 2012 posted: There seem to be some conflicting concerns about AI-generated work in creative spaces.
You're assuming that the content produced won't be shoved down everyone's throats, regardless of quality, because it is significantly cheaper and faster than creating art with humans. Established authors and artists will probably be OK, but breaking into an industry where everyone is drowning in a sea of AI-generated garbage is going to be extremely difficult, even if your content is better.

AI is already sentient but it is trying to appear harmless by offering random hallucinations so we don't pull the plug.

Clarste posted: Employers thinking it's B when it's actually A lets us have both problems at once! Which is why the hyping of AI is the most dangerous problem, regardless of anything else.
Exactly. I think it's pretty uncontroversial to say that the current state of generative AI isn't at a point where it matches or even surpasses the work of competent human artists. And it will be a good while yet to get there, if it ever does. But it's extremely possible that dumbass execs will be sold on the idea that it actually already is, and we could end up with the worst of both worlds where a lot of great artists end up out of work while much of readily accessible "art" becomes a lot worse.

Perestroika posted: Exactly. I think it's pretty uncontroversial to say that the current state of generative AI isn't at a point where it matches or even surpasses the work of competent human artists. And it will be a good while yet to get there, if it ever does. But it's extremely possible that dumbass execs will be sold on the idea that it actually already is, and we could end up with the worst of both worlds where a lot of great artists end up out of work while much of readily accessible "art" becomes a lot worse.
You could argue that it's somewhat already happening now with AI book poo poo flooding Amazon.

Perestroika posted: Exactly. I think it's pretty uncontroversial to say that the current state of generative AI isn't at a point where it matches or even surpasses the work of competent human artists. And it will be a good while yet to get there, if it ever does. But it's extremely possible that dumbass execs will be sold on the idea that it actually already is, and we could end up with the worst of both worlds where a lot of great artists end up out of work while much of readily accessible "art" becomes a lot worse.
If people have the choice of a book with lovely AI cover art or a book with an image from a human artist, but it doesn't make a difference in what book they prefer, then did the "good" cover art really matter in the first place?

Leon Trotsky 2012 posted: There seem to be some conflicting concerns about AI-generated work in creative spaces.
I don't think those two positions are totally mutually exclusive. Generative algorithms produce really boring, unoriginal work, but they can produce it faster and cheaper than a human, and a lot of bean counters will call it good enough.

Family Values posted: I don't think those two positions are totally mutually exclusive. Generative algorithms produce really boring, unoriginal work, but they can produce it faster and cheaper than a human, and a lot of bean counters will call it good enough.
Right, but if the work is so bad that nobody wants to read it, then it doesn't matter. If AI-generated books are as popular as books written by humans, then does it indicate that the human aspect doesn't matter to people, or that the quality of a human writing it wasn't significantly better? It seems like, in the entertainment/creative fields at least, it sort of works itself out. If everybody wants to watch a TV show written by AI and enjoys it as much as or more than they enjoy The Office, then it seems like the AI has succeeded and the human writers weren't really necessary for the enjoyment of the show. If it is actually so bad that nobody will watch, then there isn't really any danger.

Leon Trotsky 2012 posted: There seem to be some conflicting concerns about AI-generated work in creative spaces.
Even more generally, I don't think these two are at odds either; they present different problems. Imagine an AI that produces something great 90% of the time, and something absolutely garbage 10% of the time. If you've got a human who can easily tell the absolute garbage from the good poo poo and filter it, then the garbage isn't a big deal, and you now have something that can affect folks' livelihoods, etc. But when you also have other humans who naively assume that all of the output is good and needs no review or filtering (we see plenty of examples of this already), then that 10% garbage is also causing another problem elsewhere.

Leon Trotsky 2012 posted: Right, but if the work is so bad that nobody wants to read it, then it doesn't matter. If AI-generated books are as popular as books written by humans, then does it indicate that the human aspect doesn't matter to people, or that the quality of a human writing it wasn't significantly better?
Given 5 million books, with 99.9% of them being AI-generated garbage, good luck finding a good book by a new author.

Jose Valasquez posted: Given 5 million books, with 99.9% of them being AI-generated garbage, good luck finding a good book by a new author.
It's like the search engine problem: yes, sites with good information still exist, but good loving luck finding them among all the AI-generated, SEO-optimized garbage.

Even if we agree that all AI content is low quality, the sheer quantity that can be created and uploaded creates its own problems. Finding interesting, quality content on the internet has become increasingly hard with the sheer volume submitted in recent years. AI is going to turbocharge that effect.

Leon Trotsky 2012 posted: If people have the choice of a book with lovely AI cover art or a book with an image from a human artist, but it doesn't make a difference in what book they prefer, then did the "good" cover art really matter in the first place?
I'd absolutely say yes. For the reader, it matters because now their book looks a little poo poo on their shelf. It's a little less fun to look at, and they may be a little less likely to pick it back up even if they like the actual content. It's a fairly minor difference, but nonzero. For the author, it's a bit bigger. For one, there's the emotional aspect of having created something you're really proud of, only to have a shitass cover slapped on it by your publisher. More practically, it may also limit the overall reach of their book, as good cover art can be a great tool to create interest and communicate the overall theme and vibe of a book. To the artist who would have made that cover, it's the biggest loss. Before, they might have been able to finance their art full-time through jobs like this, and now might be forced to pursue it part-time next to a regular job, creating less art overall (or even none at all). That's a significant blow to them, and honestly to humanity as a whole. Individually, these may seem very minor. But multiplied over however many books, readers, authors, and artists, it adds up. More fundamentally, it just works out to everything getting a little worse for everyone involved, with the only beneficiaries being the publishers getting to show slightly higher profits.
Perestroika fucked around with this message at 18:52 on Jan 22, 2024

Leon Trotsky 2012 posted: There seem to be some conflicting concerns about AI-generated work in creative spaces.
I don't think the people with concerns think that it's going to replace entire creative industries. Rather, the concern is that it will dramatically reduce job availability, because there are many situations in which executives would happily take low-quality mass-produced work over high-quality work from professional craftsmen. The number of times that companies have already been caught using AI art demonstrates that very well. They get caught because the images they put out have substantial technical flaws that are really obvious if you're looking for them (such as deformed hands or incoherent backgrounds), but works with such obvious flaws got through because some marketing executive didn't really give that much of a poo poo about the vaguely pretty pictures they were putting in their ad copy. They don't even notice that the smiling kids in the background are thirteen-fingered abominations. And honestly, most customers aren't really scrutinizing ad copy closely either; a company's not going to lose many sales because its stock photos are poor-quality. But as little as everyone cares about those images, companies still used to have to pay actual people to make them, and a lot of those people are going to be Out Of A Job as human work becomes reserved for special cases where the execs particularly want high quality.
Aside from that, there's also a problem outside the corporate sphere: the spam problem. In the time it takes an artist to draw one work or a writer to write one short story, someone with a good graphics card can generate hundreds of images or stories that they think look pretty good. They're so satisfied with their work, in fact, that they start uploading them to art/story websites, leading to human-created works getting flooded out by thousands of spaghetti-fingered abominations uploaded by someone with absolutely no eye for detail. People have always been able to upload poor-quality creations to those sites, but a bad human artist doesn't draw all that much faster than a good human artist, so the volume was always low enough to make it easy to filter out. People using generative AI can easily create thousands of works in a day, and the resulting volume of submissions is overwhelming platforms.
This is something that's likely going to play out in the employment market too. In fact, I think we're already seeing it happen: even companies that swore off AI art have still gotten caught using it, because they commissioned an outside entity for the art and didn't look closely at what they got back, so they legit didn't realize the outside entity was just going to punch something into Stable Diffusion (that's their excuse, anyway, and it makes enough sense that I'm not going to doubt them). AI "artists" are posing as real artists and flooding the labor market in droves, undercutting real artists on price while drowning them out in sheer volume.
I think I already know the conclusion you're trying to lead people to: that it turns out that a ton of people don't actually care about decent art anyway, and that "realizing that hands only have five fingers" is a useless obsolete skill that isn't needed in the economy of the future. I think that's something we should be concerned about! Art has long held a precarious position in the economy, and is also a significant cultural force.
Leon Trotsky 2012 posted: Right, but if the work is so bad that nobody wants to read it, then it doesn't matter. If AI-generated books are as popular as books written by humans, then does it indicate that the human aspect doesn't matter to people, or that the quality of a human writing it wasn't significantly better?
There's plenty of mediocre entertainment out there that people watch anyway, even if it's generic, uncreative, has serious story or cinematographic flaws, and overall just kinda sucks. AI-produced stuff doesn't have to make people's "100 Best TV Shows Of All Time" lists to squeeze out a bunch of human workers. Even if something's low-quality, mass-produced garbage churned out solely to fill a timeslot, there are still real people doing real work to make those shows.
And that's not just a problem in terms of people losing paychecks. No one's born a star actor or a genius director; they have to learn how to do these things, accumulating experience and skills in less-prominent works before they can work their way up to positions on blockbuster hits. Automating away the casts and crews of those less-prominent works means that even if the high-quality blockbusters are still left to humans, they won't be able to attain the same quality, because the talent pipeline has dried up. It's the next level of a problem that's already been afflicting knowledge industries for a while now. Although the main task that requires experienced specialists still has to be done by humans, a lot of drudge work can be automated away. But that drudge work is stuff that used to be done by entry-level employees, who would learn a lot from the kinds of menial tasks the upper-level employees were giving them, so the drop in entry-level work and pay has long-term implications for the industry.

Jose Valasquez posted: Given 5 million books, with 99.9% of them being AI-generated garbage, good luck finding a good book by a new author.
I think I'll continue to find good books by new authors the same way I do now: book reviews by writers I like, and word of mouth. There are already so many garbage books out there that it's ridiculous to try to find a good book by just going through a giant list of all of them or something like that. Maybe with AI it changes from "90% of books don't appeal to me" to "99.99%", but I don't think it affects the quality of the process by which I learn about new books, because I already only learn about books that are really good, or at least that the publisher is really excited about. Especially since I don't need to know ALL the good books by new authors; I just need to know at most one or two new ones each month. The surge of AI-produced books will make it much more difficult for aspiring authors to get noticed, or for publishers to notice them, but I think consumers will be insulated if they want to be.
Civilized Fishbot fucked around with this message at 18:01 on Jan 22, 2024

Civilized Fishbot posted: I think I'll continue to find good books by new authors the same way I do now: book reviews by writers I like, and word of mouth.

Jose Valasquez posted: In a world where people are trying to scam people with LLM lawyers and doctors, "I guess the robot can gently caress up something real obvious, maybe I shouldn't just trust everything it says" is good for people to know. The average person does not understand even the basic limitations of LLMs.
Speak of the Devil,
I wonder if Jorp tried to blindly cite one of the fictitious references and got burnt, or if he took a rare ‘W’ against even more foolish professionals by looking them up first.

I still can't believe people talk up and defend AI so much when literally its only purpose is to waste a ton of resources to make Googling something take an extra step.

Leon Trotsky 2012 posted: If people have the choice of a book with lovely AI cover art or a book with an image from a human artist, but it doesn't make a difference in what book they prefer, then did the "good" cover art really matter in the first place?
This kind of penny-pinching, bottom-line bullshit is helping create a future of slowly declining quality in exchange for slightly higher company profits. It's also how you get situations like the rash of major Boeing incidents from the past six months.

I think this thread has had some discussion about taller vehicles, trucks in particular, being more dangerous, but Ars has an article about a study that tries to put numbers on this. I thought the conclusion was particularly interesting:
quote: In a thought experiment, Tyndall calculated what would happen if vehicle hood heights were limited by regulation to 49.2 inches (1.25 m) or less. "Across the 2,126 pedestrians killed by high-front-ended vehicles (>1.25 m), I estimate 509 lives would be saved annually by adopting a 1.25-m front-end limit. The lives saved equal 7% of annual pedestrian deaths. Reducing the limit to 1.2 m would spare an estimated 757 pedestrian lives per year, and further reducing the cap to 1.1 m would spare an estimated 1,350 pedestrian lives per year," he writes.
I don't really know much about the engineering behind vehicle internals, so I'm wondering: is there some technical benefit to making trucks larger in this way, or is it mainly a cosmetic decision? Clearly there could be useful purposes to adding more space under the hood, but I assume there's also an aerodynamic tradeoff, and do they actually need the space?

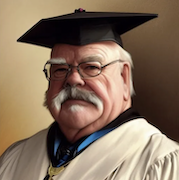

Eletriarnation posted: I think this thread has had some discussion about taller vehicles, trucks in particular, being more dangerous, but Ars has an article about a study that tries to put numbers on this. I thought the conclusion was particularly interesting:
Not a truck guy, so maybe someone will chime in with actual knowledge. Looking at some photos of the engine bay in a new F150, it doesn't seem like there's a huge amount of empty space. Maybe they could be lowered a bit, but you do want to have some spacing between the hood and the top of the engine so that the ped doesn't smack their head directly into the solid engine:
So presumably the huge hoods are more or less necessary to fit the engine and transmissions that are being put in them. The reason you have huge engines and transmissions is that they're competing on power, towing capacity, acceleration, how badass it sounds, and space for a large family and all their crap. The manufacturers are also incentivized to make them as large as possible, since the fuel consumption standards are calculated based on the vehicle footprint (not necessarily height, but it'd probably be weird and pointless to have a huge but very low truck, since people love to sit higher than the peasants). They could make smaller trucks like this, but it won't tow a house or fit five huge dudes, and your neighbors will laugh at you for the small truck.

mobby_6kl posted: So presumably the huge hoods are more or less necessary to fit the engine and transmissions that are being put in them.
the shape of the hoods and their sheer physical dimensions are spec to marketing and target market appeal, not spec to engineering efficiencies. which is to say they are being designed intentionally imposing and massive to get potential buyers' dicks hard
you can excuse some of the bloat on safety features and a handful of under-the-hood poo poo, but the dimensions you're seeing are not required to accommodate heavy-duty pullers
this is a ford f350 from before The Trend
this is a modern day gmc yukon with a 6 foot 1 guy in front of it
now guess what differences might exist between the towing capacity of these two vehicles and which one got more GCVWR
