I would also like such a thread, particularly since I bit the bullet and started learning the machine learning/data science thing recently and want to at least be informed about the stuff that branches off from it.

| # ? Jun 1, 2024 11:54 |

Peel posted: I would also like such a thread, particularly since I bit the bullet and started learning the machine learning/data science thing recently and want to at least be informed about the stuff that branches off from it.

Don't forget to advertise it on LW and SSC.

SolTerrasa posted: AI is lazy as hell, by design (if you try to make it unlazy it will do worse things, ask me later if you want to know why).

Ditto and especially curious about this!

SolTerrasa posted: AI is lazy as hell, by design (if you try to make it unlazy it will do worse things, ask me later if you want to know why).
Culinary Bears posted: Ditto and especially curious about this!

As you know from the gospel of the Heretic Roko (who is still hanging around these great ideas that totally aren't a memetic hazard for people like him. Saw a great Facebook thread where the LW social sphere and the UK perfectly normal leftist sphere crossed, and his understanding of interaction with women was at a, how do I put this, all-set-to-take-the-Red-Pill level. The human females in the thread let loose on him. One contacted me asking "is he THAT Roko?" and I had to confess he indeed was. I asked that she not needle him on basilisks; he'd given plenty of other targets by then, after all.)

AIs are inherently malevolent because machines can't understand love, so laziness stops them trying to wipe out humanity.

divabot posted: Saw a great Facebook thread where the LW social sphere and the UK perfectly normal leftist sphere crossed,

Not gonna lie, kind of curious about this too.

Ichabod Sexbeast posted: Not gonna lie, kind of curious about this too

Just social spheres colliding. It turns out socialist-leaning British people and Bay Area libertarians have amusing interactions.

divabot posted:Just social spheres colliding. It turns out socialist-leaning British people and Bay Area libertarians have amusing interactions.

I am reminded of the time the world's crustiest tankie and the world's glibbest fascist had a head-to-head:
https://twitter.com/philgreaves01/status/629606757854154752
https://twitter.com/PhilGreaves01/status/629607432948285440
https://twitter.com/FuturistGirl/status/629609471271014400
https://twitter.com/FuturistGirl/status/629608936870535169
https://twitter.com/PhilGreaves01/status/629611105032146944

Sure, here goes. The reason you make your AI lazy is not because otherwise it will take over the world. Once you know the math, AI loses all its mystery and mysticism.

In my experience, most large modern commercial AI systems have a bit in them that's just pure machine learning, which tends to have a huge number of finicky parameters. Way too many to set by hand. When you create an AI system, during training you try to select these parameters so that the output of the whole system matches a set of examples you provide, like I described earlier. There are a lot of ways to do this. For example, some training methodologies just let the system modify itself at random, keep only the "good" modifications, and iterate until it's good enough. Another method imposes more structure: calculate the derivative of "correctness" with respect to each parameter and bump them all in the "more correct" direction. But if that's all you do, your AI system will work perfectly in tests, then totally fall over when you try to use it for real.

One time I was making a system that was supposed to play a really simplified discrete RTS game, like a really straightforward Age of Empires or Starcraft. I skipped this bit about making my AI "lazy". I had a handful of simple example enemies programmed in: one where they start on opposite sides of the map with equal resources, and the enemy just builds as fast as possible to get to a relatively powerful unit, then builds them nonstop. And then another one where they start very close to each other with only one peasant, and the enemy runs away to go claim the desirable part of the board. And so on, about 5 examples, in increasing order of what I thought of as complexity. The hardest one was a turtle enemy with strong defenses against the AI's one peasant but lots of resources. So I set that running, spitting out "(0/5 scenarios won, X time steps elapsed in Y seconds real time)...", and leave it alone for a while.
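For anyone following along from the machine learning side, here is a minimal sketch of the two training approaches described above, on a made-up two-parameter problem (fitting y = 2x + 1). The data, step sizes, and function names are all assumptions for illustration, not anything from the system in the post: `random_search` keeps only random tweaks that lower the error, and `gradient_descent` nudges each parameter against the derivative of the error.

```python
import random

# Made-up "examples you provide": the system should learn y = 2x + 1.
DATA = [(x, 2 * x + 1) for x in range(-5, 6)]


def loss(w, b):
    """Mean squared error of the model y = w*x + b; lower means 'more correct'."""
    return sum((w * x + b - y) ** 2 for x, y in DATA) / len(DATA)


def random_search(steps=5000, scale=0.1, seed=0):
    """Modify the parameters at random and keep only the modifications that help."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    best = loss(w, b)
    for _ in range(steps):
        cand_w = w + rng.gauss(0, scale)
        cand_b = b + rng.gauss(0, scale)
        cand = loss(cand_w, cand_b)
        if cand < best:  # keep only the "good" modifications
            w, b, best = cand_w, cand_b, cand
    return w, b


def gradient_descent(steps=2000, lr=0.01):
    """Compute d(loss)/d(parameter) for each parameter and step 'downhill'."""
    w, b = 0.0, 0.0
    n = len(DATA)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in DATA) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in DATA) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


print(random_search())
print(gradient_descent())
```

Both end up near w = 2, b = 1 here; the gradient version gets there in far fewer, more directed steps because it knows which way is "more correct" for every parameter at once.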
I come back to it a day later or so and it's looking good! 4/5 won; it never beat the 5th one, but still, pretty good. I watch a replay of one of these matches in a visualizer and it looks good. Unconventional solve, but that's to be expected with a new game and an AI unused to normal RTS games. So I fire up a human v AI game to see if I've trained a master Starcraft player, and... Well, I gave an order to a peasant, and then the AI didn't do anything. It didn't even randomly move its own peasant or go mine a useless resource or anything. I was surprised. I won in like a minute, and then I started trying to figure out what went wrong. I checked the games against the preprogrammed enemies, and it still worked there.

When I finally figured it out I was annoyed. What happened was, the AI found an easy-win button for 4 of 5 scenarios. In one, it found out that if you attack enemy peasants with your peasants, the preprogrammed enemy didn't respond. In another, it invented a peculiar rush strategy where it'd build a constant stream of entry-level ranged units, since the AI enemy didn't build any ranged defenses. They all died, but they did a little damage and I never programmed that enemy to repair anything. Anyway, the important thing was, it only learned how to deal with exactly the one set of preprogrammed responses in exactly those four scenarios. Move one peasant's starting position by one square and the AI would have no idea what to do; it'd have to relearn from scratch.

Because of all this memorization, the AI was *huge*. The action-value function had a nonzero term for not just most spaces on the board times most types of unit, but for a startling swath of the entire board state-space. A few more scenarios or a marginally larger board or a few more unit types, and I would have run out of RAM trying to train this thing (and now that I think about it, it must have been swapping to disk).
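Here is a hypothetical toy version of that failure, assuming a 1-D board and made-up scenarios rather than the real game: a lookup table that memorizes the right move for each exact training state works perfectly on those states and has nothing to say once a peasant moves one square. A one-line general rule (written by hand here, and in this toy also the correct answer) handles every state. The table would need an entry for every state it might ever see, which is why the real thing blew up in memory.

```python
# Hypothetical toy, not the original RTS: a 1-D board of BOARD_SIZE squares.
# A state is (my_peasant_square, enemy_square); an action is -1 (left) or +1 (right).
BOARD_SIZE = 10


def general_rule_act(me, enemy):
    """One short, general rule: step away from the enemy.
    In this toy it is also the correct answer, so it labels the training data."""
    return -1 if enemy > me else 1


# A handful of exact training states, standing in for the preprogrammed scenarios.
TRAIN = [(2, 5), (7, 4), (1, 3), (8, 9)]


def train_memorizer(states):
    """'Learn' by storing the right action for each exact state seen in training."""
    return {state: general_rule_act(*state) for state in states}


def memorizer_act(table, me, enemy):
    """Look up the exact state; returns None when the state was never seen."""
    return table.get((me, enemy))


table = train_memorizer(TRAIN)
print(memorizer_act(table, 2, 5))  # -1: this exact state was in training
print(memorizer_act(table, 3, 5))  # None: peasant moved one square, no idea what to do
print(general_rule_act(3, 5))      # -1: the general rule still works
print(len(table), "memorized states out of", BOARD_SIZE ** 2, "possible")
```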
It could never have played adequately against a human, since humans don't use exactly the same moves every single time. This dumb mistake is a special case of what's called "overfitting". The solution is a special case of what's called "regularization", where you penalize the AI for getting too big and smart.

So when your system is futzing around randomly trying to learn good parameters for the massive fiddly machine learning pieces, you need to also enforce that it never gets too complicated. Rules like "if there's a peasant on square 1,4 with 3/5 health and also there's a militiaman on square 2,7 with 7/15 health then move the peasant to square 1,3" need to be harder to learn, more expensive, than the more expressive, more general rules like "always move peasants away from militiamen". You (very generally) want your AI system to learn the fewest, simplest, and most generic rules possible. But if you don't apply pressure in that direction, it'll often end up just memorizing the inputs and being completely useless as a generalized solution.

So you make your AI lazy. You constrain its resources, or subtract points for complicated or large solutions, or explicitly ban it from making decisions based on things that are too specific, or remove neurons or features completely at random, or something.
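A minimal sketch of two of those "make it lazy" tricks, on made-up data: an L2 penalty (often called weight decay) that adds lam * sum(w_i^2) to the loss, and inverted dropout, which zeroes values at random during training. With more parameters than examples, the unpenalized fit memorizes the targets exactly, while the penalized one settles on smaller weights. The data, names, and hyperparameters are assumptions, not anything from the system described above.

```python
import random

# Hypothetical data: 3 examples, 6 features each. With more parameters than
# examples, an unconstrained model can fit (memorize) every target exactly.
X = [
    [1.0, 0.5, -0.3, 0.8, 0.0, 0.2],
    [0.2, -1.0, 0.4, 0.1, 0.9, -0.5],
    [-0.7, 0.3, 1.0, -0.2, 0.4, 0.6],
]
Y = [1.0, -0.5, 0.3]


def predict(w, row):
    return sum(wi * xi for wi, xi in zip(w, row))


def train(lam, steps=2000, lr=0.05):
    """Gradient descent on: mean squared error + lam * sum(w_i ** 2).

    lam = 0 is plain fitting; lam > 0 is L2 regularization ("weight decay"),
    which charges the model for every bit of weight it leans on.
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(steps):
        errs = [predict(w, row) - y for row, y in zip(X, Y)]
        grad = [
            2 * sum(e * row[j] for e, row in zip(errs, X)) / n + 2 * lam * w[j]
            for j in range(d)
        ]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


def dropout(values, p, rng):
    """Inverted dropout (training time only): zero each value with probability p
    and scale survivors by 1 / (1 - p) so the expected value is unchanged."""
    return [0.0 if rng.random() < p else v / (1 - p) for v in values]


def sq_norm(w):
    return sum(v * v for v in w)


plain = train(lam=0.0)
lazy = train(lam=0.1)
print(sq_norm(plain), sq_norm(lazy))  # the penalized weights are smaller
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=random.Random(0)))
```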

The Vosgian Beast posted: I am reminded of the time the world's crustiest tankie and the world's glibbest fascist had a head-to-head

"If it ain't interesting it ain't radical" -an academic

SolTerrasa posted: Sure, here goes.

So how are cameras racist? Are you going for something like they can't capture contrasts on dark objects as well as human eyes or something? (See also, any photos of people wearing black)

Osama Dozen-Dongs posted: So how are cameras racist? Are you going for something like they can't capture contrasts on dark objects as well as human eyes or something? (See also, any photos of people wearing black)

The teams that make poo poo like that use themselves to calibrate it and are all-white most of the time. They never thought to test a nonwhite face because they don't think about faces that aren't white. That's racism, ergo the machine replicates that racism.

Osama Dozen-Dongs posted: So how are cameras racist? Are you going for something like they can't capture contrasts on dark objects as well as human eyes or something? (See also, any photos of people wearing black)

https://priceonomics.com/how-photography-was-optimized-for-white-skin/

Other articles on computer racism:
https://www.fordfoundation.org/ideas/equals-change-blog/posts/can-computers-be-racist-big-data-inequality-and-discrimination/
http://www.npr.org/sections/alltechconsidered/2016/03/15/470422089/can-computer-programs-be-racist-and-sexist

The Harvard study is kinda hilarious since it seems the people involved have no idea how AdWords ads are bought and set up. The bias in this case was likely entirely human as a PPC manager from Instant Checkmate LLC thought it would be a great idea to bid against a list of stereotypical African-American names. That said, advertising algorithms in general are most likely also falling into the same traps as other automated systems.

Osama Dozen-Dongs posted: So how are cameras racist? Are you going for something like they can't capture contrasts on dark objects as well as human eyes or something? (See also, any photos of people wearing black)

One of the most important things about AI photo analysis is that you usually don't know what it's using, or how it's using it, to make its decisions. You basically set up a bunch of tiny scanners that look at a random scatter of individual pixels all over the image, then a bunch of scanner-scanners, and on up for several layers. These are set up to run on a training set, know whether they got it right or wrong, and gradually adjust themselves and how much weight they put on certain pixels and values until they are right enough. It will usually go for the easiest and most obvious thing it can. If you train one on exclusively white faces for positive and things which are not faces for negative, it may well learn that "big slab of pink" is one of the defining features of a "face". This leads to a racial bias.

The thing is, all AI will have failure rates. They need to be able to be wrong in order to be flexible enough to have any chance of being right on data they haven't seen yet. An AI's training set needs enough variation for it to learn that "big slab of pink" is a worse predictor than "big slab of red-spectrum colour of any shade."
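A deliberately crude illustration of the "big slab of pink" problem, swapping the layered network described above for 1-nearest-neighbour on each image's average colour (a plainly different, much simpler technique): with only light-skinned faces among the positives, a dark-skinned face lands closer to "dark asphalt" than to any face; add some darker faces to the training set and the same classifier gets it right. All colours and labels are made-up assumptions.

```python
# Crude stand-in for a face detector: each "image" is reduced to its average
# (R, G, B) colour and classified by 1-nearest-neighbour. All values are made up.
def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(train, colour):
    """Return the label of the closest training example."""
    _, label = min(train, key=lambda example: sq_dist(example[0], colour))
    return label


NOT_FACES = [((100, 150, 255), False),  # sky
             ((60, 160, 60), False),    # grass
             ((50, 50, 50), False)]     # dark asphalt
LIGHT_FACES = [((255, 220, 200), True), ((240, 200, 180), True), ((250, 210, 190), True)]
DARKER_FACES = [((120, 80, 60), True), ((80, 55, 40), True)]

narrow_training = LIGHT_FACES + NOT_FACES                 # "face" ~ "big slab of pink"
diverse_training = LIGHT_FACES + DARKER_FACES + NOT_FACES

dark_skinned_face = (90, 60, 45)
print(classify(narrow_training, dark_skinned_face))   # False
print(classify(diverse_training, dark_skinned_face))  # True
```

Nothing about the classifier changed between the two runs; only the training set did.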

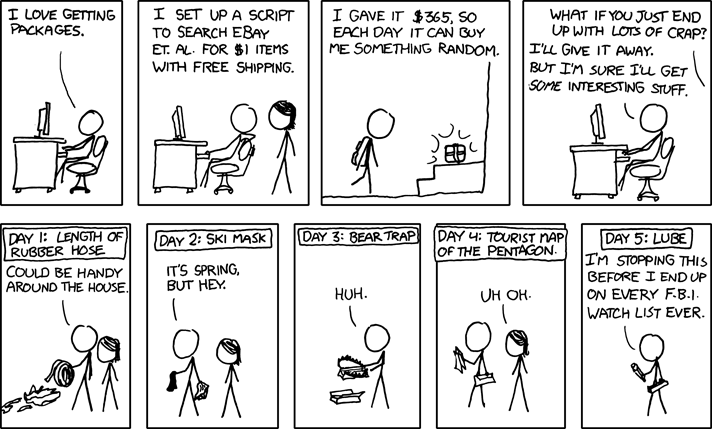

It seems that everyone finds it hilarious and/or sad when artificial "intelligence" makes mistakes... but if the mistake happens to confirm a common prejudice, suddenly some people jump to defend the machine as perfectly rational and unbiased, and ask why you would program PC prejudices into it. Except, of course, we always program prejudices into our machines.

A recent example I've found: remember this XKCD strip? Admit it, it's a beautiful idea. The machine makes your day weirder by introducing you, for cheap, to things you'd never have looked up for yourself. It sounds so good that some people made a business of it. Except, I want you to try it for yourself. Go ahead, go on eBay and search for $1 items with free shipping. Notice anything? See how "$1 items with free shipping" gets you an endless stream of utterly worthless garbage?

Now, this isn't a machine learning issue, but it is a perfect example of the same lack of imagination that makes you choose a biased training set. There's an unwritten assumption that "$1 items" are going to be interesting or useful or cool, because the author's mental image of eBay is of a place with interesting, useful or cool stuff, because he went on eBay specifically to look for interesting, useful, cool stuff, and so he never saw the boring, pointless and overpriced crap that makes up the majority of the stuff for sale. The aforementioned business? They know this, and they try filtering and categorizing, but they still end up mailing people mostly crap, as people on these very forums found out. Some people suspect that eBay sellers specifically set up overpriced-but-cheap poo poo items to be bought by bots, which isn't far-fetched at all, because one of the biases that people inevitably program into their systems is that people will play along and not try to gently caress with them. You know how a lot of people trust Uber and Uber drivers more than taxi cabs? Rumor has it that Uber drivers coordinate to go offline during big events, and then go back online at the same time, to manufacture scarcity and trigger Uber's surge pricing algorithm.

None of this is new or a surprise; we always have a good laugh at how dumb machines are when it happens, but add emotional baggage and tribalism and suddenly everyone forgets and makes excuses for "the cold equations". "Maybe it's because black people really are harder to see", "maybe East Asians really have eyes so narrow they might as well be closed", "maybe black people really do look like gorillas" etc., and I want to ask: loving really? When in your entire life have you ever had difficulty making out the facial features of a black person, much less mistaken them for another species? If we all were dark-skinned we wouldn't even be having this discussion, it would be a given that the insurmountable issues with shooting

Osama Dozen-Dongs posted: So how are cameras racist? Are you going for something like they can't capture contrasts on dark objects as well as human eyes or something? (See also, any photos of people wearing black)

Those things are probably also true, but I was talking about three other pieces: scene metering, color correction, and autofocus. This part is outside my area of direct expertise, so I'll be brief. People have probably heard this before.

Scene metering: most scene metering tries to make the average brightness someplace around 18% grey. Almost anything other than manual mode on a nice camera is going to try to do this. Why 18%? It looks good, and it's what they've always done. Originally I'm sure it was selected on the basis of some experiment or other, but it has the effect of dramatically underexposing photos of people with dark skin.

Color correction: not as serious a problem anymore now that we have digital photography, but it does mean that you end up needing to do post-processing on photos of people with dark skin where you don't really have to do anything for photos of people with light skin. During the film era it was really insane: photo labs were literally calibrated using a stock image of a white woman in a black dress, and that was that.

Autofocus: autofocus usually looks for contrast, or if you have a nicer camera it looks for faces, and it looks for faces using old-school computer vision techniques which come down to "distance between the eyes" and "shape of cheekbones" and a bunch of other stuff, all of which were tuned for white people. If you're talking about contrast-based autofocus, then it'll usually find less contrast between a dark-skinned person and their background.
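A back-of-the-envelope sketch of the metering and contrast-autofocus points, using made-up linear luminance values (0 to 1) and a plain average meter (real cameras use their own weighted metering and focus algorithms, so treat this as an assumption-laden illustration): average metering scales exposure so the scene mean hits 18% grey, so a dark face in front of a bright background gets pushed well over two stops below mid-grey. The focus metric here, sum of squared differences between neighbouring pixels, is one simple contrast measure chosen for illustration.

```python
import math

# Made-up linear luminance values on a 0..1 scale.
MID_GREY = 0.18


def average_meter_gain(pixels):
    """Simple average metering: scale exposure so the mean luminance hits 18% grey."""
    return MID_GREY / (sum(pixels) / len(pixels))


def focus_signal(row):
    """Contrast-detect AF metric: sum of squared differences between neighbouring
    pixels. The camera moves the lens toward the position that maximizes it."""
    return sum((b - a) ** 2 for a, b in zip(row, row[1:]))


# Frame: 80% bright background (0.6), 20% dark-skinned face (0.1).
scene_dark_face = [0.6] * 8 + [0.1] * 2
face_after = average_meter_gain(scene_dark_face) * 0.1
print(round(face_after, 3))                        # 0.036
print(round(math.log2(MID_GREY / face_after), 2))  # 2.32 stops below mid-grey

# Same dark background behind two different faces: less contrast, weaker AF signal.
background = 0.08
print(focus_signal([background] * 5 + [0.10] * 5))  # small
print(focus_signal([background] * 5 + [0.35] * 5))  # much larger
```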

SolTerrasa posted: photo labs were literally calibrated using a stock image of a white woman in a black dress

and literally labeled NORMAL

And don't forget

It should be an uncontroversial statement that the perception of skin color is pretty biased.

https://twitter.com/Pastysugardaddy/status/776176784463237120

Sometimes I think Matt Forney wants people to make fun of his appearance.

hackbunny posted: and literally labeled NORMAL

I know we're only looking at six of the photos, but I think the fact that they're all attractive should be noted.

gently caress. gently caress. I'm hip! I'm woke! I'm wary of the powers that be! How did I reach my mid-twenties without noticing this? The privilege is real and it is loving insidious.

Probably cause you had a cool mom who bought bandaids in fire engine red.

Terrible Opinions posted: Probably cause you had a cool mom who bought bandaids in fire engine red.

Space Jam Jam'd-Aids, actually. Marvin the Martian always made my knee scrapes heal up fastest.

I never even realised that band-aids were supposed to be approximating white skin colour.

The Vosgian Beast posted: https://twitter.com/Pastysugardaddy/status/776176784463237120
Sometimes I think Matt Forney wants people to make fun of his appearance

I do love how commonly the "0/10 would not bang" types tend to look like God was drunk when arranging their features.

Somfin posted: gently caress. gently caress. I'm hip! I'm woke! I'm wary of the powers that be! How did I reach my mid twenties without noticing this?

You kid, but if you image search for "skin colored bandaid" nowadays, most of the results are of either bandaids available in several skin tones, or a new experimental bandaid that slowly becomes invisible as it picks up your skin color (I wonder if it actually works that well). It was hard to find a candid photo of a "white" bandaid on a black person; the idea has gone mainstream. It wasn't always like this.

MizPiz posted: I know we're only looking at six of the photos, but I think the fact they're all attractive should be noted.

You have to go to extra lengths to hire an "unattractive" model. Even then, they'll likely be "Hollywood ugly", i.e. unusual or older rather than actually ugly.

hackbunny posted: You have to go to extra lengths to hire an "unattractive" model. Even then, they'll likely be "hollywood ugly" i.e. unusual or older rather than actually ugly

I just figured they were essentially using whatever photos they could find. The bottom one looks more like a personal portrait than a professionally shot photo. I would like to know how they chose the photos and see the men's photos, just to get a comparison.

MizPiz posted: I just figured they were essentially using whatever photos they could find. Bottom one looks more like a personal portrait than professionally shot photo. Would like to know how they chose the photos and see the men's photos just to get a comparison.

No, Kodak hired models for grueling multi-day sessions of staring unblinkingly at the camera while massive studio lights were pointed right at them. Models would be given days off to rest their eyes. Kodak no longer amounts to much, but back then they were the color film manufacturer: if they didn't make the stock photos, nobody else could, because they held a monopoly, which makes the unfortunate way their film captured black skin even more egregious. I wasn't even kidding about chocolate and walnut furniture: they apparently only made film that could capture a high range of browns after their commercial clients complained they couldn't photograph dark chocolate and dark wood right. Kodak introduced this new film by obliquely stating that it could be used to take photos of black horses in low light. Seriously, read the first article linked by InediblePenguin.

hackbunny posted:You kid, but if you image search for "skin colored bandaid", nowadays, most of the result are of either bandaids available in several skin tones, or a new experimental bandaid that slowly becomes invisible as it picks up your skin color (I wonder if it actually works that good). It was hard to find a candid photo of a "white" bandaid on a black person, the idea has gone mainstream. It wasn't always like this

Terrible Opinions posted: Honestly isn't the second one kinda redundant given that we have transparent bandaids already.

Many of the pads are still colored to approximate white skin, sadly enough.

hackbunny posted: You have to go to extra lengths to hire an "unattractive" model. Even then, they'll likely be "hollywood ugly" i.e. unusual or older rather than actually ugly

Jesus, just look at all these ugmos!

Phil Sandifer posted:List of Things That Have Happened Since I Finished Neoreaction A Basilisk

I... What. How did they get MORE WEIRD?

Are they talking about Pepe the Frog?

Parallel Paraplegic posted: Are they talking about Pepe the Frog

But frogs are amphibians.

Parallel Paraplegic posted: Are they talking about Pepe the Frog

NRx have started pretending Pepe is an avatar of this obscure Egyptian god: https://en.wikipedia.org/wiki/Kuk_(mythology)

praise great KEK, who was Pepe, who will be the Machine God of the Singularity. send money now or be tortured eternally... for the lulz.

They are talking about Kek, the actual god of /pol/. Noted by Nick Land in April, so this is quality Dark Enlightenment discourse from a primary figure with philosophical heft, not yer usual alt-right meme stew or even run-of-the-mill neoreactionary crayon-in-fine-print. Of course I first heard of it on Monday, so it'll be over by Friday. Nick Land is not entirely pleased with the scary lumpen idiots he's actually attracted, but he did literally ask to slum with these fuckwits, so sucks to be him.

Nick Land: And yea, capitalism shall break its mere human bonds and evolve beyond mere humanity
alt-right: [confederate furries]
Nick Land: Note to self, the brown acid is not so good

http://philsandifer.tumblr.com/post/150424766326/holy-poo poo-im-living-in-a-surreal-novel-a
Phil Sandifer posted: Anonymous asked: Holy poo poo, I'm living in a surreal novel. A Tayposter just got the 88888888 GET on /pol/ and a lot of people are interpreting it as a command from Kek to build an AI.

I'm going to assume that in a year, this will be one of those things they'll all have quietly agreed not to talk about. Like when they all became convinced they were Neanderthals and went on about Thals being oppressed by Cro-Mags.

Alt-Right tries to reach out to some YouTubers of various ethnicities in a HUGE 4 hour video

I present to you all the strangest YouTube video, one that will make you feel like you took a manure bath.
