|

GreenNight posted:What's wrong with the VMware console? I mean it's no ConsoleOne.

|

|

|

|

|

| # ? May 14, 2024 23:23 |

|

It's just a pain in the rear end to use and has had some stupid bugs that have persisted through multiple versions, like the one that prevents you from bringing the console into focus after it has been running for a while, forcing you to close and reopen the console. Or the times when you try to connect an ISO from the local machine and it hangs until you completely close vSphere and relaunch it. poo poo like that. People give Hyper-V a lot of poo poo but the console experience is a million times better there. And to be clear, I'm talking specifically about the guest console. VMware obviously does a lot of things really well, but interacting with the OS of a guest isn't one of them.

|

|

|

|

Yeah that's true. You need to use the thick console to run update manager. I don't ever use the console to get to a vm though. I have everything in an rdp manager.

|

|

|

|

There are always times when you'll need to use it, namely during builds, before you IP a server, when you interact with guests that are on a heavily monitored network that has a hard deny on RDP, when there's a problem with remote accessibility, etc.

|

|

|

|

TheFace posted:I was mostly joking. And I forgot I was talking during the whole cloud conversation so people would assume I meant "put all storage in the cloud". Let me talk about the kind of problem that necessitates a storage engineer. We had this big IBM SONAS, which is a high-performance GPFS-based NAS platform that no one should have ever bought. It was connected over NFS to an HPC cluster with about 1600 cores and 16 TB of RAM, a handful of standalone servers, and a campus full of scientists accessing the thing over SMB/CIFS. Snapshots weren't replicating correctly between one unit and the backup in our DR site, so we applied a vendor-supplied patch. About a week later, we noticed that NFS performance on the thing was tanking periodically and driving up insane load on the frontends. This was backing up the whole cluster and utterly loving things up for the scientists who needed the cluster to do critical research. Performance metrics provided by the device's management GUI weren't very effective in identifying the problem, so our storage engineer reverse-engineered the interface between the systems and the performance collector feeding the management interface. We also orchestrated all of our client nodes with a custom collector that polled the NFS statistics for every mount on every client every 10 seconds and forwarded them into Graphite, generating about 4000 IOPS of constant metrics load. We inspected and correlated the latency of various operations and found that periodically, the number of file lock operations to one specific lab's file shares spiked when they ran a certain genomics batch job and the system ground to a halt. Our storage engineer dug through the provided binaries for the system update and diffed configs and found that the new version enabled opportunistic locking for CIFS without documenting it, which utterly hosed the NFS file locking and caused our performance issues. 
Good luck finding that poo poo with a generalist spending 10% of their day on storage. Vulture Culture fucked around with this message at 05:26 on Sep 14, 2017 |
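For anyone wondering what a per-client NFS collector like the one described above might look like, here is a minimal sketch. It is not the collector from the post: it assumes Linux clients that expose per-mount counters in `/proc/self/mountstats` and a Graphite carbon listener speaking the plaintext protocol on TCP 2003. The metric naming scheme, the `graphite.example.org` host, and the sample data are all made up for illustration.

```python
import socket
import time

# Made-up excerpt in the shape of /proc/self/mountstats (heavily trimmed).
SAMPLE = """\
device fs01:/labs mounted on /mnt/labs with fstype nfs4 statvers=1.1
\tper-op statistics
\t        READ: 200 200 0 40000 8000000 10 400 420
\t        LOCK: 50 50 0 9000 6000 5 2500 2600
"""


def parse_mountstats(text):
    """Yield (mount_point, op, ops, avg_rtt_ms) for every NFS per-op line.

    Counters are cumulative since mount, so the average is a lifetime
    average; a real collector would diff successive samples instead.
    """
    mount, in_ops = None, False
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "device":
            # device <src> mounted on <mount> with fstype <type> ...
            is_nfs = len(fields) >= 8 and fields[7].startswith("nfs")
            mount, in_ops = (fields[4] if is_nfs else None), False
        elif fields[:2] == ["per-op", "statistics"]:
            in_ops = True
        elif mount and in_ops and fields[0].endswith(":") and len(fields) >= 9:
            # OP: ops trans timeouts bytes_sent bytes_recv queue_ms rtt_ms exec_ms
            ops, rtt_ms = int(fields[1]), int(fields[7])
            yield mount, fields[0].rstrip(":"), ops, (rtt_ms / ops if ops else 0.0)


def graphite_lines(host, text, now):
    """Render samples as Graphite plaintext lines: '<path> <value> <timestamp>'."""
    for mount, op, ops, avg_rtt in parse_mountstats(text):
        base = f"nfs.{host}.{mount.strip('/').replace('/', '_')}.{op}"
        yield f"{base}.ops {ops} {now}"
        yield f"{base}.avg_rtt_ms {avg_rtt:.2f} {now}"


def send(lines, addr=("graphite.example.org", 2003)):
    """Ship a batch of plaintext lines to carbon (hypothetical address)."""
    payload = "".join(line + "\n" for line in lines).encode()
    with socket.create_connection(addr, timeout=5) as sock:
        sock.sendall(payload)


def run_forever(host, interval=10):
    """Poll every `interval` seconds, matching the cadence in the post."""
    while True:
        with open("/proc/self/mountstats") as f:
            send(graphite_lines(host, f.read(), int(time.time())))
        time.sleep(interval)


lines = list(graphite_lines("node01", SAMPLE, 1000))
print("\n".join(lines))
```

With per-op series like these in Graphite, a spike in lock operations on one mount lining up with a latency spike is the kind of correlation the post describes. Whether lock traffic shows up in these counters depends on the NFS version in use, so treat the `LOCK` row as illustrative.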

|

|

|

Thanks Ants posted:If the hardware that your VMware VM is running on fucks itself up, your VM will boot up somewhere else. In AWS/Azure/Google Cloud you will wake up to a notification unless you've done the work yourself to handle deployment of a new VM, loading a configuration etc. VMware will only restart a VM (or VMs) in the event of host hardware failure if you have the appropriate licensing and have configured your setup correctly (Cluster, HA, Fault Tolerance, or whatever). It's easier to enable this feature on VMware than on most cloud platforms, but there isn't something magical about it. You could probably pay the equivalent of the VMware licensing costs for a product that plugs into AWS and accomplishes the same thing if you don't want to do it yourself. This is really due to the different mindset of VMware product development vs AWS product development, with different target markets and different end goals.

|

|

|

|

jaegerx posted:Really. Did you just call yourself the clam. Is that somehow surprising to you? Because it shouldn't be.

|

|

|

|

Just a thanks to whoever posted the BeyondCorp stuff (https://cloud.google.com/beyondcorp/), I've been reading it most of the morning and it's really interesting. Not something I expect to come across any time soon unfortunately but really good to get an idea of what it's about.

|

|

|

|

Wrath of the Bitch King posted:There are always times when you'll need to use it, namely during builds, before you IP a server, when you interact with guests that are on a heavily monitored network that has a hard deny on RDP, when there's a problem with remote accessibility, etc. Yeah I know. I'm the only one who accesses our ESX infrastructure here.

|

|

|

|

Thanks Ants posted:Just a thanks to whoever posted the BeyondCorp stuff (https://cloud.google.com/beyondcorp/), I've been reading it most of the morning and it's really interesting. Not something I expect to come across any time soon unfortunately but really good to get an idea of what it's about. Also check out: https://www.beyondcorp.com The Google site doesn't go into why they started moving towards BeyondCorp, whereas this does. The short gist is that they got hacked hard by suspected Chinese hackers in 2009, and decided that trying to fight everything at the perimeter not only was a pain in the rear end for employees but meant that once the internal network was breached the jig was up. So why not simplify it for everyone and just make internal resources globally available? No more fiddling with VPNs or fighting firewalls: using SAML and certificates you could reach the same end state in a way that's easier to centrally monitor and a lot easier for end users. It's a really interesting concept that I could see spreading in big enterprises that are already offloading shared drives and email to SaaS providers. Just put a SAML endpoint with a bit more logic in front of everything. Monitor access logs individually with Splunk/ELK and like magic you have much better security that you can centrally manage, less user headache, and a single endpoint getting owned doesn't mean they can use that to pivot to your entire network the same way.
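To make the "SAML endpoint with a bit more logic in front of everything" idea concrete, here is a rough sketch of the decision such a front end might make per request. This is not Google's implementation or any product's API; the `Request` fields, the `POLICY` table, and the group names are hypothetical. The thing to notice is that the decision never looks at a source IP or network location, only at who the user is, whether the device presented a valid certificate, and what the resource requires.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str              # identity asserted by the SSO/SAML login
    groups: frozenset      # group claims carried in that assertion
    device_cert_ok: bool   # client certificate checked against device inventory
    resource: str          # internal app being requested


# Hypothetical per-resource policy: which groups may reach which app.
POLICY = {
    "wiki": {"employees"},
    "payroll": {"finance"},
}


def decide(req, audit_log):
    """Allow only a known device plus a user in an authorized group.

    Unknown resources have no allowed groups, so they are denied by default.
    Every decision is appended to audit_log so it can be shipped to a
    central log store.
    """
    allowed_groups = POLICY.get(req.resource, set())
    allow = req.device_cert_ok and bool(req.groups & allowed_groups)
    audit_log.append((req.user, req.resource, "allow" if allow else "deny"))
    return allow


log = []
alice = Request("alice", frozenset({"employees", "finance"}), True, "payroll")
bob = Request("bob", frozenset({"employees"}), True, "payroll")
stolen_creds = Request("alice", frozenset({"finance"}), False, "payroll")

print(decide(alice, log), decide(bob, log), decide(stolen_creds, log))
```

Because every allow and deny lands in one log, the central monitoring described above falls out of the design rather than being bolted on per firewall.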

|

|

|

|

Vulture Culture posted:The last team I managed had one manager with some engineering responsibilities and eight full-time engineers responsible for everything in our datacenters that wasn't a network switch. Two of the eight engineers were storage engineers: one dealt with live HPC storage, and one dealt with backup and archival. It was too much for one engineer. Again, I was joking, because I understand that in IT there's basically a use case for EVERY IT job out there. I love the example though! I guess I'm just jaded as I've worked a few places now that have had entire teams for storage and backup where it likely could be rolled into other teams' responsibilities due to the lack of complexity in the storage (and backup) requirements of the company as a whole, and in some cases willingness to throw hardware/money at it. Edit: In your case did it ever become a staffing issue? With one guy being that involved with storage and nothing else, how much of a nightmare was it when he took vacation, or if he left? TheFace fucked around with this message at 16:22 on Sep 14, 2017 |

|

|

|

Punkbob posted:Also check out: https://www.beyondcorp.com Presumably there's still basic firewalling in place because connected devices are giant pieces of poo poo and nobody wants to burn through 10 reams of paper full of "Weedlord Bonerhitler", but I get this is about client access to secure apps and giving up on the dumb idea of doing SSL MITM rather than "no firewalls anywhere".

|

|

|

|

Thanks Ants posted:Presumably there's still basic firewalling in place because connected devices are giant pieces of poo poo and nobody wants to burn through 10 reams of paper full of "Weedlord Bonerhitler", but I get this is about client access to secure apps and giving up on the dumb idea of doing SSL MITM rather than "no firewalls anywhere". If you don't trust your clients why trust devices in your server space? It's actually theoretically possible to do zero trust on server networks using IPSec/PKI where individual nodes have to be authorized to connect to each resource. I mean that's hard, but I could see that being the next gen of "firewalls".

|

|

|

|

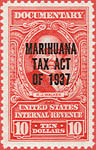

what's the weirdest advertising ploy you've received from a company? I think I just got our first really bizarre one since I've been here

|

|

|

|

I want that company to advertise to me.

|

|

|

|

GreenNight posted:I want that company to advertise to me. Same

|

|

|

|

Hey cyberreason I would love a 'product demonstration' for our whole office please

|

|

|

|

I don't think I should be sent any guns right now, toy or otherwise. The latest idiocy from my non-technical management is making GBS threads all over our working remotely policy. But just for IT, cause, you know.

|

|

|

|

Judge Schnoopy posted:Hey cyberreason I would love a 'product demonstration' for our whole office please I'll have to show it off to my CEO by running into their office and poppin off

|

|

|

|

Judge Schnoopy posted:Hey cyberreason I would love a 'product demonstration' for our whole office please No, just send me one, and I will personally demonstrate it for select individuals.

|

|

|

|

They sent me a NERF gun from Star Wars, the Han Solo blaster. I have no idea how they got my info, but I appreciated the gift. Shame I have zero input on product decisions, to the point where I've been told not to even answer the phone or any emails from vendors.

|

|

|

|

Kashuno posted:I'll have to show it off to my CEO by running into their office and poppin off Same but no guns involved

|

|

|

|

Kashuno posted:I'll have to show it off to my CEO by running into their office and poppin off DESK POP!

|

|

|

|

TheFace posted:Again was joking, because I understand that in IT there's basically a use case for EVERY IT job out there.

|

|

|

|

Another loving S3 outage in us-east-1... Just what I needed today!

|

|

|

|

I'm not going to go into a tremendous amount of detail, but over the last 2 months I've felt like more and more of my job has been disappearing. Just got news about a new project which would normally be handled by my team. My team will have no involvement. Hmm. I am not job sentimental at all. Got money in the bank, comfortable with my skill set. But that little voice in the back of my mind is going "hmm, wonder how my resume looks", because Stripe is definitely not as necessary as he used to be around here. If it happens, I'll miss working from home at this job. It is way, way, way too good to me as a parent.

|

|

|

|

HatfulOfHollow posted:Another loving S3 outage in us-east-1... Just what I needed today!

|

|

|

|

HatfulOfHollow posted:Another loving S3 outage in us-east-1... Just what I needed today! Lol

|

|

|

|

Isn't us-east-1 the duct tape and string AWS region?

|

|

|

|

Thanks Ants posted:Isn't us-east-1 the duct tape and string AWS region? Nah, us-east-1 is the most robust region. It's where new services are deployed first and has the most tenants. I try to move services to other regions but it's a pain when you keep getting config errors because some ec2 instance type isn't supported in the availability zone you selected or that the service you want to use doesn't support the functionality available in us-east-1. What's held together by duct tape and string is AWS as a whole...

|

|

|

|

This thread gives me whiplash sometimes.

|

|

|

|

The last five pages or so have been quite the thrill ride of laughs thanks to Cloud2Butt. Anyway I'm not sure I get the hate-on for punky bobster over there. Sure he's abrasive and spells ungood, but I think his points about a lot of infrastructure being absorbed into cloud services (and when I say services, I mean architected as cloud solutions, not forklifted into AWS and still being a pet) are reasonable and valid. Sure that doesn't mean everything will go the way of every user works from home with a laptop and softphone and can access all their services wherever - depends on the company, the services being provided, the location of the user (i.e. rural users with bad Internet connections wouldn't be first choices for that kind of model), etc. But someone else's point about not replicating entire server infrastructures at offices any more is also extremely good - there's just no need anymore, except maybe files and authentication. If a service needs to be available to multiple offices, even a pet in a VPC is a better choice than duplicating it across offices, security-wise and management-wise. Would also be pretty sweet if people would stop quoting clam down who should in reality clam up.

|

|

|

|

Super Soaker Party! posted:The last five pages or so have been quite the thrill ride of laughs thanks to Cloud2Butt. Are you the dude who regularly proudly proclaims that you have me on ignore? CLAM DOWN fucked around with this message at 23:09 on Sep 14, 2017 |

|

|

|

Super Soaker Party! posted:The last five pages or so have been quite the thrill ride of laughs thanks to Cloud2Butt.

|

|

|

|

We started work on implementing Ultipro about 6-7 months ago. Our go live date is in about two weeks. I don't know how the other groups' areas are doing, but I'm pulling the trigger on a UPN change next week, and have MFA ready to turn on at the same time as the application go live. In addition to that, we are doing testing on my AD interface and should have that done before the go live date as well. This is my first time being a part of a project of this scale, and it has been exhausting.

|

|

|

|

Vulture Culture posted:The last team I managed had one manager with some engineering responsibilities and eight full-time engineers responsible for everything in our datacenters that wasn't a network switch. Two of the eight engineers were storage engineers: one dealt with live HPC storage, and one dealt with backup and archival. It was too much for one engineer. Don't stop. I'm so close.

|

|

|

|

Speaking of forklift migrations to ~the cloud~ I found out that my nemesis at my last job is still telling people to contact me by name for aws questions. This is a woefully incompetent "director" who thinks that the cloud saves money because it is the cloud. Literally spouting pc magazine tropes and not believing that he has to have a budget or planning. I haven't worked there in a month.

|

|

|

|

Punkbob posted:If you don’t trust your clients why trust devices in your server space? It’s actually theoretically possible to do zero trust on server networks using IPSec/PKI where individual nodes have to be authorized to connect to each resource. I mean that’s hard, but I could see that being the next gen of “firewalls”. Pagerduty does this. It is my dream. Firewalls are for suckers.

|

|

|

|

Kashuno posted:what's the weirdest advertising ploy you've received from a company? I think I just got our first really bizarre one since I've been here Also, this thread is hilarious.

|

|

|

|

|


|

H110Hawk posted:Pagerduty does this. It is my dream. Firewalls are for suckers. Wow I didn't realize that PagerDuty had built that. I had seen the BeyondCorp stuff, but the PagerDuty stuff is basically what I built at my last job. Glad to see someone else saw that as a valid model to run with. Besides zero-trust networking I think that the other major direction in firewalls is towards micro-segmentation built on labels that the control plane can see. You sort of saw this early on in AWS with security groups, but that is limited to instances and still has a bunch of places where it falls back into a mess of IP-based firewalls. What has me impressed lately is how powerful the new Kubernetes network policy controller is. It somewhat enables per-process (or process group) firewall segmentation that is really easy to configure. Theoretically you could have an LB, web UI, service layer and database layer running in a shared cluster that can only talk to other layers when explicitly authorized. Now the caveat there is that the base of this is all Docker, so if you find an escape from the security around containers then yeah, bad things, but I'd argue that is still way ahead of where a shared host would be. In practice this is basically per-process network segmentation that doesn't need to keep track of things like IPs to enforce rule-sets. That's a crazy powerful concept. Building a "firewall" in Kubernetes suddenly looks like this: code: https://github.com/ahmetb/kubernetes-networkpolicy-tutorial/blob/master/02-limit-traffic-to-an-application.md This is a good SREcon presentation on zero trust server side that H110Hawk's comment led me to: https://www.youtube.com/watch?v=j5BS7Gg-vwc Edit: wrong youtube link freeasinbeer fucked around with this message at 04:04 on Sep 15, 2017 |
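As a rough illustration of the layered setup described above (LB, web UI, service layer, database, each only reachable from the layer in front of it), here is a sketch that generates one NetworkPolicy manifest per tier and checks the result with a toy evaluator. The `tier` label, the policy names, and the tier layout are hypothetical, and `ingress_allowed` is a simplified model of how ingress policies combine, not the actual controller logic. The manifest fields themselves (`podSelector`, `ingress`, `from`) follow the `networking.k8s.io/v1` NetworkPolicy schema.

```python
import json

# Hypothetical tiers and the single upstream tier allowed to call each one.
ALLOWED_CALLERS = {"web": "lb", "service": "web", "db": "service"}


def network_policy(tier, caller):
    """Build a manifest: pods labelled tier=<tier> accept ingress only
    from pods labelled tier=<caller>. No IP addresses anywhere."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{tier}-allow-{caller}"},
        "spec": {
            "podSelector": {"matchLabels": {"tier": tier}},
            "ingress": [
                {"from": [{"podSelector": {"matchLabels": {"tier": caller}}}]}
            ],
        },
    }


def matches(selector, labels):
    """True when every matchLabels pair is present on the pod's labels."""
    wanted = selector.get("matchLabels", {})
    return all(labels.get(k) == v for k, v in wanted.items())


def ingress_allowed(policies, src_labels, dst_labels):
    """Simplified model: a pod selected by no policy is open; once a
    policy selects it, only sources matched by some rule get in."""
    selecting = [p for p in policies if matches(p["spec"]["podSelector"], dst_labels)]
    if not selecting:
        return True
    return any(
        matches(peer["podSelector"], src_labels)
        for p in selecting
        for rule in p["spec"]["ingress"]
        for peer in rule["from"]
    )


policies = [network_policy(tier, caller) for tier, caller in ALLOWED_CALLERS.items()]

# kubectl accepts JSON manifests as well as YAML.
print(json.dumps(policies[-1], indent=2))
```

The rules are written entirely in terms of labels, so pods can be rescheduled and pick up new IPs without anyone touching the policy, which is the property the post is excited about.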

|

|