|

Kill all subqueries.SQL code:

|

|

|

|

|

| # ? Jun 3, 2024 12:22 |

|

Judging from the code he posted, the flag column is in the same table as the other flag column. Aka update all line items if any one belonging to a parent record is flagged. Also subqueries are set based ops too. You may be thinking of correlated subqueries which force a loop over each row. Probably varies by db but the subquery select distinct may actually give better performance than the flat inner you just posted - while Iím pretty sure it would work fine in MS SQL, I donít think itís safe to assume it filters down to distinct records in other databases during the update and may produce odd or poor performance behavior. Anyway this is just a permutation of the ďfind the record with the max value in a certain columnĒ problem and the traditional solution for that is subquery based: code:

|

|

|

|

Tibalt posted:Edit: Thanks for the reply Ruggan as well. I'll take a look at the query plan as well. If you're using SQL Server and want a second opinion from the dubious, anonymous internet people here, you can upload the execution plan to Paste The Plan. Plan Explorer can anonymize the plan if you're worried about sharing table/ index names etc. Make sure it's the actual execution plan if at all possible!

|

|

|

|

Ruggan posted:Judging from the code he posted, the flag column is in the same table as the other flag column. Aka update all line items if any one belonging to a parent record is flagged. Ah, drat. You're right about the table thing. I saw that in the first example but after Tibalt put it into invoice/line item outside, I took off running. And I definitely was thinking of all subqueries being painted with the broad brush off row by row execution.

|

|

|

|

Is there a term for when you have to dive into a database full of different tables trying to find the right keys you can join on to get your data? SQL archaeology?

|

|

|

|

Pollyanna posted:Is there a term for when you have to dive into a database full of different tables trying to find the right keys you can join on to get your data? SQL archaeology?

|

|

|

|

Pollyanna posted:Is there a term for when you have to dive into a database full of different tables trying to find the right keys you can join on to get your data? SQL archaeology? Edit: Short for De-ORMalizing. PhantomOfTheCopier fucked around with this message at 02:18 on Aug 6, 2019 |

|

|

|

Pollyanna posted:Is there a term for when you have to dive into a database full of different tables trying to find the right keys you can join on to get your data? SQL archaeology? "Backend development"

|

|

|

|

Pollyanna posted:Is there a term for when you have to dive into a database full of different tables trying to find the right keys you can join on to get your data? SQL archaeology? Lockpicking

|

|

|

|

PhantomOfTheCopier posted:De-ORMing. nah I resorted to writing a thing that literally searches through every table, every column, and every row for a value and returns you all the results on a project that has no orm, and you're not allowed to write sql into the code, you have to create a new function for every query you want to do. insanity.

|

|

|

|

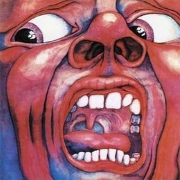

Pardot posted:nah I resorted to writing a thing that literally searches through every table, every column, and every row for a value and returns you all the results on a project that has no orm, and you're not allowed to write sql into the code, you have to create a new function for every query you want to do. insanity. You work with a system that's right out of that Fault-Tolerance comic?!

|

|

|

|

Can anyone recommend a set of guidelines etc. for configuring a postgresql server. I don't know much about the subject, but I know the config setup by our provider seems...not good...

|

|

|

|

I can't think straight today so help me out. T-SQL. I have three tables: Cases, CasesResumed, CasesClosed. Cases have a CaseID, OpenDate, CloseDate (nullable). The OpenDate is always filled, CloseDate is filled if the current status is closed, null if it is open. CasesResumed have CaseID and a ResumeDate. This indicates the case was re-opened on that date. CasesClosed have a CaseID and CloseDate. This indicates the case was closed on that date. I need to get historical data for number of total open and total closed cases on a series of month starts. My current strategy would involve selecting all cases with an OpenDate on or before each month start. For each of these cases, find the last resume and last close date on or before the month start. Use the case open, resume, close to find the status of the case on that month start date. With that, count number of open and closed cases on each month start. The major roadblock I'm having is getting the last resume/close date for each case and coalescing that with the case open date, to determine the case status. Any hints?

|

|

|

|

Managed to build a solution. I'm not sure if this is the best approach but at least it works.SQL code:

|

|

|

|

|

First, get a date table. While looping sucks and youíre mainly doing it because you need a row per month. That means youíre firing off a different query for every loop. If you canít use a date table, then while loop the dates into the temp table first, and then do a single query to retrieve your data (I.e. update each row in the temp table with a single query). As for the rest, Iíll maybe post a difference approach later.

|

|

|

|

Kuule hain nussivan posted:Can anyone recommend a set of guidelines etc. for configuring a postgresql server. I don't know much about the subject, but I know the config setup by our provider seems...not good... I don't claim these are still the universally best choices... (oh wth with the characters) Overview Memory Tuning In general, the following parameters can be used to tune PostgreSQL memory usage: code:Transaction Log While the on-disk database files are obviously highly correlated with the content of the tables due to INSERTs and UPDATEs, there are some settings in PostgreSQL that may affect the amount of storage used during normal operations. PostgreSQL utilizes a transaction log system, called the Write Ahead Log (WAL) to ensure reliability and data consistency in cases of system failures. The primary settings that control WAL behavior are: code:expects about 50MB of available space. Each checkpoint, when written to disk, is an indication that data has been flushed to disk (the actual database tables themselves); during database recovery, the saved WAL segments are used to ensure that transactions occurring prior to failure will be written after database restart. Actual database operations should be used as a check, of course, but, in general, it is feasible to calculate space expectations as (16MB*checkpoint_segments). Clearly, there is very little point in having an excessive number of segments retained (unless one of the aforementioned systems of replication or PITR are in use). During normal operations, PostgreSQL must flush this "dirty data" to the on-disk tables, and it attempts to do all such work prior to the completion of the next checkpoint. The default checkpoint_completion_target thus demonstrates the point at which those writes should conclude. With the given default checkpoint_timeout, then, all on-disk writes should conclude no more than 2.5 minutes after the transaction commits (again, the checkpoint segment is stored on disk, so the data is still available for crash recovery immediately after the COMMIT; it's only the tables in the data directory that have yet to be updated). This parameter also prevents high load that may result from writing 16MB of data all at once, so it should not be unnecessarily lowered as it may result in periods of database unresponsiveness. If data INSERTs, UPDATEs, or DELETEs are occurring too frequently, it is possible that the segments will be written to disk very rapidly. By default, if those writes occur more often than every 30 seconds (checkpoint_warning), and entry will appear in the log file noting this fact. While a smaller timeout may be seen to improve crash recovery times, significant IO load results from flushing data to disk, and for this it is desirable to have checkpoints occur as infrequently as possible. A balance is, obviously, desired: Having infrequent warnings about high-speed checkpoints is not a problem, but these should occur only under high load or during planned/expected administrative tasks (loading large data sets, for example). Otherwise, it is reasonable to have checkpoints occur every 1.5 to 4.5 minutes based solely on normal traffic, and some adjustments to checkpoint_completion_target may assist during high load intervals. Logging Several logging options may affect disk space; in particular: code:PhantomOfTheCopier fucked around with this message at 17:21 on Aug 9, 2019 |

|

|

|

I'm very new to SQL and have been working on a thing using MS Access for work to compare a master part list to a specific inventory list. The master is frequently updated with many parts and I import the latest one into the table "MasterList". The inventory list table only needs to compare to a fraction of the parts from the master so I set up deletion queries to remove the unneeded parts from the master based on what I've gone through and specifically added to the filtering list. Then my comparison works well to find changes. But since the queries are character limited, once I get to like 30 items I have to make a new query which currently are like 6. I have a macro to run all 6, but then it's not too easy to add new items to filter out since I have to edit the query. My current deletion query: code:Description delete query: code:code:In my queries where I list every part name or number to delete I can use "LIKE 'partnameA*' " to so I don't have to list everything that starts with "partnameA", or part numbers that start the same but have different end numbers, " LIKE "11x222-*' ". It's not as big of a deal to do partials since in the table I can just keep adding items, but there are a lot of close duplicates and it'd be nice to have one entry to cover them all, like stuff that has one digit different at the end that I know is OK to delete anything that starts with the first part. Is it possible to include something like that into one query to find partial matches between both tables in either description or part number to then delete from the master? AzureSkys fucked around with this message at 08:27 on Aug 12, 2019 |

|

|

|

my god. have any of you had to use snowflake?

|

|

|

|

AzureSkys posted:I'm very new to SQL and have been working on a thing using MS Access for work to compare a master part list to a specific inventory list. The master is frequently updated with many parts and I import the latest one into the table "MasterList". The inventory list table only needs to compare to a fraction of the parts from the master so I set up deletion queries to remove the unneeded parts from the master based on what I've gone through and specifically added to the filtering list. Then my comparison works well to find changes. doing the delete in one query using DeletionList is easy, since you can use OR conditions in join statements: code:not sure why you had the doubled-up conditions in your queries, and i'm assuming the distinctrow thing is an access thing doing it with a wildcard is very similar. insert your rows including the wildcard characters into DeletionList, then code:at least, assuming access sql behaves vaguely similarly to other sqls e: entirely untested; do your own testing and validation before running on a production db, etc etc

|

|

|

|

I'm doing something wrong here but I can't for the life of me figure out what. Problem: with psycopg2 and Python, if I make any kind of query that includes a WHERE clause, the query is run but returns no hits. If I make a query that doesn't include a WHERE, it works. So: cur.execute("SELECT * FROM employee_location;") works just fine, but cur.execute("SELECT * FROM employee_location WHERE last_measurement_time >= TO_TIMESTAMP(%s)", (time_to_start,)) returns an empty.

|

|

|

|

abelwingnut posted:my god. have any of you had to use snowflake? No, but I've heard lots of buzz about it, been meaning to check it out. No good?

|

|

|

|

redleader posted:doing the delete in one query using DeletionList is easy, since you can use OR conditions in join statements: Thank you for the help. Unfortunately I couldn't get that to work. I copied the code from something I found and just changed the tables/fields to match mine originally, so I guess that's where the redundant part came from. I think Access does something different, but this gives me something to keep poking around with. edit: I got this to work for deleting with one query from either criteria, but still don't know how to have it work with partial matches: code:AzureSkys fucked around with this message at 09:10 on Aug 13, 2019 |

|

|

|

Tax Oddity posted:No, but I've heard lots of buzz about it, been meaning to check it out. No good? i feel like the system underlying it might be worthwhile, but the interface and mechanics of it are so drat buggy. the ui is complete garbo and slow and debilitating, the history section is inaccurate, and there are so many odd limitations in every little thing that you have to find silly, annoying, and costly ways around. it's ability to handle tables with trillions upon trillions of rows is great and all, and would certainly bring sql server to its knees, but it's just so unwieldy. i'm constantly fighting the drat thing. oh, and you can't write sps or schedule any sort of tasks, there's no way to write functions, and you can't send multiple sql statements in a single api call. need to create a table with certain conditions, then insert data, then somehow offload that data? three api calls that are just annoying to cron together on an outside server. also seems wildly expensive but that's on my client and they seem to have no care for cost so whatever. abelwingnut fucked around with this message at 15:39 on Aug 12, 2019 |

|

|

|

Snowflake is way better (and cheaper) than Teradata, which is mind blowingly costly and a similar data warehouse. There's stuff out there to wrap multiple API calls at least in Python (using the DB 2.0 API): https://docs.snowflake.net/manuals/user-guide/python-connector-api.html

|

|

|

|

|

yea, i have to use node instead of python.

|

|

|

|

Has anyone come across a simple-ish mysql proxy (or a way in Mariadb) that can get stats on queries? Just looking for a way of recording that query "X" returned a response of size ###bytes, or ###rows/columns - any stats really. yes, got a developer that's making bad queries on occasion - or linking modules that are doing bad queries, but it's turning into a pain in the rear end to nail his rear end. Server is doing like 2-5kq/s, ~300mbps. I'd love a way of recording/logging a query that returns a result set that's over a specified size threshold - or failing that, a way of finding the approx result size of a single query easily.

|

|

|

|

Der Shovel posted:I'm doing something wrong here but I can't for the life of me figure out what. what happens if you try an always true condition like WHERE 1 = 1?

|

|

|

|

redleader posted:what happens if you try an always true condition like WHERE 1 = 1? Yeah, that works, and as expected will fetch the entire table. Could the problem be that the time values in the database are not compatible with whatever TO_TIMESTAMP() produces? E: this is what the relevant column looks like in the database, and I am trying to convert from epoch to whatever the hell TO_TIMESTAMP produces. datetime.datetime(2019, 4, 6, 2, 41, 26, 154000, tzinfo=psycopg2.tz.FixedOffsetTimezone(offset=180, name=None))) E2: OK, well. Turns out the initial problem was that there simply was nothing in the database that matched the criteria because mysteriously our Pusher connection had crapped out two weeks ago and the guy whose job it is to watch the database hadn't actually been doing anything, and it was only noticed today. It still remains a mystery why my intentionally greedy "greater than or equal 1.1.1900" style queries ALSO didn't work but hey it works now. Shaman Tank Spec fucked around with this message at 14:58 on Aug 13, 2019 |

|

|

|

Thereís a bunch of MySQL stored procedures that I have to update. Iíll be damned if I have to manually log into the database and edit the staging procedures by hand, then do the same for production. What options are there for keeping stored procedures in version control? Currently, we have no way of knowing what procedures are actually in our DB or recording/communicating changes to them. I refuse to be the rear end in a top hat that brings down our reporting service because of a typo and nobody knows or notices until itís too late. EDIT: Also, whatís the reasonable limit for nested SELECTs? Like 3? I personally prefer 0 but whatever. Pollyanna fucked around with this message at 23:00 on Aug 16, 2019 |

|

|

|

SQL script that drops the existing procedure and creates the new one? One of those migrations systems often present in ORMs?

|

|

|

|

|

What are the performance implications, if any, of using UUIDs as primary keys in Postgres (as opposed to a serial / auto increment int)? Tables are going to have a UUID regardless and lookups will be done via UUID (clients interact with the REST API via UUID), so it seems redundant to also have an int PK. I have many-to-many join tables which would have to track table1_uuid & table2_uuid rather than int IDs if I take this approach, which means frequent joins on uuid fields. Postgres has a built in UUID type, which makes me think performance is somewhat optimized for this (and some searching seems to confirm this), but I'm not really sure how this will scale.

|

|

|

|

nielsm posted:SQL script that drops the existing procedure and creates the new one? One of those migrations systems often present in ORMs? Yeah this. I donít know what systems are available to MySQL (I use a DB project in Visual Studio for MS SQL and store that in git), but if all you need is stored procs, just a drop and create works fine. Store in SVN or git. Level up: make a on a run on commit hook that auto deploys for you Level up again: separate version control branches for your different environments with different commit targets

|

|

|

|

Splinter posted:What are the performance implications, if any, of using UUIDs as primary keys in Postgres (as opposed to a serial / auto increment int)? Tables are going to have a UUID regardless and lookups will be done via UUID (clients interact with the REST API via UUID), so it seems redundant to also have an int PK. I have many-to-many join tables which would have to track table1_uuid & table2_uuid rather than int IDs if I take this approach, which means frequent joins on uuid fields. Postgres has a built in UUID type, which makes me think performance is somewhat optimized for this (and some searching seems to confirm this), but I'm not really sure how this will scale. MSSQL stores rows according to the clustering key or index, so when you use a UUID PK you need to make it non-clustered and add an int identity clustered index, otherwise the table gets fragmented to hell. Postgres doesn't have that problem as it just stores by insertion order, so you can safely use a regular UUID PK and nothing else, it won't hurt performance. At least that's what I googled when I was facing the sameb question.

|

|

|

|

Splinter posted:UUIDs Are you Twitter or Google? Do clients generate their own request IDs (distributed) or are those generated by the service (centralized)? What is your expected request rate (*100 for scaling)? Note that a number of the form "yydddsssss" (1tps max) can be generated by a distributed client. A bigint provides 19 digits in 8 bytes, so a client can just as easily append up to nine random digits (10^9 tps not counting collisions). UUIDs can collide, so you have to handle that case. It's straightforward to convert numbers to strings of [A-Za-z0-9] base 62, but what user wants a big uuid in their url? There are articles online about why you might/not use uuids, but feel free to claim the usual excuses.

|

|

|

|

PhantomOfTheCopier posted:The performance implication is related to the wasted memory and disk space storing repeat copies throughout all tables and indexes of a key field that provides 50 orders of magnitude more entropy than your application needs. (Sheesh I really need to start a blog, I was certain I had posted about this here, but search hasn't found it; probably should be on the first page.) Each transaction will also require 36 characters of network traffic, since you'll doubtless be communicating requests in ASCII. Uuid4's colliding is so unimaginable rare that it's not worth optimizing for Sequential int ids leak information about your db table size (German tank problem) Hash indexes only just got improved in postgres If 36 bytes of network traffic are killing you you aren't using text based protocols you're using something binary in which the difference is 96 bits. The overhead of tls is waaay more than 36 bytes and no one cares. The application to database protocol also is binary. Sequential int ids are almost certainly going to collide leading to issues when you need to shard or merge. I recommend using Uuid4's and then if you need the performance switch to something sequential. Bad databases like mysql don't or didn't have native support. If clustering matters to you then yeah it's not going to be great--so choose another column to cluster on. Anyway if you're asking yourself this question just measure it and find out. Malcolm XML fucked around with this message at 00:29 on Aug 18, 2019 |

|

|

|

Splinter posted:What are the performance implications, if any, of using UUIDs as primary keys in Postgres (as opposed to a serial / auto increment int)? Tables are going to have a UUID regardless and lookups will be done via UUID (clients interact with the REST API via UUID), so it seems redundant to also have an int PK. I have many-to-many join tables which would have to track table1_uuid & table2_uuid rather than int IDs if I take this approach, which means frequent joins on uuid fields. Postgres has a built in UUID type, which makes me think performance is somewhat optimized for this (and some searching seems to confirm this), but I'm not really sure how this will scale. There will be a performance hit for anything joining or filtering on these columns. Think about comparison operations in the CPU: 32/64 bit int operations are easy and fit singly into your ALU. Going beyond the bit-width natively used by your hardware is inviting and requiring extended time performing these operations in a software orchestrated way. For my company's purposes, when we've needed to represent a row while hiding the identity of the row itself, we've added a GUID column with a supporting nonclustered index, and use the value only on the single table, using the int key columns internally for the joins. The int pks are also the clustered index in most applications. Edit: also everything PhantomOfTheCopier said.

|

|

|

|

PhantomOfTheCopier posted:The performance implication is related to the wasted memory and disk space storing repeat copies throughout all tables and indexes of a key field that provides 50 orders of magnitude more entropy than your application needs. (Sheesh I really need to start a blog, I was certain I had posted about this here, but search hasn't found it; probably should be on the first page.) Each transaction will also require 36 characters of network traffic, since you'll doubtless be communicating requests in ASCII. Clients generate IDs. Idea being the client doesn't have to rely on receiving a server ID in response to creating a resource in order to be able to make future updates on that resource. Handling error cases related to server id sync issues is more complicated, more brittle, and also much more likely to occur than colliding UUIDs. The clients in this case are offline-first apps, so it's not as straight forward as just refreshing from the server once network connectivity returns. Could also make a composite key of uuid and user_id to make server side collision essentially impossible, but as Malcolm XML mentions, that might be overkill. I could stick with auto increment columns as the internal IDs/PKs, only storing uuid (or custom uid) as an additional column in the relevant tables (as Nth Doctor mentioned). This was my original idea before thinking about just using the uuids as keys. This allows join tables to use ints rather than uuids, which saves space and allows joins to work with ints rather than uuids. It does add some complexity though, as m:n relations need to be mapped from uuid (how they are represented in the request body) to internal ID (which the clients don't know) in order to be inserted/deleted. It sounds like the concern is more about using UUIDs vs rolling your own custom unique ID system that doesn't use as many bytes? You're right that UUID is overkill for this app, but at the same time there's already support for uuids on all the platforms relevant to this project, so going with uuids simplifies development. I considered rolling my own (probably something date time based similar to what you mentioned), but the consensus was to stick with the easy, existing solution rather than overthinking it. With this project, ease of development is a priority over optimizing performance at this time. It might not ever have to scale very large, so I don't want to invest too much upfront effort in designing a highly scalable solution. Mainly just want to make sure I'm not doing something that is so dog poo poo performance wise that it will need to be re-architectured before it it's actually even dealing with large amounts of data. It sounds like the main concern is wasted disk space/memory due to join tables using 32 byte keys rather than 4 or 8 byte keys. For actually running queries/indexing using uuids, it sounds like postgres is reasonably performant (the indexes aren't clustered by default) (though still not as optimal as using ints, as Nth Doctor noted). Anyway, it sounds like I should just run some tests after I have everything set up.

|

|

|

|

Another option to consider is an int, int key multi tenant architecture. First int being the tenant/user ID, second being an auto incrementing PK specific to that user. If youíre still worried about giving away info via auto increment user ID, just randomly generate those. Internal ID doesnít matter because the client already knows about those - they made them. Still gotta worry about fragmentation though.

|

|

|

|

I have a hypothetical performance question in which I am looking for the fastest way of doing something. I have a table of a billion records, and each record consists of a userID and a timestamp and some other data. Every hour 1.5 million records are added to the table corresponding to 1.5 million userIDs being batch updated with the same time stamp for that batch. If I want to pull the last record for every user at the end of every month would it be more efficient to: A.) create a composite index on userID and timestamp and create a query based on max(stamp), group by datepart(month, stamp) or some such B.) every time a batch of records comes in, add that batch's timestamp to a separate table of timestamps. Then create a query based on max(stamp), group by datepart(month, stamp) for the table of timestamps, inner joining that query with the user data table. C.) Something else? I'm curious to know that collecting data based on a list of timestamps is faster than grouping functions running against the entire data set. Does anyone have any insight into the performance of one of these ideas vs another?

|

|

|

|

|

| # ? Jun 3, 2024 12:22 |

|

Integer sequences are easy to guess, but so are UUIDs in some (many?) libraries, particularly if they're using a prng, which could also be platform specific. I noted how to get around this above. You can fairly easily have client requests join on a uuid-to-internal if you want to avoid all the extra duplication of stored and indexed UUIDs. Don't assume, though, that your uuid is "unique" or "secure". You may find that database maintenance, replication, etc., becomes an absolute pain using UUIDs. Presumably clients can generate UUIDs and obtain/update from the server when available. (Depends on purpose and traffic pattern.) Just sharing ideas at this point.

|

|

|