
I'll add it explicitly: Whenever you have a collection of dicts that all have the same keys, you're using the wrong tool. The main reason is the lack of protection against mistakes, and lack of IDE assistance I mentioned above.
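To make the point concrete, here is a minimal sketch contrasting a list of same-keyed dicts with a small dataclass. The `ErrorInfo` name, its fields, and the sample data are assumptions made up for illustration; they are not from the thread.

```python
from dataclasses import dataclass

# A collection of dicts that all share the same keys: a typo in a key is
# only discovered at runtime, and the editor cannot suggest key names.
errors_as_dicts = [
    {"name": "not_found", "message": "Resource not found", "value": 404},
    {"name": "forbidden", "message": "Access denied", "value": 403},
]


@dataclass(frozen=True)
class ErrorInfo:
    """Hypothetical record type; the field names are illustrative only."""
    name: str
    message: str
    value: int


# The same data as instances: fields are declared once, so editors can
# autocomplete them and a misspelled field fails loudly.
errors = [ErrorInfo(**d) for d in errors_as_dicts]

first = errors[0]
try:
    first.mesage  # deliberate typo
except AttributeError:
    typo_caught = True
else:
    typo_caught = False
```

With the dict version, the equivalent typo (`d.get("mesage")`) would quietly return `None`, which is the kind of mistake the post is warning about.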


| # ? May 14, 2024 09:21 |


Dominoes posted:
I'll add it explicitly: Whenever you have a collection of dicts that all have the same keys, you're using the wrong tool. The main reason is the lack of protection against mistakes, and lack of IDE assistance I mentioned above.

This is a good solution and I will use something similar. Thanks!


Dominoes posted:
I'll add it explicitly: Whenever you have a collection of dicts that all have the same keys, you're using the wrong tool. The main reason is the lack of protection against mistakes, and lack of IDE assistance I mentioned above.

This isn't advice, but I use DataFrames for almost everything where data needs to be accessed, and only write to JSON to transport or store it. Even in Flask apps where I need to send stuff between callbacks, I tend to write it to a hidden div as JSON and then read it back into a DataFrame. It makes everything I could want to do so standardized and my code so easy to read. No matter how I got the data, whether it was a list, a dict, or whatever, it is nicely displayable, exportable, transformable, etc.

Wallet posted:
This is a good solution and I will use something similar. Thanks!

For your original question, I'd have all my error names as index values, with a column called message and a column called value, and no two indices with the same name.

EDIT: Oh, but how to store it all in code so it is findable? I think I missed the point and am dumb.


CarForumPoster posted:
This isn't advice, but I use DataFrames for almost everything where data needs to be accessed and only write to json to transport/store it. Even in Flask apps where I need to send stuff between callbacks I tend to write it to a hidden div as json then call it back into a DataFrame. It makes everything I could want to do so standardized and my code so easy to read. No matter how I got the data, whether it was a list, dict, whatever it is nicely displayable, exportable, transformable, etc.


One thing to remember about the post starting this recent conversation is that the poster only provided one example. He also mentioned config values. Many Python projects use a .py file as a general config file. Take Django, for example: in settings.py you have a lot of plain variable assignments acting as key-value pairs. Some examples:

Python code:

That being said, I've been working on a PR for Django to bring types to settings.py. It's requiring a lot of thought to meet the needs of developers, users, and system admins.
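The original code from this post did not survive, so the following is only a sketch of the kind of plain assignments a Django settings.py typically contains. The setting names are real Django settings; the values are made up for illustration.

```python
# Hypothetical excerpt in the style of a Django settings.py.
# The names are real Django settings; the values are invented.
DEBUG = True

ALLOWED_HOSTS = ["localhost", "127.0.0.1"]

TIME_ZONE = "UTC"

DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.sqlite3",
        "NAME": "db.sqlite3",
    }
}
```

Nothing here is typed or validated at the point of definition, which is presumably what makes adding types to settings.py tricky.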


To me (we're getting far into subjective turf), in that Django example it makes sense to expose the structure as a dict in the config file for the reasons you listed, but to validate and manipulate it internally using something more robust. That'd be a cool PR - link when it's ready for the +1s?
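A rough sketch of that split, assuming a user-facing dict and an internal dataclass. `DatabaseConfig` and `load_database_config` are hypothetical names for this example and are not part of Django.

```python
from dataclasses import dataclass, fields

# What the user edits: a plain dict, as in a settings file.
DATABASE = {"ENGINE": "sqlite3", "NAME": "db.sqlite3", "PORT": 0}


@dataclass(frozen=True)
class DatabaseConfig:
    """Hypothetical internal representation; not a Django class."""
    engine: str
    name: str
    port: int = 0


def load_database_config(raw: dict) -> DatabaseConfig:
    """Validate the user-facing dict and convert it to a typed object."""
    known = {f.name for f in fields(DatabaseConfig)}
    lowered = {key.lower(): value for key, value in raw.items()}
    unknown = set(lowered) - known
    if unknown:
        raise ValueError(f"Unknown database settings: {sorted(unknown)}")
    config = DatabaseConfig(**lowered)
    if not isinstance(config.port, int):
        raise TypeError("PORT must be an integer")
    return config


config = load_database_config(DATABASE)
```

The config file stays easy to edit, while the rest of the code works with `config.engine` and friends and gets a clear error for a misspelled key.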


Dominoes posted:
To me (We're getting far in subjective turf), I think in that Django example, it makes sense to expose the structure as a dict in the config file for the reasons you listed, but validate and manipulate it internally using something more robust.

Yeah, definitely.

Dominoes posted:
That'd be a cool PR - link when it's ready for the +1s?

I have literally zero code yet. It's just several pages of notes now. It will be in limbo until/if this DEP gets finalized/accepted and I can work with everyone to see what exactly is going to happen. I'm sure we're talking about many, many months from now.


Thermopyle posted:
One thing to remember about the post starting this recent conversation is that the poster only provided one example.

Yeah, the errors weren't the best example; I probably should have gone with a config file, because that gets a bit wonkier if you want it to be really clear and simple to edit. In the error dict case, there's currently a getter being used rather than having code refer directly to keys in the dictionary, which provides protection against key errors and keeps stuff easy to edit but doesn't do anything IDE-wise. I haven't tested it thoroughly, but I believe you can additionally get IDE autocompletion (at least in PyCharm) to work in that setup by doing something like this:

Python code:

Wallet fucked around with this message at 23:32 on Apr 11, 2020
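The code from this post is missing, so what follows is not Wallet's approach, just one possible sketch of a getter whose valid keys are visible to tooling. It assumes Python 3.8+ for `typing.Literal`; the error names and messages are invented.

```python
from typing import Dict, Literal

# Hypothetical error table; the keys and messages are made up.
_ERRORS: Dict[str, str] = {
    "not_found": "The requested item does not exist.",
    "forbidden": "You do not have permission to do that.",
}

# Listing the valid keys as a Literal type lets type checkers, and editors
# that understand Literal, flag or suggest key names.
ErrorName = Literal["not_found", "forbidden"]


def get_error(name: ErrorName) -> str:
    """Return the message for `name`, with a fallback instead of a KeyError."""
    return _ERRORS.get(name, "Unknown error.")


message = get_error("not_found")
```

The trade-off is that the key names are written twice (once in the dict, once in the `Literal`), so the two need to be kept in sync by hand.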


Dominoes posted:
I'll add it explicitly: Whenever you have a collection of dicts that all have the same keys, you're using the wrong tool. The main reason is the lack of protection against mistakes, and lack of IDE assistance I mentioned above.

I'm testing attr.make_class() for this and it's actually working really well so far. It gives me a class with string fields for each language derived from input. But what would be the more Pythonic/canonical approach, if there is one?

One issue is that PyCharm can't know the fields of the new class so I'm missing out on code completion benefit. Is there any way to shoehorn this back in? Some kind of internal dictionary or modified stubs file or..?

edit: don't think stub file works here; class defined in a pyi file is not the same class as created by attr.make_class. But dictionaries don't have type completion either so maybe I'll just get used to it. It's one of the convenient things about attrs though.

mr_package fucked around with this message at 22:16 on Apr 13, 2020
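For readers without attrs installed, the same idea can be sketched with the standard library's `dataclasses.make_dataclass`, swapped in here for `attr.make_class()`. The language list and the `field_name` helper are assumptions for the example.

```python
from dataclasses import asdict, field, make_dataclass

# Language codes loaded at runtime (e.g. from JSON); hard-coded here.
languages = ["en-US", "fr-FR"]


def field_name(code: str) -> str:
    """Turn a language code into a valid Python identifier."""
    return code.replace("-", "_")


# Build a class with one string field per language, defaulting to "".
Translations = make_dataclass(
    "Translations",
    [(field_name(code), str, field(default="")) for code in languages],
)

apple = Translations(en_US="apple", fr_FR="pomme")
```

It has the same limitation described in the post: because the fields only exist once the code runs, an editor has nothing to offer for completion.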


mr_package posted:
I have an attrs-based app that groups bits of text together to support multiple languages. So each instance has a 'text' field that is a dictionary e.g. {"en-US": "apple", "fr-FR": "pomme"} type of thing. They might not all have the same keys right now but they are going to converge over time as everything gets translated. I want users to be able to add things at runtime so I don't want to define all the fields and push a new code version and dictionaries support this; just add a new key e.g. "ja-JP" and you have Japanese language support.

I might be misunderstanding you both because I'm bad at understanding anyway and also I'm pooped, but...but would a TypedDict help you out?
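For reference, a small sketch of what a TypedDict for this data could look like, assuming Python 3.8+. `LocalizedText` is a made-up name; the functional syntax is used because keys like "en-US" are not valid identifiers.

```python
from typing import TypedDict

# total=False makes every key optional, which matches translations that
# "converge over time". The name LocalizedText is hypothetical.
LocalizedText = TypedDict(
    "LocalizedText",
    {"en-US": str, "fr-FR": str},
    total=False,
)

apple: LocalizedText = {"en-US": "apple", "fr-FR": "pomme"}
pear: LocalizedText = {"en-US": "pear"}  # French not translated yet
```

Note that the keys still have to be known when the code is written, so this only helps if the set of languages is fixed in source rather than added at runtime.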


I'm kind of asking the impossible because the field names in the class (or keys in any dict) are not defined until the code is run, because they are loaded from outside (JSON, database, whatever). But I don't think I really need it: for testing/writing it's nice to have the code completion on fields, but the reality is production code is going to be iterating over things programmatically. I'm not going to be hard coding in any field names. I tried making a stub for a 'fake' class and type-hinting the output of the call to attr.make_class() as that type but it still didn't work. I'm going to give up for now; it's not really important now that I think about it.

edit: kicked it around some more and attr.make_class() just isn't going to be the tool for the job. It may work, but too many things don't work, and the IDE becomes really confused. For example, make_class() doesn't have the ability to add methods, but it can be a subclass so you can put methods in a dummy/template superclass. But the IDE has no idea about them, decorators don't work, and when you try and type hint things the IDE complains because make_class returns 'type' but your classmethods try and all kinds of mess starts occurring.

So, what is the right tool for the job? I have these Text() objects and each one has some metadata fields (boolean) and a dictionary for languages/localization. I don't want to have a dictionary in every Text() object that's always the same keys if that's the wrong tool. But I want them to be dynamic; I don't want to manage them at code level, I want the application to be able to add/remove them. Perhaps in that use case a dictionary is the right tool after all?

mr_package fucked around with this message at 16:52 on Apr 14, 2020
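One way to read the question above: the metadata fields are fixed and known in code, while the language keys are data. A sketch under that assumption, with hypothetical field and method names (`reviewed`, `obsolete`, `add_translation`, `get`):

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Text:
    """Hypothetical model: fixed metadata fields plus dynamic translations."""
    reviewed: bool = False
    obsolete: bool = False
    # Keyed by language code; the keys are data, so a dict fits here.
    translations: Dict[str, str] = field(default_factory=dict)

    def add_translation(self, language: str, value: str) -> None:
        self.translations[language] = value

    def get(self, language: str, fallback: str = "en-US") -> str:
        """Return the text for `language`, falling back to `fallback`."""
        if language in self.translations:
            return self.translations[language]
        return self.translations[fallback]


apple = Text(translations={"en-US": "apple", "fr-FR": "pomme"})
apple.add_translation("ja-JP", "ringo")
```

The editor can complete `reviewed` and `obsolete`, and adding Japanese at runtime needs no code change, which seems to cover both requirements.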


So what's the deal with multithreading in Qt?

I basically have a long-running background task, that logs through a logger, and I want to capture the logging output and have it stream to a QTextEdit. It sort of works, except that the main UI thread still gets destroyed and freezes up.

In my research I came to the conclusion that the correct way to do it is with a QThread and a QTimer to periodically stream data from the StringIO that I have the log redirecting to, but I'm clearly doing something wrong. I know that the logging redirection works since it no longer shows up in the standard output window of PyCharm, but my UI thread just freezes so I don't see the QTextEdit ever updating (and I have to force-quit python...).

Nvm, I guess I was using StringIO wrong. I ended up just redirecting the output to an actual file (which will come in handy when we need to debug) and that works for whatever reason.

Macichne Leainig fucked around with this message at 20:06 on Apr 17, 2020


Protocol7 posted:
So what's the deal with multithreading in Qt?

I believe that you send status updates back from the QThread using a signal().


accipter posted:
I believe that you send status updates back from the QThread using a signal().

It's this. Your main thread should be used for handling UI elements only; anything that takes significant processing time or that needs to wait for some other thing should be done in a QThread, and UI elements should be interacted with from the thread via signals.
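The following is a toolkit-agnostic sketch of that division of labour using only the standard library, not Qt's API: the worker thread never touches the UI and only hands log records over, with a queue playing the role a signal/slot connection would play in Qt. The logger name and function names are assumptions.

```python
import logging
import logging.handlers
import queue
import threading

log_queue = queue.Queue()

logger = logging.getLogger("background_task")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(logging.handlers.QueueHandler(log_queue))


def long_running_task() -> None:
    """Stand-in for the real work; it only logs from the worker thread."""
    for step in range(3):
        logger.info("finished step %d", step)


def drain_log_queue() -> list:
    """Run on the UI thread (e.g. from a timer): collect pending messages."""
    lines = []
    while True:
        try:
            record = log_queue.get_nowait()
        except queue.Empty:
            return lines
        lines.append(record.getMessage())


worker = threading.Thread(target=long_running_task)
worker.start()
worker.join()  # a real UI would keep running its event loop instead
lines = drain_log_queue()
```

In a Qt app, the lines returned by `drain_log_queue()` would be appended to the text widget from the main thread, or the handler would emit a signal instead of using a queue.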


I'll refactor it that way eventually, but writing it to a text file and just reading the text file in the original thread actually worked better than I anticipated. This is an internal-only tool I'm making anyway, so


In case anybody's reading this thread and not the Django thread: I could really use someone's help tracing down a problem I'm having in Django.

It seems trivially easy to cause a deadlock in the logging module by hitting the site ~simultaneously with dozens-to-hundreds of AJAX requests. It's been independently reproduced; I just don't know how to go further with digging through the innards of Python itself, since it seems like that's where this issue lies.

https://github.com/data-graham/wedge

Can anyone spare some time to poke at this? I don't want to discount the much-appreciated efforts others have already given it, but I'd like to broaden the visibility now that I know it's not just me.


I'm not 100% sure why, but actually routing logs to your handlers appears to make the issue go away. I guess Django gets confused if you configure logging handlers but don't use any of them...

I did it by just adding a 'root' key to the logging dictconfig:

code:

Aramis fucked around with this message at 09:24 on Apr 23, 2020
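The original snippet is missing, so this is only a sketch of what a 'root' key in a logging dictConfig looks like in general. The handler name `console` and the level are assumptions; in a Django project this dict would be the `LOGGING` setting rather than being passed to `dictConfig` by hand.

```python
import logging
import logging.config

LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,
    "handlers": {
        "console": {"class": "logging.StreamHandler"},
    },
    # The 'root' key routes otherwise unhandled records to a handler.
    "root": {
        "handlers": ["console"],
        "level": "INFO",
    },
}

logging.config.dictConfig(LOGGING)
root_logger = logging.getLogger()
```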


Hm. Strange, because when I originally started investigating this, I had loggers defined. That's what the problem was to begin with, it's wedging in production.

I took out everything I could identify as not affecting the behavior, and I was originally swapping all the various loggers in and out, until I eventually saw that it wasn't whether the loggers were defined that was causing it, but the handlers. So I left loggers empty for the demo.

FWIW I just now added my prod loggers back in

Python code:


SOLVED

So the issue was in my wsgi.py.

Python code:

Which is the top hit for "django apache environment variable", just like it was ~5 years ago when I first found it and was trying to protect and consolidate my env vars ... and it turns out to be written by literally my best friend from grade school who I hadn't talked to since 1999, lmao

But apparently when you do it this way it's creating a new wsgi application for every request, which is what leads to the deadlock. Going back to the out-of-box wsgi.py solves it (which explains why my built-up-from-scratch demo didn't work):

Python code:

Thanks everyone for looking!
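Neither wsgi.py from the post survived, so the sketch below does not reproduce them. It only illustrates the difference described: building the application on every request versus once at import time. `make_application` is a hypothetical stand-in for an expensive factory such as Django's `get_wsgi_application()`.

```python
created = []  # records each time an application object is built


def make_application():
    """Hypothetical stand-in for an expensive application factory."""
    def app(environ, start_response):
        start_response("200 OK", [("Content-Type", "text/plain")])
        return [b"ok"]
    created.append(app)
    return app


def per_request_application(environ, start_response):
    """Problematic shape: builds a new application on every request."""
    return make_application()(environ, start_response)


# Out-of-the-box shape: build once at import time, reuse for every request.
application = make_application()


def fake_request(wsgi_app):
    """Call a WSGI app with a minimal environ and return the body."""
    return b"".join(wsgi_app({}, lambda status, headers: None))


created.clear()
for _ in range(3):
    fake_request(per_request_application)
builds_per_request_style = len(created)  # one build per request

created.clear()
for _ in range(3):
    fake_request(application)
builds_module_level_style = len(created)  # no new builds
```

With a real framework, each extra build repeats setup work such as configuring logging, which fits the symptoms described in the thread.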


I'm glad to see a solution. Frustratingly, it's been in the back of my mind constantly since you first posted it.


Likewise. I've never grokked loggers, perhaps for lack of trying. And problems that have a solution of "edit wsgi.py" tend not to be the fun type with a eureka moment.


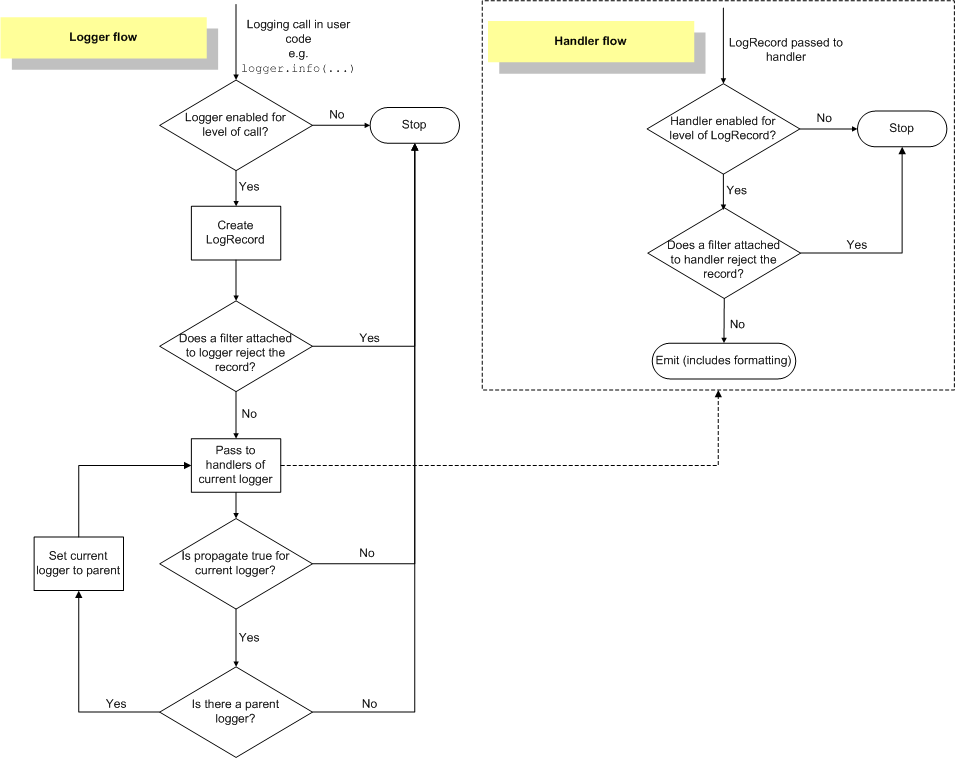

The post where I try to explain loggers.

Loggers are weirdly hard to understand for something that seems like it should be simple. This stems from the fact that it's made to be very useful to Enterprise Grade applications that have very complicated logging requirements, but this makes it harder to grok if you don't have a lot of experience in that area.

Don't let that put you off. Because it has a lot of features, it can be very useful for even small applications...once you learn how to understand it.

The logging module is made to handle a stream of message events, and each event can have different sorts of metadata assigned to it. When you log a message, it is evaluated at multiple steps to see if it should be outputted, where it should be outputted, and what format it should be outputted in. You can have multiple loggers in your application, each with a different configuration, and you can share parts of that configuration amongst different loggers.

The logging module has a basic mode that you access by calling members of the logging module directly: logging.debug('blah blah blah'), logging.info('blah blah blah'), logging.error('blah blah blah'), etc. This basic mode configures a default logger called root with some basic configuration options. You could use this mode like so:

Python code:

code:

I find myself hardly ever using this mode so I'm not going to talk about it anymore. The docs on it are straightforward once you understand the remaining part of this post.

A more useful mode is to specifically create a logger like so:

Python code:

For example, if you have loggers named my_project.foo.bar.baz and my_project.foo.dork.face, you can configure the logger called my_project.foo and it will be shared by everything in bar, baz, dork, and face.

OK, so now you can use this newly created logger object instead of the basic mode outlined at the beginning. Now what happens when you call logger.info or any of the other log methods?

There are four basic things that happen when you log a message:

Your loggers can be configured in different ways. You can use the programmatic API:

Python code:

code:
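The code blocks from this post were lost, so the sketch below is not Thermopyle's original. It uses the logger names from the post to show the programmatic API: configuring my_project.foo once, having a logger deeper in the dotted hierarchy share that configuration, and a record being checked against both the logger's level and the handler's level. The `StringIO` stream and the format string are assumptions.

```python
import io
import logging

stream = io.StringIO()  # stands in for a console or file

# Configure the parent logger once...
parent = logging.getLogger("my_project.foo")
parent.setLevel(logging.INFO)
parent.propagate = False  # keep the example's output out of the root logger

handler = logging.StreamHandler(stream)
handler.setLevel(logging.WARNING)
handler.setFormatter(logging.Formatter("%(levelname)s:%(name)s:%(message)s"))
parent.addHandler(handler)

# ...and loggers further down the dotted hierarchy share it.
child = logging.getLogger("my_project.foo.bar.baz")

child.debug("dropped: below the logger's effective level (INFO)")
child.info("dropped: passes the logger, but below the handler's level")
child.warning("disk almost full")

output = stream.getvalue()
```

Only the warning reaches the stream, formatted as `WARNING:my_project.foo.bar.baz:disk almost full`, even though `child` itself was never configured.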


A top, top post thank you Thermopyle


Thermopyle posted:
The post where I try to explain loggers.

Thanks for this. Loggers are one of those things that are important and I always feel like I should really figure out how they work but then there's lots of other things to do and my basic per-module logger pattern seems to work well enough so I never actually do.


That's the best expl I've heard. Going to try to add this to a proj.


+1 to all the kudos, knowing this stuff will definitely help me some day. In fact I'm almost positive my previous Qt problem could be fixed with a proper logger implementation.


Protocol7 posted:
+1 to all the kudos, knowing this stuff will definitely help me some day. In fact I'm almost positive my previous Qt problem could be fixed with a proper logger implementation.

Your problem was the post where you were trying to update a text box based on the contents of a text file and encountering issues getting the threading to work properly, right? The problems you were encountering were not solvable with the logging module.

QuarkJets fucked around with this message at 21:53 on Apr 24, 2020


QuarkJets posted:

I figured someone would call me out on that. It doesn't matter anyways since I convinced my boss to let me make this UI in React as a web app with a Flask backend, which is definitely much more in my wheelhouse.

Python is great but not for any UI stuff in my experience. Doesn't help I was trying to extend an existing OSS project so I was stuck with Qt, but that project kind of sucked and was super buggy from the start anyway.

Macichne Leainig fucked around with this message at 15:17 on Apr 25, 2020


Protocol7 posted:
I figured someone would call me out on that.

Plotly's Dash (built on top of Flask) with Dash Bootstrap Components is very, very fast for building nice-looking web pages, so long as what you're trying to build can be thought of as a dashboard in some sense and redeploying to update content is fine (e.g. you don't need a WYSIWYG blog editor for non-tech people). It's nice because in addition to getting all the Bootstrap easiness, it supports writing content with markdown, navbars, etc.

Example: https://dash-gallery.plotly.host/dash-oil-gas-ternary/

CarForumPoster fucked around with this message at 18:13 on Apr 26, 2020


That does look super nice actually. I don't know how realistic it is to redeploy every time data changes on this app though. It's basically for utility companies to go out and take photos of meters, utility poles and that kind of stuff, so the data will be updated pretty constantly.

I'm sure that's possible with statically generated apps but I'm not too familiar with that kind of thing so I'm gonna stick to my guns for now.


I've used this simple cached_property snippet for years, and I just noticed 3.8 added one to functools. I like to use it for things that make sense as properties but might be a little expensive to calculate if there's a chance it might be hit in a tight loop.

Anyway, thought I'd share my implementation for people who aren't able to use 3.8...

Python code:
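The poster's snippet did not survive. For readers on older Pythons, here is a sketch of the common non-data-descriptor recipe for a cached property; it is an assumption that the original looked anything like this, and the `Report` class is invented for the demo.

```python
class cached_property:
    """Compute the wrapped method once per instance, then reuse the result.

    A sketch of the common pre-3.8 recipe, not the poster's exact code.
    """

    def __init__(self, func):
        self.func = func
        self.name = func.__name__
        self.__doc__ = func.__doc__

    def __get__(self, instance, owner=None):
        if instance is None:
            return self  # accessed on the class, not an instance
        value = self.func(instance)
        # Store the result under the same name in the instance dict. Because
        # this descriptor defines no __set__, the instance attribute wins on
        # later lookups and __get__ is not called again.
        instance.__dict__[self.name] = value
        return value


class Report:
    """Hypothetical class with an expensive property."""

    def __init__(self, numbers):
        self.numbers = numbers
        self.calls = 0

    @cached_property
    def total(self):
        self.calls += 1
        return sum(self.numbers)


report = Report([1, 2, 3])
first = report.total
second = report.total
```

Because the value lives in the instance's `__dict__`, `del report.total` removes it and the next access recomputes it.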


That's neat, what about expiration / cache-busting though? I'm thinking about long-lived class instances whose state changes over time, their properties would also need to get refreshed somehow.


Data Graham posted:
That's neat, what about expiration / cache-busting though? I'm thinking about long-lived class instances whose state changes over time, their properties would also need to get refreshed somehow.

I don't do that too often, but I think I've done it in the past with a wrapper around functools.lru_cache.

edit: Oh no, wait, I remember. You can force a re-calculation by deleting the property, like del instance.whatever_property. That will make it re-calc on next access. So for expiration you've got to write something that stores the time it was cached and checks it each time you access...

Thermopyle fucked around with this message at 00:27 on Apr 27, 2020
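As a rough sketch of the "store the time it was cached and check it on each access" idea, here is a hypothetical `ttl_cached_property` descriptor. The name, the `ttl` and `clock` parameters, and the `Sensor` class are all made up for illustration; the injectable clock just lets the example run without sleeping.

```python
import time


class ttl_cached_property:
    """Like a cached property, but recompute after `ttl` seconds.

    Hypothetical helper, sketched for illustration.
    """

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock

    def __call__(self, func):
        self.func = func
        self.key = "_ttl_cache_" + func.__name__
        return self

    def __get__(self, instance, owner=None):
        if instance is None:
            return self
        now = self.clock()
        cached = instance.__dict__.get(self.key)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1]  # still fresh
        value = self.func(instance)
        instance.__dict__[self.key] = (now, value)
        return value


fake_now = [0.0]  # controllable clock so the example needs no sleeping


class Sensor:
    def __init__(self):
        self.reads = 0

    @ttl_cached_property(ttl=10, clock=lambda: fake_now[0])
    def reading(self):
        self.reads += 1
        return self.reads


sensor = Sensor()
a = sensor.reading   # computed
b = sensor.reading   # served from the cache
fake_now[0] = 11.0   # pretend 11 seconds have passed
c = sensor.reading   # expired, so recomputed
```

Unlike the plain cached property, the timestamp has to be checked on every access, so this version pays a small cost on each lookup.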


Protocol7 posted:
That does look super nice actually. I don't know how realistic it is to redeploy every time data changes on this app though. It's basically for utility companies to go out and take photos of meters, utility poles and that kind of stuff, so the data will be updated pretty constantly.

Yea that makes sense. We use Django w/Django CMS and a template so that we can edit the site without redeploying. Took 2 weeks to get the first site live while in Dash I can have a one page app done in a day.


Given a document's hash, could you store it in the document's own metadata? I think it's impossible, but there may be clever workarounds?


unpacked robinhood posted:
Given a document's hash, could you store it into its own metadata, I think it's impossible but there may be clever workarounds?


And even if you could, so could the person sending totally_the_file_you_wanted_and_not_a_cryptolocker_check_the_hash_if_you_dont_believe_me.exe


Proteus Jones posted:
No. Once you add the hash, the file's hash changes. It's a dog chasing its own tail.

I think this trick can be done with MD5 (because it's broken enough)
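A quick sketch of the "dog chasing its own tail" problem using `hashlib`, plus one common workaround: hashing everything except the metadata line. The sample document, the `sha256: ` marker, and the `verify` helper are assumptions for the example, and this says nothing about whether the MD5 trick works.

```python
import hashlib

document = b"quarterly report, final version\n"
digest = hashlib.sha256(document).hexdigest()

# Embed the digest as "metadata" by appending it to the content.
stamped = document + b"sha256: " + digest.encode("ascii") + b"\n"
stamped_digest = hashlib.sha256(stamped).hexdigest()

# The embedded value describes the old bytes, not the file that contains it.
matches = (stamped_digest == digest)


def verify(stamped_bytes: bytes) -> bool:
    """Workaround: hash everything except the trailing metadata line."""
    body, _, meta = stamped_bytes.rpartition(b"sha256: ")
    return hashlib.sha256(body).hexdigest().encode("ascii") == meta.strip()
```

As the thread points out, a self-contained check like this only detects accidental corruption; anyone who can alter the file can recompute the embedded hash too.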


taqueso posted:
I think this trick can be done with MD5 (because its broken enough)

Wouldn't that make this problem worse?

DoctorTristan posted:
And even if you could, so could the person sending totally_the_file_you_wanted_and_not_a_cryptolocker_check_the_hash_if_you_dont_believe_me.exe

I thought I read something a while ago saying it's pretty easy to make an "attacker" file match a desired MD5 hash. I could be wrong though, not as well versed in security stuff as I probably should be.


Protocol7 posted:
Wouldn't that make this problem worse?
