Is there an offline version of DLSS? Say I don’t need real-time DLSS, so I can give the algorithm more iterations to, say, upscale images, and I can even train the algorithm on high-res sample images.

| # ? Jun 1, 2024 06:24 |

Shaocaholica posted:
> Is there an offline version of DLSS? Say I don’t need real-time DLSS so I can give the algorithm more iterations to say upscale images and I can even train the algorithm with high res sample images.

Isn't some supercomputer at Nvidia already doing this better than we could at home? I thought DLSS used a combo of that + distributed computing to generate the data it uses.

DLSS 2.0 is "offline" already; there is no phoning home. AI upscaling is an established, old-school workload. People have used it to upscale textures in old video games, restore old or damaged war footage, etc. There are even some free and paid tools online that do it in the cloud, for example https://letsenhance.io/

Cygni fucked around with this message at 19:22 on Apr 30, 2020

VelociBacon posted:
> Isn't some supercomputer at Nvidia already doing this better than we could at home? I thought DLSS used a combo of that + distributed computing to generate the data it uses.

Oh, maybe. That would be useful for industries outside of games. Hint hint: pretty much all movies with VFX are rendered at HD, not 4K. All those 4K Blu-rays are some flavor of upscaling, some better than others.

Ugly In The Morning posted:
> 2.0 is supposed to be way easier to integrate into games, too, so I have high hopes. It’s almost making me wish I got a 4K monitor instead of 1440p one. That poo poo is pure voodoo and if AMD doesn’t come up with an equivalent, I can’t imagine them having the mid/high end market ever again.

With DLSS and full RTX you still aren't really pushing 4K at or above 60 fps consistently, if that makes you feel better. Maybe a 2080 Super or something does.

VelociBacon posted:
> Isn't some supercomputer at Nvidia already doing this better than we could at home? I thought DLSS used a combo of that + distributed computing to generate the data it uses.

That was DLSS 1.0, and it's part of why people were scratching their heads about it as a long-term solution (getting lots of devs to submit enough poo poo to their systems to build the NNs, which may or may not change with patches and updates, was... uh... probably not going to scale well). DLSS 2.0 doesn't use any game-specific pre-computed NN or whatnot; it does it all right there on your system. That, and the fact that it uses data that's pretty much already there if you have TAA, is why it's much easier for devs to actually use.

Edit, for clarity: it uses a generalized framework that's pre-computed (and thus also updatable) by Nvidia, but that framework does not need to be trained specifically per game like 1.0 did.

DrDork fucked around with this message at 19:51 on Apr 30, 2020

I think DLSS 1.0 still has potential if it can be trained to be better than DLSS 2.0.

DrDork posted:
> DLSS 2.0 doesn't use any pre-computed NN or whatnot--it does it all right there on your system. That and the fact that it uses data that's pretty much already there if you have TAA is why it's much easier for devs to actually use.

DLSS 2.0 still uses a pre-computed model that Nvidia cranked out on their supercomputers; the difference is that it's now a single generic model rather than the per-game and per-resolution models that 1.0 used.

DrDork posted:
> That was DLSS 1.0, and is a part of why people were scratching their heads as far as it being a long-term solution (getting lots of devs to submit enough poo poo to their systems to be able to build the NNs, which may or may not change with patches and updates, was...uh...probably not going to scale well).

Where are you seeing that? Nvidia says 2.0 is still trained on their DGX supercomputers. My impression is that the big difference is that 2.0 uses more temporal data, which allowed them to create a general upscaling network that doesn't require per-game training (though I suspect they'll continue to refine the DNN as new games add support).

Stickman fucked around with this message at 19:36 on Apr 30, 2020

repiv posted:
> DLSS 2.0 still uses a pre-computed model that Nvidia cranked out on their supercomputers, the difference is it's a single generic model now rather than the per-game and per-resolution models that 1.0 used.

You're right, I was a bit too broad in the way I worded it. The lack of per-game training is a huge difference, though, in terms of being able to scale and keep things up to date.

Stickman posted:
> My impression is that the big difference is that 2.0 uses more temporal data, which allowed them to create a general upscaling network that doesn't require per-game training (though I suspect they'll continue to refine the DNN as new games add support).

Yeah, from what I gather DLSS 1.0 was a combination of two DNNs: a first stage that anti-aliased the image using temporal feedback, and a second stage that "intelligently" upscaled the anti-aliased image via pure guesswork. DLSS 2.0 is closer to TAAU in that it temporally integrates low-res input into anti-aliased, higher-resolution output in a single step, so it can accumulate real detail up to the limit of the output resolution rather than just the input resolution.

repiv fucked around with this message at 20:14 on Apr 30, 2020
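
The temporal-accumulation idea repiv describes can be illustrated with a toy, one-dimensional upsampler. This is a minimal sketch, not Nvidia's algorithm: it assumes a perfectly static scene, a jitter that cycles through every sub-pixel offset, and a plain per-pixel running mean. Real TAAU and DLSS 2.0 also reproject the history with motion vectors and decide how much history to trust (DLSS 2.0 uses its trained network for that part). The function names `render_low_res` and `temporal_upsample` are hypothetical.

```python
def render_low_res(scene, scale, jitter):
    """Stand-in renderer: one sample per low-res pixel, shifted by a
    sub-pixel jitter. Returns (output_index, value) pairs so the caller
    knows where on the high-res grid each sample landed."""
    low_width = len(scene) // scale
    return [(i * scale + jitter, scene[i * scale + jitter]) for i in range(low_width)]


def temporal_upsample(scene, scale, frames):
    """Scatter jittered low-res samples into a high-res history buffer,
    keeping a running mean per output pixel (static scene assumed)."""
    history = [0.0] * len(scene)
    counts = [0] * len(scene)
    for frame in range(frames):
        jitter = frame % scale  # cycle through every sub-pixel offset
        for idx, value in render_low_res(scene, scale, jitter):
            counts[idx] += 1
            history[idx] += (value - history[idx]) / counts[idx]
    return history


# Fine detail that alternates every output pixel.
scene = [0, 1, 0, 1, 0, 1, 0, 1]
print(temporal_upsample(scene, scale=2, frames=1))  # odd pixels never sampled: all 0.0
print(temporal_upsample(scene, scale=2, frames=2))  # two jittered frames: pattern recovered
```

A single low-res frame only ever sees every other output pixel, so the alternating pattern is lost; two jittered frames fill in the whole output grid. That is the "real detail up to the limit of the output resolution" point, as opposed to guessing the missing pixels from one image.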

I know this is all speculation and nobody will be able to give me a definite answer, but: I'm planning on getting a 3070 or maybe a 3080 when they get released. I suppose what I want to know is:

- Typically, what are the price differences between the 70 and 80 on release?
- Will they all be out of stock instantly?
- Will the various card manufacturers instantly come out with 10 variations on each, or does that come later?
- Typically, what are the performance differences between the two? If it's a 200 dollar difference with a 5% performance difference, I'll probably just get the 3070. But on the other hand, I want to future-proof. A Ti is overboard; I'll never get a Ti.
- What is a good RAM amount these days for future-proofing? 8GB? I suppose we'll know more when Xbox/PS5 specs are announced?
- What manufacturer is recommended for the cards? I saw someone do a super useful post on EVGA cards a page or two back, and if I don't get much input I'll just follow that guide.

I want to future-proof by not getting something poo poo, but I also don't want to spend more than about 500 dollars. I currently have an old 1920x1200 monitor and I want to get a new one, but I'm not aiming for 4K.
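
The "$200 more for 5% more performance" trade-off can be put in numbers. A minimal sketch: the prices and relative performance scores in the example are hypothetical placeholders built from the figures in the post, not real 3070/3080 data, and both function names are made up for illustration.

```python
def perf_per_dollar(price, perf):
    """Relative performance score bought per dollar."""
    return perf / price


def cost_per_extra_percent(base_price, base_perf, step_price, step_perf):
    """Dollars paid for each extra percent of performance when stepping
    up from the base card to the faster card."""
    extra_percent = (step_perf - base_perf) * 100 / base_perf
    if extra_percent <= 0:
        raise ValueError("the step-up card must be faster than the base card")
    return (step_price - base_price) / extra_percent


# Hypothetical: base card $500 scoring 100, faster card $700 scoring 105.
print(perf_per_dollar(500, 100), perf_per_dollar(700, 105))  # 0.2 0.15
print(cost_per_extra_percent(500, 100, 700, 105))            # 40.0 dollars per percent
```

A higher cost per extra percent means the step-up buys less; whether that is worth it for "future-proofing" is the judgment call the replies below get into.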

redreader posted:
> I know this is all speculation and nobody will be able to give me a definite answer, but:

- Depends a lot, but usually $100-$150.
- At first? Yeah. Usually 30 days is enough to find stock if you use nowinstock, but if production is limited due to Covid...?
- Takes time to ramp up. I'd expect Founders right away and other AIBs to trickle in, but usually pretty quickly.
- Totally depends. Usually the xx80 line is not a great price/perf ratio, though.
- Nothing needs more than 8GB, but I would expect more would eventually be needed. I don't think GPU RAM requirements scale up that fast, though. I'd expect the GPU market to drive GPU RAM requirements in general, so if you're looking at the 3070/80, whatever is in those will be fine.
- EVGA is typically the gold standard; to be honest there isn't much bad anymore, IMO.

If $500 is your limit that *might* get you a 3070, but I am not certain they'll come in under that.

You should listen to other people here over me, but there probably won't be a significant future-proofing difference between the 80 and 70. You might as well keep the money and put it toward a new, significantly better 5070 or whatever they do like that 3.5 years later.

redreader posted:
> I want to future proof by not getting something poo poo but I also don't want to spend like, over about 500 dollars. I currently have an old monitor that's 1200x1920 but I want to get a new one, but I'm not aiming for 4k.

If 500 dollars is the limit and you want to get the card on release day, you're getting a 3070.

Edit: I'm on the fence about whether I'm also buying the 3070 or holding on until the 3060 release. It most likely depends on how much prettier Cyberpunk will be with RTX effects. If I think I can hold out until the 3060 release, I'll trade this 1060 6GB up to a 1070/1070 Ti and wait for its release.

Rosoboronexport fucked around with this message at 20:21 on Apr 30, 2020

While it's fun and we do it because we're bored, in terms of actual purchasing decisions it's silly to try to predict much in advance. You're talking about products that will become available maybe 6 months from now, and we don't know anything real about them. The way Nvidia structured their past couple of releases, there was a time period between Founders Edition reviews and AIB availability where it was reasonably easy to make a decision and preorder before products sold out.

Stickman posted:
> Where are you seeing that? NVidia says 2.0 is still trained on their DGX supercomputers.

While a general model apparently works fine, I don't get why they couldn't do even better scaling by training a network specific to the game (taking temporal data into account, DLSS 2.0-style).

redreader posted:
> I know this is all speculation and nobody will be able to give me a definite answer, but:

$500 will 100% certainly be 3070 tier. Depending on how bad launch gouging is, it might even bump over that a bit.

Yes, major releases always sell out instantly from all brands. Set up some nowinstock alerts and F5 EVGA's website on launch day and try to get one, if that's your thing.

Typically Founders Editions have released first by a certain margin of time (1-2 weeks). They are fine but uninspiring cards: warranty is only 1 year and service is not EVGA-tier, and NVIDIA glues them together now, which makes them a pain to service yourself. But they are the easiest to put a waterblock or G12 on (which may or may not work on this new generation, you never know).

Generally speaking, in the lower-tier cards you are best off getting the cheapest axial-cooler model from a third party (EVGA). The problem is exactly what you note: better cooling and binning makes like a 5% difference, and it's not worth paying 25% more for it. You would be better off buying a poo poo-tier 3080 than the blingiest 3070 Hall Of Fame model; the step to the higher chip makes much more of an impact.

8GB is the bare minimum these days and is not at all futureproof. 16GB is the "safe" amount; 32GB is futureproofing.

You are basically a shoo-in for the EVGA 3070 Black Edition Gaming or whatever they are calling their bottom-tier dual-fan 3070 this time around. Nobody can tell you exactly what that will be, but the low end of 3070 pricing will almost certainly dip below $500. Unfortunately everybody else will be going after this too, because they're one of the best deals... hence saying you'll have to set some nowinstock alerts and watch the EVGA website if you want it in less than 2 months or so.

The other thing is you can try EVGA Step-Up. This will have you buy some other (non-refurbished) card once the launch is announced (it doesn't matter what; get a 1030 if you want, or get a firesale 2080 if you see one under $500). You then register the card's warranty with EVGA. At some point shortly after launch they will put the 3070 on the Step-Up availability list; you register with them and go on a waiting list. When they get around to you, you mail in your card and pay the difference between what you paid (minus any rebates) and the MSRP of the new card, plus shipping both ways. Then they'll send you the 3070. The advantage is that you are on a waiting list, so they will get around to you eventually. The downside is it could be a couple of months, especially if there are already shortages.

Paul MaudDib fucked around with this message at 21:23 on Apr 30, 2020
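
The Step-Up payment described above (the new card's MSRP minus what you paid net of rebates, plus shipping both ways) is simple arithmetic. A minimal sketch: the dollar amounts are hypothetical, `step_up_cost` is a made-up name, and since the post does not say what happens if the original card cost more than the new one, this sketch simply rejects that case.

```python
def step_up_cost(paid, rebates, new_msrp, ship_out, ship_back):
    """Out-of-pocket cost of a step-up as described in the post:
    (new MSRP - (price paid - rebates)) + shipping both ways."""
    net_paid = paid - rebates
    difference = new_msrp - net_paid
    if difference < 0:
        # Not covered in the post; treated as an error in this sketch.
        raise ValueError("new card's MSRP is below what you paid")
    return difference + ship_out + ship_back


# Hypothetical: paid $450 with a $20 rebate, new card MSRP $499, $15 shipping each way.
print(step_up_cost(450, 20, 499, 15, 15))  # 99
```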

Paul MaudDib posted:
> while a general model apparently works fine, I don't get why they couldn't do even better scaling by training a network specific to the game (taking temporal data into account DLSS 2.0 style)

A weird thing with neural networks is that the more general a model gets, the better it tends to get, probably because it can use way more training data.

Paul MaudDib posted:
> Typically Founders Editions have released first by a certain margin of time (1-2 weeks). They are fine but uninspiring cards, warranty is only 1y and service is not EVGA-tier, and NVIDIA glues them together now which makes them a pain to service yourself. But they are the easiest to put a waterblock or G12 on (which may or may not work on this new generation, you never know).

Nvidia has been upping their game on the FE cards year over year. It seems reasonable to think they may be good(ish) for Ampere. Do they still bin the FE cards? I forget.

Edit: I'll no doubt end up with an FE card, because the minute I see an in-stock 3080 (regular or Ti) I'm going to buy it so hard that my furious typing will register on the Richter scale at US Geo. I'm guessing all of us with HDMI 2.1-compatible gaming OLEDs are in the same boat; we've been waiting for Ampere for soooo long. It was a real disappointment when they didn't include 2.1 on Turing. Also, that pie-in-the-sky DP to HDMI 2.1 converter that Realtek was touting is absolutely nowhere to be found and probably never will be.

Taima fucked around with this message at 21:27 on Apr 30, 2020

Do GPU dies/packages have to be soldered onto their final PCB before the binning process? That seems inefficient. Maybe there's a special binning jig for loose GPU packages?

There's absolutely a custom rig where they drop the chip into a socket and test it without soldering it in.

GN has a video of it. The hardware exists but binning doesn’t seem to be the primary use case. https://www.youtube.com/watch?v=BYzxDaxNer0

Thanks, everyone. Yeah, I can go over 500 if I have to. I'd like to get a cool, quiet-running 3070, so probably the EVGA gamer edition card. That just means I probably won't get the 3080. We'll see what it all looks like when it's closer to the date! Thanks again for the input.

Sorry if this is the wrong place to post this, but I don't want to create a dedicated thread in the Haus of Tech Support.

My computer wouldn't boot, and troubleshooting revealed that removing my GPU (PowerColor Red Dragon Radeon 5700 XT) solved the problem. I popped the old GPU (GeForce GTX 1060) back in and everything works fine again. The 5700 had worked fine since I installed it in January, until this suddenly happened a few days ago. I've put it back in and tried again, and that didn't solve the problem. I contacted PowerColor and haven't heard back yet.

My questions are these: how certain can I be that the GPU is no longer functional? Could there be a reason other than the card dying why the PC won't boot with it installed? If it is indeed dead, what are my RMA chances if I've had it since mid-January (purchased from Micro Center)?

Racing Stripe posted:
> Sorry if this is the wrong place to post this, but I don't want to create a dedicated thread in the Haus of Tech Support.

Would not power on at all, or powered on and wouldn’t display anything?

Racing Stripe posted:
> Sorry if this is the wrong place to post this, but I don't want to create a dedicated thread in the Haus of Tech Support.

- Quite probably dead.
- What PSU, and how old is it?
- Try using a different power connector to the card if possible.
- If you do need to RMA, you should be fine. You are the original owner, right? You'll get sent an identical 5700 XT, but you may be stuck with that 1060 for a while; I assume PowerColor doesn't do cross-shipping or anything.

Ugly In The Morning posted:
> Would not power on at all, or powered on and wouldn’t display anything?

Case lights turned on and fans started spinning, but it showed a black screen for five seconds or so before it powered off and repeated the cycle.

Lockback posted:
> -Quite probably dead

My first thought was a dead PSU, so I actually just replaced it (EVGA, 650 watts). It's a new power connector, I suppose, since I swapped out all the cables. However, I do use a 45-degree angled extender adapter that I don't use with the other card, so I'll try leaving that out. It could have gone bad, I suppose. Yes, I'm the original owner; I bought it from Micro Center, so I should be able to provide documentation.

Wait, you mean the EVGA Power Link? I remember reading that those things were crap, full of crap, and would actually introduce instability into your graphics cards.

SwissArmyDruid posted:
> Wait, you mean the EVGA Power Link?

Really? I've had one on my 1080 Ti for a few years with no trouble, but I don't want to blow up my card.

SwissArmyDruid posted:
> Wait, you mean the EVGA Power Link?

The only real negatives I've heard of are dorks torquing the PCIe power connectors when installing it, but it's... not really a complicated device internally. I doubt it would add any more issues than a cable extender would. I mean, I wouldn't push 1 kW through it with a card on LN2, personally, but I wouldn't do that with a cable extension either. I've got one that I goofed with a little when EVGA was giving 'em away for free, but it looked a little weird on my card so I took it off. Seemed fine to me, though! (Also, don't lose the wrench it comes with if you wanna reuse it.)

SwissArmyDruid posted:
> Wait, you mean the EVGA Power Link?

No, not that. I hadn't heard of that. Similar idea, but it's just a power cord extender with the wires angled 45 degrees coming out of the plastic plug that inserts into the GPU. I need it because that side of the GPU is about 1/4 inch from the lid of my case.

Enos Cabell posted:
> Really? I've had one on my 1080ti for a few years with no trouble, but I don't want to blow up my card.

Cygni posted:
> The only real negatives I've heard of are dorks torqueing the PCIe power connectors when installing it, but its... not really a complicated device internally. I doubt it would add any more issues than a cable extender would.

What I'd heard is that if your overclock is marginal WITH the Power Link, taking it out can make the overclock stable, with some repeatability. Which, in my opinion, means it's not 100% transparent (acting like it isn't there); it has the potential to cause problems, and I have already torn out a ton of hair just trying to diagnose bad video cards without adding extensions into the mix.

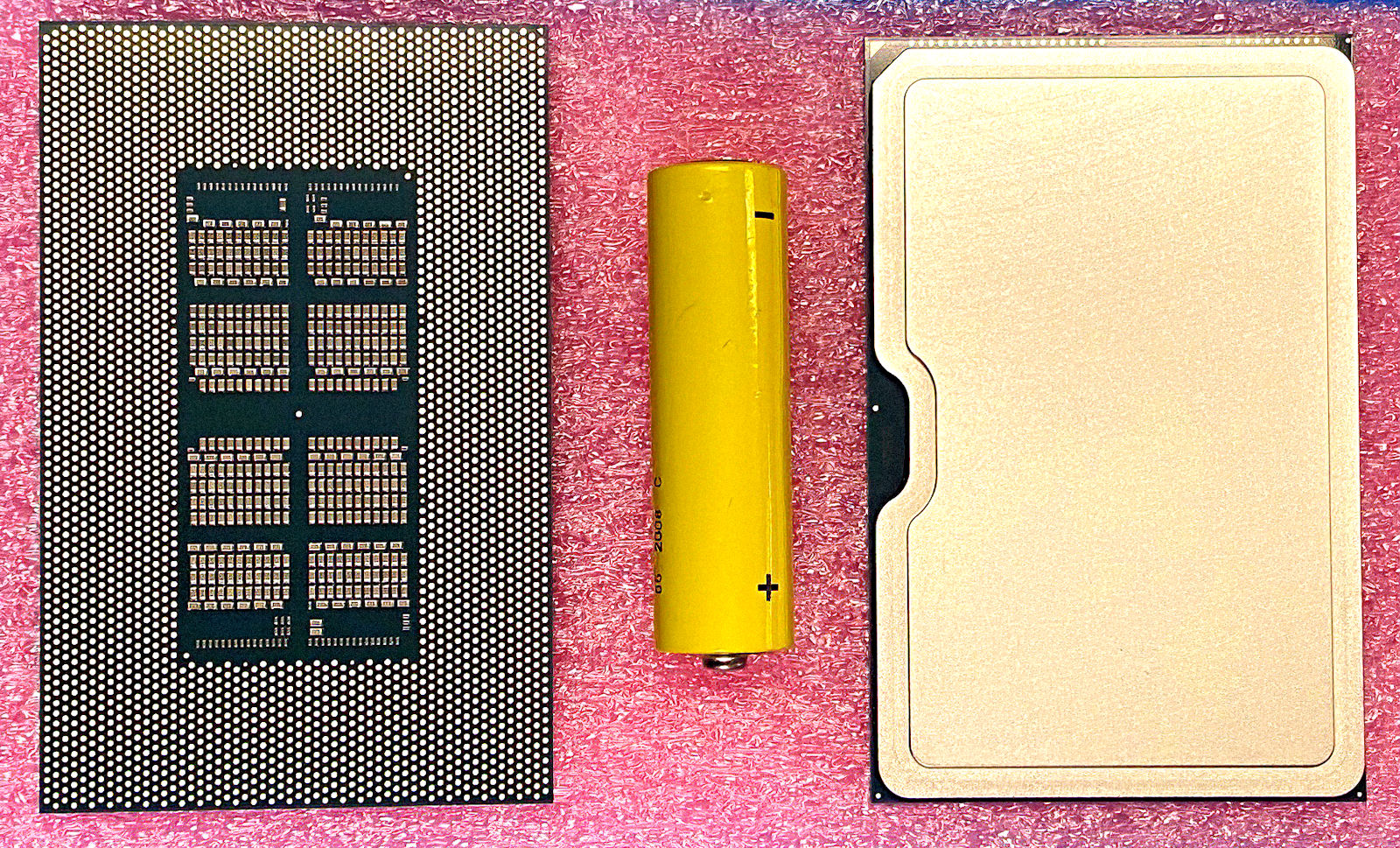

Intel teases Xe-HP graphics processor – ‘Father of All’ GPUs

Lame, they should have put the battery label-side up so we could see what size it is. Looks like a 14500/AA, though, but it would be comical if it were an 18650.

Racing Stripe posted:My first thought was a dead PSU, so I actually just replced it. EVGA 650 watts. It's a new power connector, I suppose, since I swapped out all the cables. However, I do use a 45 degree angled extender adapter that I don't use with the other card, so I'll try leaving that out. It could have gone bad, I suppose. Try it without the extender but if two different PSUs were used and it's still in a boot cycle you got a dead 5700Xt. https://www.powercolor.com/rma If you're in the US start by emailing: RMA Service (USA): support.usa@powercolor.com Make sure to tell them it boots fine with the 1060 and that you tried a new PSU. Might save you some back and forth emailing.

Lockback posted:
> Try it without the extender but if two different PSUs were used and it's still in a boot cycle you got a dead 5700Xt.

I tried removing the extender and it didn't fix the problem. I'm contacting them now.

Racing Stripe fucked around with this message at 20:57 on May 1, 2020

SwissArmyDruid posted:
> What I'd heard is that if your overclock is marginal WITH the powerlink, taking it out can make the overclock stable, with some repeatability.

I mean, it's literally a tiny board with two capacitors on it, which are actually intended to smooth out some ripple, so yeah, I guess that might have some sort of impact (positive or negative) on a knife-edge overclock, depending on the PSU and GPU? But honestly, that sort of turbo-nerd OCing is pretty worthless on Pascal and Turing anyway. But I guess turbo-nerds will be turbo-nerds.
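
The point about capacitors smoothing ripple can be illustrated with a first-order low-pass filter. This is a toy sketch, not a model of the Power Link's actual circuit: it treats the capacitors plus the supply/cable resistance as a single RC stage, and the 12 V rail, the ±0.1 V ripple, and the smoothing factor `alpha` are all made-up numbers. The function names are hypothetical.

```python
def rc_lowpass(samples, alpha, start):
    """Discrete first-order RC low-pass: each output moves a fraction
    alpha (= dt / (RC + dt)) of the way toward the current input."""
    out = []
    y = start
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def peak_to_peak(values):
    return max(values) - min(values)


# Hypothetical 12 V rail with +/-0.1 V ripple alternating every sample.
rail = [12.1 if i % 2 == 0 else 11.9 for i in range(200)]
smoothed = rc_lowpass(rail, alpha=0.1, start=12.0)
print(round(peak_to_peak(rail), 3))            # 0.2
print(round(peak_to_peak(smoothed[100:]), 4))  # steady-state ripple, much smaller
```

In this model a smaller `alpha` (larger RC) flattens the ripple more, at the cost of a slower response to changes in the input.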

4, maybe 8 chiplets? Guess they are gonna go full Zeppelin-style chiplet scaling. 1, 2, 4, LP/HP/HPC.
