
redreader posted:When I asked about this earlier in the thread someone said that ray-tracing is being standardized in directx and that Nvidia will have better ray-tracing due to dedicated hardware. AMD's big navi will be on 7nm and doesn't have dedicated ray-tracing hardware, maybe they'll have better standard 3d performance. It's possible that their cards are a cheaper way to get better standard 3d performance if you don't care about ray-tracing. I do, though. AirRaid posted:Big Navi does support hardware ray tracing though. yeah, people misunderstood what that patent was, AMD built their dedicated hardware into the texture unit, but they do have dedicated hardware ("BVH Traversal unit"). I think of it as being analogous to an execution port on a CPU, AMD's RT and texture units share an execution port. They're betting those operations won't happen at the same time often enough to hurt performance too much.


| # ? May 30, 2024 15:04 |


redreader posted:When I asked about this earlier in the thread someone said that ray-tracing is being standardized in directx and that Nvidia will have better ray-tracing due to dedicated hardware. AMD's big navi will be on 7nm and doesn't have dedicated ray-tracing hardware, maybe they'll have better standard 3d performance. It's possible that their cards are a cheaper way to get better standard 3d performance if you don't care about ray-tracing. I do, though. There's only one known way to compute BVH trees fast, and it requires fixed-function hardware, so if AMD wants to do real time raytracing, they have to have it. I'm fairly sure both companies are copying notes from the exact same academic paper, either way.
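For anyone wondering what a "BVH traversal unit" actually spends its time on: most of it is checking whether a ray hits a bounding box, over and over, while walking down the tree. Below is a minimal sketch of the standard "slab" ray/box test in plain Python. It only illustrates the kind of operation that gets moved into hardware; it is not how either vendor's silicon is implemented, and the function name `ray_hits_box` is made up for this example.

```python
def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: does a ray (t >= 0) intersect an axis-aligned box?

    origin, direction, box_min, box_max are 3-tuples of floats.
    """
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it can only hit
            # if the origin already lies between them.
            if o < lo or o > hi:
                return False
            continue
        # Distances along the ray to the two planes of this slab.
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval where the ray is inside every slab so far.
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return False
    return True


unit_box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
print(ray_hits_box((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box))  # aimed at the box
print(ray_hits_box((3.0, 0.0, -5.0), (0.0, 0.0, 1.0), *unit_box))  # passes beside it
```

A real traversal repeats this test for each child node and only descends into the boxes that were hit, finishing with ray/triangle tests at the leaves.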


AirRaid posted:Big Navi does support hardware ray tracing though. But in what manner is the question. They could cheap out and have "ray tracing support" to the same extent the consoles will, which is to say a limited, low-end implementation that makes no real attempt to replicate what something like RTX Quality can do. Or they could take a real stab at it and bank on many titles already baking console RT into their engines and then layering on top of that in a manner that lets them leverage the optimizations from a console-centric origin, so the end result is comparable to RTX quality. We really have no idea, though, because AMD hasn't said much of anything about what their cards will be able to do yet. Just gotta wait.


VorpalFish posted:I mean obviously the games you play and your expectations for settings matter a lot. AAA titles with high settings still belongs to the more money than sense I don't care about value crowd, and even there you're seeing frame rate dips. yeah it just all seems fairly pointless, 1440p looks awesome, and the same hardware that gets you 4K60 gets you high refresh 1440p. And 34"/38" gaming ultrawides own too, the current crop do at least 120 hz and up to 175 hz in some cases, there are really no high-refresh 4K gaming ultrawides. If you want it for productivity, and for older games, yeah, I guess. But for modern AAA titles it's just needlessly intensive for very little real gain imo, and means you're missing out on a bunch of other cool hardware niches.


Paul MaudDib posted:there are really no high-refresh 4K gaming ultrawides. To be fair, this has largely been because you couldn't shove enough bandwidth to drive one down a DP 1.4 or HDMI 2.0b line. HDMI 2.1 opens that up considerably, so I would expect we'll start seeing stuff like 5120x2160@120Hz in the nearish future.


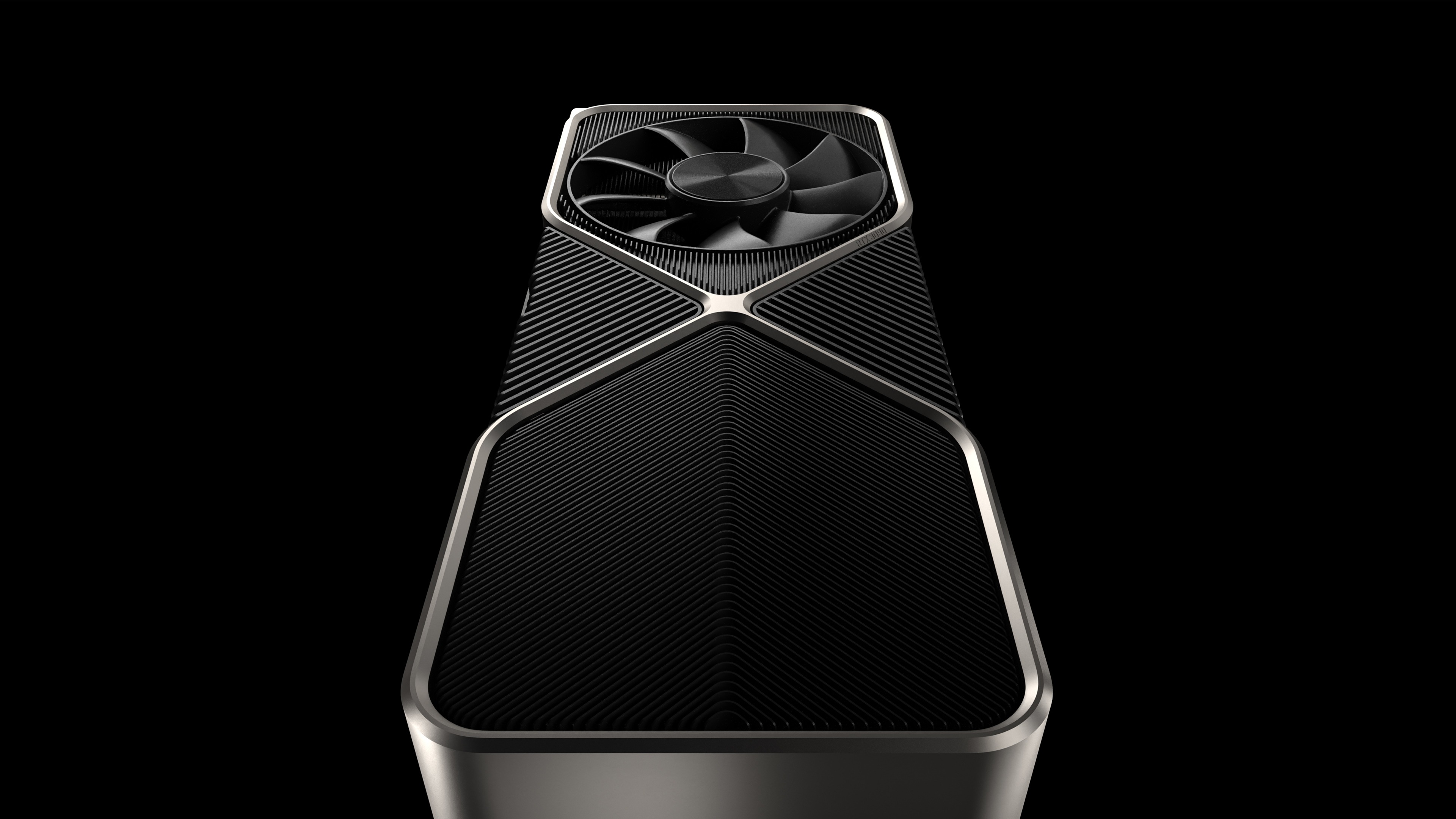

AirRaid posted:It's a cover. Nvidia's site has images of both the 3080 with the cover also and the 3090 without - ok word, i guess them little fins are gonna be that delicate in the 3090. this cooling solution really is insane, the boost clocks better be mental.


DrDork posted:To be fair, this has largely been because you couldn't shove enough bandwidth to drive one down a DP 1.4 or HDMI 2.0b line. HDMI 2.1 opens that up considerably, so I would expect we'll start seeing stuff like 5120x2160@120Hz in the nearish future. that's the point though - 4K's bandwidth requirements are so extreme that it will forever be lagging behind what is possible at 1440p and 1080p. When HDMI 2.1 comes around we can do 1440p240 and stuff like that as well. Every resolution is a particular set of compromises, I just don't feel like 4K gaming is really a set of compromises that work that well for many people, high-refresh 1440p is just a better general-purpose monitor for most tasks, and much cheaper. (and 8K is going to be lol, like 30 fps or less outside DLSS-accelerated titles.)


Paul MaudDib posted:that's the point though - 4K's bandwidth requirements are so extreme that it will forever be lagging behind what is possible at 1440p and 1080p. When HDMI 2.1 comes around we can do 1440p240 and stuff like that as well. Sure, but diminishing returns also exist. It's not like the difference between 240Hz and 300Hz is anywhere near as noticeable as between 60Hz and 120Hz, after all. With HDMI 2.1 we'll be able to get 4-5k into the 100-120Hz range, which is a great option for a lot of people. Is it perfect for everyone? Nope--some will legitimately prefer 1440p@200Hz or whatever, and others will say gently caress it and stick with 1080p@60Hz because it won't take a super-expensive GPU to drive. Personally, I've been loving my ultrawide, and am very much looking forward to a modest bump in both resolution and refresh rate. But to each their own.
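The diminishing-returns argument falls straight out of frame-time arithmetic: what you perceive is the time each frame stays on screen, which is 1000 / Hz milliseconds. A quick sketch (helper names are made up for illustration):

```python
def frame_time_ms(hz):
    """Time one frame stays on screen at a given refresh rate."""
    return 1000.0 / hz


def ms_saved(old_hz, new_hz):
    """Per-frame time saved by moving from old_hz to new_hz."""
    return frame_time_ms(old_hz) - frame_time_ms(new_hz)


for old, new in [(60, 120), (240, 300)]:
    print(f"{old}Hz -> {new}Hz saves {ms_saved(old, new):.2f} ms per frame")
```

Going from 60Hz to 120Hz cuts roughly 8.3 ms off every frame, while 240Hz to 300Hz cuts roughly 0.8 ms, about a tenth as much.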


4K PC gaming doesn't really make sense until we get decent cheap gaming monitors at that resolution.


shrike82 posted:4K PC gaming doesn't really make sense until we get decent cheap gaming monitors at that resolution. For sure. I was just shopping those and it looks like getting one without dropping six hundo isn't going to happen. I'm happy with 1440p144hz for now, dropping that much on a monitor feels crazy to me (I say, planning to buy a 3080)


Some DirectML news: TensorFlow code is on GitHub. https://twitter.com/DirectX12/status/1303440401848823809 So here is what I don't get about DirectML; who's supposed to be in charge of building and training the data we will eventually use for DirectML, and how, or through what, would that be made available to a general audience? GPU drivers? A Windows 10 update?


I dunno, I bought a nice Samsung LED IPS 49" to use as a gaming monitor and it's fuggin nice. Granted I'm not playing BR games like y'all that need 9999 FPS to shoot 12 year olds so


Ugly In The Morning posted:For sure. I was just shopping those and it looks like getting one without dropping six hundo isn’t going to happen. I’m happy with 1440p144hz for now, dropping that much on a monitor feels crazy to me (I say, planning to buy a 3080) for me dropping a bunch on a monitor is a no-brainer, since it'll work fine and be very good for at least 10 years, unlike gpus that are good for 2 years and passable for another 3-4 max


shrike82 posted:4K PC gaming doesn't really make sense until we get decent cheap gaming monitors at that resolution. Guess that depends on your definition of "cheap." You can get a 4k@60 IPS monitor for ~$350. Ain't nothin, but it's also not crazy expensive or anything, especially since if you're buying into 4k gaming you're probably not thinking of driving it with a $300 GPU. If you mean 4k@144Hz, yeah, those are still quite expensive, but several good models exist around $800. Obviously a different market at that point, but when we're also talking about people trying to decide between a $700 and a $1500 GPU, well, it's not that outlandish, either. There are numerous 1440p@144-165Hz monitors that cost around the same. You can argue whether you think more resolution or higher refresh rates is better for a given scenario, but it's not like one is really any cheaper than the other. Truga posted:for me dropping a bunch on a monitor is a no-brainer, since it'll work fine and be very good for at least 10 years, unlike gpus that are good for 2 years and passable for another 3-4 max Pretty much this. I mean we all have our priorities and all, but if you invest in a real good monitor chances are it'll outlast pretty much any other part of your system.


Except even the high end 4K gaming monitors are compromised - isn't the 2nd batch of Samsung G9s ridden with issues?


Truga posted:for me dropping a bunch on a monitor is a no-brainer, since it'll work fine and be very good for at least 10 years, unlike gpus that are good for 2 years and passable for another 3-4 max I think for me part of it is that I can't gently caress around with monitors as much so they're just not as fun as a new graphics card. There's no OCing or benchmarks or stress tests to optimize, so...


MikeC posted:Should have quoted I guess. I think we can all agree that if the best AMD can squeeze out is a repeat of the GCN years they've given up and ceded the interesting part of the market to nvidia and maybe intel. But low-end/midrange dGPUs are kind of a dying breed to begin with considering the quality of integrated GPUs. Would make a lot of sense for AMD to focus on APUs/Consoles only but that'd lock them out of half of the HPC market that Epyc opened up.


Lisa Bae has a pretty good handle on the company - it's probably a competitive advantage to be one of the few shops to be able to spin a CPU+GPU solution. I'd be surprised if their GPU division was in the red. They're still recovering from years of mismanagement under Koduri and now that he's loving things up at Intel, the hope is that AMD can rebuild that division.


Truga posted:for me dropping a bunch on a monitor is a no-brainer, since it'll work fine and be very good for at least 10 years, unlike gpus that are good for 2 years and passable for another 3-4 max I paid $1245 for my U3011 on Thanksgiving 2010 - still have the original box and it's moved like 6 times, only real "complaint" is the backlight. shrike82 posted:Lisa Bae has a pretty good handle on the company - it’s probably a competitive advantage to be one of the few shops to be able to spin a CPU+GPU solution. I’d be surprised if their GPU division was in the red. What were some of the major strategic boo-boos Koduri made?


Malcolm XML posted:But low-end/midrange dGPUs are kind of a dying breed to begin with considering the quality of integrated GPUs. As impressive as they are, isn't there going to be a big jump with next gen that'll put most new ("big") games out of their range again? Or will they catch up quicker this time?


movax posted:What were some of the major strategic boo-boos Koduri made? We don't have that much visibility into AMD then but he presided over two lovely GPU generations while their marketing made pretty dumb claims about them. He seems to be repeating his performance at Intel by coyly hinting at the gaming performance of Xe discrete which I'm guessing will be a shitshow. And the funniest thing is he's supposedly in the running for CEO there.


ufarn posted:So here is what I don't get about DirectML; who's supposed to be in charge of building and training the data we will eventually use for DirectML, and how, or through what, would that be made available to a general audience? GPU drivers? A Windows 10 update? the model would be trained by whoever is developing the software that utilizes directml and shipped with the software directml is just a set of low-level ML primitives that a model can be implemented on top of, it doesn't handle models directly


I suspect that people who buy 3080 FE will be in for a bad time - the 2 slot dual fan form factor doesn't make sense cooling a 320W GPU. Even Nvidia marketing copy for the card shows it running 20c hotter than the 3090 at various noise levels.


Well good news, the FE reviews are supposed to hit 3 days before launch so hopefully some actual meaningful data will be available.


shrike82 posted:I suspect that people who buy 3080 FE will be in for a bad time - the 2 slot dual fan form factor doesn't make sense cooling a 320W GPU. Even Nvidia marketing copy for the card shows it running 20c hotter than the 3090 at various noise levels. Yeah I think I've relegated myself to shooting for a higher end EVGA or ASUS for the BIOS switch. Speaking of that, I know ASUS has confirmed it for the Strix but has anyone seen it for the EVGA FTW3?


Rinkles posted:As impressive as they are, isn't there going to be a big jump with next gen that'll put most new ("big") games out of their range again? Or will they catch up quicker this time? Even the best iGPUs these days are outperformed by a 1030, he's talking out his rear end.


shrike82 posted:4K PC gaming doesn't really make sense until we get decent cheap gaming monitors at that resolution. There is only one reason I care about 4K and plan my build around it: 4K OLED with a setup dedicated entirely to that, where you're sitting close to the set so you take full advantage of the pixels (for example that's about 6-8 feet away for a 65 inch). You need to be taking full advantage of the benefits of OLED and HDMI 2.1 VRR for the whole setup to really be worth it. It's insanely niche and I agree that almost no one should care about 4K pc gaming yet


Some Goon posted:Even the best iGPUs these days are outperformed by a 1030, he's talking out his rear end. I just checked and even the Iris Plus G7 is ~25 percent behind the 1030 on benchmarks. Fine for Windows, but yeah, incredibly anemic for most stuff past that.


highend gaming monitors going down the route of large ultrawides with huge curves is a deadend too -


jisforjosh posted:Yeah I think I've relegated myself to shooting for a higher end EVGA or ASUS for the BIOS switch. The FTW3 will have dual bios.


shrike82 posted:Except even the high end 4K gaming monitors are compromised - isn't the 2nd batch of Samsung G9s ridden with issues? If your argument is that one incredibly niche monitor had issues bad enough for a recall, therefore 4k gaming is dumb, then might I also suggest you never buy a video card at all because every GPU manufacturer out there has had a similar recall / whoopsie? I mean, even thread-darling EVGA has hosed it up pretty bad before. I will agree that current 4k@>100Hz monitors are all compromised in the sense that they need to use DSC to hit those numbers, which is part of why I'm excited to see HDMI 2.1 actually launch for real and hopefully spur some advances in monitor tech.


what's a no compromise 4k144 monitor I can buy today that I don't have to worry about for 5 years then?


shrike82 posted:what's a no compromise 4k144 monitor I can buy today that I don't have to worry about for 5 years then? Well it's close but the LG 48 inch OLED will do 4k/120fps + gsync on HDMI 2.1


shrike82 posted:what's a no compromise 4k144 monitor I can buy today that I don't have to worry about for 5 years then? I keep saying "now that HDMI 2.1 is actually launching we should see good 4k high-hz monitors soon" and you keep ignoring that "soon" is not "now." But, yeah, the C9 series is great if you're in the situation where you can use a TV for a monitor. I'm not, so I'm stuck waiting.


shrike82 posted:highend gaming monitors going down the route of large ultrawides with huge curves is a deadend too - Why do you think it's a dead end? Do you mean a fad that will die like 3D TV or something else?


HDMI 2.1 solves the bandwidth problem but oled is still the only solution for really good blacks and fast response times, oled options are pretty sparse in monitor sizes and then you get to deal with burn in and on top of all that gpus still aren't close to pushing high refresh rate. I figure 1440p144 is a good stopgap until 4k144 microled is actually viable in the market. If I'm gonna drop 1k+ on a monitor it better be perfect.


quote:Ethereum Miners Eye NVIDIA's RTX 30 Series GPU as RTX 3080 Offers 3-4x Better Performance in Eth


VorpalFish posted:gpus still aren't close to pushing high refresh rate. Watch me bitch


this is good news... for AMD!!!


AMD counters by announcing that it was never in fact Big Navi, but Dig Navi, the most powerful mining GPU of all time.
