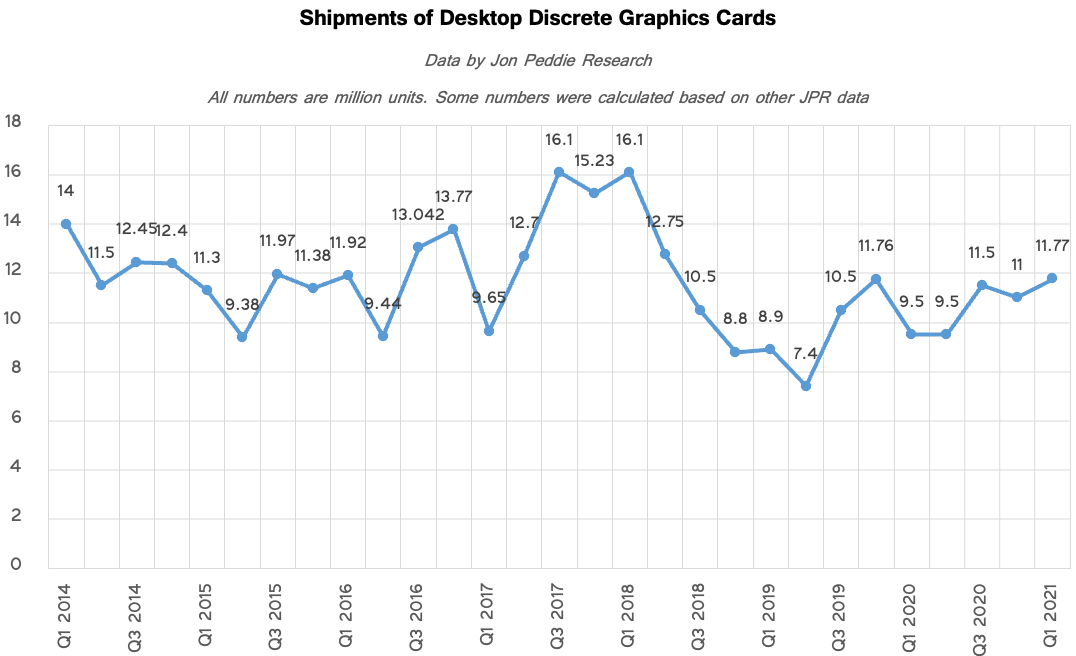

The prices can only drop so far, though: after the crypto boom dies down in earnest, there is still the chip shortage.

| # ? May 29, 2024 02:32 |

Shifty Pony posted: Everything should just use the Metroid Prime reflection technique (@38sec)

To be fair to Metroid Prime, it had maybe my favourite visual effect in history: very occasionally, if you were near an explosion, you'd see a brief flash of Samus's face reflected in the glass of her visor.

Zedsdeadbaby posted: The prices can only drop so far, though: after the crypto boom dies down in earnest, there is still the chip shortage.

I thought the chip shortage was predominantly focused on chips with bigger nm structures than what's relevant for gaming, like low-power stuff that goes in home appliances and stuff. Please don't tell me we have to compete with Bosch for GPU silicon now.

Mr. Neutron posted: Seems so. Plenty of cards in stock now, only nobody wants them for those prices.

The Ti models have the Ethereum mining limiter, but the non-Ti models don't. As far as the miners are concerned, a 3070 Ti is worse than a 3060. So that's a good sign that the LHR rollout will bring prices down further.

Well I decided to pull the trigger on the 3070 and ordered it. Then 10 minutes later I get an email saying the shop doesn't actually have it in stock and they don't know when it might be delivered. Fuckers.

Lord Stimperor posted: I thought the chip shortage was predominantly focused on chips with bigger nm structures than what's relevant for gaming, like low-power stuff that goes in home appliances and stuff. Please don't tell me we have to compete with Bosch for GPU silicon now.

Yeah, I don't think the chip shortage has affected GPUs much; both Nvidia and AMD have said in their investor reporting that they're making more boards than ever and demand is the bigger issue.

Zedsdeadbaby posted: The prices can only drop so far, though: after the crypto boom dies down in earnest, there is still the chip shortage.

Lord Stimperor posted: I thought the chip shortage was predominantly focused on chips with bigger nm structures than what's relevant for gaming, like low-power stuff that goes in home appliances and stuff. Please don't tell me we have to compete with Bosch for GPU silicon now.

Production of desktop dGPUs is not down currently.

edit: not more boards than ever, though; that happened during the 2017/2018 crypto boom. they haven't been able to ramp up production this time like they did last time.

Dr. Video Games 0031 fucked around with this message at 22:22 on Jul 4, 2021

Dr. Video Games 0031 posted:

yeah as i understand it the problem wasn't that they under-allocated but that they couldn't buy more fab capacity for love nor money, which they'd have done in response to demand if they possibly could.

CoolCab posted: i'm extremely happy with my 3070 and will be for the foreseeable. i mean poo poo all i fuckin play is apex

Downloading both Neverwinter Online and Black Desert Online from Steam right now. Both for $0.99, and I got all three Witcher games with the enhanced editions (graphics upgrades & DLC) for $13 after tax from GOG Galaxy. Should keep me busy for a while, and my 3070 has so many fans that even with four computers in the room under load it never gets above 45°C. It's great, well worth the price for a prebuilt!

The most demanding game I play is RDR2 but I just upgraded to a 1440p monitor and finally had to turn settings down with my old 1070. I think I'll be able to get several years out of my new card, and for what it cost I intend to.

Just ordered a 6700XT. Memory Express has the Gigabyte GAMING RX 6700 XT OC 12GB in stock for 999 CDN$ (~808 USD). I was going to hold out for a 3070 but I'm too impatient I guess. My monitor is both FreeSync and G-Sync compatible, and hopefully the 12GB VRAM will be worth it. GAMING is in all caps in the actual product name.

E: Coming from a 1070 and using a 1440p 144Hz monitor. I'm fine with games not reaching the full 144 fps so long as FreeSync works.

Squatch Ambassador fucked around with this message at 08:22 on Jul 5, 2021

I can't wait to finally be able to replace my 1080 Ti in 2024!

Hungry Computer posted: Just ordered a 6700XT. Memory Express has the Gigabyte

Dude, that's literally worse than scalper prices. If you still can, cancel the order and buy one from eBay for cheaper (or wait another week or two for it to go down even more).

edit: This probably comes off as harsher than intended. eBay prices (I checked Canadian eBay) are often lower but not by much, and it varies. I'm mostly just angry at retailers that charge scalper prices directly. The situation will improve way more slowly with assholes like them trying to profit off the availability shortage.

Dr. Video Games 0031 fucked around with this message at 13:16 on Jul 5, 2021

Kazinsal posted: I can't wait to finally be able to replace my 1080 in 2024!

There's a gap in the market for a large sticker with '3080 Super' and VRAM drawn on it that can be applied to any GPU shroud.

I read that as 'large sticker with 3080 Super and VRAM drawn on it [in sharpie pen].'

Dr. Video Games 0031 posted: Dude, that's literally worse than scalper prices. If you still can, cancel the order and buy one from eBay for cheaper (or wait another week or two for it to go down even more).

Yeah, I cancelled it. I shouldn't have been looking at graphics cards in the middle of the night while on strong pain meds for an ear infection. I can wait a few more months for prices to drop or better cards to be available.

Hungry Computer posted: Yeah, I cancelled it. I shouldn't have been looking at graphics cards in the middle of the night while on strong pain meds for an ear infection

that'd be a decent price for a 6800 XT, and to be fair that's probably the MSRP from the AIB. AMD doesn't seem to have any leverage over their AIBs, whether in contracts, allocations, or the ability to produce their own reference cards. They're all sitting at +$400 or worse.

knox_harrington posted: Well I decided to pull the trigger on the 3070 and ordered it. Then 10 minutes later I get an email saying the shop doesn't actually have it in stock and they don't know when it might be delivered. Fuckers.

**UPDATE** panic over. I called the shop and they will deliver it on Monday. I'll try to use it a while before I rip off the standard fans. Can't wait to get Minesweeper up and running.

knox_harrington posted: **UPDATE** panic over. I called the shop and they will deliver it on Monday.

weren't you looking at https://www.evga.com/products/product.aspx?pn=08G-P5-3755-KR ? I thought that was one of the better coolers.

Yes it's an XC3 - I wasn't being totally serious so if it's quiet enough I'll keep it like that. Having done a deshroud on the current card (MSI Gaming - which was supposed to be quiet) the noise difference with Noctua fans was huge, though.

I have that exact one in a 9 liter ITX case and it's golden. I hear my CPU fan over it. Goongrats on your wait being over!

oooh, an XC3 ultra by any chance? that one seems pretty popular among goons, myself included. the cooling on the XC3 lines is exactly the same as the 3080 XC3 so it's honestly kind of overkill, it's sweet.

CoolCab posted: oooh, an XC3 ultra by any chance?

That's the one.

Paul MaudDib posted: upgrading your monitor is usually the most immediate driver of a need for more GPU power, whether that's stepping up in resolution or stepping up to a newer model with a higher refresh rate. Within a fixed resolution/refresh target the decline is pretty slow and you'll know when your card is starting to struggle but if you step up your target then it's pretty natural to feel the urge to try and drive your new monitor to its limit.

I mean, realistically most newer AAA games couldn't hit the 1440p 144Hz target consistently anyway. I generally play on high/ultra but reduce the settings that really take away from my competitiveness.

depends on the title and the compromises you make on settings. there are what, four games that are both popular and run like poo poo (flight sim, cyberpunk, rdr2 and...i'm forgetting one i think)? you want the most frames possible for competitive shooters and the like but for a more cinematic experience like the witcher anything over 90 with VRR is absolutely fine, imo. people who get it into their heads that they need to hit their max possible framerate from their monitor on every title are very strange to me - like wasn't that the point of gsync/freesync? lol?

CoolCab posted: depends on the title and the compromises you make on settings. there are what, four games that are both popular and run like poo poo (flight sim, cyberpunk, rdr2 and...i'm forgetting one i think)? you want the most frames possible for competitive shooters and the like but for a more cinematic experience like the witcher anything over 90 with VRR is absolutely fine, imo.

I think a significant chunk of that is having FPS counters running all or most of the time. I know it definitely had that effect on me, and it was a hard habit to break. Once I turned off the FPS display and just paid attention to my subjective experience, I got way more relaxed about it. I couldn't tell you my average framerate in Cyberpunk 2077, but I could tell you which parts of the map I know my system struggles in. For me, not obsessing over FPS has improved my gaming experience.

if you're not using your monitor to its full capacities you're just throwing money away which is why you need a $2000 gpu

CoolCab posted: depends on the title and the compromises you make on settings. there are what, four games that are both popular and run like poo poo (flight sim, cyberpunk, rdr2 and...i'm forgetting one i think)? you want the most frames possible for competitive shooters and the like but for a more cinematic experience like the witcher anything over 90 with VRR is absolutely fine, imo.

I dunno man. People are paying thousands of dollars for their computers because they don't want to settle for what a console puts out. It's not that strange. In fact the statement is like saying "oh no one needs a car that fast." In any case I'm not realistically trying to max my monitor; most likely none of the cards would do it, and it would certainly be prohibitively expensive if a solution did exist. But like I said, most cards won't consistently max a 1440p 144Hz monitor in newer non-esports titles that are competitive. My 1080ti didn't and the 3080 doesn't either, at least in some titles.

VulgarandStupid posted: I dunno man. People are paying thousands of dollars for their computers because they don't want to settle for what a console puts out. It's not that strange. In fact the statement is like saying "oh no one needs a car that fast."

quote: In any case I'm not realistically trying to max my monitor; most likely none of the cards would do it, and it would certainly be prohibitively expensive if a solution did exist. But like I said, most cards won't consistently max a 1440p 144Hz monitor in newer non-esports titles that are competitive. My 1080ti didn't and the 3080 doesn't either, at least in some titles.

what's your CPU and what titles? other than RDR2 i can't really think of recent super demanding competitive titles. warzone?

Mr. Neutron posted: Seems so. Plenty of cards in stock now, only nobody wants them for those prices.

the 3080 was originally priced higher because it is one of the "optimal" mining cards. 3080 Ti has always been LHR so the price is lower because miners never got into them. In theory 3080 has mostly switched to LHR chips also at this point and over time prices should fall (at least for the LHR variants) but I guess it's just a case of "sticky prices": nobody wants to be the first to lower prices and take a loss.

(and I'd like to point out that despite all the bullshitting about "miners will just write their own firmware! they have people that do that, it's super easy and will be broken in like a week!" nobody has actually broken the LHR limiter yet, afaik. Of course they might not want to say if they did, since NVIDIA might respond again, but it's generally nowhere near as easy as miners claimed it would be.)

CoolCab posted: from my perspective sort of fools and their money being easily parted, but - even if you're building a showcase or conspicuous consumption piece with dual 3090s in it, there are titles, resolutions and settings that will choke it. that's the real advantage of PC in my opinion - it's infinitely more granular and you can tune it very carefully to hit price and performance targets. games are cheaper or often free because you're not paying a licencing fee to sony or whoever. build exactly enough computer to do some considered task, rather than buy as much computer as you can - because even if you do and spend a ton it's literally impossible to build a computer that will run everything at maxed settings because you're trying to hit a moving target.

I have an 8700k. I could upgrade but haven't seen much of a need for it yet. Ya, Warzone is certainly one of them. I'm not as competitive as I used to be, but I was a top 250 PUBG player for a while. I was diamond lobbying in Warzone before the whole hidden ranking was figured out but ended up quitting right around when Cold War came out. Never got into RDR2 that much, not sure if anyone considers it competitive. I did the first zone in story mode and then quit. But yeah, if you play single player games primarily this isn't as big of a concern. When I played through Cyberpunk I never checked my FPS. Also if you suck at multiplayer it won't matter that much either; you will still suck just as much. I'm historically in the group where I see tangible gains from faster hardware, and there are tons of people in it despite what these aging goons think.

VulgarandStupid posted: I have an 8700k. I could upgrade but haven't seen much of a need for it yet.

yeah tbf i think warzone is the additional game i was trying to remember earlier, lol (i prefer apex but different strokes). looks like average FPS on a 5950 of 130ish native? so that's the degree to which you're cpu bound (if at all); with dlss quality on you should be clearing 145 easily though.

see, i'm terrible at apex and i still saw ingame performance gains when i upgraded my monitor. going from 60->120+ is kind of total bullshit? like from a competitive fairness standpoint it's a non-trivial advantage imo - reaction time is additive, so even if you are older and a little slower you still see exactly the same advantage. i've converted a few of my friends, and one got his highest-ever kill game, using a weapon he'd been trash with before, in the first game he booted.
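A rough way to put numbers on the 60->120+ point is frame time: at a given frame rate, each new frame arrives 1000 / fps milliseconds after the last one. The Python sketch below assumes the simplification that the frame interval is the only display-side delay stacked on top of your own reaction time; real input-to-photon latency also includes input polling, the render queue, and panel response, which it ignores. `frame_time_ms` and `frame_time_saving_ms` are hypothetical helper names for illustration.

```python
def frame_time_ms(fps: float) -> float:
    """Interval between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def frame_time_saving_ms(old_fps: float, new_fps: float) -> float:
    """How much shorter each frame interval gets when the frame rate rises.

    Simplification (assumption): treats one frame interval as the only
    display-side delay added on top of the player's reaction time.
    """
    return frame_time_ms(old_fps) - frame_time_ms(new_fps)


if __name__ == "__main__":
    for fps in (60, 90, 120, 144):
        print(f"{fps:>3} fps -> {frame_time_ms(fps):5.2f} ms per frame")
    print(f"60 -> 120 fps: about {frame_time_saving_ms(60, 120):.2f} ms shorter per frame")
```

Under that simplification, 60 fps is about 16.67 ms per frame and 120 fps about 8.33 ms, so the step up trims roughly 8 ms per frame regardless of how fast your own reactions are, which is the "additive" part.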

VulgarandStupid posted: I have an 8700k. I could upgrade but haven't seen much of a need for it yet.

there's absolutely no reason to upgrade an 8700K yet unless you want more cores. Even almost 4 years from launch there has barely been any performance advancement; there are really only 2 generations that are even any faster. Using the 10600K as a proxy for the 8700K (same uarch but higher factory clocks), the 11600K is around 10% faster and the 5600X is 16% faster, geomean across all reviewers.

games are certainly starting to exploit more cores, but you can look at RTSS and see your overall CPU utilization during gameplay and see how you're doing. I'd expect it to be pretty loaded in a modern title at higher refresh rates and maybe you'd see slightly better frame pacing in high-refresh scenarios or when running very cpu-heavy titles, but it's still one of the faster gaming chips on the market. You're well-placed to ride it out until Zen 3+ or wait for the DDR5 platforms (even the second gen of ddr5 platforms) if you want to.

the thing is that of course if you haven't already, do overclock it (maybe delid it); the 8700K was the last of the chips where Intel was still playing coy with the default clocks. chips like the 10600K are pushed significantly faster out of the box but you can replicate that yourself pretty easily.

that said if you wanted, you could still do a 9900K or 9900KF and get higher clocks + more cores from a drop-in upgrade. You can easily sell your 8700K; prices are running $230 right now on eBay. If you're going to do it then decide fast, because the 9-series is in the process of being discontinued: the final customer orders were just placed and will be delivered no later than December, and retailers might already be cut off. Amazon proper doesn't have any 9900Ks any more, only third-party sellers, but they do have the 9900KF for $348. You could wait and gamble on whether they will restock it (probably) and on the price, but there's no guarantee. It's not the world's best price - the 9900K was $315 on Prime Day. So figure you get maybe $200 net for your processor, and the 9900KF is $348, making it a $150 upgrade. Not the world's best deal but it's the only one you can do as a drop-in upgrade.

the 5900X is the long-term upgrade; they're still harder to find than the 5600X/5800X but they're nowhere near as hard to find as GPUs. You'd have to buy a new mobo of course. You're probably looking at around $700 all up (if you can get a 5900X at MSRP) and you could sell your motherboard as well. Let's say you make $250 like that, so that becomes a $450 upgrade.

Note there's a Zen3+ coming later this year, or maybe early next year, with stacked SRAM which will further improve IPC. This is probably going to be the final AM4 generation. Obviously there's always something better coming but since you're in a good position you can afford to wait a little bit; this way you'd get both more cores (probably 12 cores will be the sweet spot again?) and also a decent bump in per-core performance (probably like 10-15% faster than Zen3, so maybe around 30% faster than the 10600K I'd guess). Waiting for DDR5 is an option too and I'm sure Zen4 will perform even better, but being an early adopter on a new RAM standard, new motherboard socket, etc. is a gamble too.

Paul MaudDib fucked around with this message at 23:47 on Jul 5, 2021
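The upgrade math in that post is just the price of the new parts minus what you recoup by reselling the old ones. A minimal Python sketch using the post's own July 2021 estimates ($348 for a 9900KF against roughly $200 net for a used 8700K; about $700 for a 5900X plus motherboard against roughly $250 back from selling the old CPU and board). The function name is hypothetical, and the assumption is that marketplace fees are already baked into the "net" resale figures.

```python
def net_upgrade_cost(new_parts_total: float, resale_net: float) -> float:
    """Out-of-pocket cost of an upgrade after reselling the parts it replaces.

    Assumption: resale_net is what you actually keep after fees/shipping.
    """
    return new_parts_total - resale_net


# All figures are the post's own July 2021 estimates, not current prices.
# Drop-in: buy a 9900KF ($348), sell the 8700K (~$200 net).
drop_in = net_upgrade_cost(new_parts_total=348, resale_net=200)

# Platform swap: 5900X + new motherboard (~$700 all up),
# sell the old CPU and motherboard (~$250 combined).
platform = net_upgrade_cost(new_parts_total=700, resale_net=250)

print(f"Drop-in 9900KF: ${drop_in:.0f} net")
print(f"5900X platform: ${platform:.0f} net")
```

That comes out to $148 for the drop-in, which the post rounds to $150, and $450 for the platform swap.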

Lord Stimperor posted: I thought the chip shortage was predominantly focused on chips with bigger nm structures than what's relevant for gaming, like low-power stuff that goes in home appliances and stuff. Please don't tell me we have to compete with Bosch for GPU silicon now.

njsykora posted: Yeah, I don't think the chip shortage has affected GPUs much; both Nvidia and AMD have said in their investor reporting that they're making more boards than ever and demand is the bigger issue.

the auto shortages are mostly in microcontrollers and ancillary chips (power mosfets, ignition electronics, etc.) as far as I know. Microcontrollers have a lot of physical outputs (PHYs) that don't shrink well, and mosfets and RF and so on don't shrink well either. It's not flashy but old nodes are still very profitable because of these kinds of products, and obviously without them you can't build cars. And it's also very difficult to just "substitute" something else: even if it is very similar, you have to port and re-test and re-validate the code and re-validate the hardware, and it's a giant mess (and probably a giant liability if there ends up being a problem - like Toyota and the throttle controller fiasco).

now, with that said, AMD and NVIDIA still have been affected by shortages of some of those ancillary chips, like GDDR6, power mosfets for the VRMs, and supposedly some kind of display controller chip (not sure about that one but that's what I read at one point). It is not crisis-level for them like it was for the auto companies, because they didn't tell their suppliers to cut their orders in half, so they've got reasonable amounts for the amount of product they were planning to produce. But hypothetically if AMD and NVIDIA really wanted to ramp production by (eg) bringing Pascal/Turing back on TSMC 16/12nm and Polaris and Vega back on GF 12nm, they might have problems doing that because the supply of their own ancillary components isn't infinite either; they would have to figure out a way to ramp GDDR6 production and so on.

they are also affected by shortages of input materials. There is a global shortage of the actual physical silicon wafers right now, as well as the Ajinomoto Build-Up Film (ABF) used to insulate the metal layers in chip package substrates. Apparently for some reason this is generally sourced by the clients rather than provided by TSMC (seems weird to me but OK). Again, AMD and NVIDIA have done a good job of securing their own supply, but there is a global shortage; it's not like if they wanted to ramp production on older nodes with some availability that they could just wave a wand and have tons of chips either.

So in short - no, they haven't really been directly affected by shortages, but the shortages are there, and would be a problem if they wanted to significantly ramp production. Not insurmountable as long as you're willing to throw money at it (and there is far more margin in something like a GPU chip than a random microcontroller to just "throw money at it"), but they can't just ramp infinitely; the supply chain for their parts is sized to the expected production and you'd have to work on expanding that supply chain too.

With that said, I think they also resisted the idea of doing a mega ramp-up on production because of what happened last time. Both AMD and NVIDIA got stuck with a giant stockpile of chips, and actually had to buy back chips from partners in some cases (again, if you'll recall, the partners were crying that NVIDIA expected them to still fulfill their contractually obligated purchases... after a year and a half of profiteering from mining. please allow me to play the tiniest violin.... but yeah they actually did buy a bunch back from at least Gigabyte iirc and maybe one other partner). They likely could do more, but for them as companies the best option is to proceed as if nothing is happening, because miners aren't "real" sales, they are just a pull-forward: if they ramp production a ton, those cards will eventually flood back into the gaming market and offset gaming sales that would have occurred, plus they will be stuck with a ton of inventory like before. They probably mildly ramped production but they clearly did not go gangbusters like they did in 2017/2018, and I think AMD mostly just chose to ignore the GPU market and keep doing CPU sales, since those are multiple times the revenue per wafer and everything was selling anyway.

It's also worth pointing out that this has still been a relatively short mining boom. It didn't really get started in earnest until January, and it started crashing mid-June, so it was only about 5 months. The lead time on silicon orders is around 6 months even if you have all your ducks in a row, but those ducks are essentially the things I'm talking about as being in shortage, so it would have been more difficult there too. All in all, had it gone on longer maybe they would have ramped production a little harder, but I think the 2018 crash was front and center in both AMD and NVIDIA's minds here.

apropos of nothing but I find the whole thing with the auto industry very aggravating. This whole saga is completely typical for them. They are used to wearing the pants in the relationship with their suppliers, they are frankly abusive in their partner relationships, they think they're doing you a favor by giving you the contract, and they expect aggressive pricing and favorable payment terms and for you to generally bend over backwards if they grace you with their patronage. But that's not how the silicon industry works (TSMC or GF is gonna sell their wafers anyway, they don't give a poo poo about any one customer or industry) and when they reduced their orders the foundries collectively shrugged and said "ok then" and sold it to someone else. And now the auto industry realizes they've goofed and wants it back and more besides, and it's a national crisis, if we don't fix it then millions of people will be out of work! it's another too-big-to-fail, they gambled and lost and now they want someone else to fix their mistake, because automakers are too important to let fail, and the aggravating part is that it turns out they're right and the USG is going to go to bat to fix it for them. Again.

Paul MaudDib fucked around with this message at 01:41 on Jul 6, 2021

Paul MaudDib posted: there's absolutely no reason to upgrade an 8700K yet unless you want more cores. Even almost 4 years from launch there has barely been any performance advancement, there are really only 2 hexacore chips that are even any faster.

Yea I upgraded my brother to a 9900k, for $250. My 8700K is delidded but I just run it stock; I have a mini-ITX build but I can support some OC. That said I will probably only do it if BF6 sees a bigger gain than most other games. As far as Apex goes, I did fairly well in pre-season 1. Most of my characters were 2-2.5 KDR. I didn't know at the time, but when season 1 came out, they actually gave you your stats. My Wraith KDR was 3, so she was definitely OP. I don't do particularly well in Apex though; I'm not a great precision shooter/head popper. Where I usually do well is movement and recoil control, but you have to have all three to excel in Apex. I'm glad we have all decided that the 3080 was a logical upgrade for me. I just got my second one in today and will need to see which works in my build better. Again, the 3080 is on the perfectly reasonable part of the value curve. The 3080ti and 3090 are not.

https://twitter.com/VideoCardz/status/1411973215484788736?s=20

https://twitter.com/VideoCardz/status/1411959407584419840?s=20 can't find it? Let it go

CoolCab posted: yeah tbf i think warzone is the additional game i was trying to remember earlier, lol (i prefer apex but different strokes). looks like average FPS on a 5950 of 130ish native? so that's the degree to which you're cpu bound (if at all); with dlss quality on you should be clearing 145 easily though.

Paul MaudDib posted: apropos of nothing but I find the whole thing with the auto industry very aggravating. This whole saga is completely typical for them. They are used to wearing the pants in the relationship with their suppliers, they are frankly abusive in their partner relationships, they think they're doing you a favor by giving you the contract, and they expect aggressive pricing and favorable payment terms and for you to generally bend over backwards if they grace you with their commerce. But that's not how the silicon industry works (TSMC or GF is gonna sell their wafers anyway, they don't give a poo poo about any one customer) and when they reduced their orders the foundries collectively shrugged and said "ok then" and sold it to someone else. And now the auto industry realizes they've goofed and wants it back and more besides, and it's a national crisis, if we don't fix it then millions of people will be out of work! it's another too-big-to-fail, they gambled and lost and now they want someone else to fix their mistake, because automakers are too important to let fail, and the aggravating part is that it turns out they're right and the USG is going to go to bat to fix it for them. Again.

Alan Smithee posted: https://twitter.com/VideoCardz/status/1411959407584419840?s=20

I actually really like this; I'm a fan of the super barebones OEM style even if those coolers usually suck.
