e: nvm

| # ? May 30, 2024 18:42 |

Harik posted: It's an incredibly dumb idea. Notably, Epyc does not have a few MB of flash for a BIOS, so that's still on board in SPI NAND chips or whatever. It's utterly trivial to allocate 1k or whatever of ROM on flash chips when you're talking Dell/HP/Lenovo volume, so that's where the key should have lived.

True secure boot means verifying that every single instruction executed during boot comes from a trusted source. In practice, this means the processor (or in this case a security coprocessor, it's really an Arm Cortex-A5 apparently) has to validate the firmware's cryptographic signature before allowing any of it to be used, whether it's data or code. That means the processor needs its own local secure key storage, and a way to make that storage immutable (fuse bits). You're proposing storing the key in the same R/W memory as the firmware. I do not think you know as much about secure systems design as you think you do.
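A toy Python model of the fuse-bit idea, purely for illustration and not AMD's actual PSP/PSB code. It assumes the SoC's one-time-programmable fuses hold a SHA-256 hash of the OEM's public key, while the key itself ships in ordinary R/W flash next to the firmware. `OtpFuseBank`, `oem_key_hash` and `key_is_trusted` are hypothetical names. Someone who rewrites the flash can swap in their own key, but they can't make it hash to the value already burned into the fuses.

```python
import hashlib


class OtpFuseBank:
    """Toy model of one-time-programmable fuses: each named slot can be written once."""

    def __init__(self):
        self._slots = {}  # slot name -> burned bytes

    def burn(self, name, value):
        # Blown fuses can't be un-blown, so refuse any second write to the same slot.
        if name in self._slots:
            raise PermissionError(f"fuse slot {name!r} is already programmed")
        self._slots[name] = bytes(value)

    def read(self, name):
        return self._slots[name]


def key_is_trusted(fuses, public_key_from_flash):
    """Trust the key found in R/W flash only if its SHA-256 matches the fused hash."""
    return hashlib.sha256(public_key_from_flash).digest() == fuses.read("oem_key_hash")


if __name__ == "__main__":
    oem_key = b"placeholder OEM public key bytes"  # not a real key
    fuses = OtpFuseBank()
    fuses.burn("oem_key_hash", hashlib.sha256(oem_key).digest())  # done once, at provisioning
    print(key_is_trusted(fuses, oem_key))            # genuine key read from flash
    print(key_is_trusted(fuses, b"attacker's key"))  # flash rewritten with a different key
```

A real boot ROM would then use the accepted key to check the firmware's signature before executing any of it; that step is left out of this sketch.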

BobHoward posted: True secure boot means verifying that every single instruction executed during boot comes from a trusted source. In practice, this means the processor (or in this case a security coprocessor, it's really an Arm Cortex-A5 apparently) has to validate the firmware's cryptographic signature before allowing any of it to be used, whether it's data or code. That means the processor needs its own local secure key storage, and a way to make that storage immutable (fuse bits).

ROM isn't R/W. "But you could replace the ROM chip!" ... you can replace the processor a whole lot easier.

E: In case you didn't understand: when you're ordering millions of flash chips, you can request they customize them with a section of hard-burned ROM or OTP that's addressed via a separate chip select. Absolute worst case, for smaller vendors, is placing a separate OTP ROM chip on the board. No matter how you slice it, it's much MUCH harder to replace surface-mount components than socketed ones. A quick glance around suppliers shows a few of them already sell flash chips with OTP regions, because it's so obviously useful.

Harik fucked around with this message at 23:46 on Feb 8, 2022

You already anticipated the response: you can, in fact, replace an external flash chip. Or hack its pseudo-OTP region. You seem to be starting from the assumption that nobody cares about making it extremely difficult (hopefully impossible) to boot untrusted software, and concluding that a secure boot system which puts the root of trust in the SoC itself must be motivated by other goals. What I am telling you is that this just isn't true. There are people who really care about minimizing the chance an attacker can compromise the root of trust, and in order to do that the root of trust pretty much has to live inside the SoC itself. And yes, an attacker could replace the whole SoC. There are ways to detect that, e.g., store a private key in each SoC's secure key storage, and when a new node comes up on your network, ask it to sign something to prove it has your organization's private key.
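The "ask the new node to sign something" check is a challenge-response protocol. A minimal stdlib-only Python sketch, with one loud assumption: it uses a shared-secret HMAC as a stand-in for the per-SoC asymmetric signing key described above, so here the verifier has to hold the secret too. With real signatures the verifier would only need the public key. The function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def make_challenge():
    """Verifier side: a fresh random nonce per check, so old responses can't be replayed."""
    return secrets.token_bytes(32)


def respond(device_secret, challenge):
    """Node side: MAC the challenge with the secret held in the SoC's secure storage."""
    return hmac.new(device_secret, challenge, hashlib.sha256).digest()


def verify(expected_secret, challenge, response):
    """Verifier side: recompute the MAC and compare in constant time."""
    expected = hmac.new(expected_secret, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)


if __name__ == "__main__":
    org_secret = secrets.token_bytes(32)  # provisioned into each legit node (assumption)
    challenge = make_challenge()
    print(verify(org_secret, challenge, respond(org_secret, challenge)))               # legit node
    print(verify(org_secret, challenge, respond(secrets.token_bytes(32), challenge)))  # swapped SoC
```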

Harik posted: ROM isn't R/W.

You don't have to replace the chip; you just have to intercept the SPI lines, which is far, far easier and is probably just an X-Acto knife and plugging into the right header, depending on the motherboard. Epyc processors used in cloud servers are firmly on the "Mossad" side of the "Mossad/not Mossad" security stance. Usually the root of trust is on the SoC itself, which gets into territory where it's annoying even for a state intelligence service (remember the FBI/Apple thing).

hobbesmaster fucked around with this message at 22:15 on Feb 9, 2022

hobbesmaster posted: hea

This was demonstrated last year: "Trusted platform module security defeated in 30 minutes, no soldering required"

some good poo poo coming down the pipelines https://www.youtube.com/watch?v=bCgkzLayauA

Truga posted: some good poo poo coming down the pipelines

oh man that's rad. thanks for posting this

HUB's review of a 6000 series part is up. https://www.youtube.com/watch?v=pNSnSkNGI3g Basically, if you want a higher-power laptop with a dedicated GPU, Alder Lake is the go-to. But if you want a lower-power thin-and-light design with an IGP, Rembrandt seems good. I imagine the 6800U is gonna be real interesting.

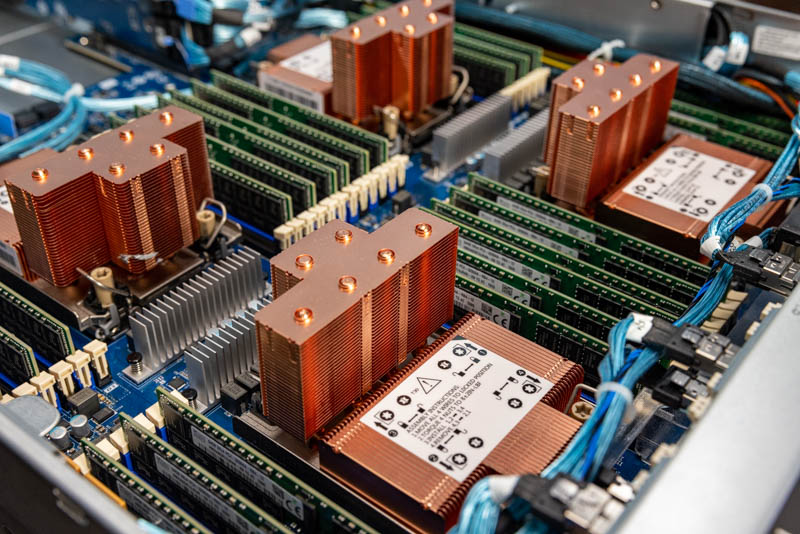

Is there 4P Epyc? 4x128?

EPYC boards have topped out at 2P so far. I don’t think we’ve heard anything about adding support for more.

Supply chain disruptions have kept the ASRock engineers from getting enough cocaine all at once to fit the 4 sockets onto a single board.

This is just out of my rear end, but I don’t think Infinity Fabric can scale that way. Since there’s no dedicated inter-CPU bus à la QPI, you’d run out of CPU-to-CPU bandwidth and have bottlenecks before it became effective, unless you were running something really optimized for x86 CPU only.

where would you even put the DIMMs for a 4-socket board, given how much goes into modern, high-density servers?

How many memory channels does Epyc have? OK, what about server SO-DIMMs to save space?

mdxi posted: where would you even put the DIMMs for a 4-socket board, given how much goes into modern, high-density servers?

Crunchy Black posted: This is just out of my rear end, but I don’t think Infinity Fabric can scale that way. Since there’s no dedicated inter-CPU bus à la QPI, you’d run out of CPU-to-CPU bandwidth and have bottlenecks before it became effective, unless you were running something really optimized for x86 CPU only.

The terminology is fairly muddled because Infinity Fabric is actually a name for several different interconnects that do similar things, but there is an on-chip link that is used between the IO die and the chiplets (and presumably between IO die nodes on Rome/Milan), and also an external one that is basically PCIe-based that is used between the CPU sockets or between the CPU and devices in the PCIe slots. So far they have been content to stay at a max of 8 nodes (4 per socket, regardless of physical arrangement on chiplets or IO die), and presumably you could scale it bigger (potentially involving more links on the IO die, or a different topology for the IO die interconnects).

I'm sure that if there were customers out there that needed more cores, AMD would happily oblige them, but as it stands, between per-core licensing keeping things in check, and AMD only "needing" two sockets to match or exceed the core density of 4P Intel systems, there clearly isn't that demand.

SwissArmyDruid posted: I'm sure that if there were customers out there that needed more cores, AMD would happily oblige them, but as it stands, between per-core licensing keeping things in check, and AMD only "needing" two sockets to match or exceed the core density of 4P Intel systems, there clearly isn't that demand.

And next generation they’re doubling their core count again anyway; 256 cores per system is still A Lot.

Yeah, this kind of core density is patently stupid, ridiculous, absurd, and massively liberating all at once. I think AMD is more interested in pushing cache instead. I am still entirely behind Ian Cutress's speculation that AMD's reveal of the 5800X3D is AMD putting out feelers for downbinning parts they intend to use in future EPYC chips. Increased cache, and, more importantly, routing interconnects through the stacked cache, resulting in 4D topologies to reduce intra-chiplet latencies, will yield FAR MORE benefits in the datacenter than "lol FPS go higher" on the desktop.

SwissArmyDruid fucked around with this message at 08:40 on Feb 26, 2022

Crunchy Black posted: This is just out of my rear end, but I don’t think Infinity Fabric can scale that way. Since there’s no dedicated inter-CPU bus à la QPI, you’d run out of CPU-to-CPU bandwidth and have bottlenecks before it became effective, unless you were running something really optimized for x86 CPU only.

https://www.nextplatform.com/2017/07/12/heart-amds-epyc-comeback-infinity-fabric/

Yeah, you could scale that to 4 sockets by doing a hypercube, but then some sockets are an extra hop away and every link is half the speed. You really would probably want to double the number of inter-chip IF links at a minimum, and you'd still have the latency.

Note that this is also true of Rome/Milan: the IO die is still four nodes internally. AMD has done a really good job making it feel like a uniform memory architecture (NPS1 is the default), but it's still actually NUMA underneath. AMD did really well, though: on Rome, running faux-UMA NPS1 mode is only about a 12% memory latency hit vs. the NPS4 NUMA mode. On Milan it’s 6%. If you can localize load, it's still maybe worth it, but faux-UMA pretty much just works, to the extent that most people don't even realize it's not UMA.

One place where the NUMA thing can crop up unexpectedly is memory configuration. Because it's actually four quadrants in a trenchcoat, you want to make sure that every quadrant has at least 1 populated memory channel, and ideally all channels should have either 1 or 2 sticks. "Unbalanced" configurations can result in significant performance loss: Lenovo put out a bulletin showing that populating 7 out of 8 DIMM channels can cost you 60% of your memory performance, and the same goes for 14- or 15-DIMM configurations. Most operators will be loading sets of 8 (or at least 4) anyway, so it's not a big deal in practice, but it's something for homelab types to be aware of: don't add or remove a single stick on Epyc.

https://lenovopress.com/lp1268.pdf

At the high end, it also matters for bandwidth-sensitive applications (e.g. Netflix's CDN ran into problems with quadrant locality bottlenecking them before they could fully saturate their storage) or latency-sensitive applications (for HFT and other FPGA-based stuff, it's another bit of latency to cut out).

Paul MaudDib fucked around with this message at 09:41 on Feb 26, 2022
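A rough Python sketch of the balance rule described above, for a single socket. Assumptions, none of them taken from the Lenovo document: 8 channels per socket, 2 per quadrant, with channels 0-1 in quadrant 0, 2-3 in quadrant 1, and so on (real boards label and map channels their own way), and "balanced" simplified to "every quadrant uses the same number of channels, and every populated channel holds the same number of DIMMs". `population_warnings` is a hypothetical helper; check the vendor's population guide before trusting it.

```python
from typing import List

QUADRANTS = 4
CHANNELS_PER_QUADRANT = 2  # assumption: 8 channels per socket, 2 per quadrant


def population_warnings(dimms_per_channel: List[int]) -> List[str]:
    """Simplified balance heuristic for one socket; an empty list means 'looks balanced'."""
    if len(dimms_per_channel) != QUADRANTS * CHANNELS_PER_QUADRANT:
        raise ValueError("expected one entry per memory channel")
    warnings = []
    # Assumed mapping: channels 0-1 -> quadrant 0, 2-3 -> quadrant 1, etc.
    quadrants = [
        dimms_per_channel[q * CHANNELS_PER_QUADRANT:(q + 1) * CHANNELS_PER_QUADRANT]
        for q in range(QUADRANTS)
    ]
    for q, channels in enumerate(quadrants):
        if sum(channels) == 0:
            warnings.append(f"quadrant {q} has no memory")
    # Every populated channel should carry the same number of sticks (e.g. all 1 or all 2).
    if len({n for n in dimms_per_channel if n > 0}) > 1:
        warnings.append("populated channels have different DIMM counts")
    # Every quadrant should use the same number of channels.
    if len({sum(1 for n in channels if n > 0) for channels in quadrants}) > 1:
        warnings.append("quadrants have different numbers of populated channels")
    return warnings


if __name__ == "__main__":
    print(population_warnings([1] * 8))        # one DIMM in every channel
    print(population_warnings([1] * 7 + [0]))  # 7 of 8 channels populated
```

With this heuristic, 4, 8 or 16 evenly spread DIMMs pass, while the 7-of-8 and 15-DIMM cases each raise a warning.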

I wish my company's render farm were all virtualized. It's dumb af for a job that crashes the node to also nuke the other jobs running on that node. We assign nodes a number based on how many cores/RAM they have. So if a node has a score of 10, it can run 5 jobs that require only 2 food, but lol is it dumb for any of it not to be virtualized at this point.
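A tiny Python sketch of the scoring scheme described above, to show the blast-radius problem. The post doesn't say how scores are computed or how jobs get placed, so the first-fit placement and all the names here are assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    name: str
    score: int  # capacity score from cores/RAM (the formula is not given; assumed input)
    used: int = 0
    jobs: List[str] = field(default_factory=list)

    def try_place(self, job: str, cost: int) -> bool:
        # Accept the job only if it fits in the node's remaining score.
        if self.used + cost > self.score:
            return False
        self.jobs.append(job)
        self.used += cost
        return True


def place(nodes: List[Node], job: str, cost: int) -> Optional[Node]:
    """First-fit placement (an assumption): the first node with enough spare score wins."""
    for node in nodes:
        if node.try_place(job, cost):
            return node
    return None


def crash(node: Node) -> List[str]:
    """No isolation between jobs: one job taking the node down loses everything on it."""
    lost = list(node.jobs)
    node.jobs.clear()
    node.used = 0
    return lost


if __name__ == "__main__":
    farm = [Node("render01", score=10)]
    for i in range(5):
        place(farm, f"job{i}", cost=2)
    print(crash(farm[0]))  # all five jobs go down together
```

With VMs or containers per job, a crash would instead be contained to the one job's share of the node.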

Thank you for explaining my meaning with actual diagrams and words, Paul. (What I should've said is, "while it absolutely could do 2^n nodes, it can't effectively scale to 4+ nodes without a lot of forethought to software architecture and NUMA saturation.")

Crunchy Black fucked around with this message at 00:09 on Feb 27, 2022
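The 2^n point maps onto a hypercube: with 2^n sockets, each socket gets n links and the worst-case path is n hops. A short Python sketch (hypothetical helper names) that builds the graph and measures hop counts with breadth-first search. If a socket only has a fixed number of IF lanes to split across its links (an assumption for illustration), each link also gets 1/n of them, which is the "extra hop and half-speed links" problem at 4 sockets.

```python
from collections import deque
from typing import Dict, List

Graph = Dict[int, List[int]]


def hypercube(dim: int) -> Graph:
    """Adjacency list for a dim-dimensional hypercube: 2**dim nodes, links join nodes differing in one bit."""
    return {node: [node ^ (1 << bit) for bit in range(dim)] for node in range(2 ** dim)}


def hops_from(graph: Graph, start: int) -> Dict[int, int]:
    """Breadth-first search: minimum number of links from start to every reachable node."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph[node]:
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


def diameter(graph: Graph) -> int:
    """Worst-case hop count between any two nodes."""
    return max(max(hops_from(graph, n).values()) for n in graph)


if __name__ == "__main__":
    for dim in range(1, 4):
        g = hypercube(dim)
        print(f"{len(g)} sockets: {dim} link(s) per socket, worst case {diameter(g)} hop(s)")
```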

Crunchy Black posted: Thank you for explaining my meaning with actual diagrams and words, Paul.

I’d encourage you to think of Epyc as 4 nodes per socket. 2 sockets is 8 nodes (and this will also be true in Zen 4: they are going to two chiplets per quadrant). But yeah, software scaling is really the Achilles heel of parallel approaches. AMD's success has really hinged on making 4 quadrants feel like one and play nicely for almost all software cases.

Right, because it's already NUMA-ish, any additional overhead of multiple sockets is just a kick in the nuts, especially if you're doing a lot of thread switching/memory swaps. I'd love to see some of the tools HPE/Cray/AMD have come up with jointly to make these things scale to supercomputer/multi-rack sizes, because ultimately that's what you'd need to really throw horsepower at a given problem with lots of EPYC sockets.
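On Linux, keeping threads from bouncing between NUMA nodes usually starts with pinning them to one node's cores. A minimal Python sketch, assuming Linux and its sysfs layout (`/sys/devices/system/node/nodeN/cpulist`): it parses the cpulist format and pins the current process with `os.sched_setaffinity`. `parse_cpulist` and `pin_to_numa_node` are hypothetical helpers. This only pins CPUs; binding memory as well is what tools like `numactl --cpunodebind=N --membind=N` are for.

```python
import os
from typing import Set


def parse_cpulist(text: str) -> Set[int]:
    """Parse the Linux cpulist format, e.g. "0-3,8-11" -> {0, 1, 2, 3, 8, 9, 10, 11}."""
    cpus: Set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return cpus


def pin_to_numa_node(node: int) -> Set[int]:
    """Linux only: restrict the current process to the CPUs that belong to one NUMA node."""
    with open(f"/sys/devices/system/node/node{node}/cpulist") as f:
        cpus = parse_cpulist(f.read())
    os.sched_setaffinity(0, cpus)  # 0 means "the calling process"
    return cpus
```

On an Epyc box in NPS4 mode, each quadrant shows up as its own node, so `pin_to_numa_node(0)` would keep the process on the first quadrant's cores.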

Trolling Thunder posted: Can I get a sanity check?

oh my loving god sometimes i hate computers so loving much. Over two months, I slowly replaced every goddamned part, one at a time (cpu / ram / mobo / psu), and it wouldn't loving boot, only a single click. Even with a new psu!!! wtf???? I unplugged the 8-pin power supply connection from the mobo (left only the 24-pin power connected) and it booted! 6-month-old 5600X abruptly shuts down and won't boot. Solution is to remove the 8-pin CPU power cable that had been connected up to that moment, because?? ;alkdjfaihjf;oavl;kjnfk jasdff ika;ifja;kldfasjkadfs gently caress me.

Trolling Thunder posted: oh my loving god sometimes i hate computers so loving much

What the hell, that should work the other way around. A classic reason for a non-functional computer after a fresh build is that you forget to connect the 4/8-pin CPU power cable. My understanding has been that a computer can't work without that cable.

Saukkis posted: What the hell, that should work the other way around. A classic reason for a non-functional computer after a fresh build is that you forget to connect the 4/8-pin CPU power cable. My understanding has been that a computer can't work without that cable.

The 8-pin just provides extra power. It typically isn't necessary for mid-range parts, iirc. Only the 24-pin is required for all CPUs.

okay, gently caress, I was wrong. It's something to do with my 980ti. It has a 6+8 pin connection. If I have the 8-pin connected on the 980ti, the computer won't boot. Have I said I hated computers?

yep, video card is borked, lol. When the 8-pin is plugged into the card, I get one click from any psu/mobo/ram/cpu combo. Everything works fine with an old 670 put in.

The 8-pin for the motherboard and the 8-pin for the GPU are different connectors, and I think they're keyed so you can't plug in the wrong one? But you might want to make sure of that.

If it's a modular PSU, also make sure everything's right PSU side.

Also that you are using only cables that came with that PSU and not cables from a different PSU. Modular PSU cables have no standard; they're incompatible between brands and sometimes incompatible between different models of the same brand.

Crunchy Black posted: This is just out of my rear end, but I don’t think Infinity Fabric can scale that way. Since there’s no dedicated inter-CPU bus à la QPI, you’d run out of CPU-to-CPU bandwidth and have bottlenecks before it became effective, unless you were running something really optimized for x86 CPU only.

Infinity Fabric literally is AMD's equivalent of QPI???

Paul MaudDib posted: The terminology is fairly muddled because Infinity Fabric is actually a name for several different interconnects that do similar things, but there is an on-chip link that is used between the IO die and the chiplets (and presumably between IO die nodes on Rome/Milan), and also an external one that is basically PCIe-based that is used between the CPU sockets or between the CPU and devices in the PCIe slots.

No form of Infinity Fabric is based on PCIe technology. The idea that it is has been spread far and wide by tech writers whose limited understanding of what's going on has led them to unwarranted conclusions.

The conceptual background needed here is that PCIe, Infinity Fabric, and QPI are all protocol stacks. That is, you can analyze (and implement) them as several distinct layers of software and hardware. At the bottom of all of them is a physical layer (PHY) which transmits and receives packets ("flits" in QPI terminology) over physical wires. The PHY is also known as a SERDES (serializer/deserializer) because, as part of its functionality, it translates between a wide datapath at a relatively low clock rate and a serial datapath at a much higher clock rate.

What AMD did with IF was to define multiple options for the physical layer. One is a specialized SERDES only suitable for very short-range die-to-die interconnect on a substrate. The other is a more conventional long-range SERDES which can handle off-package connections through a backplane (possibly with connectors in the signal path). The tradeoff here is that by reducing range and requiring all-soldered connections, you can greatly reduce the energy per bit (possibly latency too). The protocol running through these different physical layers is identical, and is not in any sense PCIe.

The grain of truth in the "IF is based on PCIe" thing is that the chips which support the long-distance option do so with multimode SERDES which can be configured as either PCIe or Infinity Fabric lanes. This lets system integrators choose what kind of tradeoff between general-purpose I/O and socket-to-socket IF they want to make. However, it's a mistake to think that an AMD SERDES configured for Infinity Fabric is some form of PCIe. It probably isn't even fully electrically compatible with PCIe in that mode, and definitely isn't protocol compatible. They're using internal muxes/selectors to disconnect the PHY from one kind of controller and connect it to another.

This kind of thing is pretty old. Over a decade ago I worked on a chip with a broadly similar concept: system integrators could decide whether they wanted each of three SERDES lanes to be a PCIe 1.x root port or a SATA port. Just like with Infinity Fabric, you wouldn't say that one of those SERDES lanes in SATA mode was really a form of PCIe; in SATA mode the PHY was disconnected from the PCIe root complex and configured in ways which made it electrically incompatible with PCIe, both externally and internally.
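The serializer/deserializer idea at the bottom of these stacks, reduced to a toy in Python: wide words go in at a low rate, single bits come out at width times that rate, and the far end regroups them. Real SERDES also handle line coding, clock recovery and equalization, none of which is modeled here, and the function names are hypothetical.

```python
from typing import List


def serialize(words: List[int], width: int) -> List[int]:
    """Parallel-to-serial: emit each width-bit word as width single bits, MSB first."""
    bits = []
    for word in words:
        if not 0 <= word < (1 << width):
            raise ValueError(f"{word} does not fit in {width} bits")
        for i in reversed(range(width)):
            bits.append((word >> i) & 1)
    return bits


def deserialize(bits: List[int], width: int) -> List[int]:
    """Serial-to-parallel: regroup the bit stream into width-bit words."""
    if len(bits) % width:
        raise ValueError("bit stream is not a whole number of words")
    words = []
    for start in range(0, len(bits), width):
        word = 0
        for bit in bits[start:start + width]:
            word = (word << 1) | bit
        words.append(word)
    return words


def serial_rate_gbps(width: int, parallel_clock_mhz: float) -> float:
    """Line rate needed to keep up with the parallel side (ignores encoding overhead)."""
    return width * parallel_clock_mhz / 1000.0


if __name__ == "__main__":
    stream = serialize([0xCA, 0xFE], width=8)
    print(stream)
    print([hex(w) for w in deserialize(stream, width=8)])
    print(serial_rate_gbps(32, 500), "Gb/s")
```

For example, a 32-bit datapath clocked at 500 MHz needs a 16 Gb/s serial line to keep up, before any encoding overhead.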

Combat Pretzel posted: If it's a modular PSU, also make sure everything's right PSU side.

Oh, yeah, the modular cables might not be keyed differently on the PSU end.

BobHoward posted: Infinity Fabric literally is AMD's equivalent of QPI???

History time! Infinity Fabric is an evolution and extension of HyperTransport. HT itself began as a reimplementation (and then became an evolution) of the EV6 bus which DEC designed for the Alpha. AMD licensed it from HP after HP bought DEC. HT was part of what let the Opterons spank Intel's offerings of the time. Intel was stuck with off-die memory controllers and the old-style FSB until the EV6 patents expired. When that happened, the next generation of Intel CPUs switched to on-die memory controllers and this new thing called "QPI" (which looked and acted a lot like HyperTransport). The FSB was never spoken of again.

5950X is currently $599 at Best Buy: https://www.bestbuy.com/site/amd-ryzen-9-5950x-4th-gen-16-core-32-threads-unlocked-desktop-processor-without-cooler/6438941.p?skuId=6438941

Dang, that's tempting.

E: So tempting I didn't wait to get home and bought it on my phone. The 3950X never dropped below $675 or so, looks like.

v1ld fucked around with this message at 17:39 on Feb 28, 2022

When the 5800X3D presumably comes out, I assume it's also going to run a little cooler because they're downclocking it a bit?

kliras posted: When the 5800X3D presumably comes out, I assume it's also going to run a little cooler because they're downclocking it a bit?

Not by any appreciable margin, no. A few hundred MHz off the clock is nothing compared to the lower voltages that mobile-binned parts would run at, or, in other product segments, straight-up turning off sections of the design altogether. The increased cache, in theory, is also warmer, even when idle.

Sidesaddle Cavalry fucked around with this message at 19:44 on Feb 28, 2022
