hobbesmaster posted: Either way, if you're talking 144 (or 141 after "recommendations") then it really doesn't matter. If it does matter you'll have a coach...

Yeah, that's the takeaway. Most of the pros themselves have repeatedly said that they will take every frame they can get obvi, but beyond a reasonably low consumer-level equipment bar, those diminishing returns are more than offset by other factors. You aren't losing in a head-clicker twitch shooter because they have a 360hz screen and a 1oz lighter mouse, you are losing because they are 17 year old indoor kids. Also you are losing because you are playing that kinda game in the first place imo!!!

| # ? Jun 4, 2024 01:24 |

I dunno; in every sport or game I've ever played it's been the case that practise beats money, and the returns on money fall off way faster. I'll admit I don't watch CS:GO or Apex, but I'm still not convinced 5-10ms matters in games with imperfect information.

Shumagorath posted: Do you have any research supporting that 1ms makes a measurable difference? Last I looked into it, Olympians have simple reaction times (starting pistol, ruler test, etc) on the order of 0.15s. 1ms would not have a measurable impact on a far more complex action like IFF -> Track -> Fire.

It'd have to be a shitload of research to cover everything involved. It doesn't ultimately matter to demonstrate the point.

BTW, 150ms is indeed a solid reaction time but I would expect top-tier athletes to be significantly better. It's quite possible for most people to have an average around 150 with practice, though maybe not once you're past your 20s. Cracked out 14 year olds on adderall can push 120ms or lower.

Also, if you do want to go read research, keep in mind that a LOT of studies have no calibration for the delay inherent in the testing. They're usually more interested in the difference between two groups than they are in measuring the exact RT people have. The humanbenchmark dataset is a good example of what I'm talking about.

If you imagine your RT as something like a normal distribution sitting under that curve, there's no point on that chart where shifting your average 1ms to the left is not a noticeable benefit, especially with metrics that objectively rate your performance like ranking systems. It's going to put you ahead of your peers who previously had the same average RT as you (again simplifying, because there are other skill factors involved). Because there's variation in RT on both sides as well as other factors, it's not like reducing your reaction times by 1ms is going to make you just auto-win every heads-up instagib engagement against a previously equally performing player, but it will obviously increase your win rate.

If you play FPS games regularly, the people you consider your peers are going to have reaction times in the same general ballpark as you, somewhere in the area of like 130-200ms depending on how good you are. Pushing yourself ahead 1ms on every reaction will absolutely have a noticeable result. Most people wanting to get better at an FPS would benefit more from learning things like how to configure their mouse DPI (which can reduce your input latency by several milliseconds easily) and sensitivity, how to move and position, how to predict people, and how to pre-aim than from gaining 1ms on their monitor, but it absolutely matters.

Shumagorath posted: I'm arguing that 5-10ms of lag (input, network, whatever) is less impactful than many here are claiming it is outside of very simple games. Say your game is two players having a dot appear on screen and the fastest on-target click wins. In that scenario every millisecond matters. In a game where both players have free movement in 3-space, audio cues, materials, etc then quality of play is going to dominate minor latency gaps in everything but hypothetical quickdraws.

1. All that stuff gets abstracted away in the end. Any real-time game is a race to complete some task(s), and if you're 1ms ahead on the clock, that's literally a measurable advantage by definition.
2. It doesn't matter if you can perceive how good your equipment is, or your advantage. It matters if you win the race.
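To put a rough number on the "shift your average 1ms to the left" argument, here is a minimal sketch. It assumes both players' reaction times are independent normal distributions with the same spread and that the engagement is a pure race, which is exactly the simplification being argued about. The 20 ms standard deviation is a made-up illustrative value, not something taken from the humanbenchmark data, and `win_probability` is a hypothetical helper name.

```python
import math


def win_probability(advantage_ms: float, sigma_ms: float = 20.0) -> float:
    """Chance that player A reacts before player B.

    Assumes both reaction times are independent normals with the same
    standard deviation (sigma_ms, an illustrative guess) and that A's
    mean is lower than B's by advantage_ms.
    """
    # The difference of two independent normals is normal with
    # standard deviation sigma * sqrt(2).
    z = advantage_ms / (sigma_ms * math.sqrt(2.0))
    # Standard normal CDF written with the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


for adv in (0, 1, 5, 10):
    share = win_probability(adv)
    print(f"{adv:>2} ms faster on average -> wins the race {share:.1%} of the time")
```

Under those assumptions the edge from 1 ms is small but not zero, and it grows with the size of the gap. It says nothing about positioning, prediction, or any of the other skill factors both sides mention.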

I feel like there's a higher than usual amount of irrational thinking in the esports community.

Basically in a contest between 17 year old indoor kids, the player with the significantly faster PC will have a consistent and measurable advantage over time even if the difference is only 5-10 ms. But for everyone else, it won't make you play as competitively as a 17 year old indoor kid again. (The only thing that would is being very physically fit and dedicating hours every day to practice.)

TOOT BOOT posted: I feel like there's a higher than usual amount of irrational thinking in the esports community.

This is true. There are levels where they actually want to make sure that every possible advantage is there when championships are on the line. So, like for the dozen computers set up in identical configurations at a T1/major LAN. That's not very many sales, so just like with physical sports (yes yes the term is useful here) most sales are to people that think that if they have Michael Jordan's shoes they'll get better at basketball. The amount of reaction-time-based twitch gameplay varies from game to game but I would think that someone capable of being on a t1 team playing on a potato would have the game sense to be on a successful t3 team and being scouted by a t2. There was a support player in t1 Overwatch (OWL) that was playing on a very lovely laptop at a low resolution before being recruited into the org. OW support of course doesn't have corner clearing/peeking like VALORANT or CSGO but the game sense to know the likely positions of opponents to prefire or how to swing or whatever is still going to make them stand far apart.

high refresh rates look nicer even if my reflexes aren't good enough to keep up with them anymore

So what's the poop on the atx 3.0 thing? If I buy an EVGA 1300g2 on b-stock, am I gonna regret it not having atx 3.0? There will have to be cheater plugs for quite a while right?

TheScott2K posted: Got my 1660 for sale if anyone's looking for a starter GPU for their kid's fortnite box or something. You won't be able to reply though. I have PMs here and my twitter is @adequate_scott

Hey, so if your thread gets gassed in SA-Mart, don't come trying to sell poo poo here. If you're going to sell something on SA, you need a thread in SA-Mart. If you can't follow SA-Mart rules, you can't sell something on SA.

Internet Explorer posted: Hey, so if your thread gets gassed in SA-Mart, don't come trying to sell poo poo here. If you're going to sell something on SA, you need a thread in SA-Mart. If you can't follow SA-Mart rules, you can't sell something on SA.

I have to admit it's funny seeing 2 Toads going at it.

buglord posted: Is there any competitive advantage to running CS:GO or Valorant or whatever at 240+ fps? Maybe this is more Games forum related but Hardware Unboxed and Gamers Nexus talk about playing games at 300FPS and I really have to wonder if this stuff makes or breaks noscope headshot performance on a noticeable level or if any competitive advantage it brings is lost when playing online with latency.

Here we walk into the land of the massive subjectivity of "motion smoothness" and its relationship to clarity. Theoretically a high fps monitor with good technology reduces ghosting the same way backlight strobing was thought to be the cure a few generations back. The way I heard Apex player sweetdreams put it was that moving from 60hz to 120hz was a revelation and an improvement he thinks every player should make, while 120 to 240 was marginal but worth keeping around. At the end of the day while things feel better, sometimes they feel better because the panel itself is just way loving more responsive because the market has advanced or you're paying for more response. Higher FPS panels tend to need to have better response time but you can still make trash. These gains also depend entirely on the individual playing the game.

Shumagorath posted: Yes, but I'm saying that 10ms ping plus 15ms input and display lag is still faster than all but the best professionals can even perceive.

This argument also assumes that the 240hz panel and the 300hz panel are functionally similar in all other aspects so 1ms of input is all you are gaining. Practically this is not the case as newer and more expensive monitors provide many other benefits to reproducing the image that is your fundamental feedback in a game.

Shumagorath posted: I dunno; in every sport or game I've ever played it's been the case that practise beats money, and the returns on money fall off way faster. I'll admit I don't watch CS:GO or Apex, but I'm still not convinced 5-10ms matters in games with imperfect information.

Professional athletes wear lighter shoes, slippier swimsuits, or whatever other advantages are handed to them regardless of whether or not they're still the better athlete without that equipment on. This process follows the same logic. If you're paid to perform and you can pay for performance you do it regardless of how much sense it makes.

CatelynIsAZombie fucked around with this message at 07:17 on Oct 5, 2022
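For reference, the "60 to 120 was a revelation, 120 to 240 was marginal" impression lines up with plain frame-time arithmetic. This is only a sketch of the time between refreshes at each rate; it ignores panel response time, input polling, and everything else in the latency chain, and `frame_time_ms` is a hypothetical helper name.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Time between refreshes in milliseconds at a given refresh rate."""
    return 1000.0 / refresh_hz


# Upgrade steps discussed in the thread (old rate, new rate), in Hz.
steps = [(60, 120), (120, 240), (240, 300)]

for old_hz, new_hz in steps:
    saved = frame_time_ms(old_hz) - frame_time_ms(new_hz)
    print(
        f"{old_hz:>3} Hz -> {new_hz:>3} Hz: "
        f"{frame_time_ms(old_hz):.2f} ms -> {frame_time_ms(new_hz):.2f} ms "
        f"(saves {saved:.2f} ms per frame)"
    )
```

Each doubling saves half as much as the one before it, and 240 to 300 comes out at under a millisecond per frame, which is roughly the "1ms" figure being argued over.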

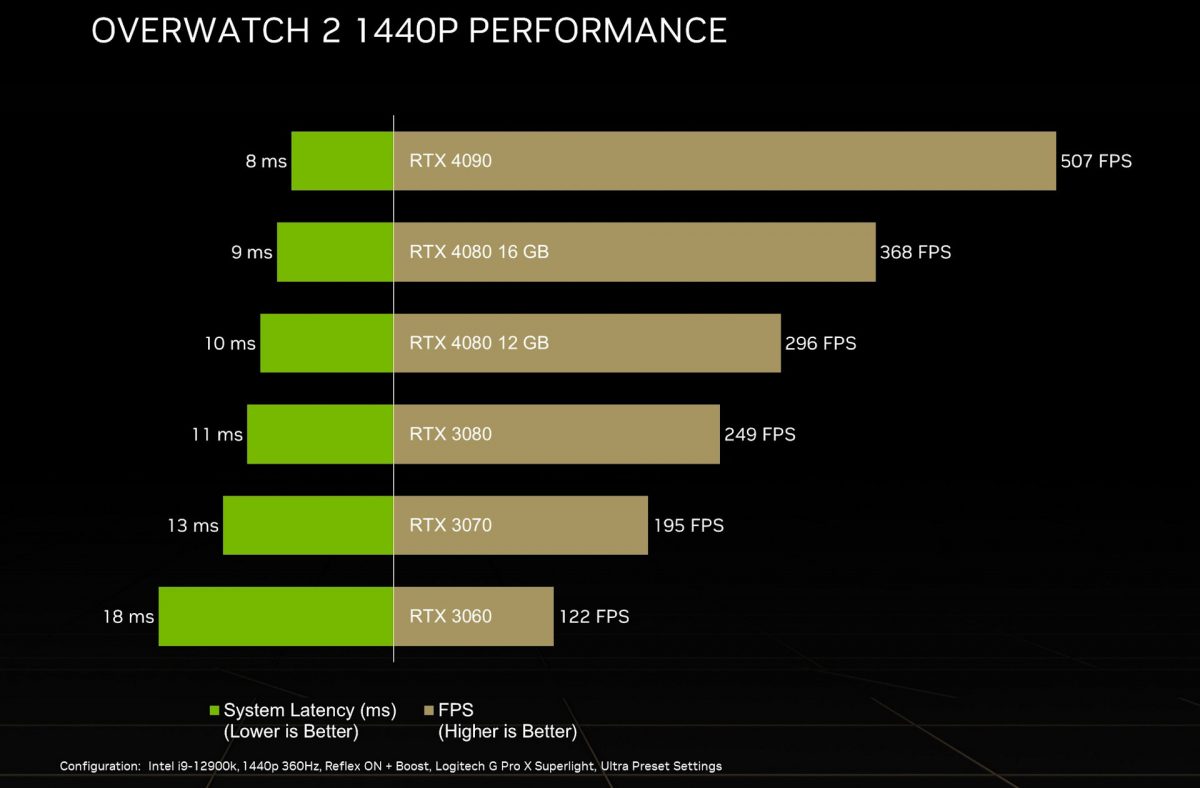

https://videocardz.com/newz/nvidia-geforce-rtx-4090-delivers-500-fps-in-overwatch-2-at-1440p-ultra

This is an oddly straightforward, realistic-looking chart for Nvidia. There don't seem to be any DLSS tricks here (OW2 doesn't support it, right?), and this data is mostly in line with what we were expecting after seeing the limited no-DLSS/RT data from the announcement. Given the typical performance differentials between Ampere cards, the 3090 would probably be around 275 - 280 FPS in this chart and the 3090 Ti would be around 290 - 300 FPS.

ow2 has fsr1 as an option but that's it as far as upscaling

I still can't believe they named two cards with a huge performance gap the same (4080)

Zedsdeadbaby posted: I still can't believe they named two cards with a huge performance gap the same (4080)

habeeb it

Since nobody seemed to know about the compute situation on Arc GPUs, they're pushing an open source alternative to CUDA:

quote: "Today in the accelerated computing and GPU world, you can use CUDA and then you can only run on an Nvidia GPU, or you can go use AMD's CUDA equivalent running on an AMD GPU," Lavender told VentureBeat. "You can't use CUDA to program an Intel GPU, so what do you use?" That's where Intel is contributing heavily to the open-source SYCL specification (SYCL is pronounced like "sickle") that aims to do for GPU and accelerated computing what Java did decades ago for application development. Intel's investment in SYCL is not entirely selfless and isn't just about supporting an open-source effort; it's also about helping to steer more development toward its recently released consumer and data center GPUs. SYCL is an approach for data parallel programming in the C++ language and, according to Lavender, it looks a lot like CUDA.

Dr. Video Games 0031 posted: https://videocardz.com/newz/nvidia-geforce-rtx-4090-delivers-500-fps-in-overwatch-2-at-1440p-ultra

Just lol at the 4080 12/16gb.

E: And that 3080->4080 12gb is only an 18% increase for $900

mobby_6kl fucked around with this message at 10:03 on Oct 5, 2022
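A quick sketch of what "18% for $900" means in performance per dollar. The 18% uplift is the figure from the post above; the prices are assumed launch MSRPs ($699 for the 3080, $899 for the 4080 12GB) that are not stated in the thread, and `perf_per_dollar_change` is a hypothetical helper name.

```python
def perf_per_dollar_change(perf_gain: float, old_price: float, new_price: float) -> float:
    """Relative change in performance per dollar (0.10 means 10% better)."""
    return (1.0 + perf_gain) / (new_price / old_price) - 1.0


# Assumed launch MSRPs, not taken from the thread: $699 (3080), $899 (4080 12GB).
# The 0.18 uplift is the Overwatch 2 figure quoted in the post.
change = perf_per_dollar_change(0.18, 699.0, 899.0)
print(f"performance per dollar changes by {change:+.1%}")
```

With those inputs the newer card comes out behind on performance per dollar in this one title; street prices and other games would move the number.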

Zedsdeadbaby posted: I still can't believe they named two cards with a huge performance gap the same (4080)

the really galling part is that their excuse for this is "well we already did it with the 3080"

Man that really is a 4070 isn't it, especially looking at the gap between the 3080 and 3070. I don't think I'll see any valid reason to upgrade my 3080 10G this generation.

ijyt posted: Man that really is a 4070 isn't it, especially looking at the gap between the 3080 and 3070. I don't think I'll see any valid reason to upgrade my 3080 10G this generation.

I've got a 3060ti currently sitting in my i6600k system which will be transferred across to whatever the optimal new build is once the dust settles with the releases this year, and even with VRAM limits being an issue I'm not sure I can justify upgrading to a high end 40 series card - I can just wait for the 50 series and the price/performance increase will likely be enormously better.

Alchenar posted: I've got a 3060ti currently sitting in my i6600k system which will be transferred across to whatever the optimal new build is once the dust settles with the releases this year, and even with VRAM limits being an issue I'm not sure I can justify upgrading to a high end 40 series card - I can just wait for the 50 series and the price/performance increase will likely be enormously better.

I'm still on the vanilla 1070, didn't manage to get a 30 series for a reasonable price. But this year I'm definitely getting a new PC, Ivy Bridge is getting really long in the tooth and both AMD and Intel seem to have good updates. I was hoping to combine that with a new GPU but Ada isn't going to be it for now either.

mobby_6kl posted: I'm still on the vanilla 1070, didn't manage to get a 30 series for a reasonable price. But this year I'm definitely getting a new PC, Ivy Bridge is getting really long in the tooth and both AMD and Intel seem to have good updates. I was hoping to combine that with a new GPU but Ada isn't going to be it for now either.

https://www.youtube.com/watch?v=nEvdrbxTtVo
https://www.youtube.com/watch?v=XTomqXuYK4s

apparently there's an unboxing embargo for the 4090 too?

https://www.youtube.com/watch?v=Y1l_G1pPwhY

whoof, performance is still all over the place

skimming the TPU review, it's often ahead of the 3060 in DX12 games but behind the 2060 in DX11 games

repiv posted: whoof, performance is still all over the place

This is like when a friend of mine who plays a ton of CS upgraded from a 2500K to 1700X and his CS frame rates went down while everything else went up. I'm going to dig into reviews, but if it's not a very compelling upgrade for people on a 1060, Intel has no market.

Twerk from Home posted: This is like when a friend of mine who plays a ton of CS upgraded from a 2500K to 1700X and his CS frame rates went down while everything else went up.

speaking of which, not a great first showing for the DX9-on-DX12 layer intel is using

i thought performance was going to be so-so, but man, the tpu average is just rough

repiv posted: speaking of which, not a great first showing for the DX9-on-DX12 layer intel is using

Oh no, that's awful. Why didn't they test a 7970 or GTX 780 Ti or something to get a good comparison?

Seems like it really needs some driver support or something but I don't think it's a terrible first look.

does anyone work with hosted video encoding to give any idea of what the cost-benefit analysis is for a company like twitch to move from h264 to av1? at what point in this transition do companies start saving money from the transition

Honestly looks pretty good if you don't give a poo poo about a 20 year old game and 1080p

E: at least in the HU video, in GN it seems worse?

mobby_6kl fucked around with this message at 14:55 on Oct 5, 2022

repiv posted: speaking of which, not a great first showing for the DX9-on-DX12 layer intel is using

Those 1% frametimes being equivalent between the A770, A750, and A380 sure look bad. Is that something that might indicate an incompatibility between the game and hardware architecture, or is that still blameable on the drivers?

Chainclaw posted: don't most modern games decouple render framerate from input polling framerate? If you're rendering, say, 300 FPS chances are the game isn't polling input at 300 FPS.

funny that you mention quake 2 here, because all quake engines have historically been insanely good on input. i played multiplayer doom2, q1 and q2 at sub30 fps for years because i was poor and had zero input issues, moving a mouse a set distance always also moved the viewport the same amount. it's modern game engines that are really bad about this poo poo

unpronounceable posted: Those 1% frametimes being equivalent between the A770, A750, and A380 sure look bad. Is that something that might indicate an incompatibility between the game and hardware architecture, or is that still blameable on the drivers?

the thing that immediately comes to mind when translating older APIs to DX12 or Vulkan, as intel is doing with DX9, is shader compilation stutter. intel are hitting the same pain points that proton does, without the elaborate mitigations that valve has come up with.

it would be useful to know if computerbase measured their first run (meaning the bad frametimes are potentially a one-off) or did a dry run first which primed the shader cache before they measured (meaning the frametimes are always that bad)

repiv fucked around with this message at 14:59 on Oct 5, 2022
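A small sketch of why the first-run versus primed-cache question matters for the 1% numbers. It uses one common definition of the "1% low" (average FPS over the slowest 1% of frames); reviewers differ on the exact definition, and the frame times below are invented for illustration, not taken from the computerbase results. The function names are hypothetical.

```python
def average_fps(frame_times_ms):
    """Average FPS over a whole run of per-frame times in milliseconds."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms):
    """Average FPS over the slowest 1% of frames (one common definition)."""
    worst_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(worst_first) // 100)
    slowest = worst_first[:count]
    return 1000.0 / (sum(slowest) / len(slowest))


# Invented data: 1000 frames at a steady 5 ms with a warm shader cache,
# and the same run with ten 50 ms hitches standing in for compile stalls.
primed_run = [5.0] * 1000
cold_run = [5.0] * 990 + [50.0] * 10

for name, run in (("primed", primed_run), ("cold", cold_run)):
    print(f"{name}: avg {average_fps(run):.0f} fps, 1% low {one_percent_low_fps(run):.0f} fps")
```

In this toy case ten hitches barely move the average but set the 1% low entirely, which is one way three very different cards could end up with near-identical 1% figures if the stalls are the same length on each.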

mobby_6kl posted: Honestly looks pretty good if you don't give a poo poo about a 20 year old game and 1080p

It actually gets close to the 3060ti in some titles. But I'd say half of the games I play are pretty old, and I value the (relative) peace of mind of an established vendor. The Radeons just seem like much better value.

another thing that would be interesting to explore is using DXVK on windows, to see if it can beat intel's native DX10/DX11 implementation or the microsoft DX9-on-DX12 layer they use by default

doesn't look like any reviewers have thought to try that yet though

kliras posted: does anyone work with hosted video encoding to give any idea of what the cost-benefit analysis is for a company like twitch to move from h264 to av1? at what point in this transition do companies start saving money from the transition

If twitch is going to allow streamers to upload AV1? Almost immediately. If twitch is going to transcode streams to AV1 and make them available at a variety of bitrates to users? Well, YouTube chose to build their own chips to do that because available solutions weren't good enough: https://arstechnica.com/gadgets/2021/04/youtube-is-now-building-its-own-video-transcoding-chips/

mobby_6kl posted: Honestly looks pretty good if you don't give a poo poo about a 20 year old game and 1080p

It's still not particularly good in the Hardware Unboxed video. It's beaten by AMD's similarly priced cards in value and the driver situation still seems like a shitshow. The upsides over AMD for Intel are 1) better ray tracing, which is seen in the TechPowerUp review, though many games aren't very playable with ray tracing on no matter what, 2) better media encode, including AV1, and 3) XeSS on Intel hardware could be better than FSR2 in terms of both performance and image quality, but it honestly seems close enough to not really matter right now. Overall, there's not enough there to recommend Arc over RDNA2. And Arc may be better at 1440p than the 3060/6600 (on average), but this is the general performance tier where I'd ideally recommend 1080p instead anyway, and Intel just sucks at that for some reason.

fake edit: good lord, lol

that's the thing though. there are gonna be random games like this that just eat poo poo on arc

Twerk from Home posted: If twitch is going to allow streamers to upload AV1? Almost immediately. If twitch is going to transcode streams to AV1 and make them available at a variety of bitrates to users? Well, YouTube chose to build their own chips to do that because available solutions weren't good enough: https://arstechnica.com/gadgets/2021/04/youtube-is-now-building-its-own-video-transcoding-chips/

do you run into av1-like performance bottlenecks when transcoding from av1 to h264, or does it run similarly to h264-to-h264 transcodes?

I certainly wouldn't buy an arc this generation but I am kinda impressed with how it looks out of the gate, it's less terrible than I thought it would be. If it were on a cheaper OEM machine it'd be....fine? I just think we're all a bunch of enthusiasts and it's really not ready for that market yet.

I just wanna say that the Intel branded Arc cards themselves look so good. None of that garish gamer aesthetics. No Dark Obelisk.
