BoldFace posted: I've heard somewhere that images in batches use sequential seeds. If the seed number is set to 340129172, the fourth image in the batch would use the seed 340129175.

| # ? May 29, 2024 20:05 |

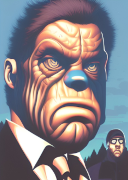

Brutal Garcon posted: I believe the seeds in a batch are just sequential.
BoldFace posted: I've heard somewhere that images in batches use sequential seeds. If the seed number is set to 340129172, the fourth image in the batch would use the seed 340129175.
mobby_6kl posted: Yes, that's correct, but the images in the preview window don't always seem to be in sequential order. So it's better to click the specific one you want before clicking the recycle icon.
Thank you all! As a gift, here's a sample from a project I'm making that your advice has directly affected, because this image was the 3rd one.
Family Matters
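For anyone who wants the seed arithmetic spelled out, here is a minimal sketch. It assumes the sequential behaviour described in the quotes above really holds in your UI; `batch_seeds` and `seed_for_image` are made-up helper names, not part of any web UI.

```python
def batch_seeds(base_seed: int, batch_size: int) -> list[int]:
    """Seeds assumed for each image in a batch: base_seed, base_seed + 1, ..."""
    return [base_seed + i for i in range(batch_size)]


def seed_for_image(base_seed: int, position: int) -> int:
    """Seed for the image at 1-based `position` in the batch (assumed sequential)."""
    if position < 1:
        raise ValueError("position is 1-based and must be >= 1")
    return base_seed + (position - 1)


# The example from the thread: fourth image of a batch started at 340129172.
print(seed_for_image(340129172, 4))  # 340129175
```

Since the preview order is apparently not always sequential, treat the computed seed as a guess and confirm it by clicking the image itself.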

Can any of the current engines do, like, the exact image you upload to them but in a different art style? I just want to jazz up my own ugly face with Disco Elysium portrait-style or something, but still have it be recognizably me.

Where can I find this leaked anime model?

Megazver posted: Can any of the current engines do, like, the exact image you upload to them but in a different art style? I just want to jazz up my own ugly face with Disco Elysium portrait-style or something, but still have it be recognizably me.
Stable Diffusion + Dreambooth can generate images based on a new subject. But you probably want something like style transfer if you want the exact same picture with a different style applied.
wibble posted: Where can I find this leaked anime model?
https://rentry.org/sdg_FAQ

Megazver posted: Can any of the current engines do, like, the exact image you upload to them but in a different art style? I just want to jazz up my own ugly face with Disco Elysium portrait-style or something, but still have it be recognizably me.
You'd normally use img2img on a photo of yourself, but since the Disco Elysium art style is probably not in the model's training data, your best bet is to use Dreambooth to train it on your face. That unfortunately requires a pretty powerful GPU.
https://www.youtube.com/watch?v=7m__xadX0z0

It's possible to train textual inversion and hypernetworks on a pretty mediocre GPU like the 1070. Getting the result rendered in the right style is a bit of a fight, but doable:
https://ericri.medium.com/you-the-multiverse-of-you-and-stable-diffusion-93c2b8b8b3f6
https://ericri.medium.com/ai-art-of-me-textual-inversion-vs-dreambooth-in-stable-diffusion-5e54bb2b881
wibble posted: Where can I find this leaked anime model?
magnet:?xt=urn:btih:5bde442da86265b670a3e5ea3163afad2c6f8ecc&dn=novelaileak

Is the NovelAI model good for anything other than single-subject waifu portraits? I saw that it has better coherency than StableDiffusion at non-square aspects, but that's a pretty low bar to clear and MJ + D2 (with outpainting) can handle those fine too. Is there any improvement to the practical things like action shots? Hand-object interactions? Multiple characters?

Thanks for the answers, everyone.

Elotana posted: Is the NovelAI model good for anything other than single-subject waifu portraits? I saw that it has better coherency than StableDiffusion at non-square aspects, but that's a pretty low bar to clear and MJ + D2 (with outpainting) can handle those fine too. Is there any improvement to the practical things like action shots? Hand-object interactions? Multiple characters?
From what I understand, hands are better.

Elotana posted: Is the NovelAI model good for anything other than single-subject waifu portraits? I saw that it has better coherency than StableDiffusion at non-square aspects, but that's a pretty low bar to clear and MJ + D2 (with outpainting) can handle those fine too. Is there any improvement to the practical things like action shots? Hand-object interactions? Multiple characters?
One thing I noticed is that it can do full artist effects, especially manga, better than MJ or default SD, but that comes down to what's in the training images. For example, non-NAI Junji Ito will give you something like fan art of his style.
NAI will go full-on for it, like his real artwork. (This is a sample of his work, not something made with AI.)
I'll see if I can give a demo later.

Elotana posted: Is the NovelAI model good for anything other than single-subject waifu portraits? I saw that it has better coherency than StableDiffusion at non-square aspects, but that's a pretty low bar to clear and MJ + D2 (with outpainting) can handle those fine too. Is there any improvement to the practical things like action shots? Hand-object interactions? Multiple characters?
I think it's a problem with the training data. The training images have human-generated tags like "1girl, 1boy, red hair, blue hair, apple", but there is no tag for "a red-headed girl giving an apple to a boy with blue hair". If there is only one subject in the image, almost all of the tags refer to that subject and the AI is able to draw it pretty well. It is possible to use something like inpainting to select different parts of the image to draw different characters in, but it's difficult to have them interact with each other.
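To make the tagging point concrete, here is a toy sketch of how a flat tag list throws away who-does-what. The scene dictionaries and the `booru_tags` helper are invented for illustration; this is an assumption about the general shape of tag captions, not a description of how NovelAI's training pipeline actually works.

```python
def booru_tags(scene: dict) -> frozenset[str]:
    """Flatten a scene into an unordered set of tags, booru style."""
    tags = set()
    for character in scene["characters"]:
        tags.add(character["kind"])              # e.g. "1girl"
        tags.add(f'{character["hair"]} hair')    # e.g. "red hair"
    tags.update(scene["objects"])
    # Note: scene["action"] is never used; the tags have nowhere to put it.
    return frozenset(tags)


scene_a = {
    "characters": [{"kind": "1girl", "hair": "red"}, {"kind": "1boy", "hair": "blue"}],
    "objects": ["apple"],
    "action": "girl gives apple to boy",
}
scene_b = {
    "characters": [{"kind": "1girl", "hair": "blue"}, {"kind": "1boy", "hair": "red"}],
    "objects": ["apple"],
    "action": "apple sits on a table behind them",
}

# Two clearly different pictures end up with identical captions.
print(booru_tags(scene_a) == booru_tags(scene_b))  # True
```

With a single subject there is nothing to mix up, which fits the observation that solo portraits come out fine while interactions don't.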

Honestly, MJ seems reasonably competent at Junji Ito? (The prompt for this was literally just "surprise me, junji ito" with default settings, then remastered to --test --creative.)
Whereas it will completely flop emulating the "generic" pixiv style due to a combination of low internal resolution and training size. NovelAI definitely has that down pat from what I've seen, so I'm wondering whether the hyper-specific tagging of the booru datasets helped anything else.
Elotana fucked around with this message at 17:18 on Oct 17, 2022

Went to a twelve-hour Labyrinth masquerade ball recently. Bowie was everywhere. I asked Dream to make a David Bowie Bagel.

Elotana posted: Whereas it will completely flop emulating the "generic" pixiv style due to a combination of low internal resolution and training size. NovelAI definitely has that down pat from what I've seen, so I'm wondering whether the hyper-specific tagging of the booru datasets helped anything else.
The trinart2 model is trained specifically on detailed, artsy, trending-on-pixiv style images, so it can be better for that kind of result than the general anime models, which also include a lot of simpler art in the training set.
It goes for that kind of style even without specific details or style modifiers in the prompt. For example, here the prompt is just "among us",
and here it is "final fantasy".

https://twitter.com/StabilityAI/status/1582053531686645760

BrainDance posted: Yes, it tells it to use cuda twice and only one of them is gonna be necessary. I got too lazy to figure out which way works.
This is the code I use. The 2 magic incantations are the .to(device) bits, which send the data to the device selected above. You can also see if you actually have cuda available by adding a print(f'Using device {device}') after the device = "cuda:0" etc. line.
Python code:
Wheany fucked around with this message at 18:21 on Oct 17, 2022
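The code from the post above did not survive in this copy, so here is a minimal sketch of the pattern it describes, not Wheany's actual code. It assumes PyTorch is installed; `pick_device` and `run_on_device` are hypothetical names, and the tiny `Linear` layer is just a stand-in for whatever model you are running.

```python
def pick_device(cuda_available: bool, index: int = 0) -> str:
    """Return "cuda:<index>" when CUDA is usable, otherwise fall back to "cpu"."""
    return f"cuda:{index}" if cuda_available else "cpu"


def run_on_device():
    """Move a stand-in model and a batch of data to the selected device."""
    import torch  # assumption: PyTorch is installed

    device = pick_device(torch.cuda.is_available())
    print(f"Using device {device}")  # the sanity check suggested in the post

    # The two ".to(device)" calls: one for the model, one for the data.
    model = torch.nn.Linear(4, 2).to(device)
    batch = torch.randn(8, 4).to(device)

    with torch.no_grad():
        return model(batch)
```

Call `run_on_device()` to try it. If it prints `cpu` on a machine with an NVIDIA card, the likely culprit is a CPU-only PyTorch build rather than the script.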

Great, I'm sure 1.5 will get released annnnnny minute now

cool. I bet their models will be released even more frequently and for free! Just like.... openai...
edit: Yep, kinda peeved how OpenAI and SD use "open source" in all their pitches when the only open thing is how easy it is to use the paid API. They don't even release code, so people can't make their own models.
EVIL Gibson fucked around with this message at 18:55 on Oct 17, 2022

They're a little more immune to that kind of crap. During a recent Q&A, Emad mentioned that all of Stability's engineers have clauses in their contracts letting them open-source any of the models at any time. So even if the money makes them want to kind of turn inwards institutionally, they won't be able to shut all that stuff up.

RPATDO_LAMD posted: They're a little more immune to that kind of crap. During a recent Q&A, Emad mentioned that all of Stability's engineers have clauses in their contracts letting them open-source any of the models at any time. So even if the money makes them want to kind of turn inwards institutionally, they won't be able to shut all that stuff up.
but they won't, because of the implication

Isn't talking about the models themselves kind of a red herring? It's the training process and resulting weights that are cost prohibitive, and while Emad made a big splash releasing 1.4 he doesn't have infinite money. I could see those only being released on a delayed basis, like when a new version is out.

I used the Automatic1111 checkpoint merger to mix the standard SD 1.4 with the NovelAI leak and used it for img2img.
prompt: best quality, Junji Ito, Geiger
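Roughly what a checkpoint merge does, as far as I understand it: in weighted-sum mode each weight of the result is an interpolation between the two models. The sketch below assumes that formula and uses plain lists of floats instead of real tensors; `weighted_sum_merge` and the toy weight names are made up for illustration and are not the web UI's code.

```python
def weighted_sum_merge(model_a: dict, model_b: dict, multiplier: float) -> dict:
    """Interpolate two weight dicts: result = A * (1 - multiplier) + B * multiplier."""
    if not 0.0 <= multiplier <= 1.0:
        raise ValueError("multiplier must be between 0 and 1")
    if model_a.keys() != model_b.keys():
        raise KeyError("both models must have the same weight names")

    merged = {}
    for name, weights_a in model_a.items():
        weights_b = model_b[name]
        if len(weights_a) != len(weights_b):
            raise ValueError(f"shape mismatch for {name}")
        merged[name] = [
            (1.0 - multiplier) * a + multiplier * b
            for a, b in zip(weights_a, weights_b)
        ]
    return merged


# Toy stand-ins for two checkpoints with identical layouts.
sd14 = {"layer.weight": [0.0, 2.0]}
other = {"layer.weight": [2.0, 4.0]}
print(weighted_sum_merge(sd14, other, 0.5))  # {'layer.weight': [1.0, 3.0]}
```

A multiplier of 0 gives back the first model and 1 gives back the second, so the slider is effectively a dial between the two styles.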

I don't like the new season of Attack on Titan.

Elotana posted: Isn't talking about the models themselves kind of a red herring? It's the training process and resulting weights that are cost prohibitive, and while Emad made a big splash releasing 1.4 he doesn't have infinite money. I could see those only being released on a delayed basis, like when a new version is out.
At least I'm not talking about models. Stable Diffusion's full suite and special sauce for training existing and entirely new models can be found here: https://github.com/pesser/stable-diffusion
Go to OpenAI's GitHub page: https://github.com/orgs/openai/repositories
The most popular projects are tools to access their closed-source API. None of their tools will let you make something similar to Dall-e.
Now we talk about models. You can download models from SD. You cannot for OpenAI, but I am mostly talking about "open source".
EVIL Gibson fucked around with this message at 19:56 on Oct 17, 2022

Elotana posted: Isn't talking about the models themselves kind of a red herring? It's the training process and resulting weights that are cost prohibitive, and while Emad made a big splash releasing 1.4 he doesn't have infinite money. I could see those only being released on a delayed basis, like when a new version is out.
In fact 1.5 is already trained and the beta is available on the dreamstudio website. They are doing some last-minute refinement or safety checking or something before releasing the weights publicly. Same thing happened with 1.4: it was available privately a few weeks before they dropped the public weights.

WhiteHowler posted: I don't like the new season of Attack on Titan.
E: on my first attempt I had inpaint set on 'fill' instead of 'original'

Elotana posted: Honestly, MJ seems reasonably competent at Junji Ito? (the prompt for this was literally just "surprise me, junji ito" with default settings, then remastered to --test --creative)
you must combine with Jon Burgerman to unlock the full power of Junji Ito!

This AI stuff is magical. Used Stable Diffusion to put Christopher Walken in Twin Peaks and it was just so easy. I've only been playing around with it for a few days, so I'm real pleased I could get something recognizable.
Doing this I ran into a few issues, so I've got a couple of questions on process.
For this picture, the initial prompt was 'Christopher Walken and Kyle MacLachlan in the Red Room, still from a 1990 episode of Twin Peaks'. The first generation (which sadly I forgot to save) mostly understood that there should be two humans, but each figure had blended features from both actors. To correct that, I picked the most distinct image and sent it to Inpaint. In Inpaint, I masked out one face, set denoising to 0.25 and altered the prompt to 'Christopher Walken in the Red Room, still from a 1990 episode of Twin Peaks'. Once that had generated a decent Chris Walken, I repeated it on the other face for Kyle. This always seems to lead to those overprocessed-looking faces. Is there anything I should be doing differently to get a better result?
The other issue I ran into is that originally I wanted Walken to be holding a log, but SD has a hard time understanding that sort of thing even without multiple people in the image. Once I'd arrived at the above image, I tried to insert a log using the above method, but in a whole bunch of attempts it didn't generate anything that remotely looked like it fit. Any tips for seamlessly adding objects to an existing scene, or is that currently basically impossible?

Ripper Swarm posted: Any tips for seamlessly adding objects to an existing scene, or is that currently basically impossible?
Crudely add the object to the scene, then img2img to smooth out the harsh edges and integrate it.

Elotana posted: Honestly, MJ seems reasonably competent at Junji Ito? (the prompt for this was literally just "surprise me, junji ito" with default settings, then remastered to --test --creative)
I stand corrected. It looks like Novel AI is hard trained to make good looking images.
NovelAI
SD 1.4
Both used the same prompt: a hyper detailed of a morbid scene of a slaughter room (((in the style of Junji_Ito))), crosshatching
edit: I just realized, I am using the SFW version now. I am working away from home, so I'll have to load the NSFW model back in to see.

Ripper Swarm posted: Once I'd arrived at the above image, I tried to insert a log using the above method, but in a whole bunch of attempts it didn't generate anything that remotely looked like it fit. Any tips for seamlessly adding objects to an existing scene, or is that currently basically impossible?
I've generally found inpainting to only be useful with it set to base it on the original, but that also means it can only get so far away from what you've masked. It's basically taking the area you've masked, blurring it, and then regenerating with the blurred area as the basis. So you'll probably have better luck if you draw or photoshop a log in where you want it, and then inpaint over that.
If you do a very flat MS Paint style drawing you'll generally end up with something very flat looking, but I've found that adding a bunch of noise over a crappy drawing gives it more to work with. You'll probably get the best results using a photo source for the log being held, though.
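The "add a bunch of noise over a crappy drawing" step can be sketched roughly like this. It is a toy version on a plain grayscale grid using only the standard library, with made-up names (`add_noise_to_region`, `sigma`); in practice you would do the same thing in an image editor or with an imaging library before sending the picture to inpainting.

```python
import random


def add_noise_to_region(pixels, mask, sigma=40.0, seed=None):
    """Return a copy of a grayscale image with Gaussian noise added where mask is True.

    pixels: list of rows of ints in 0..255; mask: same shape, True = add noise.
    """
    rng = random.Random(seed)
    noisy = []
    for row, mask_row in zip(pixels, mask):
        new_row = []
        for value, masked in zip(row, mask_row):
            if masked:
                value = int(round(value + rng.gauss(0.0, sigma)))
                value = max(0, min(255, value))  # clamp to the valid pixel range
            new_row.append(value)
        noisy.append(new_row)
    return noisy


# A flat grey "drawing" with noise applied only to the middle row.
flat = [[128, 128, 128] for _ in range(3)]
middle_only = [[False] * 3, [True] * 3, [False] * 3]
textured = add_noise_to_region(flat, middle_only, seed=1)
print(textured[0], textured[2])  # untouched rows: [128, 128, 128] [128, 128, 128]
```

Only the masked area picks up texture, which matches the idea of roughing up the pasted-in log while leaving the rest of the scene alone.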

Rutibex posted: you must combine with Jon Burgerman to unlock the full power of Junji Ito!
Oh man, these are amazing. This is why I love this AI poo poo - you can just type in two different artists and voila, you can see what they'd make if they were merged into a single artist.

Further Automatic1111 tips: If you put an mp3 titled "notification.mp3" in the webui root folder, the next time you launch it and afterwards it will play that mp3 when it's done generating a batch.

Yes...haha, yes!
From this crude stock picture / MSPaint addition
to this
First pass, so it could be made better, but it works. Definitely seems like it would be better to composite the scene first and then get the AI involved so you don't accidentally lose details, but 'composite first' feels like advice that applies to basically all art anyway.
...goddamn, if the txt2image feels like a search engine that gives you pictures that don't exist, the img2img feels like you're a wizard magicking a lump of clay into a living creature

The most powerful Brit has transcended the physical and is now traveling the metaphysical, if you remember this prompt from a while back.

Just because DallE2 has filters doesn't mean you can't get some real horror out of it.

Jesus Christ this is perfect for Halloween but drat

oh boy you really did it

the Mods, they knew!
