
xgalaxy posted: Doesn't explain why we have no evidence of someone with an FE edition having this problem.

it might just be sampling bias: FE cards are only sold in a handful of countries, so presumably there are far fewer of them in the wild than AIB cards. there was a second report of an MSI PSU with native 12VHPWR melting today, so score another for the adapter maybe not being the problem specifically


| # ? Jun 4, 2024 07:22 |


Virtually every cable I've seen appears to have the melted terminals pushed back through the housing, which has always pointed to a problem on insertion. If it's not happening with FEs, it's probably because Nvidia used a better housing that holds the terminals in place more strongly, or perhaps there are tighter tolerances so it's impossible to shove it in at enough of an angle that the pin starts driving the terminal backwards. It's always been obvious that the heat is happening at the tip of the connector, and that's going to happen when the length of pin and terminal that are touching is too short. This specific kind of problem has existed as long as PCs have, people used to drive the terminals out of molex connectors all the time.


xgalaxy posted: Doesn't explain why we have no evidence of someone with an FE edition having this problem.

FE owners are smarter and better looking, and always fully insert their plugs. (Also still small N, I think.)


Trying to price out a build for a friend. What's the best 1080p card for $300? No real preference for team.


K8.0 posted: Virtually every cable I've seen appears to have the melted terminals pushed back through the housing, which has always pointed to a problem on insertion. If it's not happening with FEs, it's probably because Nvidia used a better housing that holds the terminals in place more strongly, or perhaps there are tighter tolerances so it's impossible to shove it in at enough of an angle that the pin starts driving the terminal backwards.

Nvidia supplied all of the connectors to AIBs.


buglord posted: Trying to price out a build for a friend. What's the best 1080p card for $300? No real preference for team.

6600 XT


buglord posted: Trying to price out a build for a friend. What's the best 1080p card for $300? No real preference for team.

Used 3060 Ti


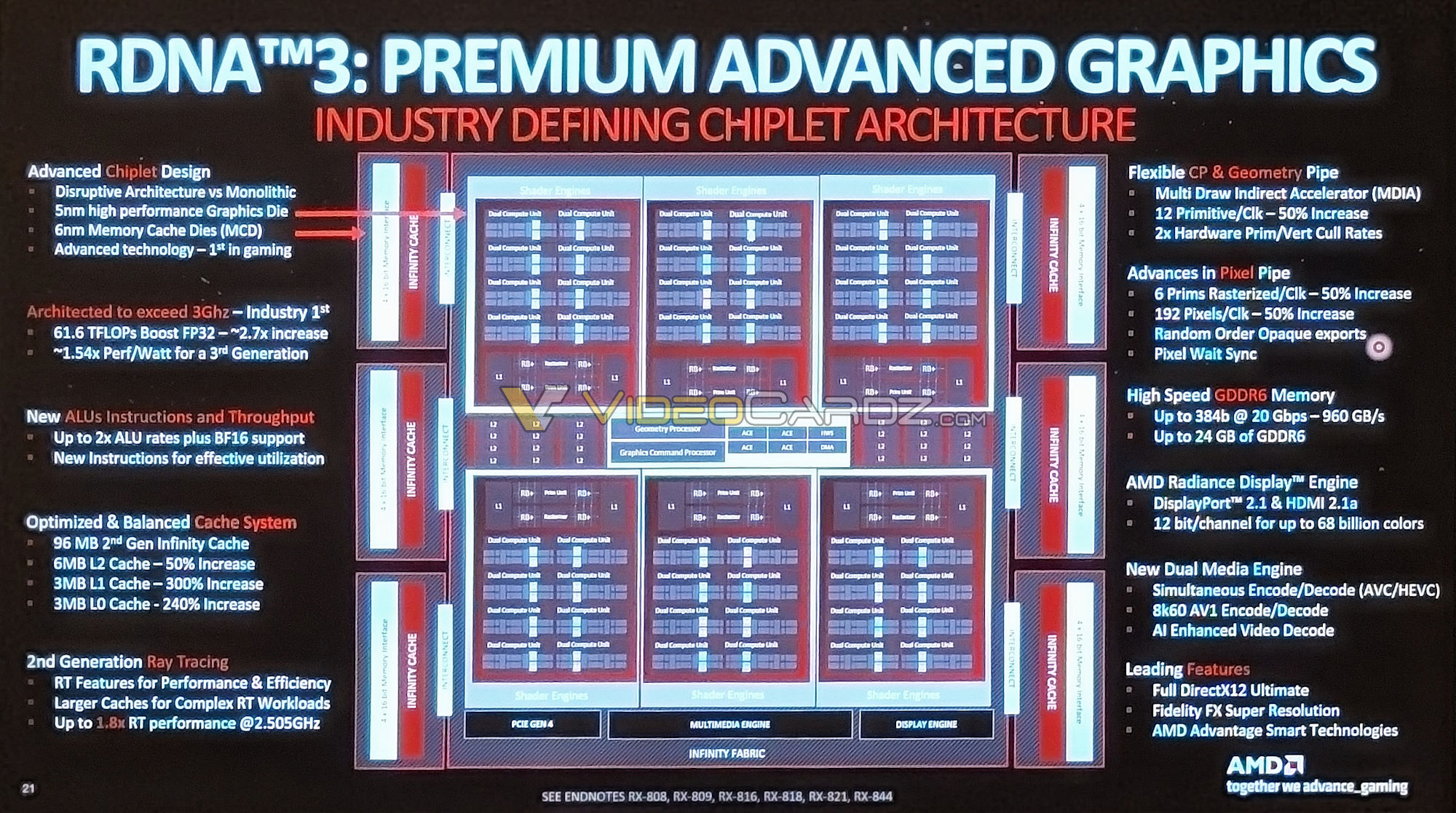

Paul MaudDib posted: Long term, if AMD can get to MCM next gen (even just a pair of GCDs in direct-attach configuration) they win, the scotsman is absolutely right about that, I agree. But 300mm2 GCD just isn't enough to win yet. I guess that's RDNA4, but, NVIDIA gets their riposte too.

Alleged slide from the presentation, courtesy of Videocardz. Hmm. It doesn't seem like they were able to divest *anything* from the GPU die except the memory interface(s). And then, as a result, they found themselves with a bunch of spare room that they decided to whack L3 onto. I don't... it's not like the Zen 1 block diagrams, right? Where you looked at the Zen 1 block diagram and went, "Ah, I see where the next evolution is going to be: they will take the two side-by-side 4-core CCXes and fuse them together into a single unified 8-core CCX with unified L3 cache, that they then bond with another 8-core CCX to make their new CCD." They wouldn't, of course, they'd just leave it at a single CCX per CCD/die. And it took them two generations to unify the L3 across all eight cores, but you could see where the tech was going. I don't know what they do here unless they go vertical. Stacking cache on top of the MCDs is what everyone thinks, but is AMD crazy enough to stack GCDs? We might need that original pre-revision 4090 cooler if that's the case.


RDNA3's flavour of chiplets almost looks like an HBM architecture adapted to outboard GDDR


Oh yeah, speaking of different memory: I also don't understand how their growth plan for eventual GDDR6X adoption will work out. Faster memory allows for a narrower memory bus, so do they just not connect all the MCDs to the RAM? Do they put in 4 bigger MCDs with the same amount of (or more) L3 as 6 small MCDs? Tinfoil hat theory: the asynchronous clocks thing is them tipping their hand. The bottom GCD die runs all the slow bits and doesn't put out as much heat; the upper GCD die runs all the hot bits and gets prime cooler placement. Stack cache dies on top of the MCDs instead of structural silicon. There are TSVs in the design that they aren't talking about. Somebody take a grinder and an electron microscope and take photos. edit: I'm thinking in terms of monolithic dies too much. AMD has no "reticle limit", they don't have to worry about fringing at the edges. They could blow past Nvidia's Hopper at roughly 800mm^2. Make their GPUs 2000mm^2 by assembling them out of chiplets. Spread that heat out over a massive area; it'll be the inverse of the problem with current Zen chips, where the heat is being concentrated in the I/O die.


If they do die stacking on the MCDs, do they really need to do GDDR6X? The whole point of the stupid-size cache approach is that it lets you get away with lower-bandwidth, cheaper VRAM than you'd otherwise need, after all.


depends what you're doing, rasterization scales well with cache but advanced RT effects are inherently incoherent and tend to blow up caches
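To put rough numbers on that trade-off, here's a back-of-envelope sketch under a simplification of my own (not any vendor's formula): if only cache misses go out to VRAM, the bandwidth the shaders can chew through is roughly VRAM bandwidth divided by the miss rate. The 512 GB/s figure and both hit rates are made-up illustrative values, and the model assumes the cache itself never becomes the bottleneck.

```python
def dram_traffic_gbs(demand_gbs: float, hit_rate: float) -> float:
    """Traffic that actually reaches VRAM: only cache misses go out to DRAM."""
    return demand_gbs * (1.0 - hit_rate)


def max_demand_gbs(dram_bw_gbs: float, hit_rate: float) -> float:
    """Most bandwidth the shaders can consume before VRAM saturates.

    Simplifying assumptions: the cache itself is never the bottleneck and
    the hit rate does not change as demand grows.
    """
    if hit_rate >= 1.0:
        return float("inf")
    return dram_bw_gbs / (1.0 - hit_rate)


# Illustrative values only: 512 GB/s of VRAM behind a large last-level cache.
VRAM_GBS = 512.0
for label, hit in [("cache-friendly raster", 0.6), ("incoherent RT", 0.2)]:
    print(f"{label}: {hit:.0%} hit rate -> ~{max_demand_gbs(VRAM_GBS, hit):.0f} GB/s usable")
```

The point is the shape, not the numbers: the same VRAM goes a lot further when the hit rate is high, and that headroom shrinks fast once incoherent rays drag the hit rate down.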


repiv posted:depends what you're doing, rasterization scales well with cache but advanced RT effects are inherently incoherent and tend to blow up caches


incoherent as in random. with traditional rasterization methods, adjacent pixels/threads access nearly the same data, which caches very nicely, but RT effects are typically based on stochastic sampling where rays fire off in random directions and there's little correlation in what data they touch, even if they spawned near each other. take reflections as an example: if you raytrace the reflections in a perfect mirror then the reflection rays will still be parallel/coherent and you might get some mileage out of the cache. but for reflections in a rougher surface, the rays scatter randomly in a cone and diverge from each other, expanding the amount of data required until the cache bursts. with diffuse global illumination the rays scatter over an entire hemisphere, which is even harder.
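A toy way to see the cache effect: a small LRU cache fed with two access patterns. In the "coherent" pattern, groups of neighbouring rays walk through nearly the same scene data (the mirror case); in the "incoherent" one, every ray touches unrelated data (the rough/diffuse case). The cache size, scene size and the idea of a "scene line" are all made-up assumptions; real GPU caches and BVH traversal are far more complicated.

```python
import random
from collections import OrderedDict


def lru_hit_rate(addresses, capacity):
    """Hit rate of a fully associative LRU cache holding `capacity` lines."""
    cache = OrderedDict()
    hits = 0
    for addr in addresses:
        if addr in cache:
            hits += 1
            cache.move_to_end(addr)  # mark as most recently used
        else:
            cache[addr] = None
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used line
    return hits / len(addresses)


def coherent_rays(num_rays, steps, rays_per_neighbourhood=8):
    """Neighbouring rays walk through (almost) the same scene lines,
    like parallel reflection rays off a perfect mirror."""
    for ray in range(num_rays):
        base = ray // rays_per_neighbourhood
        for step in range(steps):
            yield base + step


def incoherent_rays(num_rays, steps, scene_lines, seed=0):
    """Each ray touches unrelated scene lines, like rough or diffuse bounces."""
    rng = random.Random(seed)
    for _ in range(num_rays):
        for _ in range(steps):
            yield rng.randrange(scene_lines)


CAPACITY = 64  # hypothetical cache size, in lines
print("coherent:  ", lru_hit_rate(list(coherent_rays(4096, 8)), CAPACITY))
print("incoherent:", lru_hit_rate(list(incoherent_rays(4096, 8, 1_000_000)), CAPACITY))
```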


Shumagorath posted: I lasted one lecture of my graphics course before dropping it in my usual register-six take-five scheme. Is there a good source on what "inherently incoherent" means in this context?

raytracing produces a very noisy output (until you get to absurd amounts of samples per pixel anyway, which no card can do), which is then reconstructed (denoised) to look normal e;fb
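A minimal sketch of what "noisy, then reconstructed" means, assuming a toy renderer whose every sample is uniform noise around a flat grey (true value 0.5): RMS error falls roughly as 1/sqrt(samples per pixel), so halving the noise costs about 4x the rays, which is why real-time RT leans on a denoiser instead. The box blur is only a stand-in; actual denoisers are edge-aware and use extra inputs, and a blur only works this well here because the toy image is a flat colour.

```python
import math
import random


def render_row(width, spp, rng):
    """Toy path tracer: each sample is uniform noise in [0, 1), so the
    converged (ground-truth) value of every pixel is 0.5."""
    return [sum(rng.random() for _ in range(spp)) / spp for _ in range(width)]


def rms_error(row, truth=0.5):
    """Root-mean-square distance of the rendered row from the true value."""
    return math.sqrt(sum((p - truth) ** 2 for p in row) / len(row))


def box_denoise(row, radius):
    """Crude reconstruction: average each pixel with its neighbours."""
    out = []
    for i in range(len(row)):
        lo, hi = max(0, i - radius), min(len(row), i + radius + 1)
        out.append(sum(row[lo:hi]) / (hi - lo))
    return out


rng = random.Random(1)
for spp in (1, 4, 16, 64):
    print(f"{spp:>3} spp: RMS error {rms_error(render_row(2000, spp, rng)):.4f}")
noisy = render_row(2000, 1, rng)
print(f"1 spp + box denoise: RMS error {rms_error(box_denoise(noisy, 4)):.4f}")
```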


Thank you both!


I think the terminology might be wrong, but basically rasterization has a fairly organized and predictable memory access pattern, which lends itself well to cache use. It is easy to guess what data will be needed next and pull it into the cache so it is already there when the GPU needs to start working on it, with no delay. Whereas ray tracing is random rear end bullshit that shoots off rays in every direction at once, meaning it's just slamming memory with a totally unpredictable access non-pattern that makes attempting to cache it mostly futile.


there's a tension too, because optimizations for getting more value out of fewer rays focus on deliberately decorrelating the rays so each ray produces more unique data for the denoiser to work with. for example, RT shadows in their naïve form are kinda coherent because adjacent pixels are shooting shadow rays in roughly the same direction towards the same light. but that doesn't scale: if you shoot shadow rays for every light which touches a pixel, then your ray count will blow up in complex scenes with lots of lights. the improvement nvidia came up with (which underpins portal RTX, that RC car demo and cyberpunk RT overdrive) instead samples just one random light per pixel, so they can handle an unlimited number of lights with a fixed ray budget, but that means there's practically no correlation between adjacent pixels anymore because they're probably shooting rays towards different lights, and the cache becomes very sad.
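A stripped-down sketch of the fixed-budget idea, assuming plain uniform light selection (what NVIDIA actually ships chooses lights far more cleverly than this): instead of one shadow ray per light, pick one light at random and divide its contribution by the pick probability, so the estimate is right on average. The last few lines show the cache problem from the post: neighbouring pixels almost never aim at the same light. The 50-light setup is hypothetical.

```python
import random


def all_lights(contributions):
    """Naive version: one shadow ray per light, so cost scales with the light count."""
    return sum(contributions)


def one_random_light(contributions, rng):
    """Fixed budget: one shadow ray to a uniformly chosen light.

    Dividing by the pick probability (1/N), i.e. multiplying by N, keeps the
    estimate unbiased: correct on average, noisy per pixel.
    """
    n = len(contributions)
    return contributions[rng.randrange(n)] * n


# Hypothetical pixel lit by 50 lights of increasing strength.
lights = [0.1 * k for k in range(1, 51)]
rng = random.Random(0)
estimates = [one_random_light(lights, rng) for _ in range(100_000)]
print("exact:", all_lights(lights))
print("mean of one-light estimates:", sum(estimates) / len(estimates))

# Cache-unfriendly side effect: how often do neighbouring pixels pick the same light?
picks = [rng.randrange(len(lights)) for _ in range(10_000)]
same = sum(a == b for a, b in zip(picks, picks[1:])) / (len(picks) - 1)
print("neighbours aiming at the same light:", same)
```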


PC LOAD LETTER posted: If they do die stacking on the MCD's do they really need to do GDDR6X?

Yes, but at the flagship and slightly-under level, these cards are being measured up against 4K-and-beyond performance, where there is no replacement for raw bandwidth, cache size be damned unless it's like a whole gigabyte. We knew full well the 256-bit bus from the 6900 was never going to make it into the 7900 series and not get laughed out of the building. Also the would-have-been laughingstock that is the 192-bit bus on the cancelled 4080.
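For reference, peak VRAM bandwidth is just bus width times per-pin data rate, divided by 8 bits per byte. The bus widths and data rates below are illustrative pairings, not quoted specs for particular cards:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak VRAM bandwidth in GB/s: every bus pin moves `data_rate_gbps`
    gigabits per second, and there are 8 bits in a byte."""
    return bus_width_bits * data_rate_gbps / 8


# Illustrative bus widths and per-pin data rates only.
for bus, rate in [(256, 16.0), (192, 21.0), (384, 20.0)]:
    print(f"{bus}-bit @ {rate} Gbps -> {peak_bandwidth_gbs(bus, rate):.0f} GB/s")
```

A 192-bit bus with faster memory lands in roughly the same place as a 256-bit bus with slower memory, which is the narrower-bus-plus-GDDR6X trade-off raised earlier in the thread.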


Seamonster posted: Yes, but at the flagship and slightly-under level, these cards are being measured up against 4K-and-beyond performance, where there is no replacement for raw bandwidth, cache size be damned unless it's like a whole gigabyte. We knew full well the 256-bit bus from the 6900 was never going to make it into the 7900 series and not get laughed out of the building. Also the would-have-been laughingstock that is the 192-bit bus on the cancelled 4080.

AMD sells CPUs with 768MB of L3 cache; if they wanted to put 1GB of cache on a GPU, they could!


AMD comes off as fundamentally less interested in RT. Assuming that the next gen of consoles sticks with them and continues not to have dedicated/"good" RT hardware, that'll just push out the horizon for the gamedevs to move to RT solutions en masse.


Seamonster posted: Also the would-have-been laughingstock that is the 192-bit bus on the cancelled 4080.

the cut-down 4080 has just been renamed to 4070, it's not canceled.


shrike82 posted: AMD comes off as fundamentally less interested in RT. Assuming that the next gen of consoles sticks with them and continues not to have dedicated/"good" RT hardware, that'll just push out the horizon for the gamedevs to move to RT solutions en masse.

I was super-excited to get a 3080 and crank up all of the RT sliders in CP2077, except that one INSANE or whatever setting, which I set at THE-TEETERING-BRINK-OF-INSANITY instead. And the game looks great: it's so much more real, and there are entire sets of lighting effects and emitters that simply don't exist if RT isn't present, etc. IIRC the kerbside advertising (literally in the side of the kerb) is a simple decal without RT and becomes a light emitter with RT, for example. The increase in the number of active light emitters is great. But it's also true that the "faked" raster lighting is so well done in that game that in many scenes I couldn't tell the difference without stopping and looking for it. I had to teach myself the differences so I could be happy to be in the superior RT world (street lamps in rainy scenes are infinitely superior, for example), but I wouldn't have noticed if I hadn't been looking for those improvements. Except for reflections and partial transparency: RT is just superb there, no doubt. But other lighting effects are less pronounced in their improvements. Which is to say, IMO there's a lot of room for raster to continue looking good, and also IMO RT is still way over-priced relative to the rest of a decent PC or console, so we're probably a good ways away still from Metro Exodus EE being the norm.


Twerk from Home posted: AMD sells CPUs with 768MB of L3 cache; if they wanted to put 1GB of cache on a GPU, they could!

SwissArmyDruid posted:


ConanTheLibrarian posted: They could take a leaf out of Apple's book and design the next set of GCDs similarly to the M1 Max, where you can connect two of them to make an M1 Ultra. The chiplet layouts are superficially alike after all, memory surrounding compute.

AMD is using the same TSMC 2.5D packaging (CoWoS-L or InFO-LSI) as Apple is with the M1 Ultra, but I think the Ultra is a bit of a cautionary tale and shows why AMD and Nvidia haven't used that same tech to make a multi-GCD GPU yet. There are absolutely situations where the Ultra is able to 2x the M1 Max's GPU performance, but there are also plenty of other circumstances where that hasn't been the case despite having 2x the silicon thrown at the problem... specifically games. I imagine if AMD/Nvidia/Intel thought it was ready for prime time, they would jump all over it, as it would dramatically increase yield per wafer. That AMD is taking this kinda half step with only the memory controllers makes me think they weren't fully convinced that InFO-LSI would be enough for a multi-compute-die config. We will def see it in workcenter loads that don't stress the interconnect as much.
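Rough numbers on the yield argument, using the textbook Poisson yield model, Y = e^(-A·D0), and the common dies-per-wafer approximation for a 300 mm wafer. The defect density is an assumed illustrative value, not foundry data, and the model ignores packaging yield, the area spent on die-to-die links, and scribe lines, which is exactly where the interconnect caveats above come in.

```python
import math

WAFER_DIAMETER_MM = 300.0


def gross_dies_per_wafer(die_area_mm2: float, wafer_d: float = WAFER_DIAMETER_MM) -> int:
    """Common approximation: wafer area / die area, minus a term for partial
    dies lost around the edge. Ignores scribe lines and edge exclusion."""
    radius = wafer_d / 2
    dies = math.pi * radius**2 / die_area_mm2 - math.pi * wafer_d / math.sqrt(2 * die_area_mm2)
    return math.floor(dies)


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Simple Poisson yield model: Y = exp(-A * D0), with A converted to cm^2."""
    return math.exp(-(die_area_mm2 / 100.0) * defects_per_cm2)


def good_dies(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Expected defect-free dies per wafer."""
    return gross_dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_cm2)


D0 = 0.1  # assumed defect density (defects per cm^2), purely illustrative
big = good_dies(600, D0)        # one big monolithic GPU per die
small = good_dies(300, D0) / 2  # two half-size dies make one GPU
print(f"600 mm^2 monolithic:   ~{big:.0f} GPUs per wafer")
print(f"2 x 300 mm^2 chiplets: ~{small:.0f} GPUs per wafer")
```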


I was gonna wait on getting a new GPU, but then I saw an RX 6800 XT for under £600 and yeah, this is my card now.


A goon put it pretty well ages ago in the thread when they said that ray tracing mass adoption is more of a workflow benefit than an improvement in the actual results in games. It's considerably less work on the lighting, since you wouldn't have to 'fake' it so much, for example.


at this point, the most likely gaming usage of raytracing is going to be for a vtuber avatar streaming themselves playing apex legends on minimum settings


Ray traced lighting on dem big tiddes As god intended


kliras posted: at this point, the most likely gaming usage of raytracing is going to be for a vtuber avatar streaming themselves playing apex legends on minimum settings

this is truly the dumbest timeline


Light reflecting off skin tones, so many variables...


Zedsdeadbaby posted: A goon put it pretty well ages ago in the thread when they said that ray tracing mass adoption is more of a workflow benefit than an improvement in the actual results in games. It's considerably less work on the lighting, since you wouldn't have to 'fake' it so much, for example.

even that's not always the case: a lot of games have super stylized art *and* lighting, and getting that to work as well with RT would probably be more work than just plopping some pink lights in your corner. it is absolutely loving amazing for realism tho


every pixar and disney movie is done with pathtracing, and it's by no means only useful for plain photorealism. even enter the spiderverse is pathtraced


disney/pixar did actually use rasterization in the early days (not quite the way games do it, but close enough), and you can easily observe the jump in fidelity when they switched away, despite most of their stuff being highly stylized. frozen 1 was the last disney film to use their old raster renderer, and it looks conspicuously video-gamey compared to big hero 6 just a year later


it's different for gamedev tho, developers will have to offer both solutions until/if hardware gets fast enough for RT-only options


yeah of course, raster isn't going anywhere for a while yet


MarcusSA posted: Ray traced lighting on dem big tiddes

YEAH


repiv posted: frozen 1 was the last disney film to use their old raster renderer and it looks conspicuously video-gamey compared to big hero 6 just a year later

Apparently production scene sizes are getting so large that they won't actually fit into any amount of RAM any more, and they're becoming impractical to just move around on company networks. They're totally disk and network bandwidth limited now, so a lot of current research is focusing on ray re-ordering and clever caching to increase locality of reference. I wonder if some of it will filter down to GPU-sized problems, where keeping local caches full is as important as ray/primitive intersection performance. Incidentally, UE5's Nanite has some REYES-ish qualities to it. They managed to crack the automatic mesh decimation problem in a way that nobody could figure out with REYES, but I think there's a lot of similarity there. I heard from somebody that Weta was experimenting with something like Nanite for their production renderer. Basically, they were running geometry through a preprocessing step every frame to reduce the whole scene down to 1-2 triangles/texels per pixel for visible geometry, and used some super simple heuristics for off-screen geometry. The resulting geometry is absolutely tiny compared to the input scene, so they don't need to worry about disk or network latency; everything would fit in memory. Not sure if it went anywhere, but that's supposedly what they were researching. Something like that might be possible on a GPU too, although I think the API would need to expose some way to update BVH coordinates rather than being the black box it is right now. Exciting times.
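A minimal sketch of what "ray re-ordering" can mean in practice, with a sort key of my own choosing: bucket a batch of rays by direction octant, then by a coarse origin cell, so rays that will probably touch the same part of the scene get traced back to back. Production renderers use more elaborate keys and batching; the key function here is illustrative only, and "key switches" is just a crude proxy for how often the working set changes.

```python
import random


def sort_key(origin, direction, cell_size=1.0):
    """Group rays that are likely to touch the same scene data:
    first by direction octant (3 sign bits), then by a coarse origin cell."""
    octant = sum(1 << axis for axis, d in enumerate(direction) if d < 0)
    cell = tuple(int(c // cell_size) for c in origin)
    return (octant, cell)


def reorder(rays, cell_size=1.0):
    """Sort a batch of (origin, direction) rays by the grouping key."""
    return sorted(rays, key=lambda r: sort_key(r[0], r[1], cell_size))


def key_switches(rays, cell_size=1.0):
    """How often consecutive rays fall in different groups: a rough proxy for
    how often the working set changes while the batch is traced."""
    keys = [sort_key(o, d, cell_size) for o, d in rays]
    return sum(1 for a, b in zip(keys, keys[1:]) if a != b)


rng = random.Random(0)
rays = [
    (tuple(rng.uniform(0, 4) for _ in range(3)), tuple(rng.uniform(-1, 1) for _ in range(3)))
    for _ in range(5000)
]
print("switches, original order:", key_switches(rays))
print("switches, sorted batch:  ", key_switches(reorder(rays)))
```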


epic did a seriously impressive flex by loading up a scene from disney's moana and rendering it in realtime through nanite:

https://www.youtube.com/watch?v=NRnj_lnpORU&t=279s

that's an absurd amount of geometry to deal with


I was going to reply to repiv's original pixar post with: but full pathtracing is fine when you don't have any rendering time constraint other than getting the movie out by next year. Doesn't that also hold when the rendering overhead for increased fidelity means you can't even buy boxes big enough to hold a single scene? Sure, you're swapping off-box, but you have more time than a video game to deal with the mess, surely? Genuinely asking here, not being skeptical. It just seems like such a different problem space to games. Are more traditional parallelization algos like map-reduce useless for pathtracing? For example, the map phase is different bundles of rays, and the reduce is the aggregate effect on the scene? There have to be really good reasons why this isn't done, since it's pretty obvious, but it would be good to know why a movie studio, which has no realtime constraint on rendering a scene, wouldn't pursue those kinds of approaches.
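For what it's worth, the reduce half of that idea is easy: per-pixel sample sums and counts just add, so batches of samples can be traced anywhere and merged in any order. Here's a toy sketch with a made-up "radiance" function standing in for real tracing. The catch it glosses over is that every map worker still needs access to the whole scene, which is the disk/network bottleneck described a couple of posts up.

```python
import random
from functools import reduce

WIDTH = 8  # hypothetical one-row image


def trace_batch(batch_seed, spp):
    """Map step: one worker traces `spp` samples for every pixel.
    Toy radiance = pixel index + uniform noise, so pixel i converges to i + 0.5.
    A real worker would need the entire scene (geometry, textures) here."""
    rng = random.Random(batch_seed)
    sums = [sum(x + rng.random() for _ in range(spp)) for x in range(WIDTH)]
    return sums, spp


def combine(a, b):
    """Reduce step: per-pixel sums and sample counts simply add, so partial
    results can be merged in any order (up to floating-point rounding)."""
    return [x + y for x, y in zip(a[0], b[0])], a[1] + b[1]


def render(num_batches, spp_per_batch):
    """Trace independent batches, merge them, then normalise by sample count."""
    partials = map(lambda seed: trace_batch(seed, spp_per_batch), range(num_batches))
    sums, count = reduce(combine, partials)
    return [s / count for s in sums]


print([round(p, 2) for p in render(num_batches=16, spp_per_batch=32)])
```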
