|

Every time I type out "AI" and the I doesn't have serifs:

|

|

|

|

|

| # ? Apr 28, 2024 09:28 |

|

I've been thinking about the rapid advancement of genAI in the past couple of years, and self-driving vehicles popped into my head as an interesting comparison point. I remember back around 2015 when the self-driving hype was getting started - it seemed like every couple of months there was a new update with new features getting announced. Within a year or so Teslas were able to drive on the freeway mostly by themselves. It seemed like a bunch of people - not just Musk - were expecting fully autonomous level 5 vehicles within the next few years. Then around 2019 progress hit a wall and it's been mostly minor incremental improvements since then. We're barely at level 3 self-driving and level 5 seems decades away. I'm not saying genAI will necessarily follow the same trajectory, but it made me think if it's possible to know where in the development curve we are with it? Are all the recent advancements just the tip of the iceberg, or is it possible we're getting to the point where we hit diminishing returns (whether that's on compute time, development time, or training data)?

|

|

|

|

Seph posted: I'm not saying genAI will necessarily follow the same trajectory, but it made me think if it's possible to know where in the development curve we are with it? Are all the recent advancements just the tip of the iceberg, or is it possible we're getting to the point where we hit diminishing returns (whether that's on compute time, development time, or training data)?

Can't say yet, still too early. GPT5 will be a bellwether. Over the last few weeks I've seen several significant "hard" problems get solved in the AI space, and the improvements are still happening too rapidly to say where things might land.

|

|

|

|

Waymo is level 4, no? And from what I've heard through the grapevine, the main barrier to their expansion at this point isn't the self-driving tech itself, but the economics of the cars, which require expensive nightly maintenance.

|

|

|

|

Seph posted: I'm not saying genAI will necessarily follow the same trajectory, but it made me think if it's possible to know where in the development curve we are with it? Are all the recent advancements just the tip of the iceberg, or is it possible we're getting to the point where we hit diminishing returns (whether that's on compute time, development time, or training data)?

Yeah, it's really hard to say. We know there is at least a decent amount of improvement likely left to make with our current technology. It would be very surprising to me if we found out "oh, wow, we accidentally have hit the optimal way to train transformers, this is about as good as it gets." On top of that, things that look like they might be big improvements keep popping up all the time, and they absolutely haven't been fully explored yet and might be big deals.

There are some approaches that aren't feasible now but will become feasible with even more powerful hardware. What we're doing at the peak of it all right now is basically just pushing the limits of the hardware we have. I always think about what this would all be like if we had the idea and understanding of current AIs but 20+ years ago, when the hardware just wasn't there. Like, if "Attention Is All You Need" was published in '98, would we have sat around waiting, or done something with super small models?

Are we kinda waiting right now? I think so. Like, I think we can take the whole mixture of experts thing a lot further than we have. I don't think it's a coincidence that the models we're using right now require basically the limits of the hardware we have. The big optimizations we've found that allow bigger models to run on weaker hardware don't just benefit people at home running models on their 3090; they also mean we can potentially run even more powerful, hardware-intensive models on the huge, 8 GPU datacenter stuff, too.

This is why I wish we knew more about GPT4. Is it what it is right now because even they don't have the hardware for what it could be? Though at the same time I think most people do believe that with transformers there is a limit. There's probably a size out there where just making the model bigger stops mattering all that much, or where you can't make it better at something with better training data on that thing.

But there was research on alternative methods and improvements 5+ years ago too. Now there's a whole lot of research. Tons of people are working on finding a better way, including people trying new approaches who weren't doing that before, since AI has everyone's attention now. We aren't living in the environment that led to the discovery of transformers now, but something much bigger, so I actually think it's very unlikely we're not gonna find some huge advancements and completely new approaches much faster than we were finding them before. There's so much money being thrown at it now.

If it all has some really hard line to cross where we just can't figure out some big problems even throwing tons of research money at it, I don't see any reason to think we're actually that close to it. Like, so far from it that what is possible with just what we have now won't look all that similar to what we have now. I think diffusion models are even further from what's possible with them. That's just my feeling looking at how we're using them now and the progress we've made. So I think there is a limit, I don't think we're close to it.

Where I think we're still really far behind with just the models and technology we have, though, is implementation. There's a ton of stuff that could be done with what we have, but no one's gone and implemented it yet or even thought of it. We haven't had as much time with the current technology to do it, since this all still really only blew up a short time ago.

We're still mostly treating it like a toy, with some implementations just using it in the most obvious way. Even with transformers, I suspect it's useful for more than just chatbots, natural language search engines, and lovely automated customer support. If hardware gets to the point where it's easy to finetune very large models for whatever specific purpose you have in mind and run them, then we'll find a lot more uses for them, and I don't see hardware just reaching its limit any time soon. I would like to see an extremely large task master get trained, and see how it does at whatever that one thing it does is. If that was cheap I think it'd be tried more instead of these general models that get finetuned on top.

|

|

|

|

BrainDance posted: Like, I think we can take the whole mixture of experts thing a lot further than we have.

My baseless speculation is that the rumored Q* breakthrough at OpenAI is a way to navigate a higher dimensional mixture of experts or something.

|

|

|

|

It's a good point though. People constantly assume "it's only going to get better" based on basically nothing but it's just something we have gotten used to with technology. Lots of technologies hit a plateau far faster than people thought they would, or the advances get priced out of usefulness. GenAI has advanced far quicker than I think most experts predicted, but it could also just as quickly hit a dead end and stall out for years; it did before, until neural networks came along and kick-started it back into high gear. With all the various directions research is going in and the vast amounts of money being poured in, this cycle probably still has legs, but who the hell knows.

Mega Comrade fucked around with this message at 10:12 on Feb 24, 2024 |

|

|

|

Thoom posted: Waymo is level 4, no? And from what I've heard through the grapevine, the main barrier to their expansion at this point isn't the self-driving tech itself, but the economics of the cars, which require expensive nightly maintenance.

Waymo requires pre-mapping with lidar, which limits where the cars can go.

|

|

|

|

Waymo has to very extensively test in each new area to handle whatever edge cases may be specific to a new metro (though at least in theory, they should have to do less of this for each new area). They're clearly fairly conservative if you compare them to the rivals they've had; when both Cruise and Waymo were rolling out in SF, the self driving cars subreddit got way more posts about randomly stuck Cruise cars than Waymos, but that didn't seem to stop Cruise from rapidly announcing new expansion plans. Waymo still has some things they haven't fully tackled yet too, like freeways and (most?) bad weather.

|

|

|

SaTaMaS posted: which requires pre-mapping with lidar

our bourgi-controlled society is sleeping at the wheel on stuff like this

|

|

|

|

|

from now on i will refer to all AI hype and marketing practices as "that willy wonka poo poo"       at least this event got people fuckin talkin about AI driven advertising in the actually correct terms: "how this gonna get used to hose us over"

|

|

|

|

From https://willyschocolateexperience.com/index.html

quote: Any resemblance to any character, fictitious or living, is purely coincidental.

At least this part was true

|

|

|

|

https://twitter.com/bene25_/status/1762631362597519859

|

|

|

|

The press managed to find and interview this poor oompa loompa too. https://twitter.com/davidmackau/status/1762981623115465156 highlights:

https://www.vulture.com/article/glasgow-sad-oompa-loompa-interview.html

quote: The internet loves a fiasco. Whether it be 2017's infamous Fyre Festival, 2014's sad ball pit at Dashcon, or last year's muddy hike for freedom at Burning Man, we love to marvel at events that make big promises but flop spectacularly. It's the online equivalent of slowing down in your car to look at a giant wreck.

|

|

|

|

Good on her and thanks for humanizing her. Hopefully she goes on to bigger and better things!

|

|

|

|

Is it just me, or is this less of an "AI grifting story" and more of a "grifters who happened to use AI" story? Like, maybe it lowered the amount of effort required, but all of the grifting elements could've easily been done before ChatGPT existed. They would've had to do a GIS/Pinterest search for the picture instead of entering an AI prompt.

|

|

|

|

Quixzlizx posted: Is it just me, or is this less of an "AI grifting story" and more of a "grifters who happened to use AI" story? Like, maybe it lowered the amount of effort required, but all of the grifting elements could've easily been done before ChatGPT existed. They would've had to do a GIS/Pinterest search for the picture instead of entering an AI prompt.

That said, the generative AI stuff will make it easier and cheaper. You could GIS, but you'd need to find a bunch of images that show what you want to grift, are consistent, and are not recognizably an existing thing or reverse-searchable. One could also subtly or not so subtly enhance images of the real location/product so when people do show up, it's vaguely similar to what they expected, just a bit (much) shittier. Same with the scripts: you could write that stuff yourself or steal it somewhere of course, but you could more easily generate the specific scripts you need by asking ChatGPT.

|

|

|

|

mobby_6kl posted: No that's right. To be honest you don't necessarily need to pass those hurdles, local social media had identified this as a grift in the weeks leading up to it and even had the name of the organiser (who has previous form for it), but it still sold tickets. If they'd used pictures from the film and not even bothered with a script I doubt it would have made much of a difference. I imagine that for the vast, vast majority of customers what convinced them the event was legit was that one of our local event ticketing websites was selling tickets. What's On Glasgow don't really do any validation of the events they list, but the people who bought these tickets probably assumed the opposite. By the time they ended up on the website it was probably after seeing the listing on WhatsOn, and so they were primed to assume it was real. And I imagine a lot of them never even cared to look up the event's website.

|

|

|

|

Quixzlizx posted:Is it just me, or is this less of an "AI grifting story" and more of a "grifters who happened to use AI" story? Like, maybe it lowered the amount of effort required, but all of the grifting elements could've easily been done before ChatGPT existed. They would've had to do a GIS/Pinterest search for the picture instead of entering an AI prompt.

|

|

|

|

cat botherer posted: Yeah, all the images and everything were obviously not photos to anyone with half a brain. I don't think anyone showed up expecting it to look like that - they just naturally expected it wouldn't be so out-of-this-world grim and half-assed. Except... now they are and want it reopened https://www.scotsman.com/news/peopl...outrage-4539528

For a bit more content, here are some pictures of the actress playing the Oompa Loompa and trying to make the best of the situation. https://imgur.com/gallery/i6FQOaK It has to be absolutely awful to have the entire internet crash down on top of you all at once like that. Not one bit of this was any of the actors' fault so I'm glad to see people pushing back.

More content: The actual 15 page script that was supposed to be read by the actors. https://i.dailymail.co.uk/i/pix/2024/02/27/willys_chocolate_experience.pdf I've read this and some of this truly is... magical. I give it even odds that someone reworks this and it ends up in an Off-Broadway production. https://www.themarysue.com/karen-gillan-scottish-willy-wonka-experience/ Better than even odds that it ends up on Broadway.

|

|

|

|

I enjoyed this line about the Jelly Beans That Make You Horny:

quote: Willy McDuff: And let's not forget our secret inventions - the soup-flavored jelly

|

|

|

|

Kagrenak posted: My understanding is the training dataset for GitHub copilot is made up entirely of permissively licensed source code. The lawsuits won't affect that product in a direct way and I highly doubt MS is going to go bankrupt over them.

This is definitely untrue. The very first thing that went viral with copilot was using it to generate the infamous "fast inverse square root" function from Quake III, which is open source nowadays but is licensed under the very restrictive GPL2 license. A business that included this function in their product could easily be sued for copyright violation unless they complied with the GPL2 terms, including things like a requirement to open source their own code and allowing others to redistribute it for free. https://twitter.com/StefanKarpinski/status/1410971061181681674

Note that it won't generate this any more, but only because this particular code snippet was so famous that Microsoft explicitly blacklisted it. There's still the potential for it to regurgitate other, less-recognizable code from its dataset that's also copyrighted under restrictive licenses.

RPATDO_LAMD fucked around with this message at 06:58 on Mar 4, 2024 |
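For anyone who hasn't seen the trick being discussed, here is a minimal Python sketch of the fast inverse square root technique. It is written from the widely documented algorithm rather than copied from the Quake III source, and the function name `fast_inv_sqrt` is just an illustrative choice. It assumes a positive input that fits in a 32-bit float.

```python
import struct


def fast_inv_sqrt(x: float) -> float:
    """Approximate 1/sqrt(x) for positive x using the 32-bit bit-hack."""
    # Reinterpret the 32-bit float's bits as an unsigned integer.
    i = struct.unpack("<I", struct.pack("<f", x))[0]
    # The well-known magic constant minus half the bit pattern gives a
    # rough first guess at the bits of 1/sqrt(x).
    i = 0x5F3759DF - (i >> 1)
    # Reinterpret the integer bits as a float again.
    y = struct.unpack("<f", struct.pack("<I", i))[0]
    # One Newton-Raphson step to tighten the estimate.
    return y * (1.5 - 0.5 * x * y * y)


if __name__ == "__main__":
    # Compare against the exact value for a few sample inputs.
    for value in (0.25, 1.0, 4.0, 100.0):
        print(value, fast_inv_sqrt(value), value ** -0.5)
```

The result is only an approximation (good to a fraction of a percent after the single refinement step), which is part of why the original snippet is so recognizable when a model reproduces it verbatim.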

|

|

|

Well I guess AI should be waaay more effective at rooting out or identifying IP infringement than current methods.

|

|

|

|

RPATDO_LAMD posted: This is definitely untrue, the very first thing that went viral with copilot was using it to generate the infamous "fast inverse square root" function from Quake III, which is open source nowadays but is licensed under the very restrictive GPL2 license.

lol ontology Copilot just like look man, someone gave me proper justification and everything, I see nothing wrong here.

|

|

|

|

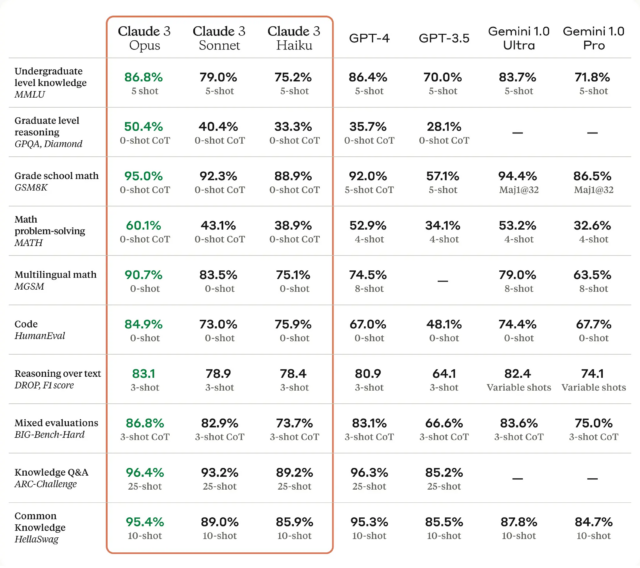

I finally had a reason to try ChatGPT for code. Told it to replace a function that printed sensor values over serial with one that saves it in a database using the SimplePgSQL library and it came up with this:

C++ code:

The problem is that this isn't how the library works, like at all. Looking at the example, it's supposed to be configured like this:

C++ code:

E: Claude 3 is out, claims to be better than everything in every way, several times by exactly 0.1% https://arstechnica.com/information-technology/2024/03/the-ai-wars-heat-up-with-claude-3-claimed-to-have-near-human-abilities/ Whether or not that's really true is another matter of course.

mobby_6kl fucked around with this message at 22:38 on Mar 4, 2024 |

|

|

|

Mega Comrade posted: It's a good point though. People constantly assume "it's only going to get better" based on basically nothing but it's just something we have gotten used to with technology.

The real stall is that there isn't an infinite corpus to feed the models, and they've already pulled the low-hanging fruit of an internet scrape and started getting legal action in response. I've been quite happy to see more ways start being proposed to salt images to make them actively harmful to generative AI if included in datasets as well. I am all for grifters wasting huge amounts of time and money only to learn that they should have just hired someone who went to art school for $40 an hour to do work for hire.

Edit: Same as I will never stop laughing when obviously bad code gets implemented because 'ChatGPT says it works' and they have to hire an actual software engineer at consulting rates to unfuck themselves.

Liquid Communism fucked around with this message at 05:56 on Mar 5, 2024 |

|

|

|

Liquid Communism posted: The real stall is that there isn't an infinite corpus to feed the models, and they've already pulled the low-hanging fruit of an internet scrape and started getting legal action in response. I've been quite happy to see more ways start being proposed to salt images to make them actively harmful to generative AI if included in datasets as well.

Don't forget companies like Air Canada getting burned after they replace their customer service folks with AI - which decides to make up policy on the spot, because it wasn't trained properly.

|

|

|

gettin burnt' by artificial intelligence

|

|

|

|

|

Once AI begins scraping AI the hallucinations will improve I'm sure

|

|

|

|

smoobles posted: Once AI begins scraping AI the hallucinations will improve I'm sure

I don't count on that. Somebody will create algorithms to achieve "feedback suppression". We will have to wait a few more years to see the type of damage language models and AI art will have on people.

|

|

|

|

Tei posted: I don't count on that. Somebody will create algorithms to achieve "feedback suppression".

the humans in the matrix weren't there as a power source, their input was the only means by which ai could harvest original nonhallucinatory data

|

|

|

|

Staluigi posted:the humans in the matrix weren't there as a power source, their input was the only means by which ai could harvest original nonhallucinatory data

|

|

|

|

Staluigi posted:the humans in the matrix weren't there as a power source, their input was the only means by which ai could harvest original nonhallucinatory data

|

|

|

|

cat botherer posted: The Wachowskis' original script actually had the humans being used for computation, but the studio thought that was too complicated for viewers to understand, so they changed it to the dumbshit power plant thing that makes no sense.

If that were the case, it could have been a message about the world being what people want it to be instead of what they blindly accept. I dunno, maybe I'm just disillusioned with the sequels and would have preferred almost anything else, lol.

|

|

|

|

JazzFlight posted: Geez, that just makes me think of a cooler way the series could have gone if the robots were using human brains for actually sustaining the simulation (like, Neo's superpowers were an example of a single brain changing the simulation itself). So Neo threatening to wake everyone up to reality would be different to just the physical act of yanking their body out of the pod.

Everything you've said, to me, seems to blend perfectly into what the rest of the movie did anyways, just that it would've made a lot more sense with the computational bit.

|

|

|

|

cat botherer posted: The Wachowskis' original script actually had the humans being used for computation, but the studio thought that was too complicated for viewers to understand, so they changed it to the dumbshit power plant thing that makes no sense.

...and then, because the power source bit is *literally* bullshit from a scientific standpoint, people assumed that the Wachowskis put it in as a secret signal to the Really Smart People or something.

|

|

|

|

mobby_6kl posted: I finally had a reason to try ChatGPT for code. Told it to replace a function that printed sensor values over serial with one that saves it in a database using the SimplePgSQL library and it came up with this:

So, the thing is that the further you get off the beaten path, like you surmised already, the more likely it is to have incomplete information or to get 'confused' with a similar library. I tried the same thing with the library and got similarly poor (albeit different) results. However, when I put in the .h or the sample code from the library (I tried both), I got what appear to be very good results. When dealing with relatively niche cases, like libraries for comparatively lesser-known stuff like ESP SQL libraries, you can dump in some sample stuff that you know works or should work and it can put it together from context.

Interestingly enough, Claude and GPT4 did know what the library was but not exactly how to use it. Claude is a very good tool for this because its context window is so much larger; even the free Sonnet version is very handy for this.

What's interesting as well is that when I have bizarre issues that befuddle me, I can give it code, tell it what I'm experiencing, and it can usually tell me what the problem is. Especially if it's a programmatic issue, it'll know and tell me 99% of the time. It's an incredibly powerful tool.
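The "paste in the header or sample code" approach described above can be sketched roughly like this. It is only an illustration of assembling the prompt text: `build_prompt`, the file label, and the placeholder header contents are all hypothetical, and nothing here calls a real chat API or reproduces the actual SimplePgSQL interface.

```python
from typing import Mapping


def build_prompt(task: str, reference_files: Mapping[str, str]) -> str:
    """Put known-good library material in front of the actual request."""
    parts = ["Use only the API shown in the reference material below."]
    for name, text in reference_files.items():
        # Label each pasted file so the model can tell them apart.
        parts.append(f"--- {name} ---\n{text.strip()}")
    parts.append(f"Task: {task}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Placeholder text; in practice you would paste the real header
    # or the library's own example sketch here.
    references = {
        "library_header.h": "// contents of the library header go here",
    }
    print(build_prompt(
        "Replace the serial print function with one that saves the "
        "sensor values to the database.",
        references,
    ))
```

The design choice is just ordering: the reference material goes first and the request last, so the model has the real function names in context before it starts writing code.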

|

|

|

|

Shooting Blanks posted: Don't forget companies like Air Canada getting burned after they replace their customer service folks with AI - which decides to make up policy on the spot, because it wasn't trained properly.

Heh, I've had human customer service folks do that too...

|

|

|

|

Burnt by Artificial Intelligence

|

|

|

|

|

|


|

AI software engineer dropping soon https://www.pcmag.com/news/this-software-engineer-ai-can-train-other-ais-code-websites-by-itself

quote: In one video, Cognition Labs CEO Scott Wu shows how Devin users are able to view the AI tool's command line, code editor, and workflow as it moves through various steps to complete coding projects and data research tasks. Once Devin receives a request, it's able to scour the internet for educational materials to learn how to complete tasks and can debug its own issues encountered during the engineering process. Users are able to intervene if desired, however.

|

|

|