|

Sagebrush posted:chatgpt has an even better one lol wait what does it mean "was"

|

|

|

|

|

| # ? May 29, 2024 00:13 |

|

forum's getting closed at midnight, sorry you had to find out like this

|

|

|

|

i kinda do wish that was what it stood for tho

|

|

|

|

|

|

|

|

the future is going to be worthless

|

|

|

|

|

|

|

|

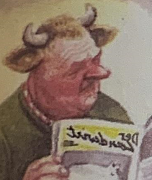

lol What was going on while René Descartes was getting his portrait painted..? the next NIKE AIR ad right fucken there.

|

|

|

|

NoneMoreNegative posted: lol What was going on while René Descartes was getting his portrait painted..?

holy poo poo, lol'd out loud irl, fuckin lmao

|

|

|

|

NoneMoreNegative posted: lol What was going on while René Descartes was getting his portrait painted..?

hahahahahahahaha

|

|

|

|

i squat therefore i am

|

|

|

|

NoneMoreNegative posted: lol What was going on while René Descartes was getting his portrait painted..?

lmfao

|

|

|

|

post hole digger posted: i squat therefore i am

lol gently caress you i was coming to make this exact line haha

|

|

|

|

cartesian? cartin' dees huge nuts around

|

|

|

|

sam altman: "please, somebody make laws about ai. my product is just so incredibly amazing and powerful, only superior regulation can save us from my excellent, superlative product."

|

|

|

|

"We just let anybody buy it! its crazy! we're just so nuts to let everyone buy our incredible world changing ai! SoMeBoDy StOp Us!!"

|

|

|

|

I like when folks use Midjourney or SD for a single aesthetic project, you can see where the strengths and weaknesses of the various current image-gens lie when there's a bunch of stuff fitting the same vibe. eg: https://www.instagram.com/toriyamastreet/

None of the image tools can do realistic jewelry yet, it always has that 'overly greebled' look to it. The skin detail and fur on a few of these is probably the best I have seen so far.

Also; Man has been careless with a lathe or saw or smth.

|

|

|

|

i’ve said this before and i’ll say it again

|

|

|

|

give him some room folks here he goes

|

|

|

|

i like this even though the visuals are obviously ai generated. nsfw at times https://www.youtube.com/watch?v=meIfqVtArMo

|

|

|

|

Sagebrush posted: i have been screwing around with some ai upscaling tools and i gotta say -- as long as you're okay with the results being hallucinated rather than literally true, they are getting shockingly good.

SB I just saw this and thought of your upscaling project

Jacob Jansma, Dutch safety poster, 1940
Do not grease or polish while machine is running

edit: while I'm here, this is surprisingly 'stable' for a sequence of AI pics in a video, very little of the popping in and out of details as the subject moves in the frame I would expect https://twitter.com/kagra_ai/status/1666005320819412993

Anime up there with Porno for the vanguard uses of new technologies.

NoneMoreNegative fucked around with this message at 23:22 on Jun 15, 2023

|

|

|

i wish you could customize pixelmator photo's upscaler. it did a decent enough job but i feel like it'd be better if it went megahuge with it and then downscaled

(apologies for using imgur to those outside of the US but idk how to get postimages to embed full res)

e: hmm. tried it on my own and it looks basically the same

gotta specify huge before it scales i guess

Beeftweeter fucked around with this message at 23:25 on Jun 15, 2023

|

|

|

saw some nerd who really should know better arguing that we'll soon reach singularity because we'll train the neural networks to make better neural networks and so on. genius, why hasn't anyone thought of that yet??

|

|

|

|

making better cars by making cars make cars

|

|

|

|

Reading something unrelated:

quote: And it's not just text. If you train a music model on Mozart, you can expect output that's a bit like Mozart but without the sparkle – let's call it 'Salieri'. And if Salieri now trains the next generation, and so on, what will the fifth or sixth generation sound like?

oh shiiit.

|

|

|

More here

|

|

|

|

|

That's cheaper than a lot of TOTO and TOTO knock off toilets.

|

|

|

|

https://twitter.com/protosphinx/status/1670231845630345216

https://flower-nutria-41d.notion.site/No-GPT4-can-t-ace-MIT-b27e6796ab5a48368127a98216c76864

Apparently the recent paper about GPT-4 getting 100% solve rate on an out of sample set of MIT EECS questions was faked. From the replication study:

quote: We’ve run preliminary replication experiments for all zero-shot testing here — we’ve reviewed about 33% of the pure-zero-shot data set. Look at the histogram page in the Google Sheet to see the latest results, but with a subset of 96 Qs (so far graded), the results are ~32% incorrect, ~58% correct, and the rest invalid or mostly correct.

To be honest 58% still sounds pretty good and this result fits in with the anecdotal themes people are talking about, i.e. how current generation LLMs are close to replacing lower knowledge workers. It's a shame that AI researchers feel the need to embellish an already amazing result and lose their credibility like this.

|

|

|

|

mondomole posted: i.e. how current generation LLMs are close to replacing lower knowledge workers. It's a shame that AI researchers feel the need to embellish an already amazing result and lose their credibility like this.

they are not. that is literally not a thing an LLM is capable of doing

like, even ignoring the "we faked the test completely to make a headline", large language models do not have any type of cognitive skill whatsoever.

e: unless your definition of lower knowledge worker is someone writing content mill articles with no interest in veracity or accuracy, then yeah, fair enough

infernal machines fucked around with this message at 02:55 on Jun 20, 2023

|

|

|

infernal machines posted: they are not. that is literally not a thing an LLM is capable of doing

Yeah, I mean the tasks roughly at the level of what you might have sent to Amazon Mechanical Turk a few years ago. Stuff like:

1. Enter this data from (difficult to parse source) into this spreadsheet
2. Read this article and tell me if the article is good or bad for company X
3. Transcribe all of the words spoken in this video fragment

|

|

|

|

fair, but even then i'd bet on 2 and 3 being wrong or partially wrong more often than right

2 requires analysis, which requires contextual knowledge

3 is considerably more difficult than people seem to think with anything but carefully prepared audio clips, or perfect diction in ideal recording environments

as for 1, i mean, regex exists, so maybe LLMs can be a very computationally expensive regex replacement, but i still wouldn't actually trust the output to be accurate

infernal machines fucked around with this message at 03:11 on Jun 20, 2023

|

|

|

idk speech to text is pretty good these days. i don't think you necessarily need a LLM but it might be decent at predicting unintelligible words

of course that's no guarantee of literally any degree of accuracy, but if it's unintelligible to a human in general anyway it can't really be worse than nothing

|

|

|

|

infernal machines posted: fair, even then i'd bet on 2 and 3 being wrong or partially wrong more often than right

For (2) at least (can't speak directly to 1 or 3) GPT-4 seems to do reasonably well in the sense that I've spot checked its predictions against a 5+ year old model that I'm currently using and found that they agree. The articles I'm looking at are very narrowly scoped to a certain structure in a specific domain so I don't think it's too much of a stretch to believe that a machine can do a reasonable job. Imagine language like "we find a statistically significant effect" or "the effect size is negligible." You could probably get to reasonable accuracy on keyword matches alone. GPT-4 feels like it can replace that manual process of curating the keywords, although I agree it may not be able to do significantly better than that.
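A rough sketch of what "keyword matches alone" could look like, for anyone following along. The two phrases are the ones quoted in the post; the pattern lists, the function name `keyword_signal`, and the +1/-1/0 scoring are assumptions made up for illustration, not anything the poster described using.

```python
import re

# Hypothetical, hand-curated phrase lists. Only the two phrases below come
# from the post; a real list would be much longer and domain specific.
EFFECT_PATTERNS = [r"statistically significant effect"]
NO_EFFECT_PATTERNS = [r"effect size is negligible"]


def keyword_signal(text: str) -> int:
    """Return +1 if 'effect' phrases dominate, -1 if 'no effect' phrases
    dominate, and 0 if neither list matches or the counts tie."""
    lowered = text.lower()
    hits_effect = sum(len(re.findall(p, lowered)) for p in EFFECT_PATTERNS)
    hits_none = sum(len(re.findall(p, lowered)) for p in NO_EFFECT_PATTERNS)
    if hits_effect > hits_none:
        return 1
    if hits_none > hits_effect:
        return -1
    return 0
```

The manual part is curating the two lists, which is the step the post suggests GPT-4 might take over.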

|

|

|

|

that sounds incredibly specific, so i'm curious, but i understand if you can't say more without doxing yourself.

|

|

|

|

infernal machines posted: that sounds incredibly specific, so i'm curious, but i understand if you can't say more without doxing yourself.

About a decade ago, a bunch of data vendors popped up to help trading firms understand whether incoming news was "good" or "bad." Their algorithms weren't great but they were definitely faster than a human and good enough to make some money. In the years since, there's been two diverging paths: there's a speed dimension to this where making the same meh decision faster than others can lead to a profitable trade, and then there's an information dimension where throwing in more data to look at can lead to a more accurate decision. GPT-4 is interesting here mainly for the second problem but people are also thinking about ways to have GPT-4 come up with lists of tokens to scan for to fit the first use case. It's pretty openly discussed these days so I'm definitely not alone in exploring this stuff.

|

|

|

|

sounds a lot like digital haruspicy to me, but i can see GPT based models competing favourably with existing models if only because the accuracy probably isn't particularly high to begin with

|

|

|

|

every time i see the word "haruspicy" i read it like richard nixon eating peppers "haru! spicy."

|

|

|

|

infernal machines posted: sounds a lot like digital haruspicy to me, but i can see GPT based models competing favourably with existing models if only because the accuracy probably isn't particularly high to begin with

Yeah I think that's fair. GPT is also significantly better at labeling entities, so even if the accuracy itself isn't that different, you get to see a lot more datapoints. For example, before if you saw a headline like "FDA bans Comirnaty from the US market on safety concerns," unless somebody had manually tagged Comirnaty as Pfizer's drug, which is pretty time-intensive to cover all the entities you would want to listen to, you would ignore the headline. With chat GPT-4, you get this in parseable form
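For contrast, the manual-tagging workflow described in that post amounts to something like the sketch below: a hand-maintained product-to-company table, with any headline that misses the table getting dropped. The table contents beyond the Comirnaty example, the name `company_for_headline`, and the simple whitespace tokenizing are all assumptions for illustration.

```python
from typing import Optional

# Hypothetical hand-maintained table mapping product names to companies.
# Keeping this complete is the time-intensive part the post mentions.
MANUAL_TAGS = {"comirnaty": "Pfizer"}


def company_for_headline(headline: str, tags: dict = MANUAL_TAGS) -> Optional[str]:
    """Return the company for the first tagged product in the headline,
    or None if no word matches the table (the headline gets ignored)."""
    for word in headline.lower().split():
        token = word.strip(".,;:\"'")  # drop surrounding punctuation
        if token in tags:
            return tags[token]
    return None
```

Anything not already in the table returns `None`, which is the coverage gap the post says GPT-based entity labeling narrows.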

|

|

|

|

that's useful. any accuracy errors there in terms of brand/manufacturer matching?

|

|

|

|

infernal machines posted: that's useful. any accuracy errors there in terms of brand/manufacturer matching?

In this particular case, spot on. I can definitely come up with bad labels; the question is how much worse are they than existing ones and doing it by hand.

One key challenge is that you can probably extract the most value from recent entities that aren't followed by anybody, but these also won't be in the GPT-4 dataset. In principle this is solvable since you can summarize the last N years of "important product developments" using quarterly filings by feeding in those filings and asking GPT-4 to label the entities before moving on to the sentiment prompts. But right now it's not practical to do this for all companies and then also give all company context before every prompt. This gets into the realm of needing to train your own models, and right now it's not cost effective to do that for what we would gain, which is some super noisy estimate of "good" or "bad."

If Moore's law manages to kick in here, I can see how in a few generations of GPT we might be able to automate a lot of this kind of work.

|

|

|

|

|


|

what happens if you ask it to explain why it rated that a -7? why not, idk, -3? any consistency to the ratings?

|

|

|