If going past an 11' diagonal means you lose accuracy in the centre, wouldn't that mean that even below an 11' diagonal you'd still lose accuracy in the corners without cameras, because that's again more than 6 feet from either camera? By that logic the largest square you could get with solid tracking everywhere would be 6'x6'.
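For what it's worth, the geometry is easy to sanity-check. A minimal sketch, assuming two cameras in opposite corners of a square play area and a 6' reliable range per camera; the corner layout, the function name and the grid sampling are my own assumptions, not anything from the test write-up:

```python
import math

def worst_case_distance(side_ft, steps=100):
    """Largest distance (in feet) from any sampled floor point to its nearer camera.

    Assumes a square play area with cameras in two opposite corners.
    """
    cameras = [(0.0, 0.0), (side_ft, side_ft)]
    worst = 0.0
    for i in range(steps + 1):
        for j in range(steps + 1):
            x = side_ft * i / steps
            y = side_ft * j / steps
            # Distance to whichever camera is closer to this point
            nearest = min(math.hypot(x - cx, y - cy) for cx, cy in cameras)
            worst = max(worst, nearest)
    return worst

# An 11' diagonal corresponds to a side of 11 / sqrt(2) feet
side_for_11ft_diagonal = 11 / math.sqrt(2)
print(worst_case_distance(side_for_11ft_diagonal))
print(worst_case_distance(6.0))
```

The worst case lands on the two camera-less corners, which sit one full side length from both cameras, so the side has to stay at or under the reliable range.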

| # ¿ May 12, 2024 18:04 |

They're not really clear on how they handled height in the two-camera tests; hopefully they tested at ground level so the actual distances are really more than 6', but if not then with head-height cameras you're going to get position inaccuracy at ground level in *any* size of tracking area. Of course hopefully as they say the position swim won't be noticeable in normal use; we'll have to wait and see until there are more users.
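To put a rough number on the height point, here is a small sketch of the straight-line distance from a raised camera to a tracked point. The 6' mounting height is purely a hypothetical value for illustration, and `slant_distance` is just a name I made up:

```python
import math

def slant_distance(horizontal_ft, camera_height_ft, target_height_ft=0.0):
    """Straight-line distance from a camera to a tracked point.

    horizontal_ft is the distance along the floor; heights are above the floor.
    """
    return math.hypot(horizontal_ft, camera_height_ft - target_height_ft)

# Hypothetical 6' mounting height: floor point directly under the camera
print(slant_distance(0.0, 6.0))
# Same camera, floor point 4' away horizontally
print(slant_distance(4.0, 6.0))
```

With a camera mounted that high, a floor-level point directly beneath it is already at the full mounting height away, regardless of how small the play area is.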

s.i.r.e. posted:The only thing I can think of off the top of my head is Monument Valley... But yeah, not many games exist where there isn't a time limit or health bar that will send you back if it depletes.

TorakFade posted:I am very intrigued by the concept, but seeing that the prices are so steep it's not really an impulse buy; first of all, is it worth the admission price? Computing power is no issue, if my rig can't run it it's time for a new rig
If your luxuries budget can stretch to it then you're unlikely to regret it; a lot of people bought headsets purely for Elite and driving games and they're very happy with the results. That said, there's little doubt that a year from now you'll be able to get a headset that's twice as good, and a year after that you'll be able to get one that's twice as good again and half the price. Early adoption is a pretty poor deal here.
I'd echo the advice others have given: get a demo somewhere. If you're in a major US city there should be a lot of options; if not, a bit of googling should find you a local enthusiast group that'll have someone willing to do demos. Nothing replaces trying it yourself, both for the good aspects and the "still first generation" aspects.

AndrewP posted:Anyone have any tips about the supersampling in Elite? I turned it to 1.5 in the game menu and it really seemed to help a lot with the aliasing, but a lot of places are saying that using the Oculus Debug Tool to do it is better. Anyone with any insight into this?
Use whichever looks better to you. They do slightly different things; I believe the in-game setting removes slightly more aliasing but blurs things more.

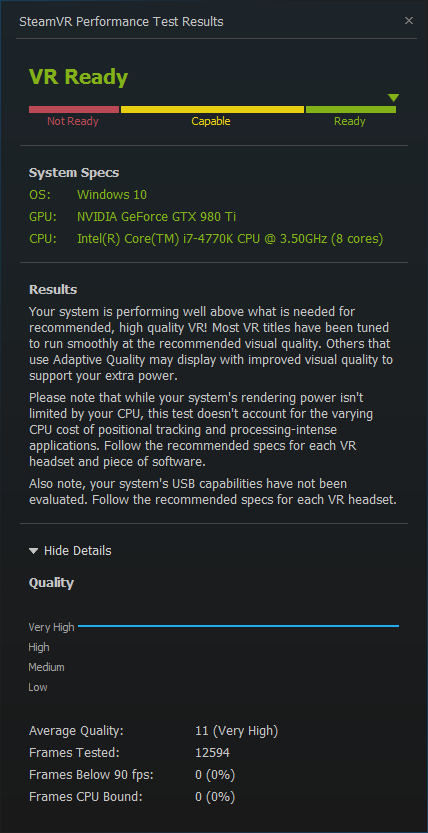

Truga posted:That is odd, I'm getting 11 on an older pc:
Better CPU, so fewer CPU-bound frames.
e: If anyone's still on the fence about Elite, the standalone deathmatch mode is free (to keep) this weekend: store link.
NRVNQSR fucked around with this message at 07:53 on Jul 8, 2016

El Grillo posted:Anyone got ideas about what might be the minimum GPU that might be able to run a Rift, not to play games but just for movies/virtual desktop? I kind of hoped the Ion netbook I have would do fine, but even once I'd got past the (currently arbitrary) SSE 4.2 CPU check, I can't proceed through the full setup because it needs a more recent NVidia gpu driver. Although I haven't tried it with SteamVR - I take it that doesn't work without all the Oculus stuff installed?
The Rift only works with the very latest AMD and Nvidia drivers because those drivers had code specifically added to support it, so only cards supported by those drivers are going to work. It also needs a direct HDMI output from the AMD or Nvidia card, so Optimus laptops won't work. I've seen claims that the minimum spec the installer will allow is a GTX 650/AMD 7750, but those are unsubstantiated so don't take them as gospel. SteamVR won't help, as it uses the Rift drivers under the hood.

Cojawfee posted:I love most of their quicklooks because they don't know what's going on and it's hilarious.
Their quicklook format is usually "one person has played the game for a couple of days and has to explain it to the others", which I think works pretty well. For the VR ones they generally don't bother with the person who knows what's going on, which seems fair because most of the current titles aren't worth that much effort.

SlayVus posted:Couldn't most VR improvements come from using Valve's scaling rendering engine in Unity? Is this something that would be hard to implement for games that have already been released?
If a game uses Unity's built-in renderer it would be a moderate amount of work. If it uses a custom renderer it would be a large amount of work. Note that Valve's dynamic scaling technique, while effective, can significantly reduce picture quality.
Instanced stereo and fixed foveated rendering are mostly lossless, and they're the things people usually talk about as the new Nvidia tech (even though you can do them almost as well on other hardware). There are no current Unity or Unreal renderers that do these, so it would be a large amount of work for any game.
The ideal would be for games to use all three techniques, since they're all compatible with each other and they're all possible on any hardware.

The Unholy Ghost posted:E: I'm bad at jokes
If you thought even for a moment that porn wasn't legit 90% of the use of this cutting edge technology then you're as naive as the CEO of Oculus.

Zero VGS posted:I feel ridiculous for having to ask this, but will NVidia VR Funhouse run on an AMD card? Will it even "run" in an intentionally gimped fashion?
I would assume not; it sounds like even the lowest settings are set up to use Nvidia's proprietary features.

Cojawfee posted:It also only works with the Vive. Nvidia is fracturing the community.
Does it actually somehow only work with the Vive, or have they just not put the Rift logo on the page because Touch isn't out yet?

Kazy posted:It has a headset check, and loads the Oculus SDK if you have that. Which doesn't have Touch implemented.
Welp. Score another one for incompetence over malice, I guess.

Cojawfee posted:If their features truly are just on Pascal, the 1060 probably really is the minimum.
They say the 980Ti is supported, though; isn't that Maxwell?
e: To clarify, there are Maxwell-and-later features that I'm sure it uses.

mobby_6kl posted:Does the Funhouse use their own Simultaneous Multi-Projection stuff? You'd think that with it they could seriously cut down on hardware requirements.
Nvidia's minor improvements to simultaneous multi-projection are only available on Maxwell and later cards (i.e. 900-series, roughly), so it's not going to reduce the requirements below that. Of course Nvidia are naturally going to quote inflated GPU requirements for their own software on top of that; that's just common sense.

Abu Dave posted:Just had my first experience with big boy VR, the PlayStation VR. It was super impressive but I didn't like the large amounts of black space around the peripheral vision; it felt like I was looking out a porthole, which is what I didn't like about Google Cardboard.
Mostly? The field of view varies slightly between them, with the Vive being largest, but it's only a matter of a few degrees. It'll be second generation at least before there are headsets that fill the peripheral vision.

Helter Skelter posted:Rez does sound awesome but being an early adopter of console VR seems like an even stupider idea than being an early adopter of PC VR.
Not to turn this thread into a console versus PC war - there'll be more than enough time for that in September - but there are some potential advantages of console VR over PC VR for the early adopter; it's not entirely biased in PC's favor.
The environment will be more curated, so you'll be more confident that you're getting the same framerate the developers were and not being sick every time your antivirus wakes up. They've got broader reach and more established manufacturing lines, so you would expect wider adoption of console VR to happen much faster. And of course it's currently significantly cheaper.
What it'll lose to PC on is graphical fidelity, number and breadth of titles, and potentially framerate in over-ambitious titles like RE7. So like everything it's a trade-off.

Surprise Giraffe posted:I still really want both headsets so I can try them out consecutively and hash out the pros and cons of the displays. I wonder how much of a difference the relative brightness of the Vive makes, for example; kind of wonder if that's not more of an advantage than you might imagine
It's pretty much undetectable. Since it's the only source of light available to you, your eyes just adjust to the screen's brightness, whatever it is. If the headset screens had significantly different contrast ratios then that would be another matter, but I've not seen anything to suggest that they do.

Sky Shadowing posted:Heads up, apparently that overlay does NOT work with the Rift in some games, most prominently Elite. It uses SteamVR and those games forcibly use Oculus's rendering solution, which prevents it from working.
In principle it should be possible to get it working by hiding the Rift DLLs from Elite but not from SteamVR; that'd require a bunch of messy copying and renaming of DLLs each time you run it, though.
Stormangel posted:I approve of watching Macross Delta while playing Elite.
It'd be possible for someone to implement it in the Rift software using the same approach ReVive takes; it's just substantially more work. Valve provide an official mechanism for putting overlay windows in the VR scene, whereas in Oculus it would have to be done by hand.

The Gay Bean posted:Does anybody see a future for OSVR that would warrant spending any effort in that direction?
Unfortunately no, I still don't. Currently I'd place OSVR support about level with Linux support; if your target market overlaps with that kind of enthusiast, you already have the hardware, and it's trivial to add support - i.e. you're using Unity or Unreal and the plugins work first time - then it might be worth doing. Even then, though, you've got to remember that you're adding a big post-release support burden for your title, as OSVR - like Linux - introduces a huge number of weird cobbled-together hardware and software setups that people will expect you to provide support for.
The thing is, even though it has a price advantage and its features are approaching parity, the install base of the HDK is still vanishingly small compared to the Vive or the Rift. Unless Razer can turn that around there's always going to be a chicken-and-egg problem that it can't overcome, as no-one buys it because no-one develops for it because no-one buys it.
The one thing that might sway you: If you're currently sitting at zero marketing, Razer are naturally desperate for titles to show off OSVR and the HDK, so getting featured by them is going to be easier than getting featured by Oculus or Valve. Of course, given how few people are paying attention to them you might question how much that featuring is actually worth.

homeless snail posted:The question was about APIs rather than the hardware, though.
For the purpose of development priorities they're effectively the same thing. Install base of the runtime is what matters, and 98% of users are going to install what comes with their hardware - and maybe SteamVR if they have Steam - and nothing else. The number of people who have the OSVR runtime installed with the Vive or Rift probably doesn't even reach four figures, and I'm including the engineers at Razer.
homeless snail posted:The OSVR API has totally different design goals than OpenVR, and I think it's fairly successful at what it tries to do. OpenVR abstracts everything at a fairly high level so applications have a hardware independent interface for handling tracked objects and hand controllers; it makes a lot of decisions on behalf of the hardware about how it should be presented, in the interest of orthogonality. OSVR goes the other way and makes very few judgements about how hardware is presented; it's just a very regular and cross-platform interface for enumerating and accessing hardware without going through a dozen different vendor APIs.
If you're purely talking about the API from a technical point of view I'd agree with all of this. It's a lower-level API that makes far fewer assumptions about what's going on. You could say that OSVR is to OpenVR as Raw Input is to XInput: If you want to work with weird and esoteric flight sticks you use Raw Input to access all the different things they can do; if you just want your game to be playable with a gamepad it's a lot easier to use XInput.
homeless snail posted:If you're making a game OpenVR is definitely the way to go, but if you're doing something weird or experimental in VR that falls outside of OpenVR's purview you might actually be better served by OSVR.
I'd say the benefit specifically only comes if you want to support unusual hardware that does things the lowest common denominator doesn't - if you're developing for an HDR device, or a treadmill, or whatever. If the things you want to do only use features that exist on the Vive then implementing OpenVR is always going to be easier.

Truga posted:Looks like someone finally got the loving memo: http://store.steampowered.com/app/372650/
Envelop have been doing private demos for over a year now. Their marketing is good, but the burden's still on them to demonstrate that they've fixed all the standard issues of occluded windows, weird drawing mechanisms, et cetera, that have plagued everyone else's attempts to do this on Windows. I guess we'll see now that there's a public build out.

Okay, that actually manages to be worse than what I was expecting. What the hell have they been doing for the past year?

SwissCM posted:Something I like to remind people of is that WSAD+Mouse wasn't settled on as the standard input for first person games until the late 90s, despite it being, in hindsight, kinda obvious.
Mice certainly weren't ubiquitous when W3D and Doom came out. Our household technically owned one, but it never came out of the box because GEM wasn't worth using.
I would almost look at things the opposite way; there has been almost no change in how we control first person games on PC since the days of Doom. Mouselook a year or so later was a big innovation, but it's pretty much the only one. The control mechanisms haven't improved, we've just become so used to them that they're now second nature.

Windows 3.1 certainly wasn't ubiquitous while Doom was in development; it wasn't even out for most of that time. I would point to Marathon in '94 as being the first new FPS developed in the GUI era, and that controlled pretty much identically to today's shooters - I don't consider the switch of default bindings from arrow keys to WASD as a huge innovation.
Significant changes to FPS control schemes since then have only come when they were forced to: Halo on consoles being the obvious example. And there has been no significant innovation on gamepad FPS controls since Bungie's first implementation, either; generally once you have controls for a genre that basically work no-one changes them because players are unwilling to relearn.
Eyud posted:You take that back.
Never!

Have Razer ever actually made a premium product in the past? The most I've ever seen from them is flashy but cheap stuff.

sliderule posted:e: Also, your "eyes" would be further forward than normal and I have no clue how that would gently caress with you.
Judging by our experience with VR it'd mean that turning your head gives immediate and significant nausea. Miscalculating the eye camera positions is one of those things that badly breaks the human sense of balance.
Fortunately you wouldn't necessarily need to have the eye positions be wrong. With reprojection and suitable lenses you should be able to present a "correct" view to the eyes; it would have artifacts on the edges of objects, but that should be a much less disruptive problem than presenting a completely incorrect view. Technically with large enough lenses on the cameras you could even do it without the reprojection, but that's starting to get mechanically infeasible.

SwissCM posted:If a device is capable of excellent AR then it should also be capable of excellent VR, provided it's capable of blocking photons from the outside world from hitting your retinas.
This has traditionally been a huge stumbling block in AR, and not for want of trying; high contrast obscuration with pixel-level control just hasn't been cracked yet. Of course if all you want is complete obscuration and you don't care about switch times then you can just put a blindfold on over the AR headset, so admittedly this specific use case isn't particularly hard.

AndrewP posted:is this actually ever going to happen?
e: Apparently Unreal has also had it since 4.11? I've not seen anything claiming to use it since then, which is odd.
e2: Correction: Looking at the release notes more closely, Unity 5.4 only added single-pass stereo rendering, not instanced stereo rendering, so they won't be getting the full savings yet.
NRVNQSR fucked around with this message at 20:38 on Aug 27, 2016

LLSix posted:Any word from Vive or Oculus about their next generation headsets? I've been wanting to get into VR since it came out but it's going to require a whole new PC for me. If there's something coming with higher HW requirements even six months ahead I think I'd rather wait than invest all that money (and time spent researching parts) now.
HTC Still In Planning Stage For ‘Vive 2’, so that's quite a long way off. No corresponding word from Oculus, though I would personally say that "within six months" isn't particularly plausible. Within 2017 maybe.

FuzzySlippers posted:I'm betting it has some weird results on post effects and such so it probably isn't just flipping a switch on your build. Even when Unity gets it and for UE4 games it may require some extra work for existing games to support it.
Instanced stereo rendering shouldn't require any changes to post effects; it only changes how you draw the scene in the first place, the scene output is unchanged. It does affect shaders, though; if a game's just using Unity surface shaders or Unreal's material editor then it should be fine, but any games that write their own shader code will likely need to rework it.
I mean, technically you could change the post effects to use instanced stereo rendering as well, but the savings from that would be minuscule so there's no reason to bother.

Subjunctive posted:For things like sun glare appearing/scaling as the sun becomes visible, don't you need to test per-eye? I always assumed that was done by adding geometry, but maybe it's a post effect.
If it's done as a post effect then it'll already be working per-eye so it won't need changes. You're right, though, anything in the scene that wants to do different draw calls in different eyes needs to be adjusted for instanced stereo; billboards are another classic example.
I actually don't know what the current best practice is on billboards in VR, other than "don't use them". I'd guess "do the billboard selection logic once for both, but orient each eye's billboard to face that eye", but I don't know if that's better or worse than matching their world space orientations.
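To make the two billboard options concrete, here's a rough sketch of the orientation maths only, not engine code. Everything in it is an assumption for illustration: the helper names, the 0.064 m eye separation, a head looking along -z with the eyes offset along x, and billboards that only rotate about the vertical axis.

```python
import math

def eye_positions(head_pos, ipd=0.064):
    """Left and right eye positions for a head looking along -z.

    The 0.064 m eye separation is a placeholder, not a measured value.
    """
    x, y, z = head_pos
    half = ipd / 2.0
    return (x - half, y, z), (x + half, y, z)

def facing_yaw(billboard_pos, viewer_pos):
    """Yaw (radians, about the y axis) that points the billboard's +z normal at the viewer."""
    dx = viewer_pos[0] - billboard_pos[0]
    dz = viewer_pos[2] - billboard_pos[2]
    return math.atan2(dx, dz)

def billboard_yaws(billboard_pos, head_pos, per_eye=True):
    """Return (left_yaw, right_yaw) for one billboard.

    per_eye=True orients the billboard toward each eye separately;
    per_eye=False uses one shared world space orientation facing the head centre.
    """
    left_eye, right_eye = eye_positions(head_pos)
    if per_eye:
        return facing_yaw(billboard_pos, left_eye), facing_yaw(billboard_pos, right_eye)
    shared = facing_yaw(billboard_pos, head_pos)
    return shared, shared

# Billboard two metres in front of the viewer
print(billboard_yaws((0.0, 0.0, -2.0), (0.0, 0.0, 0.0), per_eye=True))
print(billboard_yaws((0.0, 0.0, -2.0), (0.0, 0.0, 0.0), per_eye=False))
```

The per-eye yaws differ by a small angle that shrinks with distance, which is the gap between the two approaches in question; this doesn't say which one looks better in a headset.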

CapnBry posted:Single pass stereo rendering seems like it would be a godsend for VR, double the perf right?! But really GPUs these days have gotten really good at processing the ole fixed function pipeline so pushing the same geometry and textures twice with a different camera doesn't hurt as much as you'd expect. For (waggles hand) somewhat complex geometry scenes you're looking at _maybe_ 20% perf boost.
CapnBry posted:As Alex Vlachos has explained, the problem is the number of pixels especially because so many post processing effects are rendered at the pixel level (in image space). A 1080p monitor at 60Hz is shading 124M pixels/sec, the vive is shading 457M pixels/sec so the real gains come from reducing the number of shaded pixels. Fixed FOVeated Rendering, Lens-Matched Shading, Multi-resolution Shading, and Radial Density Masking all are techniques that reduce the number of pixels to compute
I'm still a little skeptical of the value of density masking on high-end cards, mind you. I know Valve reported ~10% wins from it, but it's inevitably going to be very hardware dependent; I'd be interested to see tables of results from it on different GPUs, particularly AMD ones.
CapnBry posted:The point is that you won't see these coming into AAA games for at least another year
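The pixel-rate figures quoted above can be reproduced with some quick arithmetic. In this sketch the 1512x1680 per-eye render target at 90Hz is my assumption about what sits behind the 457M figure (the Vive's 1080x1200 per-eye panel scaled by 1.4 to cover lens distortion), and `pixels_per_second` is just an illustrative helper:

```python
def pixels_per_second(width, height, refresh_hz, views=1):
    """Pixels shaded per second for a render target drawn `views` times per frame."""
    return width * height * refresh_hz * views

# Ordinary 1080p monitor at 60Hz
monitor = pixels_per_second(1920, 1080, 60)

# Assumed Vive render target: 1512x1680 per eye, two eyes, 90Hz
vive = pixels_per_second(1512, 1680, 90, views=2)

print(f"1080p60 monitor: {monitor / 1e6:.0f}M pixels/sec")
print(f"Vive: {vive / 1e6:.0f}M pixels/sec")
print(f"ratio: {vive / monitor:.2f}x")
```

Under those assumptions the headset is shading somewhere between three and four times as many pixels as the monitor, which is why techniques that cut the shaded pixel count matter more than halving the geometry work.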

Hadlock posted:Is there a graph for declining return on resolution works for ROR? Like obviously 1.15x is better than 1.0x and 1.5x is even better, but 10.0x is probably not appreciably sharper than 2.5x, I am guessing.
I don't believe so, because even if you take VR out of the equation it's not clear what you would measure. The logic that goes into graphs like that one is pretty dubious in the first place; they're measuring the distance at which the eye can no longer distinguish pixels, which is reasonable for movies but it's not the whole story for video games. Games would technically need to be running supersampled at an enormous resolution to achieve the same visual acuity as film at the same distance.
You're right that there are going to be diminishing returns on perceived quality as supersampling increases, but since there's no way to accurately measure perceived quality there's no way to draw a graph of it. Intuitively we know that it's approximately a logarithmic curve with small peaks around the integer multipliers (1x, 2x, 3x etc), but that's about the limit of what can be said.

So this is mildly interesting: Qualcomm are demonstrating a reference device for standalone VR that they believe could cost $400-$500. Boasts dual external cameras for inside-out tracking, dual internal cameras for eye-tracking, and 1440p screens. It's running on a Snapdragon and the screens are only 70Hz, though, so we're definitely talking "better GearVR" rather than desktop VR competitor.
My gut feeling is that it won't really go anywhere - the refresh rate is too low for eye-tracked foveated rendering to be effective and standalone VR is always going to be much less attractive to consumers than smartphone VR. Still, as the article notes there's a long history of Chinese manufacturers turning these Qualcomm reference devices into ultra-cheap market products, so we might start to see a lot of devices based on this model in the next year or so.
NRVNQSR fucked around with this message at 14:26 on Sep 2, 2016

If you define depth as "things you can't do with motion controls", then sure, you're not going to get depth with motion controls. That doesn't mean you can't have deep and complex game mechanics with interesting relationships between them and an expressive vocabulary of player input.
Motion controls absolutely have significant limitations. They will reduce the speed and responsiveness of a game, and they mean that the player has certain built-in assumptions about the effects of certain control inputs. These are both limitations that developers can work around, though, and even where that's not possible there are buttons on the controllers so you can use hybrid control schemes.
In the past there hasn't been much impetus for developers to find solutions to the difficulties of motion controls. The lack of precision in the controllers - and in most cases few or zero buttons - meant that it wasn't worth putting in the effort for the small market size available. If VR doesn't take off then perhaps it still won't be, but assuming it does then I'm sure you'll get the sort of action games you're looking for; you just might be playing Spiderman, or Iron Man, or a flying wizard rather than Wolverine or Geralt.

There might be an issue with GPU output bandwidth? If it can only afford three output streams that'll limit the number of displays you can connect, Direct mode or no.

Basic Chunnel posted:But seriously, the only obvious internal VR use that I can envision is telecommunication, eg creating those virtual corporate star chambers that cyberpunk fiction always features. Everything else I think it would depend on the scale of public adoption and its use as something beyond novelty - that is, will consumers not think it strange or laborious to move through a virtual grocery aisle to make their selections in Freshdirect? Or will it be something like we saw with Second Life, where companies build virtual offices that no one visits. I'd see augmented reality as the clearer / cheaper / more practical alternative in reaching consumers.
I've talked to a lot of people looking at using VR in enterprise; primarily for engineering or medical purposes, but some for communications - both social and corporate. I think the handful of developers ITT are all in games, though.
e: If you've seen the kind of bizarre technology people already throw at teleconferencing you'll know that VR is pretty tame and sensible by comparison.
NRVNQSR fucked around with this message at 17:49 on Sep 10, 2016

Zero VGS posted:I think by "the VR" he means the PSVR.
Oh, you mean the Nintendo! Yeah, it's pretty good.
ljw1004 posted:I'd love to know if that's a real problem. Does the loop really prevent you from getting on your hands and knees and crawling through ducts?
From what I've seen I definitely wouldn't want to put any significant weight on the loops.

People have been working on backwards compatibility layers for older SDKs; last I checked they didn't quite go back far enough to run Alien: Isolation, but that was a couple of months ago and it was still in active development, so maybe?
